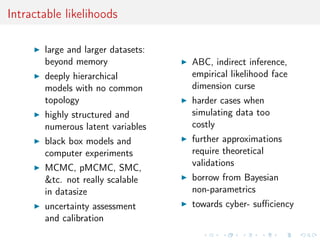

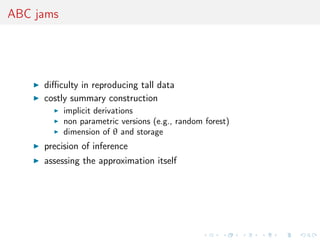

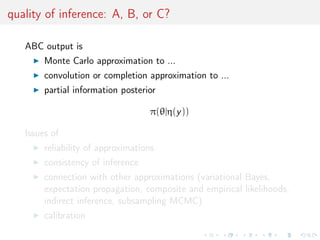

This document discusses challenges and recent advances in Approximate Bayesian Computation (ABC) methods, which are used when the likelihood function is intractable or unavailable in closed form. The core ABC algorithm simulates parameters from the prior and then data from the model given those parameters, retaining only the simulations whose summary statistics are close to those of the observed data under a chosen distance measure. The document outlines key issues such as scalability to large datasets, assessment of uncertainty, and model choice. It also discusses advances such as modified proposal distributions, nonparametric conditional density estimation, changes of inferential perspective, and treating the construction of summary statistics as a model choice problem. Validating ABC model choice and selecting summary statistics remain open challenges.
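The retention rule described in this summary can be sketched in a few lines of Python. This is a minimal illustration under assumptions that are not in the slides: a toy model with Normal(θ, 1) observations, a Uniform(-5, 5) prior, the sample mean as summary statistic, absolute difference as distance, and a hypothetical tolerance `eps`.

```python
import random
from statistics import fmean

def abc_rejection(y, summary, eps, n_accept, rng):
    """Tolerance-based ABC rejection sampler for a toy normal-mean model.

    Assumptions (illustrative only): theta ~ Uniform(-5, 5),
    data | theta ~ Normal(theta, 1), distance = |summary(z) - summary(y)|.
    """
    s_obs = summary(y)
    accepted = []
    while len(accepted) < n_accept:
        theta = rng.uniform(-5.0, 5.0)                       # draw from the prior
        z = [rng.gauss(theta, 1.0) for _ in range(len(y))]   # simulate pseudo-data
        if abs(summary(z) - s_obs) <= eps:                   # keep if summaries are close
            accepted.append(theta)
    return accepted

rng = random.Random(1)
y_obs = [rng.gauss(1.0, 1.0) for _ in range(20)]  # synthetic "observed" data
draws = abc_rejection(y_obs, fmean, eps=0.1, n_accept=500, rng=rng)
abc_mean = fmean(draws)  # ABC estimate of the posterior mean of theta
```

A smaller `eps` brings the accepted draws closer to the exact posterior given the summary, at the price of a lower acceptance rate.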

![ABC methods

Bayesian setting: target is π(θ)f (x|θ)

When likelihood f (x|θ) not in closed form, likelihood-free rejection

technique:

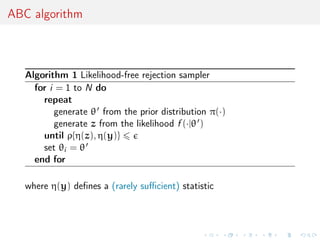

ABC algorithm

For an observation y ∼ f (y|θ), under the prior π(θ), keep jointly

simulating

θ ∼ π(θ) , z ∼ f (z|θ ) ,

until the auxiliary variable z is equal to the observed value, z = y.

[Tavaré et al., 1997]](https://image.slidesharecdn.com/ati-151113112906-lva1-app6891/85/Intractable-likelihoods-2-320.jpg)
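The exact-match rejection scheme on this slide is only practical when the data are discrete, so the sketch below uses a toy binomial model. The model, the uniform prior, and the function name `abc_exact_rejection` are illustrative assumptions, not taken from the slides.

```python
import random

def abc_exact_rejection(y, n_trials, n_samples, rng):
    """Likelihood-free rejection sampling with exact matching z == y.

    Assumed toy model: theta ~ Uniform(0, 1) prior,
    z | theta ~ Binomial(n_trials, theta).
    """
    accepted = []
    while len(accepted) < n_samples:
        theta = rng.random()                                     # theta ~ pi(theta)
        z = sum(rng.random() < theta for _ in range(n_trials))   # z ~ f(z | theta)
        if z == y:                                               # keep exact matches only
            accepted.append(theta)
    return accepted

rng = random.Random(0)
samples = abc_exact_rejection(y=7, n_trials=10, n_samples=2000, rng=rng)
posterior_mean = sum(samples) / len(samples)
```

In this toy case the exact posterior is Beta(8, 4), with mean 2/3, so `posterior_mean` should land close to that value. No likelihood is ever evaluated; only simulation from the model is needed.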

![connections with Econometrics

Similar exploration of simulation-based techniques in Econometrics

Simulated method of moments

Method of simulated moments

Simulated pseudo-maximum-likelihood

Indirect inference

[Gouriéroux & Monfort, 1996]](https://image.slidesharecdn.com/ati-151113112906-lva1-app6891/85/Intractable-likelihoods-6-320.jpg)

![ABC advances

Simulating from the prior is often poor in efficiency:

modify the proposal distribution on θ to increase the density of x’s within the vicinity of y

[Marjoram et al., 2003; Bortot et al., 2007; Beaumont et al., 2007]

borrow from non-parametrics for conditional density estimation and allow for a larger tolerance ε

[Blum & François, 2010; Fearnhead & Prangle, 2012]

change inferential perspective by including ε in the inferential framework

[Ratmann et al., 2009; Del Moral et al., 2010]

incorporate summary construction as model choice

[Joyce & Marjoram, 2008; Pudlo & Sedki, 2012]](https://image.slidesharecdn.com/ati-151113112906-lva1-app6891/85/Intractable-likelihoods-7-320.jpg)
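One way to modify the proposal on θ is to replace independent prior draws with a random-walk Markov chain, in the spirit of the ABC-MCMC approach of Marjoram et al. (2003). The sketch below is an illustration, not the authors' implementation. The toy normal-mean model, the Uniform(-5, 5) prior, the sample-mean summary, the tolerance `eps`, the step size, and the starting point are all assumptions.

```python
import random
from statistics import fmean

def abc_mcmc(y, eps, n_iter, step, rng):
    """Random-walk ABC-MCMC for a toy normal-mean model.

    Assumptions (illustrative only): flat prior on (-5, 5),
    data | theta ~ Normal(theta, 1), summary = sample mean.
    With a flat prior and a symmetric proposal, the Metropolis-Hastings
    ratio equals 1 inside the prior support, so a proposal is accepted
    exactly when its simulated summary falls within eps of the observed one.
    """
    s_obs = fmean(y)
    theta = s_obs          # convenient starting value (assumption)
    chain = []
    for _ in range(n_iter):
        prop = theta + rng.gauss(0.0, step)                     # symmetric proposal
        if -5.0 <= prop <= 5.0:                                 # inside prior support
            z = [rng.gauss(prop, 1.0) for _ in range(len(y))]   # simulate pseudo-data
            if abs(fmean(z) - s_obs) <= eps:                    # ABC acceptance check
                theta = prop
        chain.append(theta)                                     # repeat current value on rejection
    return chain

rng = random.Random(2)
y_obs = [rng.gauss(1.0, 1.0) for _ in range(20)]
chain = abc_mcmc(y_obs, eps=0.1, n_iter=20000, step=0.5, rng=rng)
chain_mean = fmean(chain)
```

Because proposals stay near values that already produced a match, far fewer simulations are wasted than when sampling from a diffuse prior. The cost is autocorrelated draws.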

![validation of ABC model choice

Amounts to solving:

When is a Bayes factor based on an insufficient statistic η(y) consistent?

Answer: the asymptotic behaviour of the Bayes factor is driven by the asymptotic mean value of the summary statistic under both models

[Marin et al., 2015]](https://image.slidesharecdn.com/ati-151113112906-lva1-app6891/85/Intractable-likelihoods-14-320.jpg)
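The role of the summary statistic's mean under each model can be illustrated with a small ABC model choice experiment. Everything here is an assumption made for the example: two toy models that differ only in their known standard deviation (1 versus 2), equal prior model weights, a Uniform(-5, 5) prior on the location, and the tolerance `eps`. The sample mean has the same range of expected values under both models, whereas the sample standard deviation does not.

```python
import random
from statistics import fmean, stdev

def abc_model_choice(y, summary, eps, n_prop, rng):
    """Estimate P(model 1 | summary(y)) by ABC rejection.

    Assumed toy models: M1 is Normal(theta, 1), M2 is Normal(theta, 2),
    each with prior probability 1/2 and theta ~ Uniform(-5, 5).
    """
    s_obs = summary(y)
    counts = {1: 0, 2: 0}
    for _ in range(n_prop):
        m = rng.choice((1, 2))                               # model index from its prior
        theta = rng.uniform(-5.0, 5.0)                       # parameter from its prior
        sigma = 1.0 if m == 1 else 2.0
        z = [rng.gauss(theta, sigma) for _ in range(len(y))]
        if abs(summary(z) - s_obs) <= eps:                   # keep close simulations
            counts[m] += 1
    total = counts[1] + counts[2]
    return counts[1] / total if total else float("nan")

rng = random.Random(3)
y_obs = [rng.gauss(0.0, 1.0) for _ in range(50)]             # data generated from M1
p_m1_mean = abc_model_choice(y_obs, fmean, eps=0.2, n_prop=5000, rng=rng)
p_m1_sd = abc_model_choice(y_obs, stdev, eps=0.2, n_prop=5000, rng=rng)
```

In this setup `p_m1_sd` should be close to 1, while `p_m1_mean` should hover around the prior weight of 1/2. This reflects the fact that the sample mean carries essentially no information for discriminating between these two models.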

![validation of ABC model choice

Amounts to solving:

When is a Bayes factor based on an insufficient statistic η(y) consistent?

This does not solve the issue of selecting the summary statistic in realistic settings

[Pudlo et al., 2015]](https://image.slidesharecdn.com/ati-151113112906-lva1-app6891/85/Intractable-likelihoods-15-320.jpg)