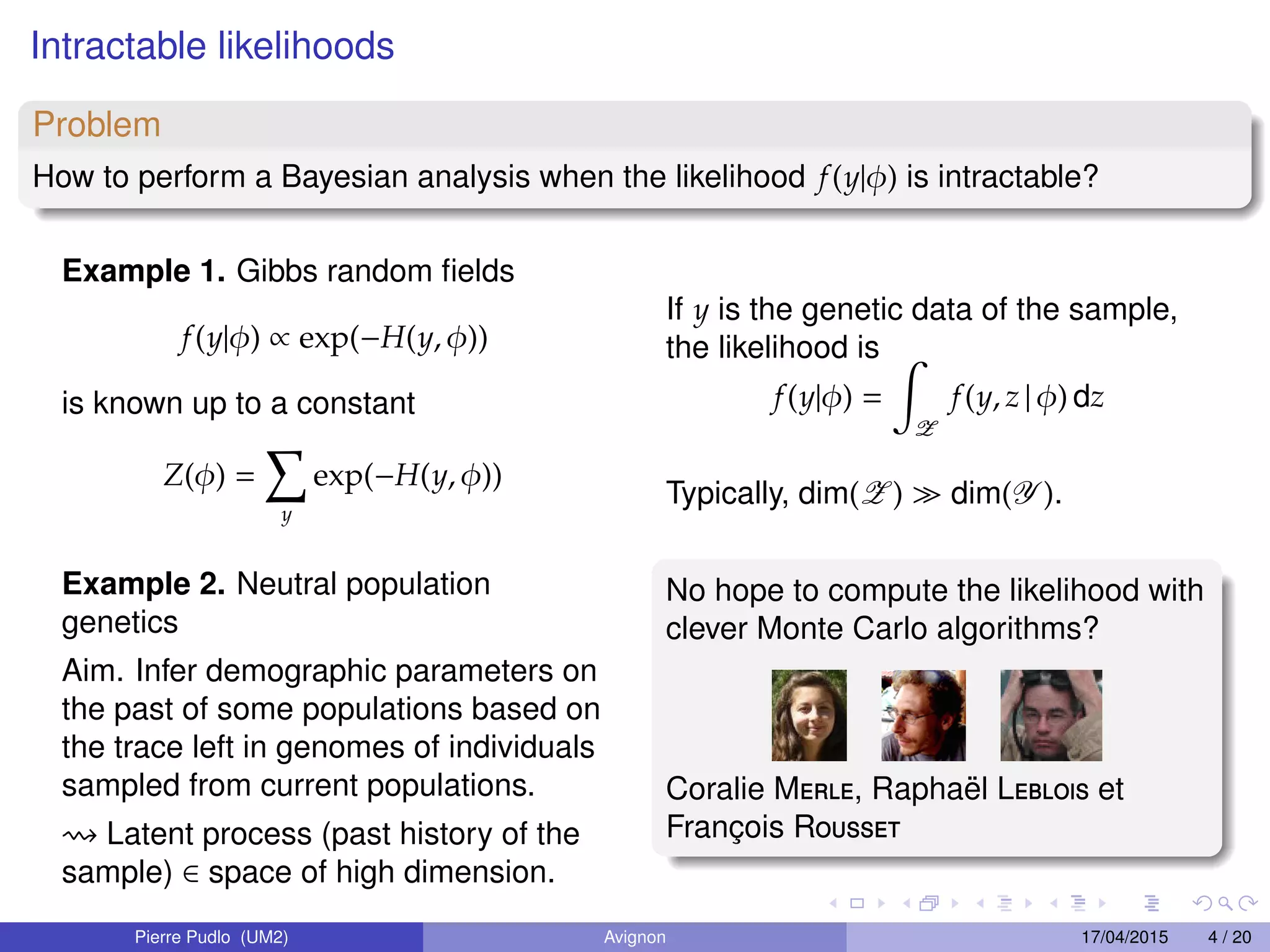

1. The document discusses likelihood-free methods in computational statistics for Bayesian inference when the likelihood function is intractable. It covers approximate Bayesian computation (ABC), ABC model choice, and Bayesian computation using empirical likelihood.

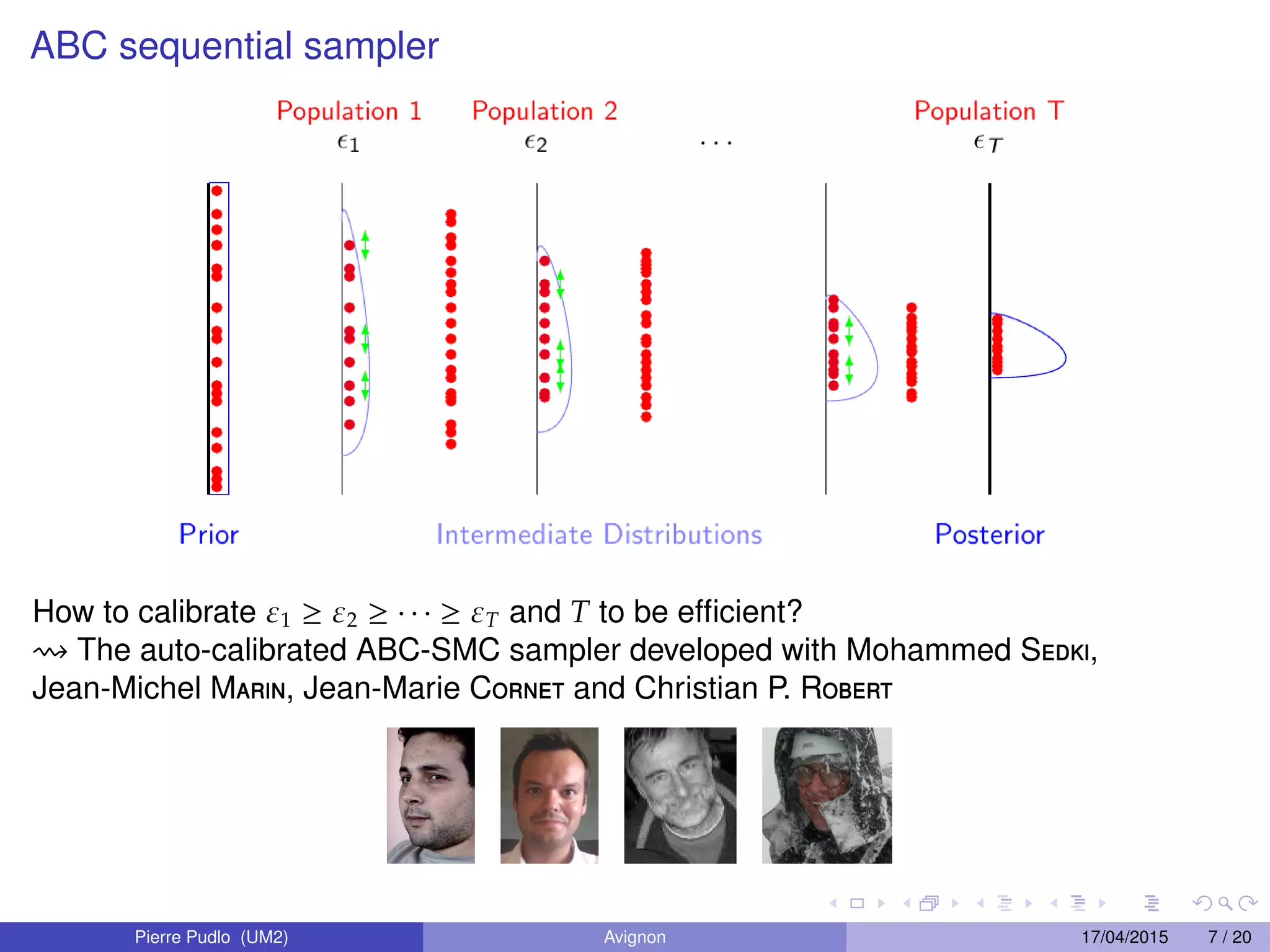

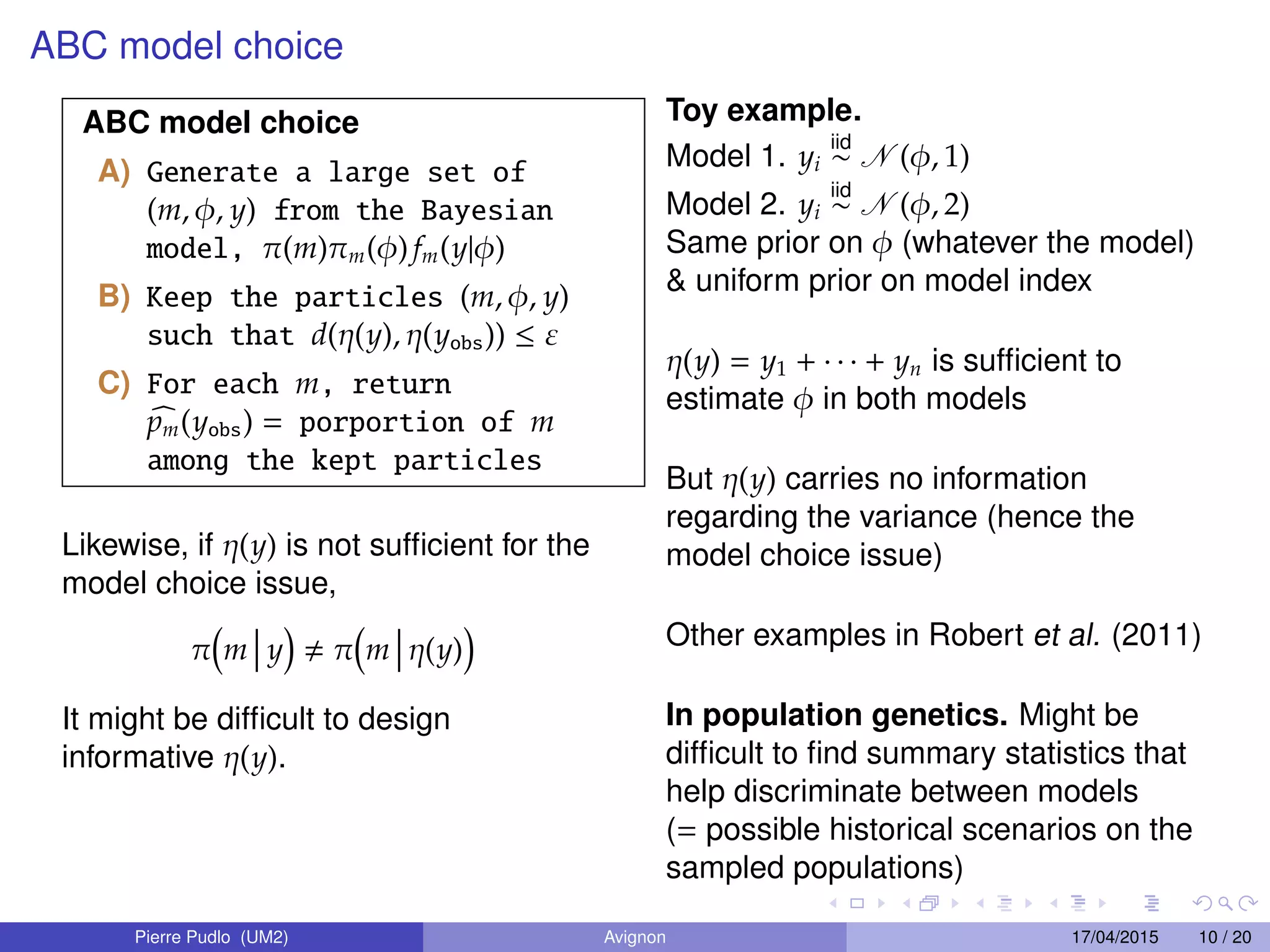

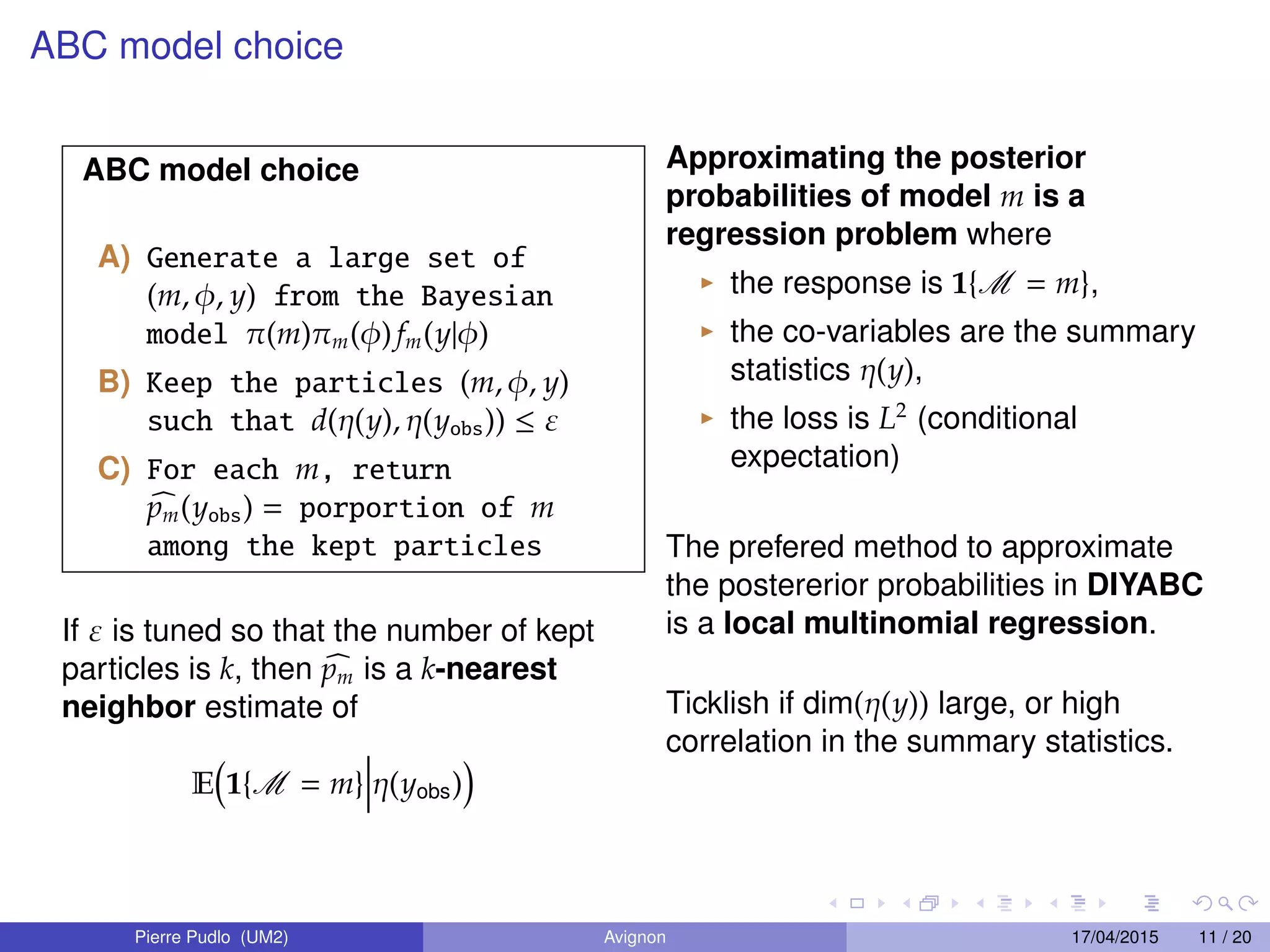

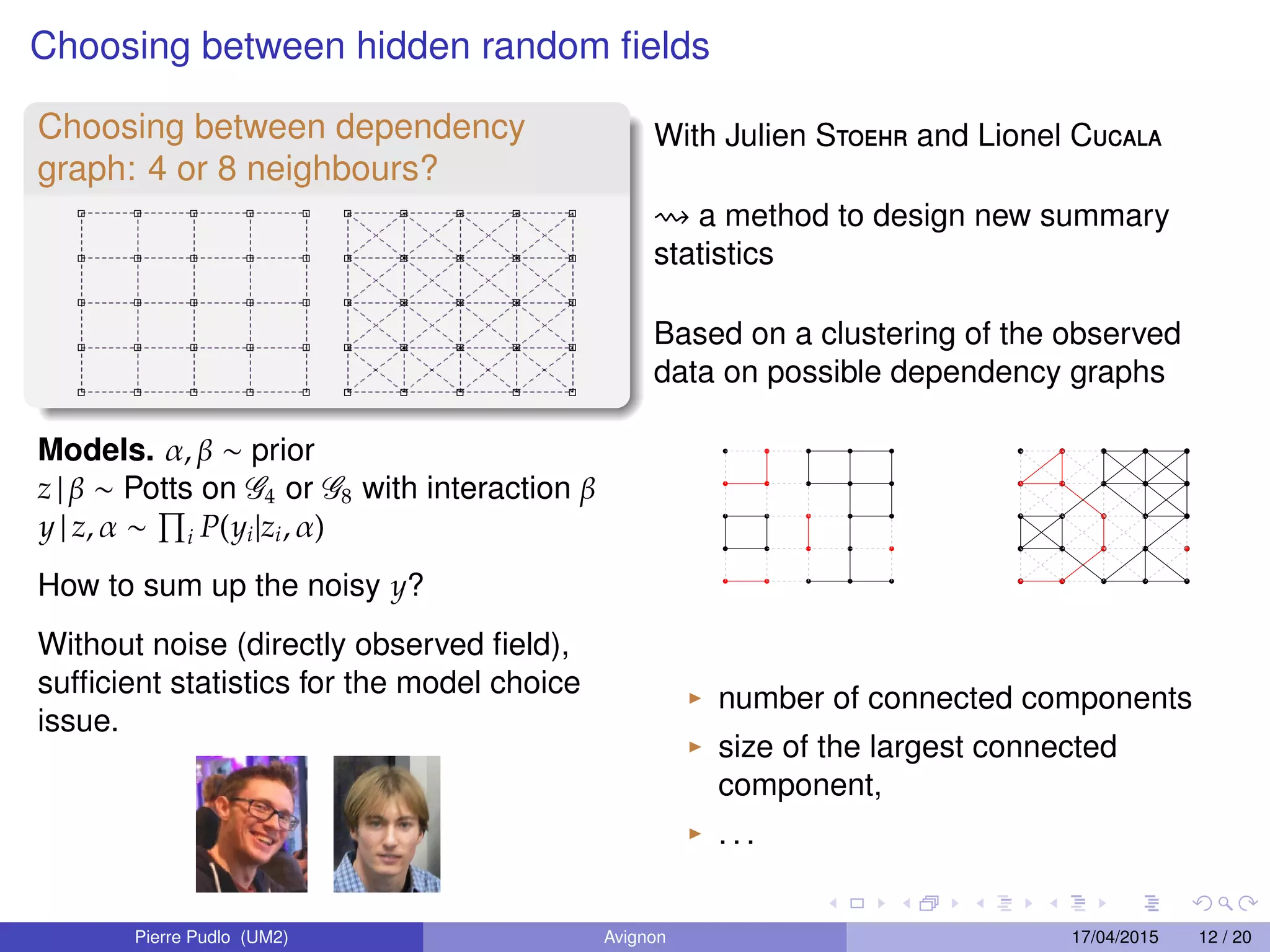

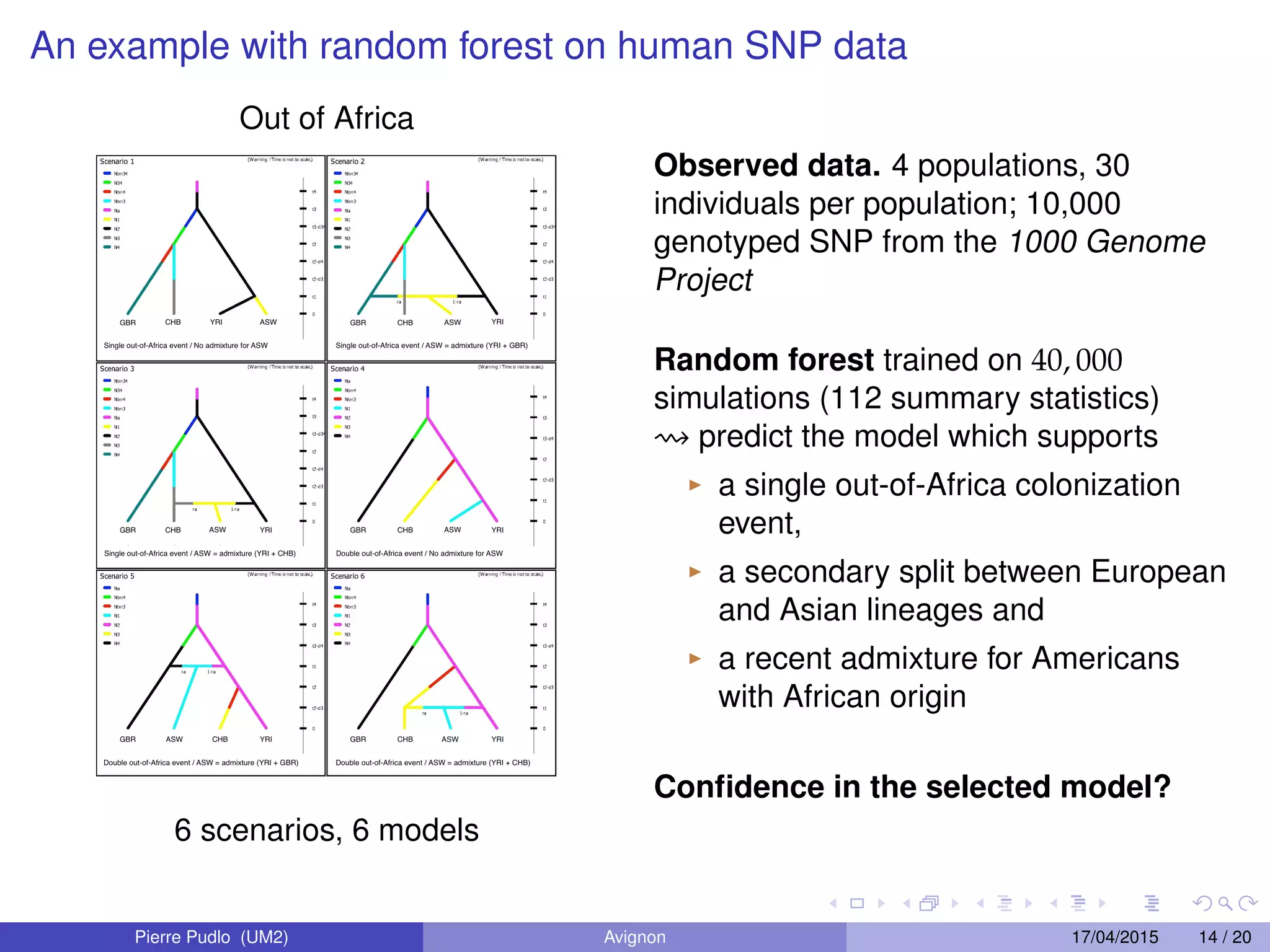

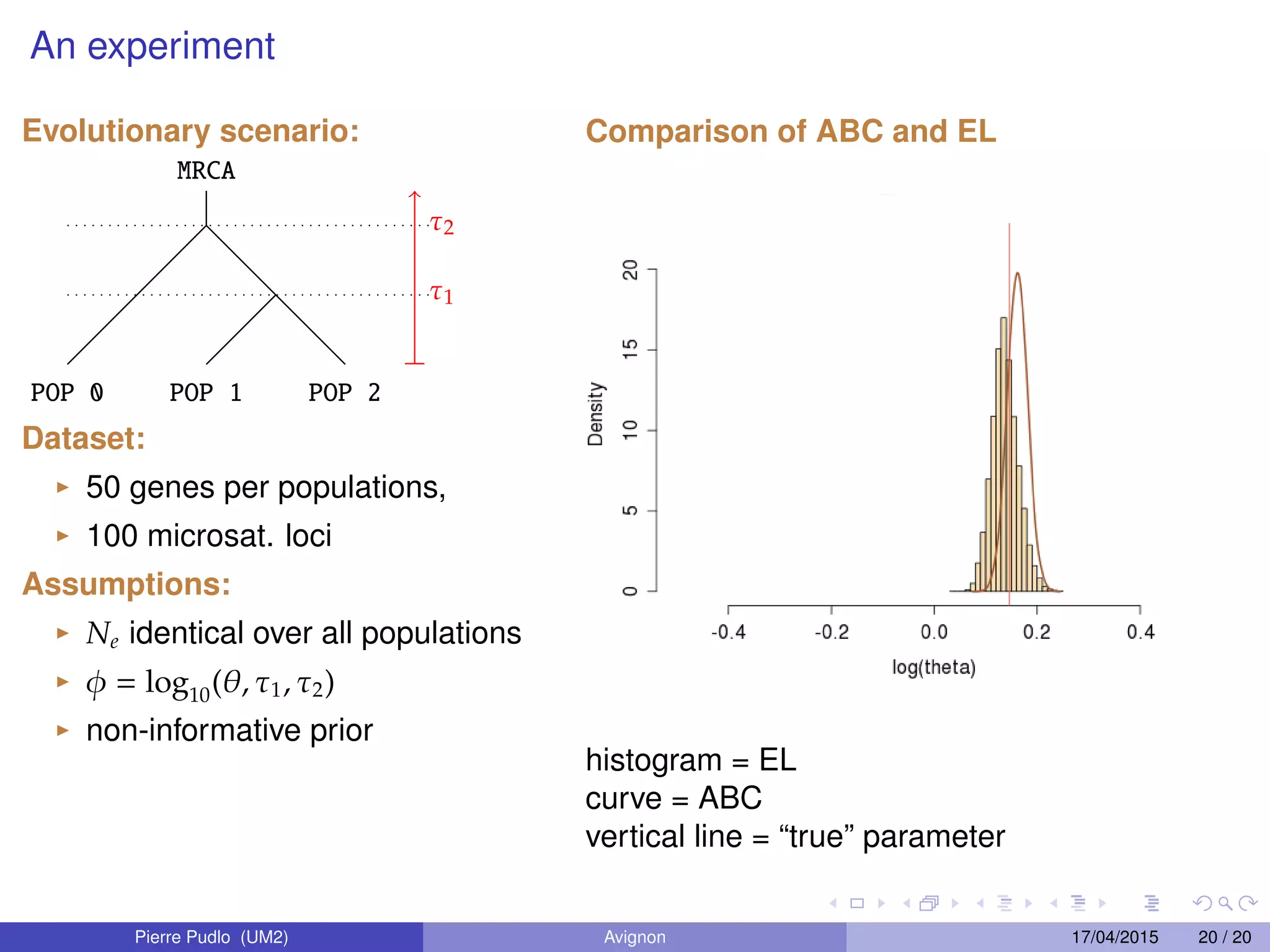

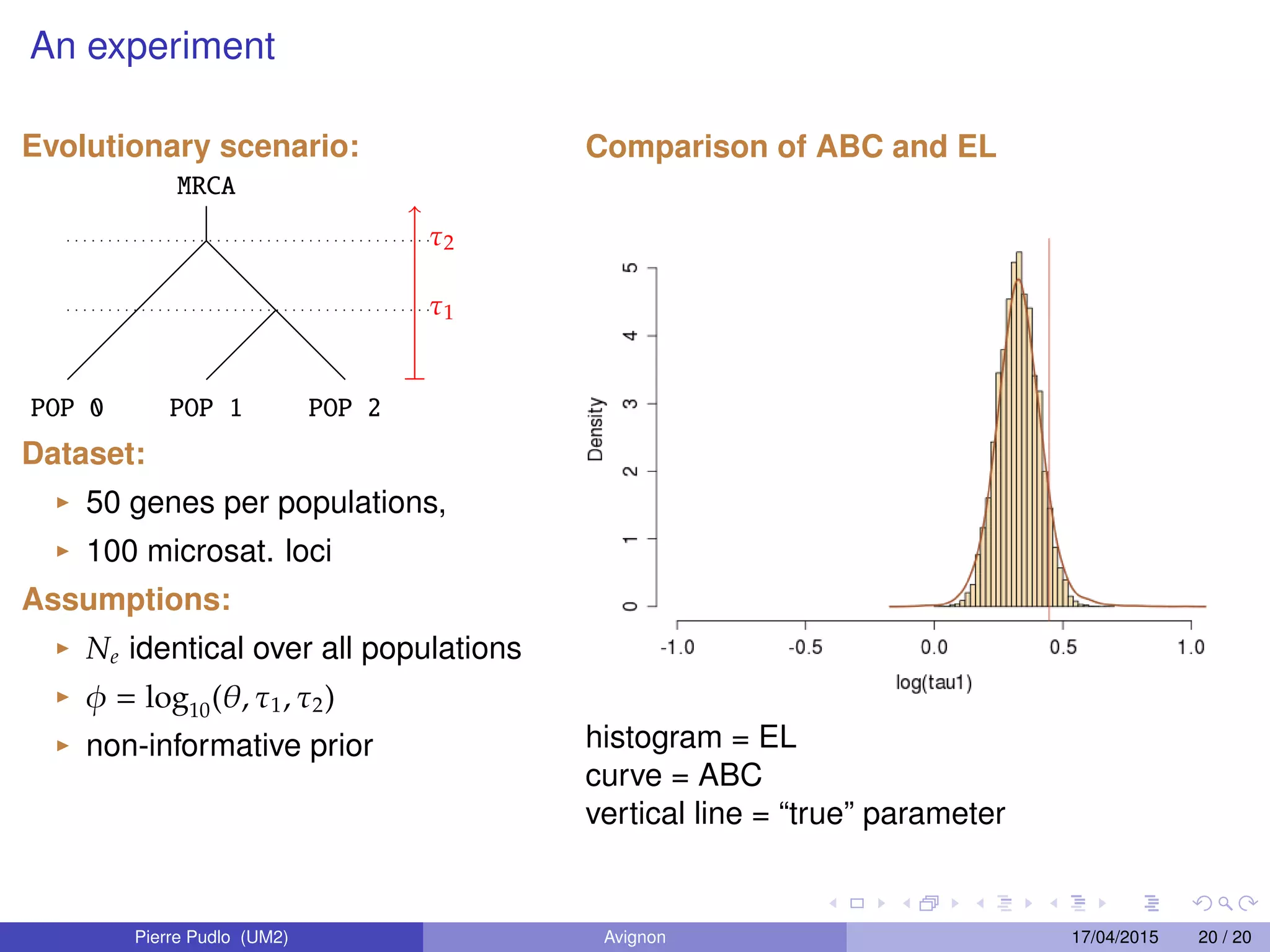

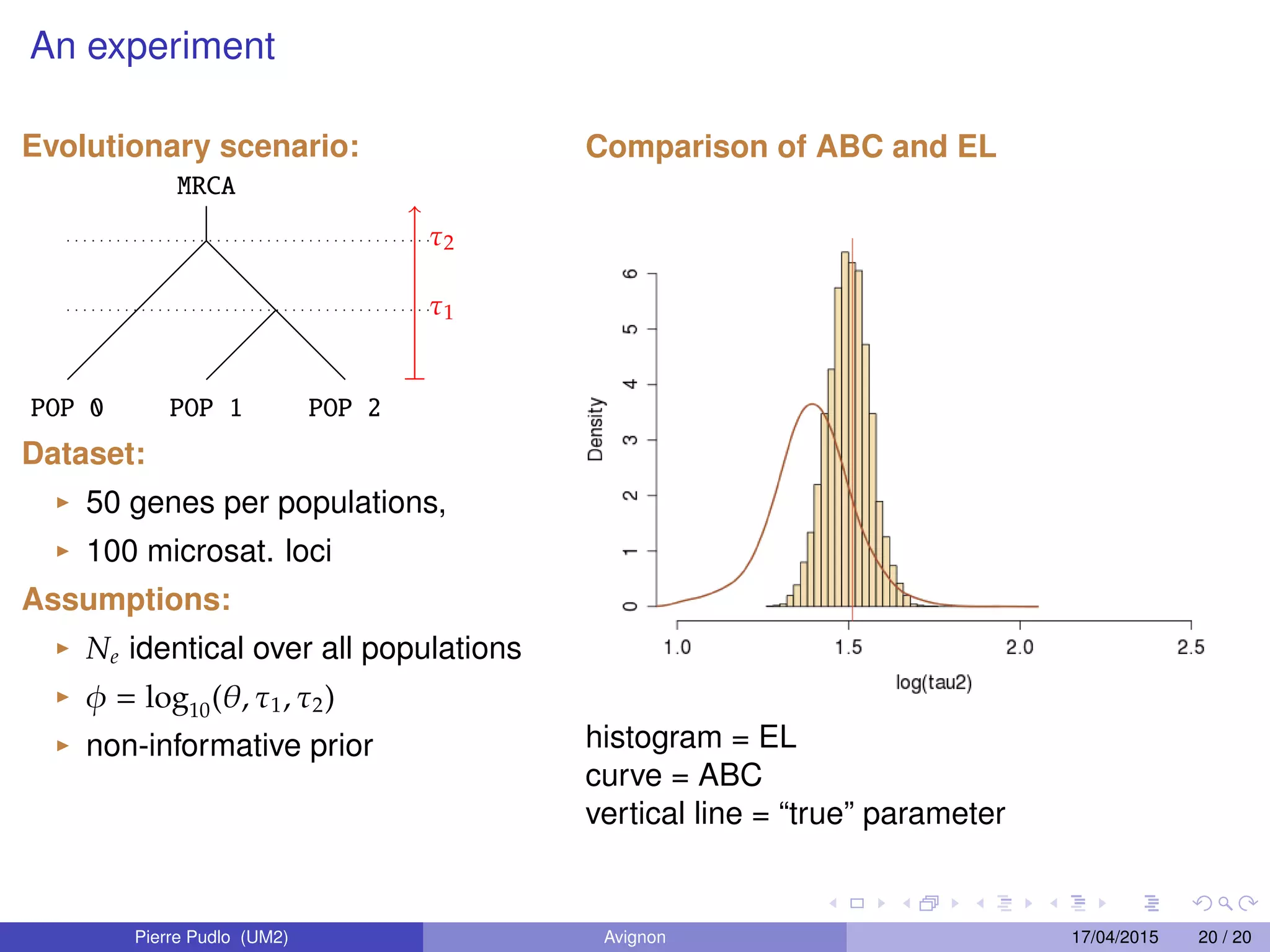

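2. ABC approximates the posterior distribution by simulating data under parameter values drawn from the prior and retaining the draws whose simulated data most closely match the observed data, usually compared through summary statistics. ABC model choice extends this to model selection problems by treating the model index as an additional unknown to be simulated.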
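The accept/reject idea behind ABC can be illustrated with a minimal sketch. Everything specific here is an assumption chosen for illustration and does not come from the slides: a Gaussian model with known unit variance, a uniform prior on the mean, the sample mean as the summary statistic, and a rule that keeps the closest 1% of simulations. The function name `abc_rejection` and its parameters are hypothetical.

```python
import random
import statistics


def abc_rejection(observed, prior_sampler, simulator, summary,
                  n_sims, keep_fraction, rng):
    """Basic ABC rejection: keep the prior draws whose simulated
    summary statistic lies closest to the observed one."""
    s_obs = summary(observed)
    draws = []
    for _ in range(n_sims):
        theta = prior_sampler(rng)                    # draw a parameter from the prior
        fake = simulator(theta, len(observed), rng)   # simulate a data set under theta
        draws.append((abs(summary(fake) - s_obs), theta))
    draws.sort(key=lambda pair: pair[0])              # smallest distance first
    n_keep = max(1, int(keep_fraction * n_sims))
    return [theta for _, theta in draws[:n_keep]]


# Toy setup (assumed for illustration): Gaussian data with unknown mean.
rng = random.Random(0)
observed = [rng.gauss(2.0, 1.0) for _ in range(50)]

accepted = abc_rejection(
    observed,
    prior_sampler=lambda r: r.uniform(-10.0, 10.0),
    simulator=lambda mu, n, r: [r.gauss(mu, 1.0) for _ in range(n)],
    summary=statistics.fmean,
    n_sims=5000,
    keep_fraction=0.01,
    rng=rng,
)
posterior_mean = statistics.fmean(accepted)
print(f"ABC posterior mean estimate: {posterior_mean:.3f}")
```

The retained values in `accepted` act as an approximate posterior sample. Shrinking `keep_fraction` tightens the match to the observed data at the cost of fewer retained draws, which is the usual tolerance trade-off in ABC.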

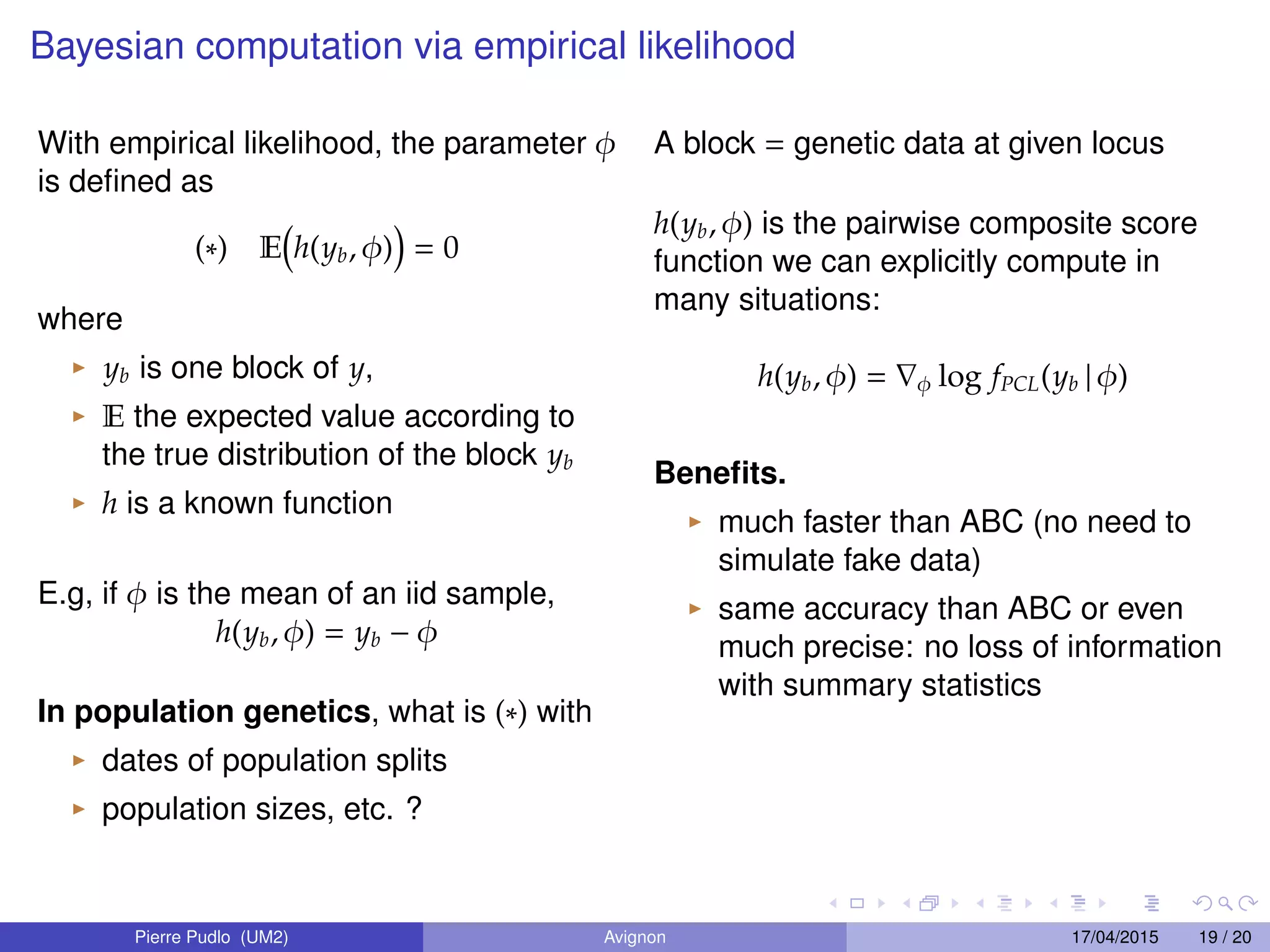

3. Empirical likelihood provides an alternative to ABC by reconstructing a likelihood function from independent blocks of data, allowing faster Bayesian inference and avoiding the information loss that comes from reducing the data to summary statistics.
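As a rough illustration of how an empirical likelihood can stand in for an intractable one, the sketch below computes the empirical likelihood ratio for a single mean constraint and uses it to weight draws from the prior. The constraint, the uniform prior, the toy data, and the names `log_el_ratio_mean` and `weighted_mean` are all assumptions made for this example; the slides do not specify which constraints or blocks are used.

```python
import math
import random


def log_el_ratio_mean(data, mu, iters=100):
    """Log empirical likelihood ratio for the constraint E[X] = mu.

    The optimal weights are p_i = 1 / (n * (1 + lam * z_i)) with
    z_i = x_i - mu, where lam solves sum(z_i / (1 + lam * z_i)) = 0.
    """
    z = [x - mu for x in data]
    z_min, z_max = min(z), max(z)
    if z_min >= 0.0 or z_max <= 0.0:
        return -math.inf              # mu outside the data range: likelihood is zero
    # lam must keep every 1 + lam * z_i positive
    lo, hi = -1.0 / z_max, -1.0 / z_min
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mid == lo or mid == hi:    # interval cannot be split any further
            break
        g = sum(zi / (1.0 + mid * zi) for zi in z)
        if g > 0.0:                   # g is decreasing in lam, so the root lies to the right
            lo = mid
        else:
            hi = mid
    lam = 0.5 * (lo + hi)
    terms = [1.0 + lam * zi for zi in z]
    if min(terms) <= 0.0:
        return -math.inf
    return -sum(math.log(t) for t in terms)


# Toy setup (assumed for illustration): weight prior draws by empirical likelihood.
rng = random.Random(1)
data = [rng.gauss(2.0, 1.0) for _ in range(40)]
prior_draws = [rng.uniform(-10.0, 10.0) for _ in range(2000)]
weights = [math.exp(log_el_ratio_mean(data, mu)) for mu in prior_draws]
weighted_mean = sum(w * mu for w, mu in zip(weights, prior_draws)) / sum(weights)
print(f"Weighted posterior mean estimate: {weighted_mean:.3f}")
```

No data are simulated from the model here: each prior draw is scored directly against the observed data, which is where the speed advantage over ABC comes from in this kind of scheme.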