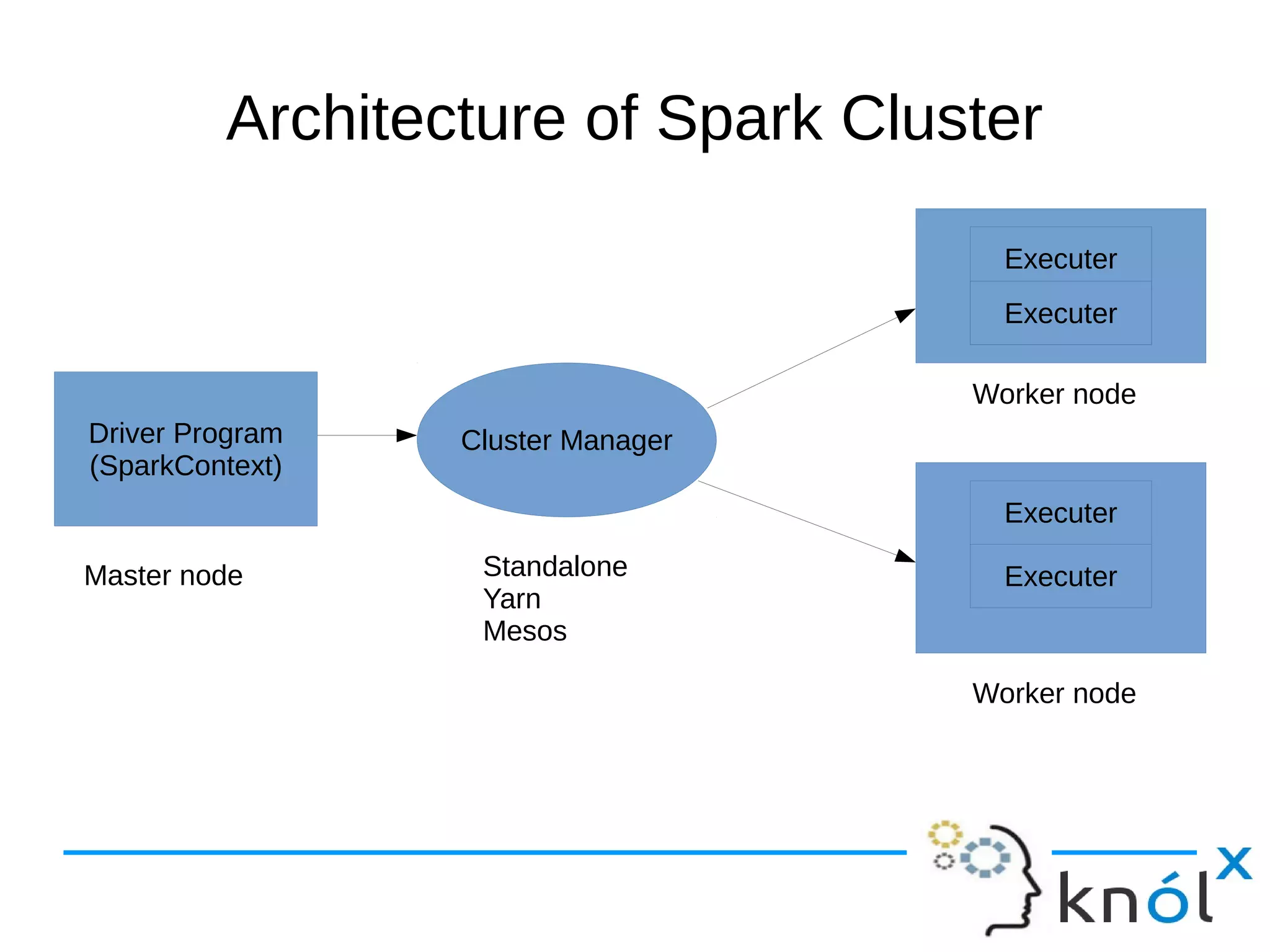

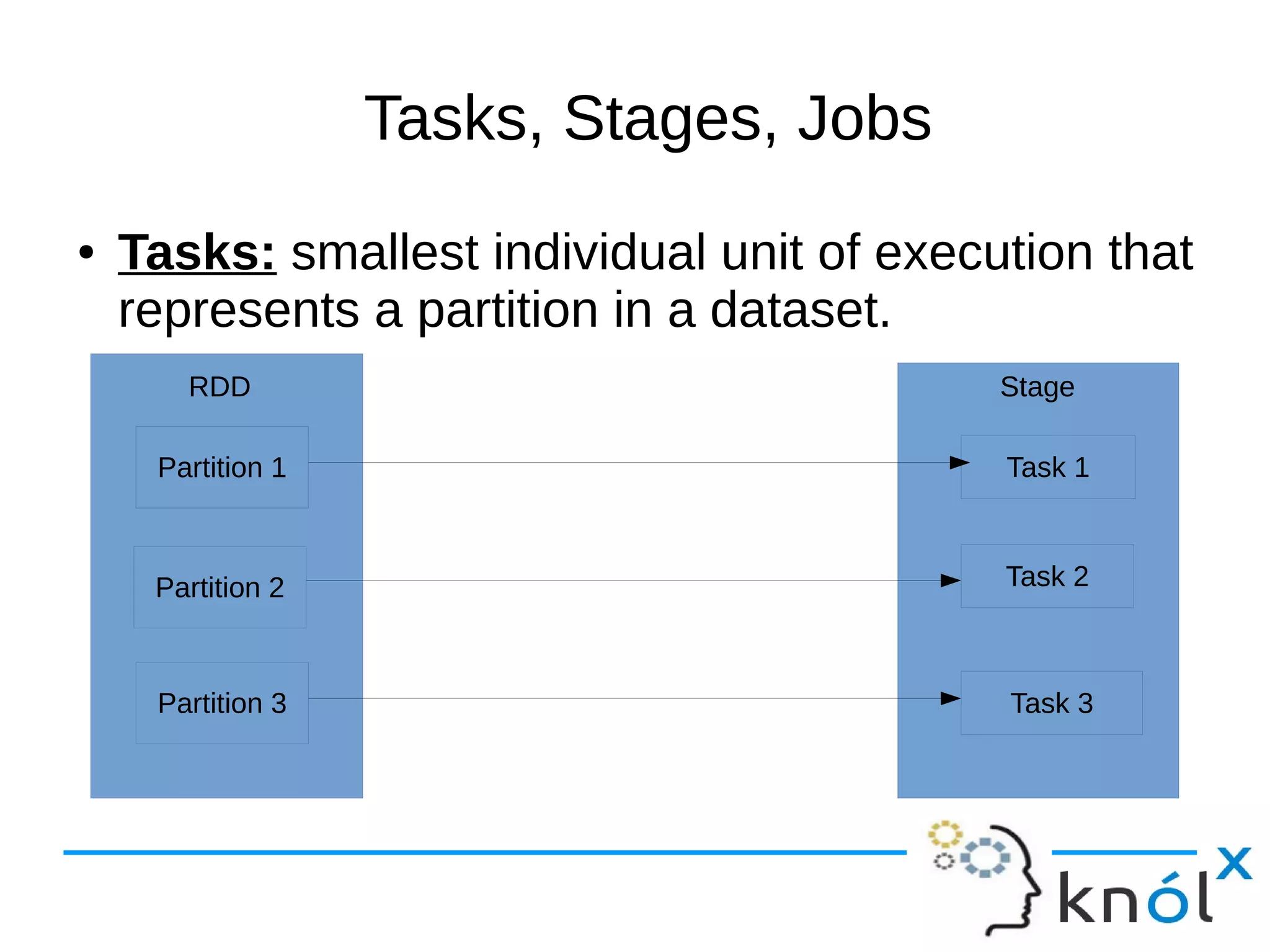

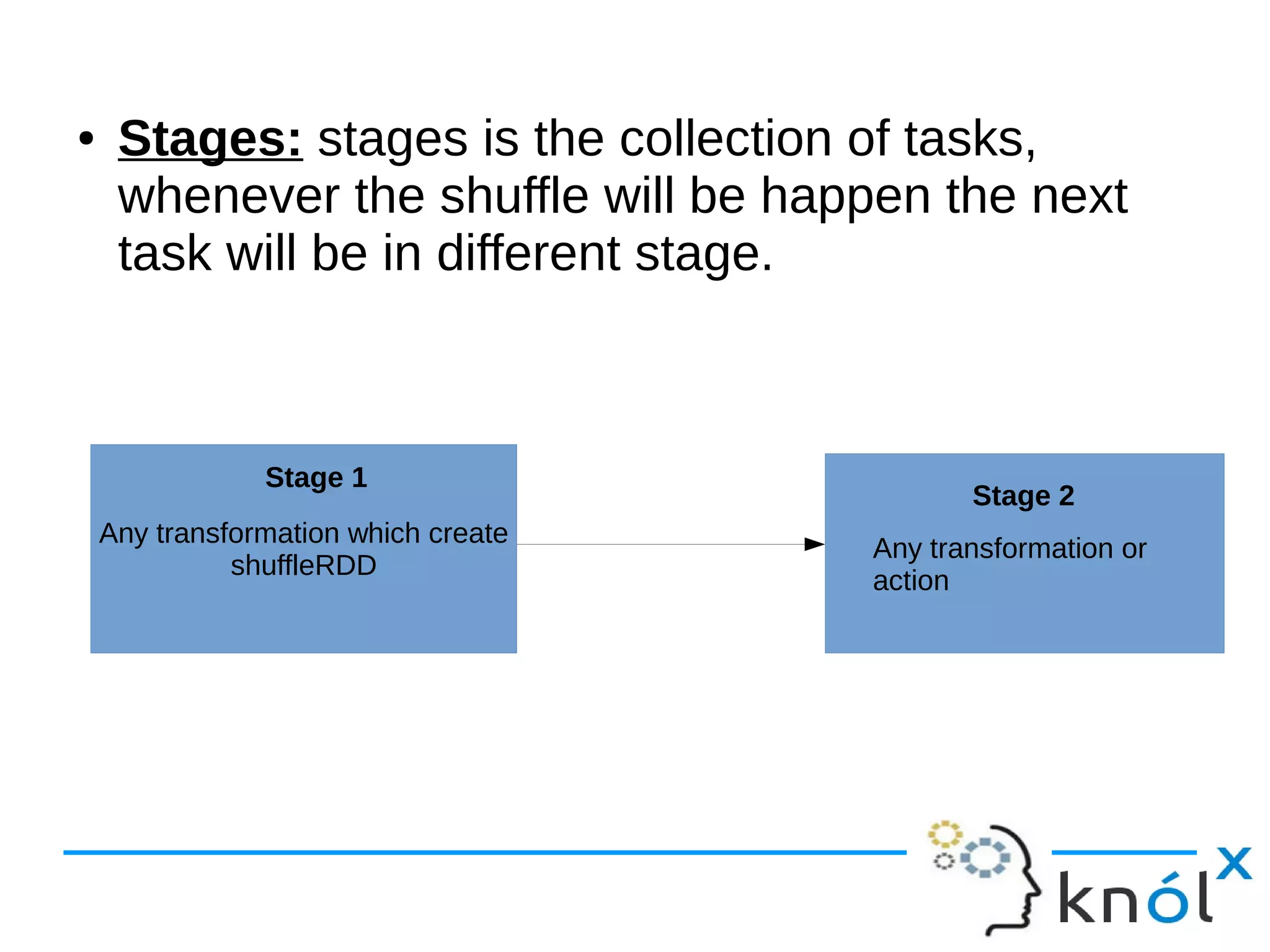

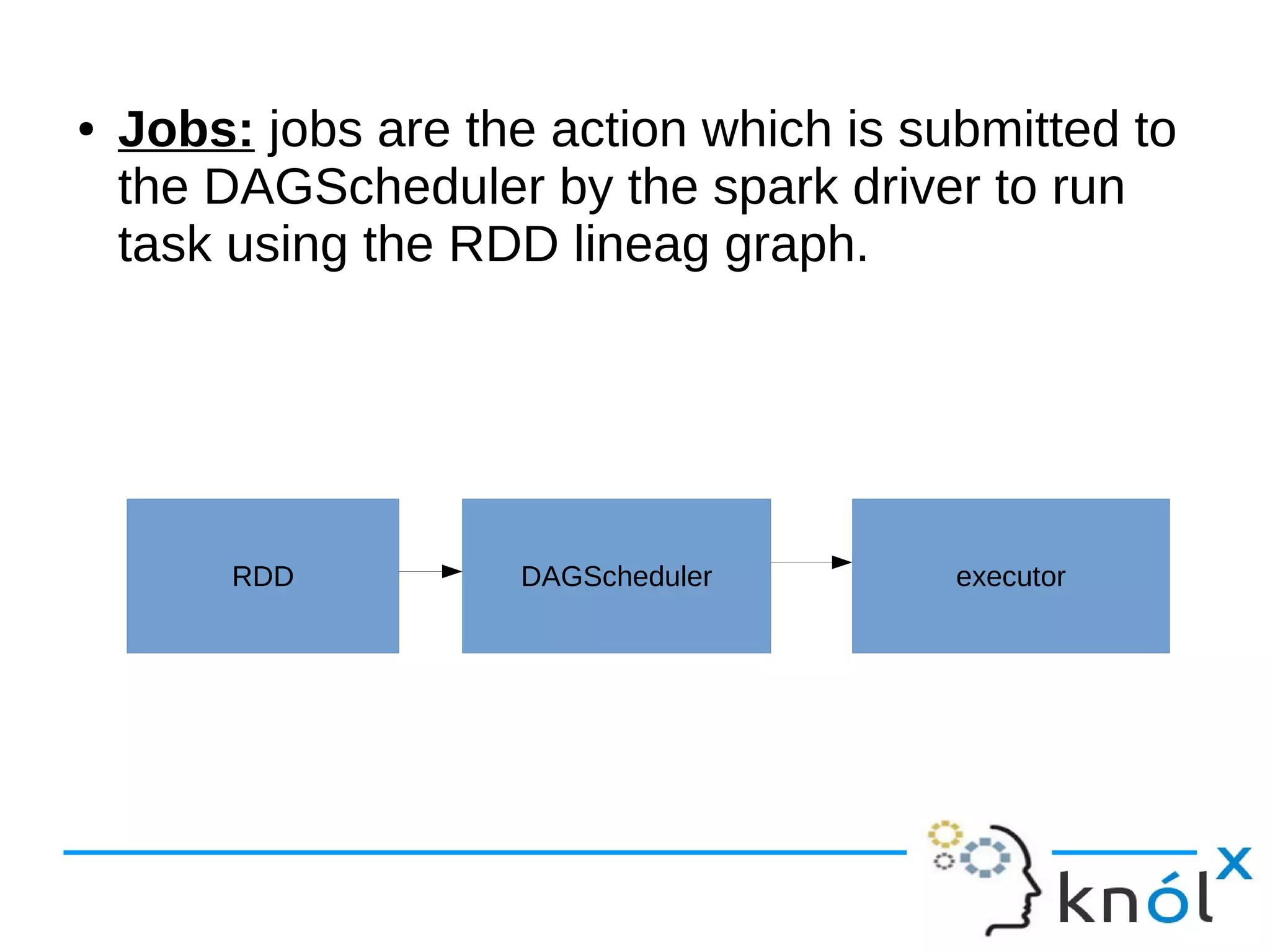

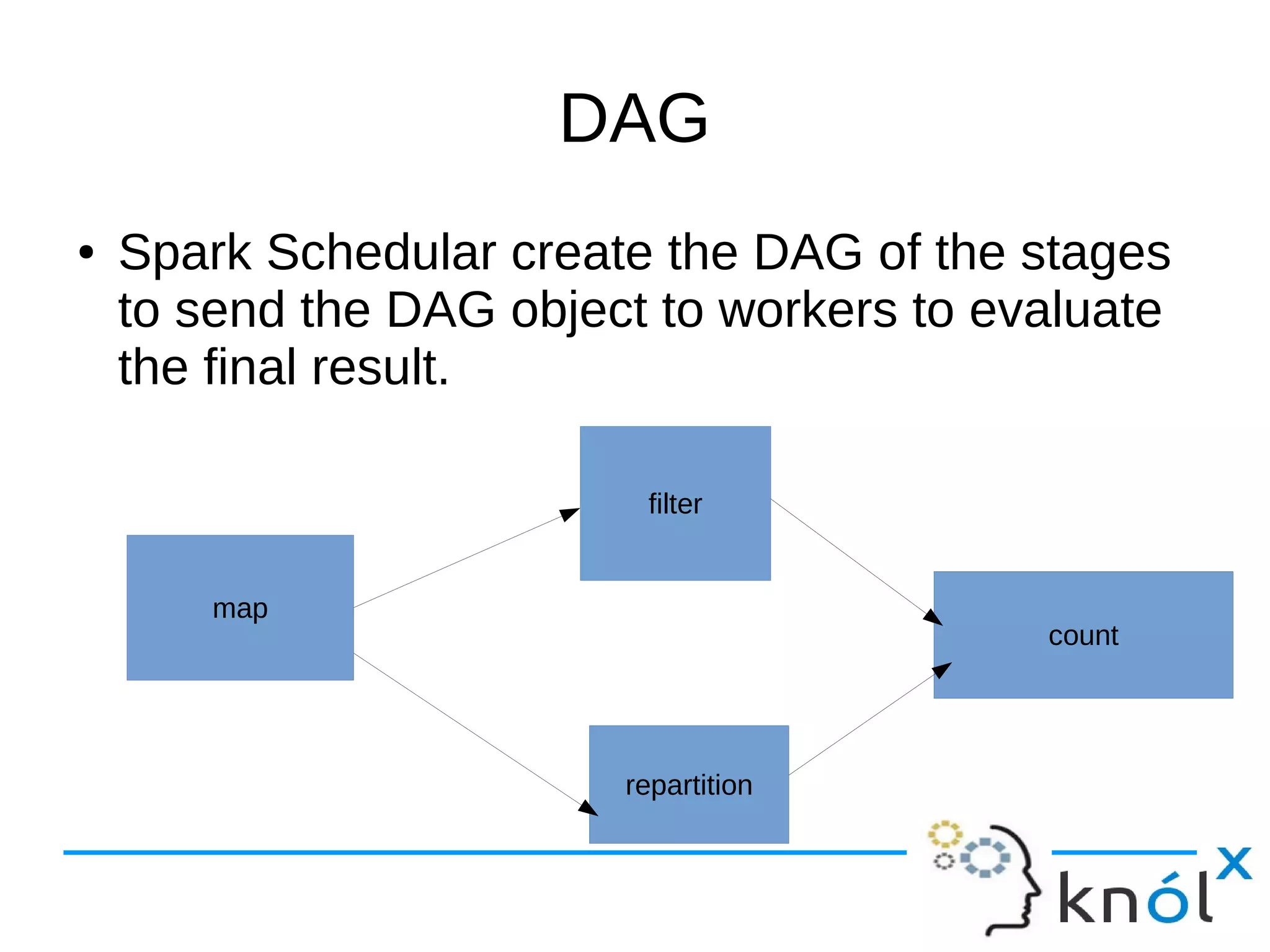

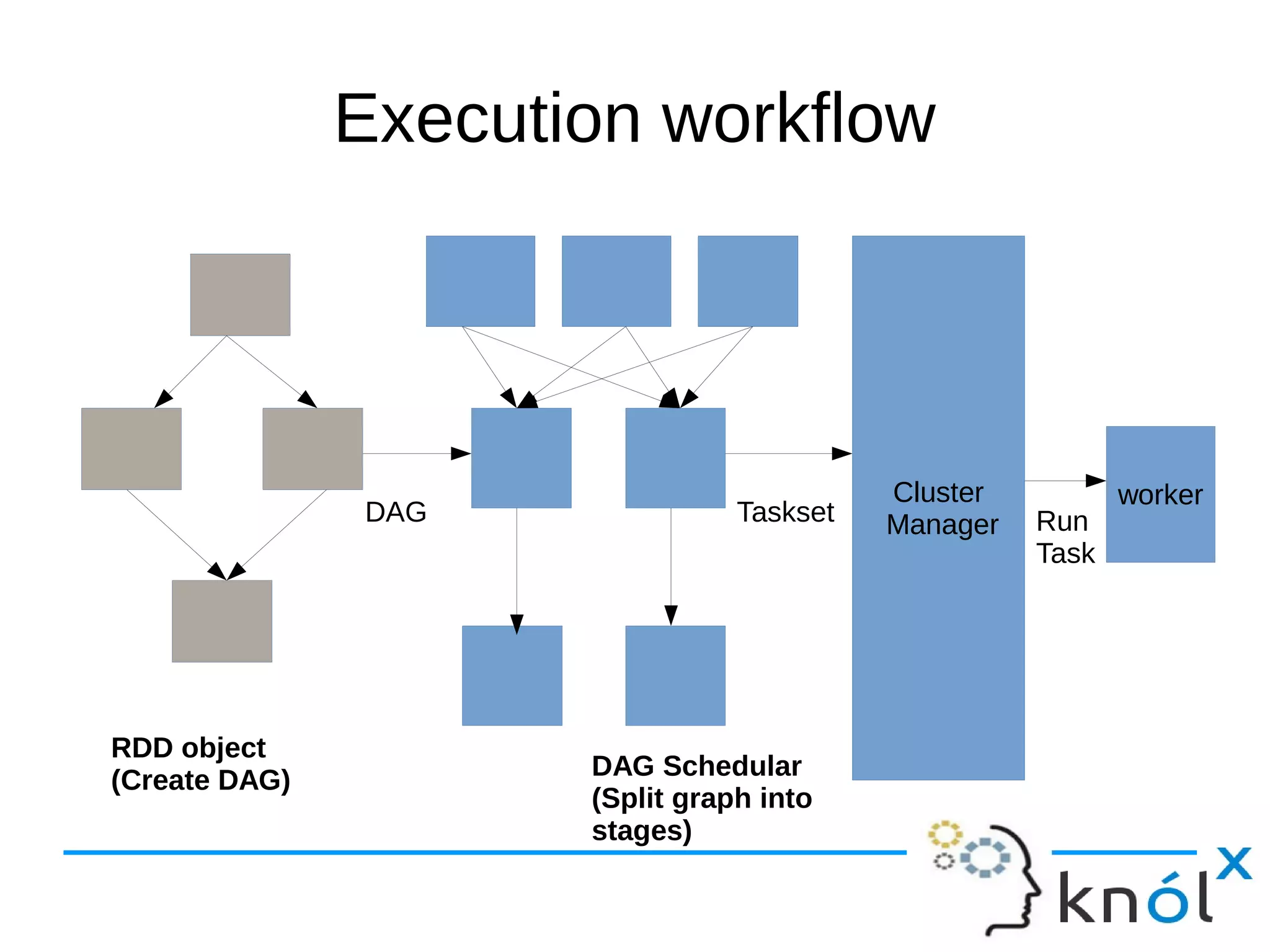

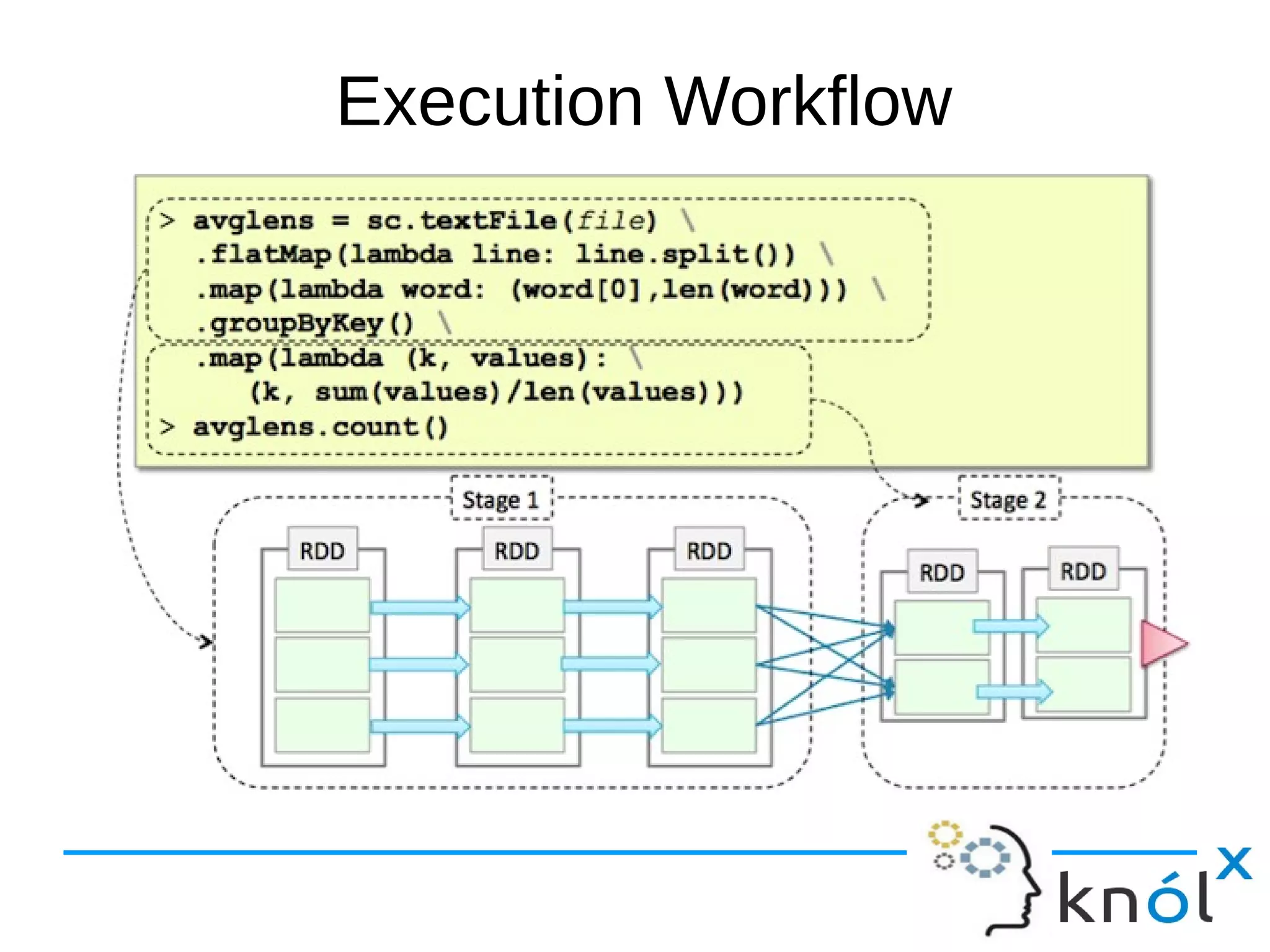

The document outlines the architecture and execution workflow of Apache Spark, covering the master node, worker nodes, executors, and the driver program. It explains how tasks, stages, and jobs relate: a task is the smallest unit of execution, a stage is a collection of tasks, and a job is the work submitted for processing when an action is called. It closes with a brief look at the execution workflow and a demo.
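
The job, stage, and task hierarchy summarized above can be illustrated with a small toy model in plain Python. This is only a sketch and does not use the Spark API: the names `Task`, `Stage`, `Job`, `plan_job`, and the `WIDE` set are hypothetical, and the model assumes that a new stage begins at each shuffle-requiring ("wide") transformation and that every stage runs one task per partition. Real Spark scheduling is more involved (for example, part of `reduceByKey` runs before the shuffle).

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Smallest unit of execution: one stage's work on one partition."""
    stage_id: int
    partition: int


@dataclass
class Stage:
    """A collection of tasks that run the same operations."""
    stage_id: int
    operations: list
    tasks: list = field(default_factory=list)


@dataclass
class Job:
    """The work submitted when an action is called."""
    action: str
    stages: list


# Hypothetical, non-exhaustive set of transformations that need a shuffle.
WIDE = {"reduceByKey", "groupByKey", "join", "repartition"}


def plan_job(transformations, action, num_partitions):
    """Split a chain of transformations into stages at shuffle boundaries."""
    groups = [[]]
    for op in transformations:
        # Assumption: a wide transformation starts a new stage.
        if op in WIDE and groups[-1]:
            groups.append([])
        groups[-1].append(op)

    stages = []
    for stage_id, ops in enumerate(groups):
        # Assumption: one task per partition in every stage.
        tasks = [Task(stage_id, p) for p in range(num_partitions)]
        stages.append(Stage(stage_id, ops, tasks))
    return Job(action, stages)


job = plan_job(["flatMap", "map", "reduceByKey"], "collect", num_partitions=4)
for stage in job.stages:
    print(stage.stage_id, stage.operations, len(stage.tasks))
```

In this sketch a word-count-style pipeline over four partitions yields one job with two stages of four tasks each. The narrow transformations (`flatMap`, `map`) share a stage, and the shuffle introduced by `reduceByKey` starts the next one.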