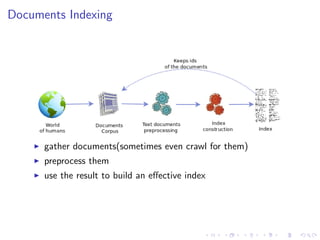

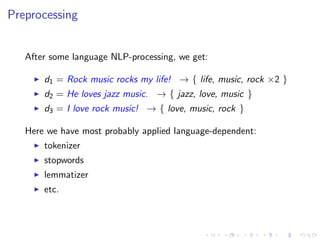

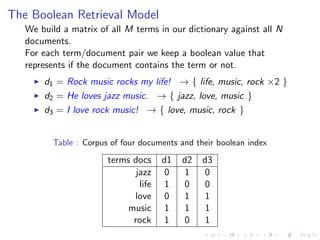

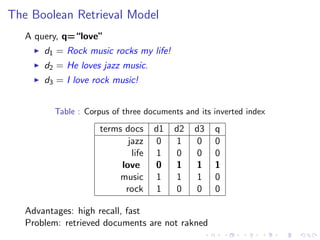

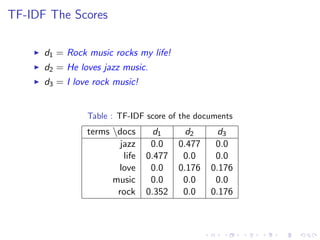

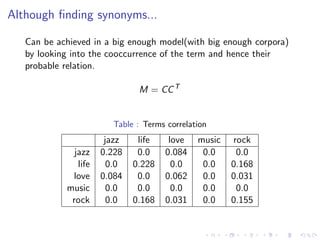

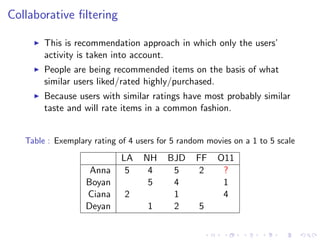

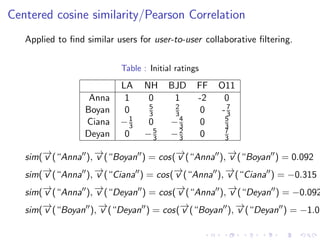

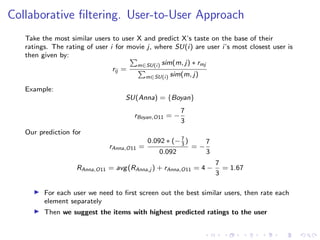

This document introduces information retrieval and recommender systems. It begins by defining information retrieval as finding documents that satisfy an information need within large collections. It then discusses how documents are preprocessed and indexed, including tokenization, stop-word removal, and lemmatization, and introduces the Boolean and vector-space retrieval models. It also defines recommender systems as systems that suggest items of interest to users based on their preferences, and describes the collaborative-filtering and content-based approaches.

![The Inverted Index and the Vector-Space Model

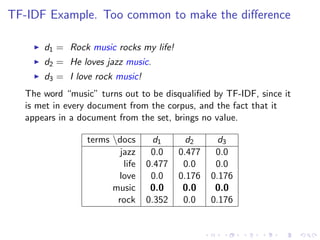

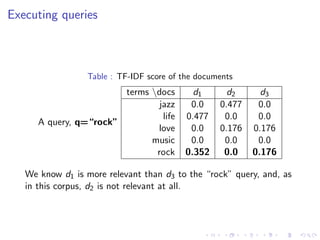

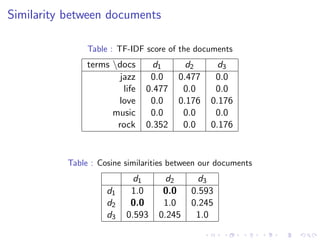

Term-document matrix C[MxN]for M terms and N documents.

Table : We need weights for each term-document couple

terms docs d1 d2 ... dN

t1 w1,1 w1,2 ... w1,N

t2 w2,1 w2,2 ... w2,N

... ... ... ... ...

tM wM,1 wM,2 ... wM,N](https://image.slidesharecdn.com/informationretrievaltorecommendersystems-150519143029-lva1-app6891/85/Information-retrieval-to-recommender-systems-13-320.jpg)
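To make the term-document matrix concrete, here is a minimal Python sketch that fills C with one weight per term-document pair. The slide does not say which weighting scheme is meant; TF-IDF (raw term count times log(N / document frequency)) is assumed here as one common choice. The function name `term_document_matrix`, the whitespace tokenizer, and the three toy documents are all illustrative assumptions.

```python
import math
from collections import Counter


def term_document_matrix(docs):
    """Return (terms, C), where C[i][j] is the weight of term i in document j.

    Weights are TF-IDF (an assumed scheme): raw count * log(N / df).
    """
    # Naive tokenization: lowercase and split on whitespace.
    tokenized = [doc.lower().split() for doc in docs]
    terms = sorted({t for tokens in tokenized for t in tokens})
    n_docs = len(docs)

    # Document frequency: in how many documents each term occurs.
    df = Counter(t for tokens in tokenized for t in set(tokens))
    counts = [Counter(tokens) for tokens in tokenized]

    # One row per term, one column per document (M rows, N columns).
    matrix = [
        [counts[j][t] * math.log(n_docs / df[t]) for j in range(n_docs)]
        for t in terms
    ]
    return terms, matrix


docs = ["the cat sat", "the dog sat", "the cat ran"]  # toy collection
terms, C = term_document_matrix(docs)

for term, row in zip(terms, C):
    print(term, [round(w, 3) for w in row])
```

Under this weighting, a term that appears in every document (here "the") gets weight 0 everywhere, while a term unique to one document gets the largest weight in that column.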

![The problem with dimensionality and sparsity](https://image.slidesharecdn.com/informationretrievaltorecommendersystems-150519143029-lva1-app6891/85/Information-retrieval-to-recommender-systems-42-320.jpg)

Imagine...

N = 10,000,000 users

200,000 items

in a vector space of M = 1,000,000 terms

How do we use our sparse matrix C[N×M]?

OMG!!! This is big data!!! ;)
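A rough way to see why the matrix has to be treated as sparse: with the figures from the slide, a dense C[N×M] has 10^13 cells, which at an assumed 8 bytes per weight is 80 TB. The sketch below works out that number and shows one simple alternative, storing only the nonzero weights in a dictionary per row. The `SparseMatrix` class and `sparse_dot` helper are hypothetical names for illustration, not something taken from the slides; production systems would more likely use a compressed format from a library such as `scipy.sparse`.

```python
N_USERS = 10_000_000   # N from the slide
M_TERMS = 1_000_000    # M from the slide

# Cost of a dense matrix, assuming 8-byte floating-point weights.
dense_cells = N_USERS * M_TERMS
dense_bytes = dense_cells * 8
print(f"dense storage: {dense_bytes / 10**12:.0f} TB")


class SparseMatrix:
    """Dictionary-of-rows storage: only nonzero weights are kept."""

    def __init__(self):
        self.rows = {}  # row index -> {column index: weight}

    def set(self, i, j, weight):
        if weight != 0:
            self.rows.setdefault(i, {})[j] = weight

    def get(self, i, j):
        return self.rows.get(i, {}).get(j, 0.0)

    def nnz(self):
        """Number of stored (nonzero) entries."""
        return sum(len(row) for row in self.rows.values())


def sparse_dot(a, b):
    """Dot product of two sparse rows, iterating over the shorter one."""
    if len(a) > len(b):
        a, b = b, a
    return sum(w * b[j] for j, w in a.items() if j in b)


C = SparseMatrix()
C.set(0, 42, 1.5)
C.set(0, 999_999, 2.0)
C.set(9_999_999, 42, 3.0)

# Only the one shared column (42) contributes to the dot product.
similarity = sparse_dot(C.rows[0], C.rows[9_999_999])
print(C.nnz(), similarity)
```

Memory and the cost of comparing two rows now scale with the number of nonzero entries rather than with N × M, which is what makes vector-space operations feasible at this size.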