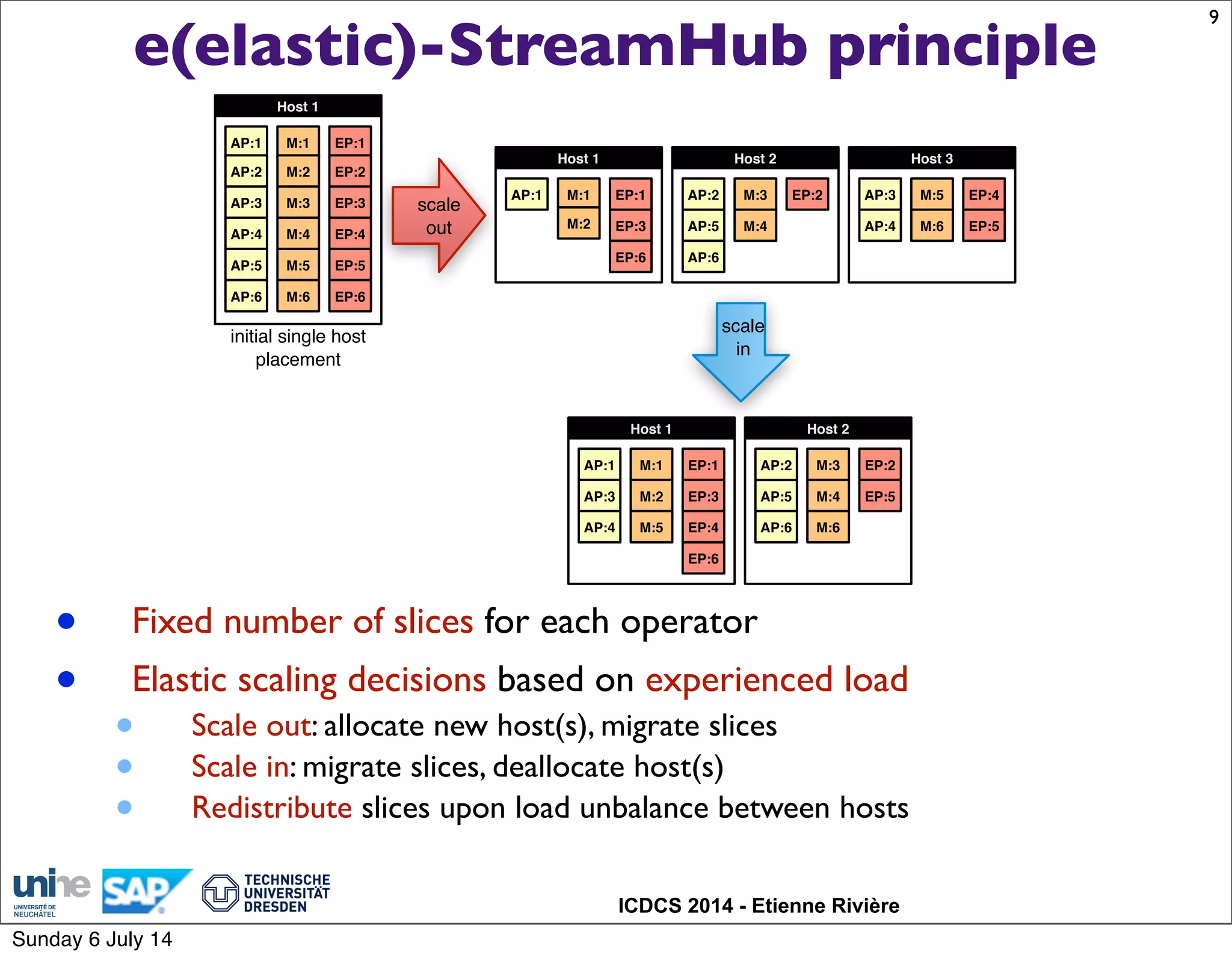

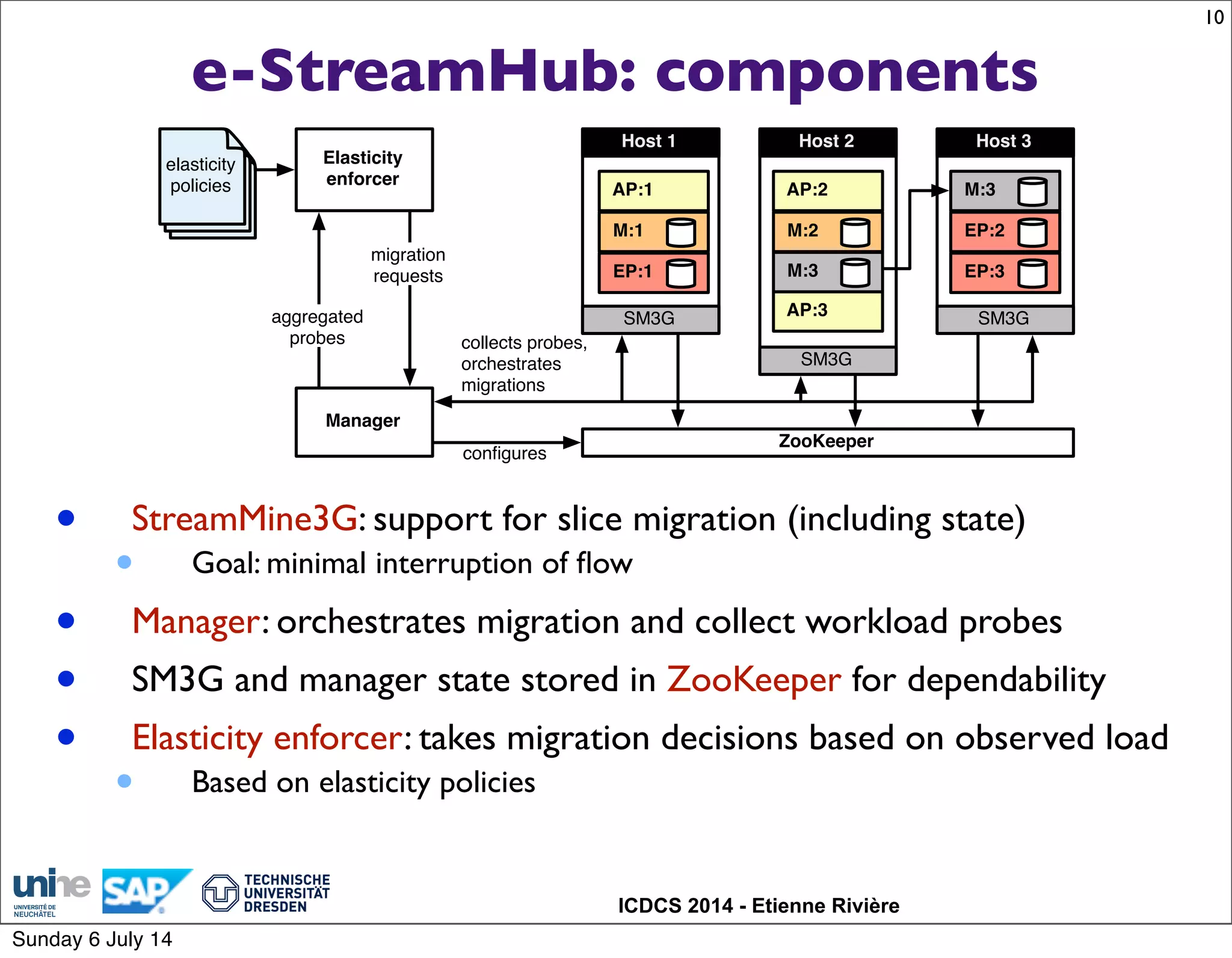

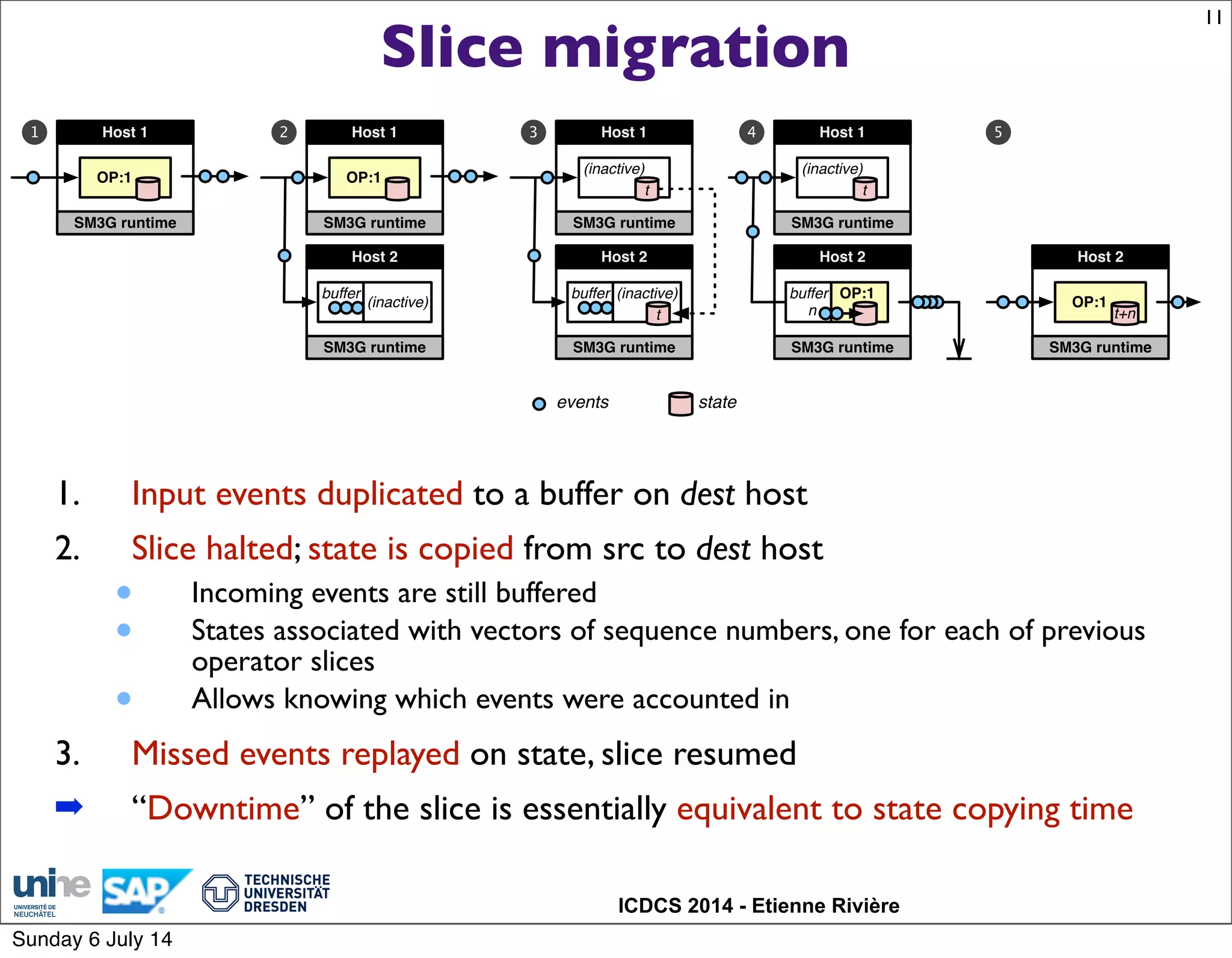

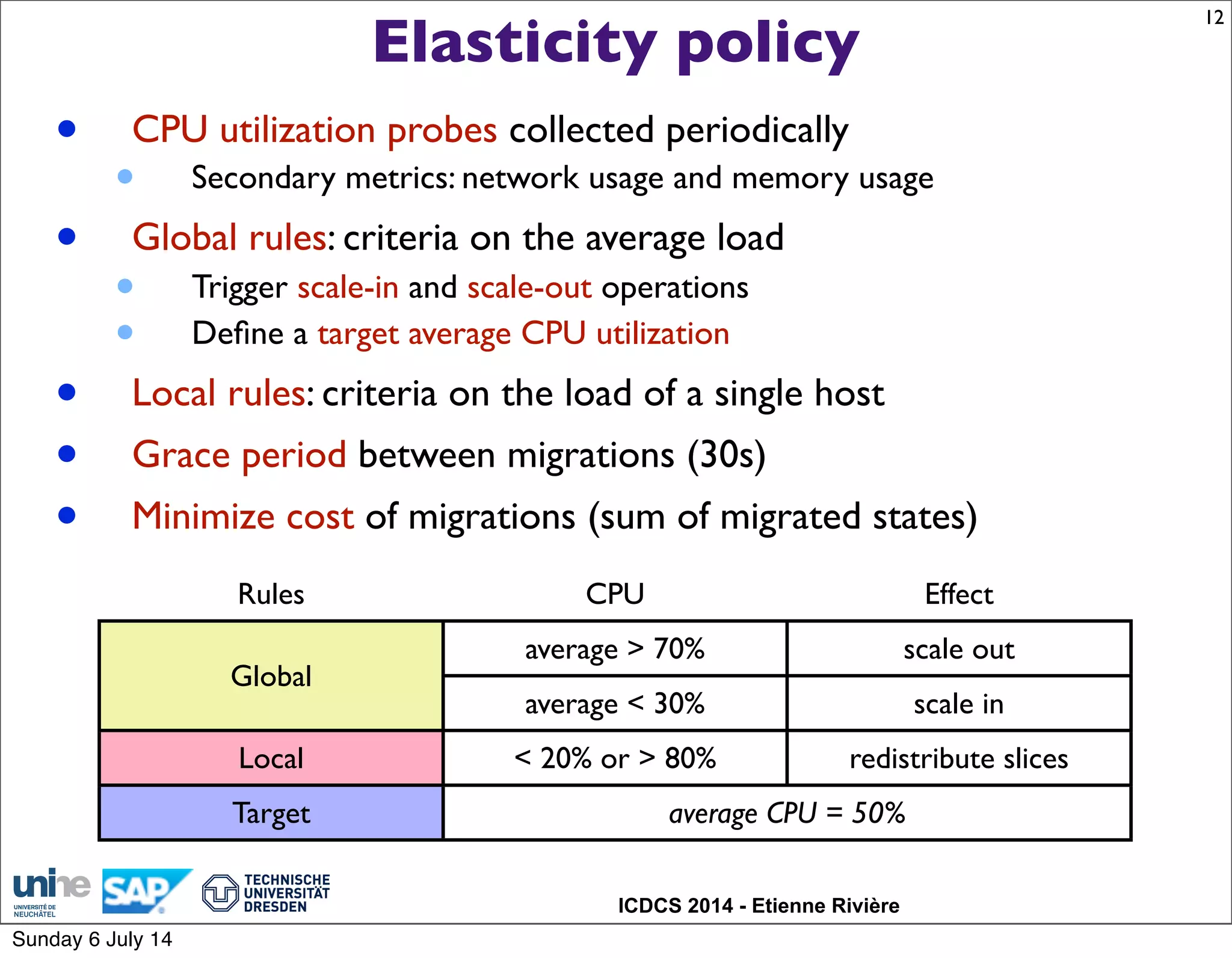

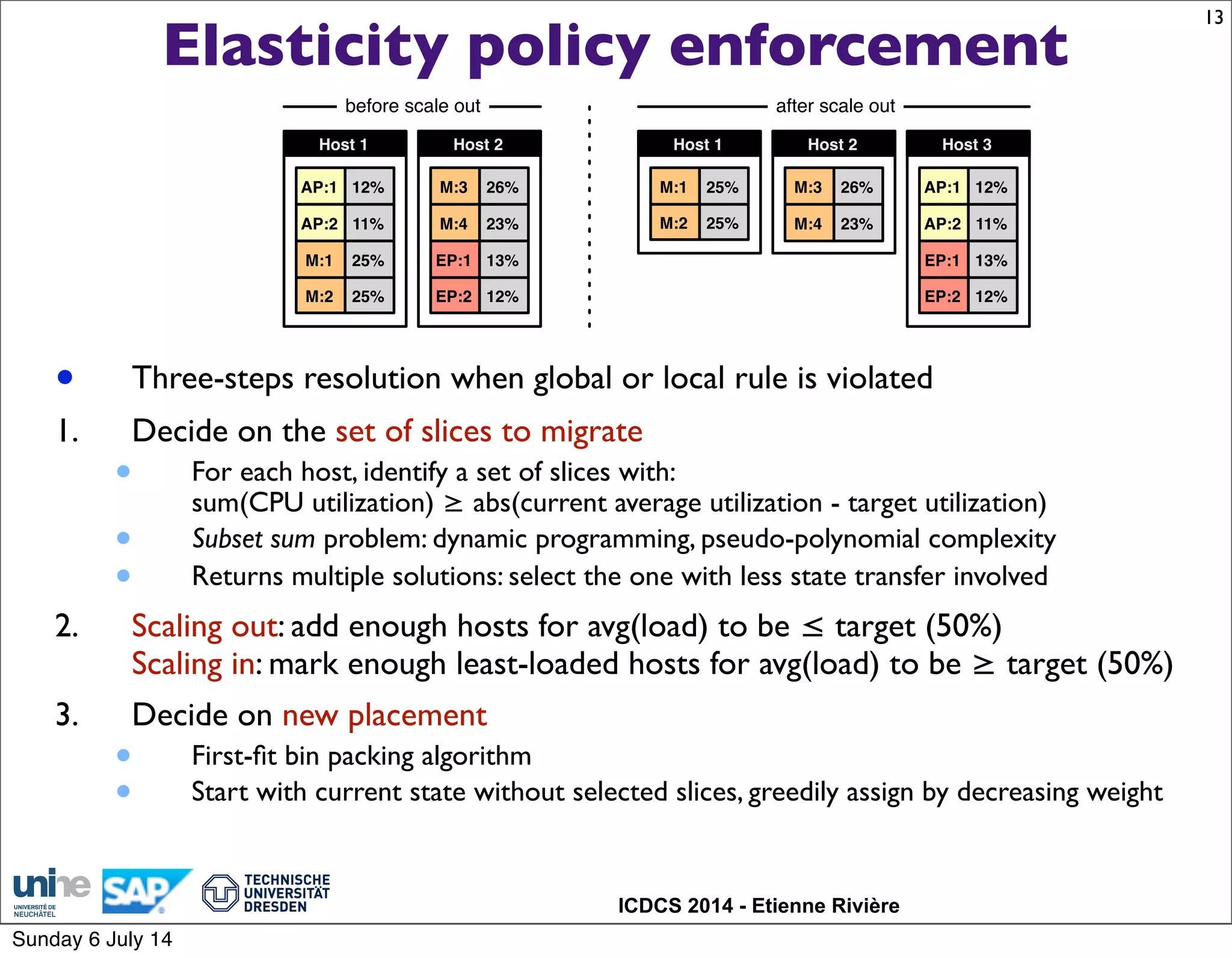

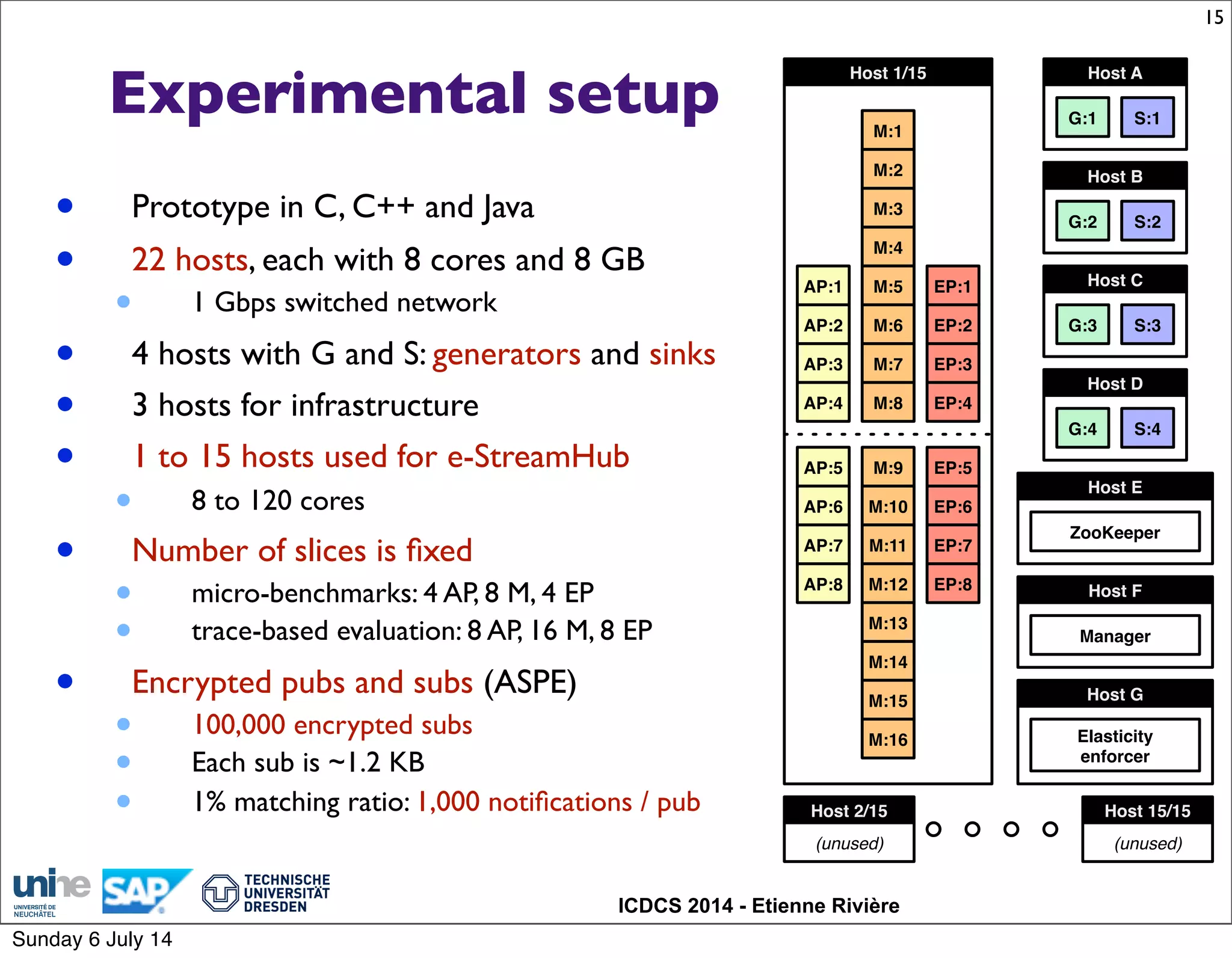

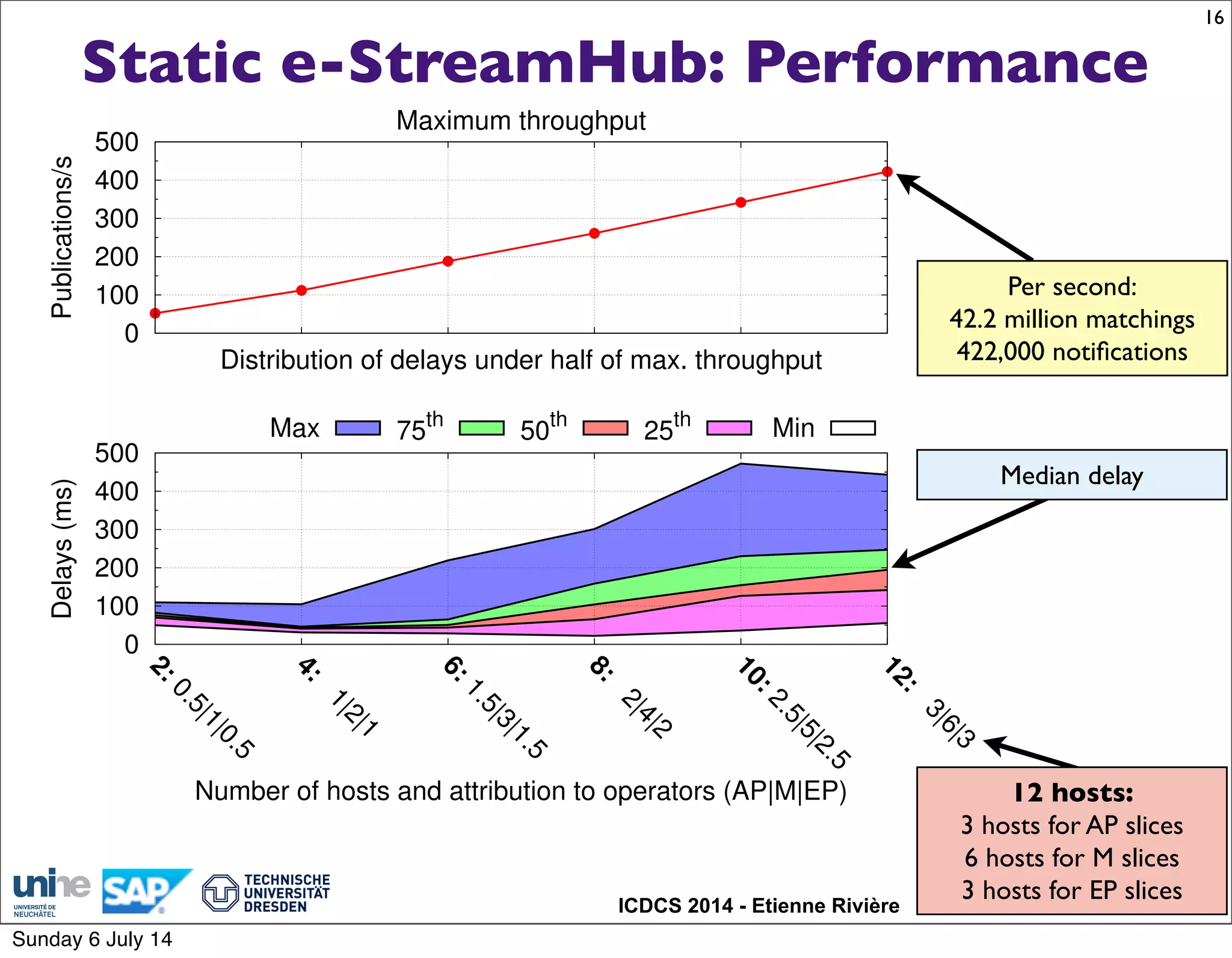

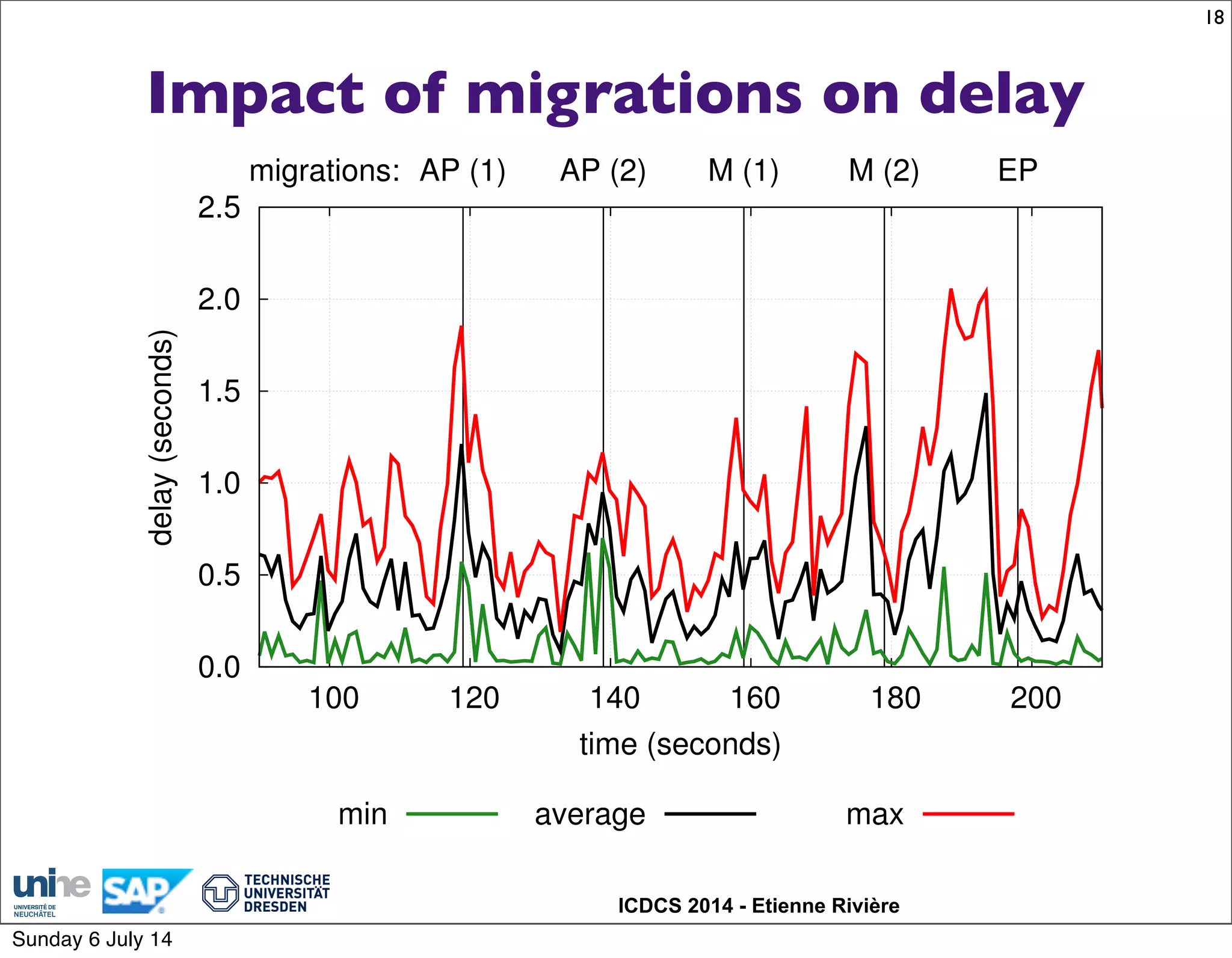

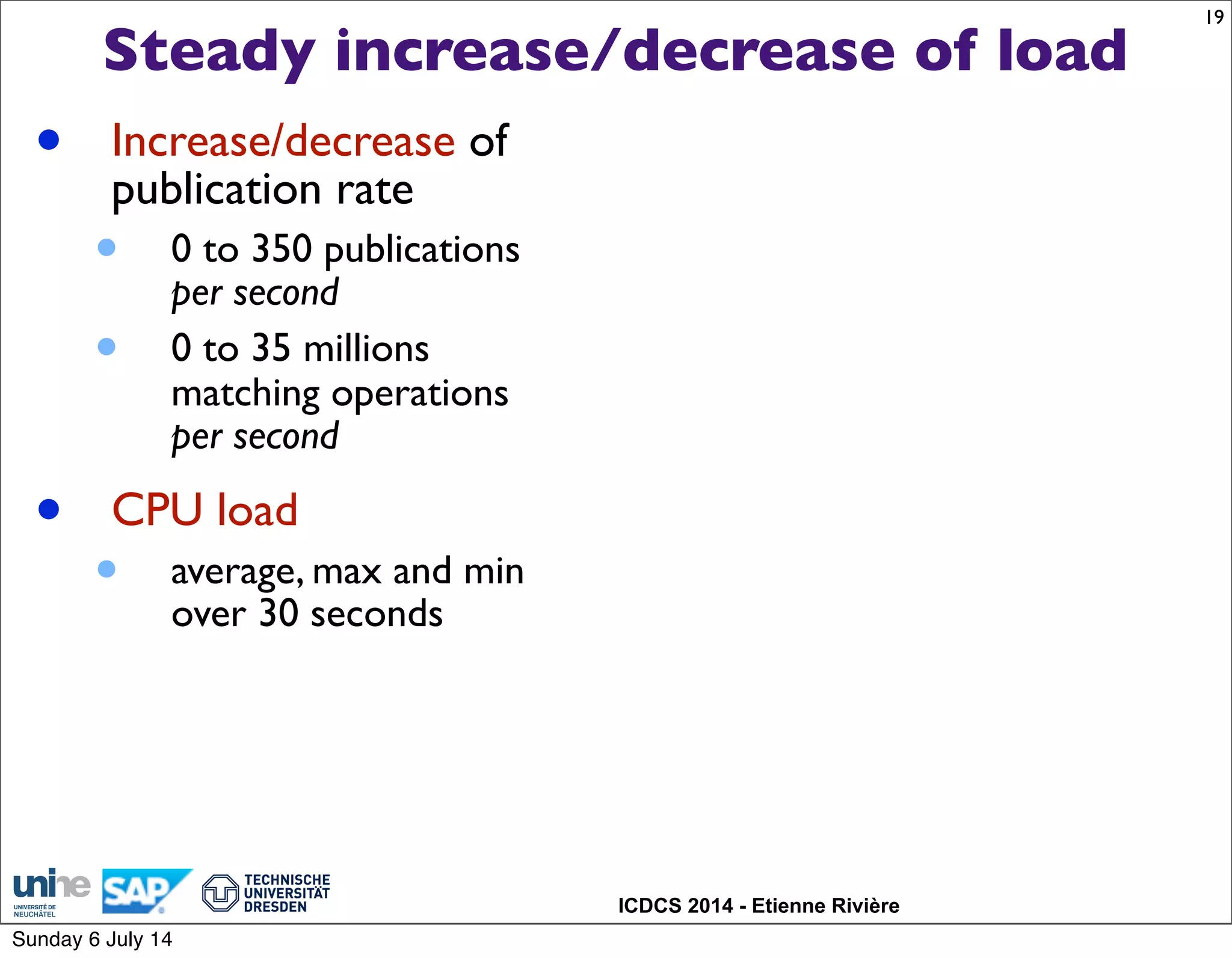

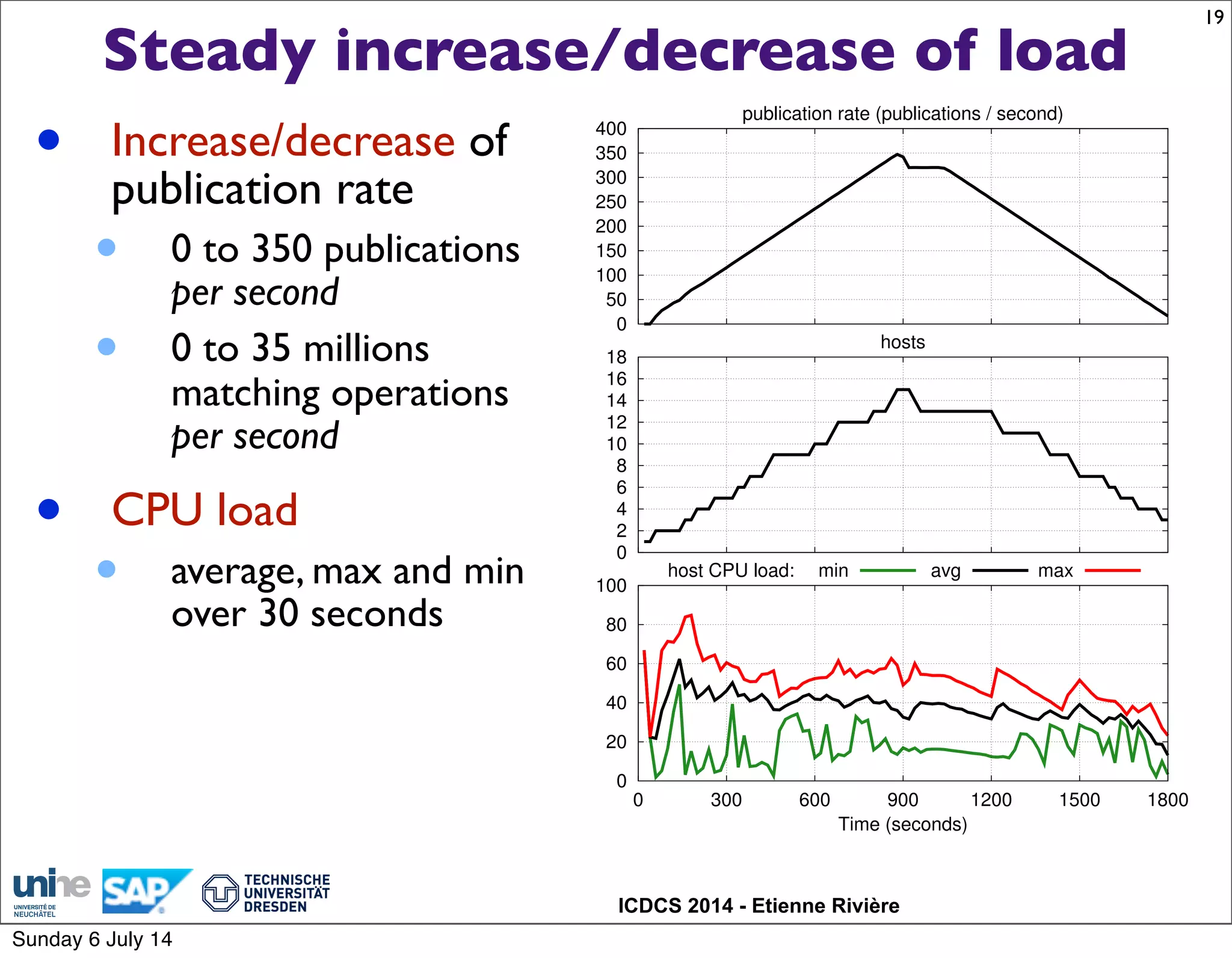

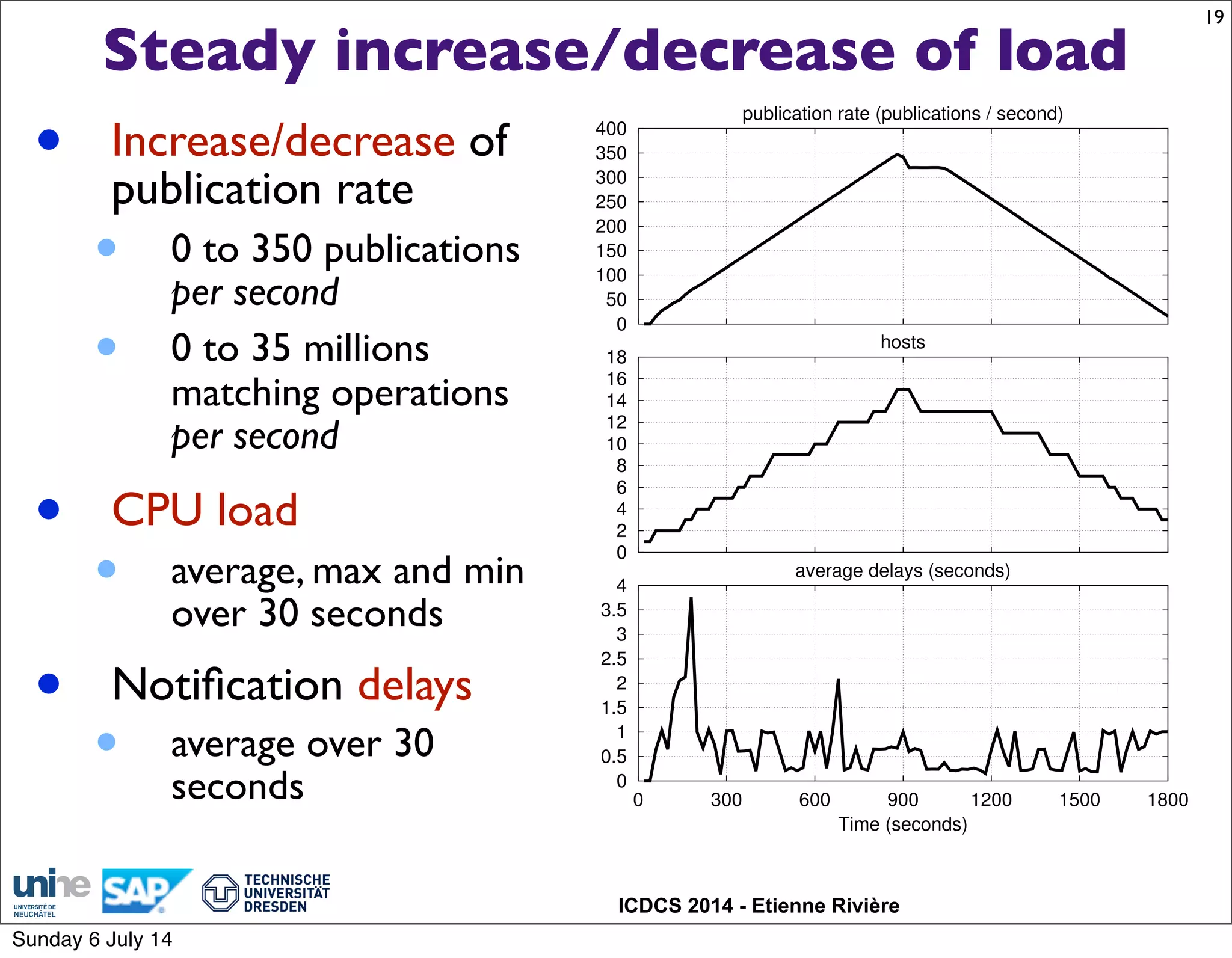

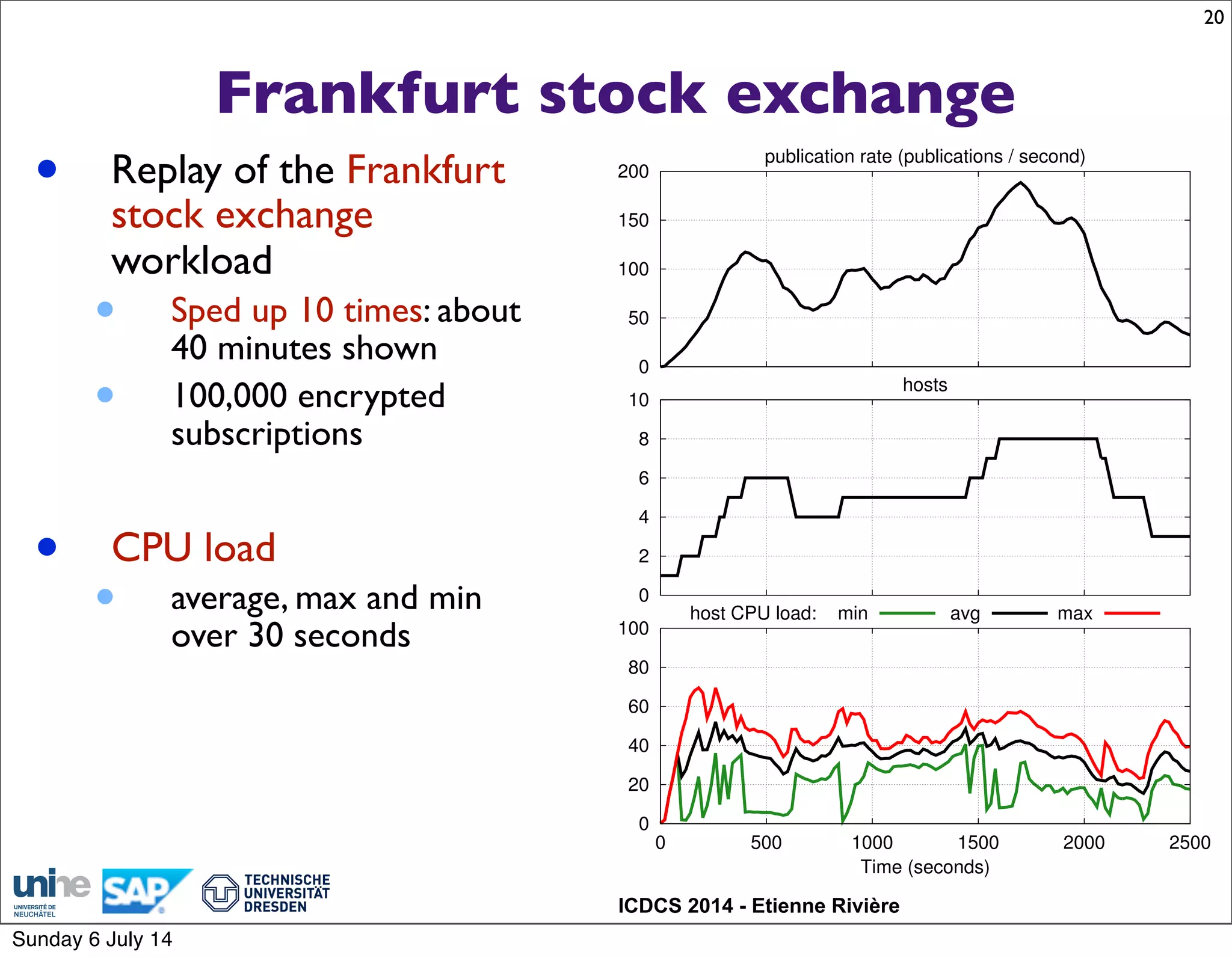

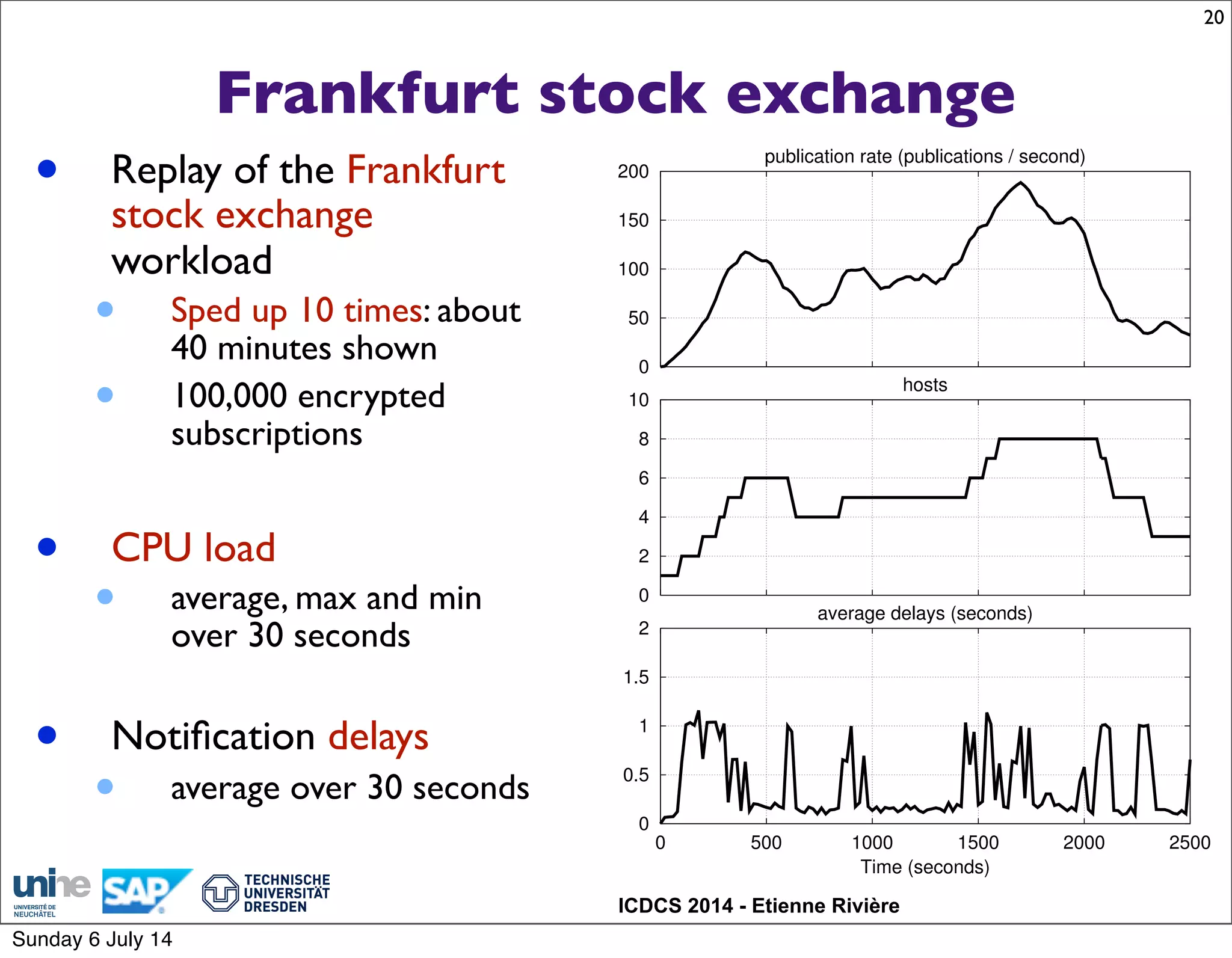

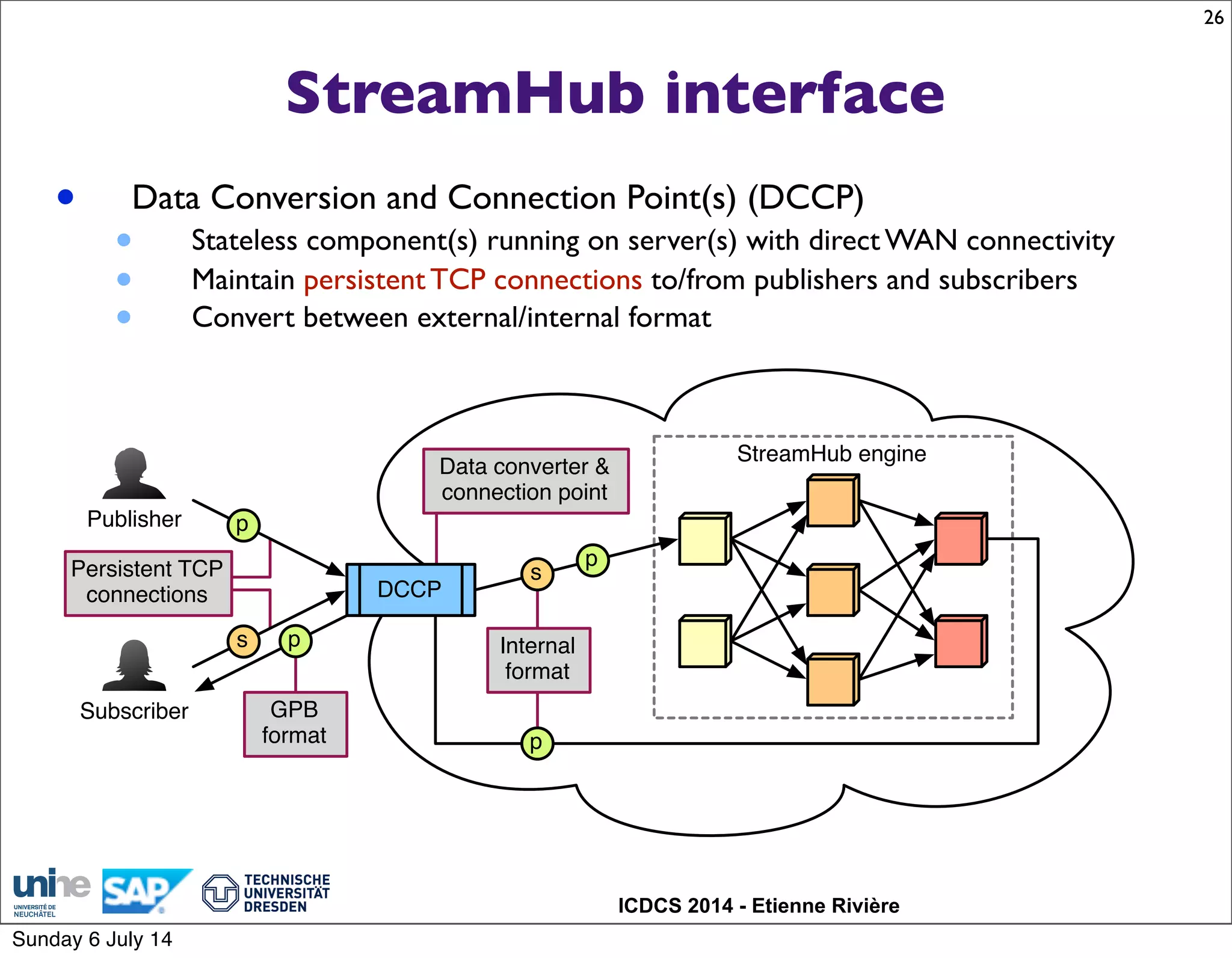

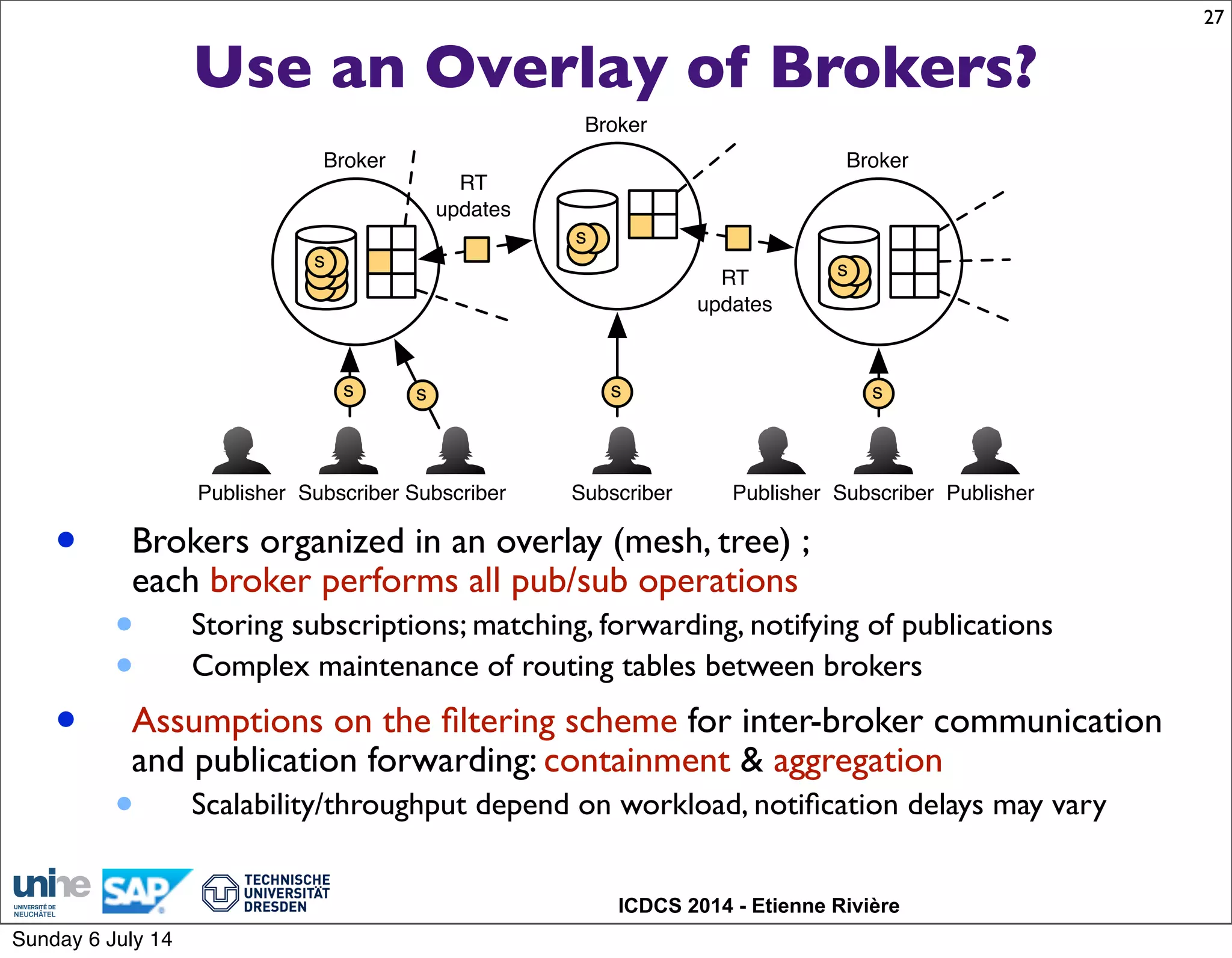

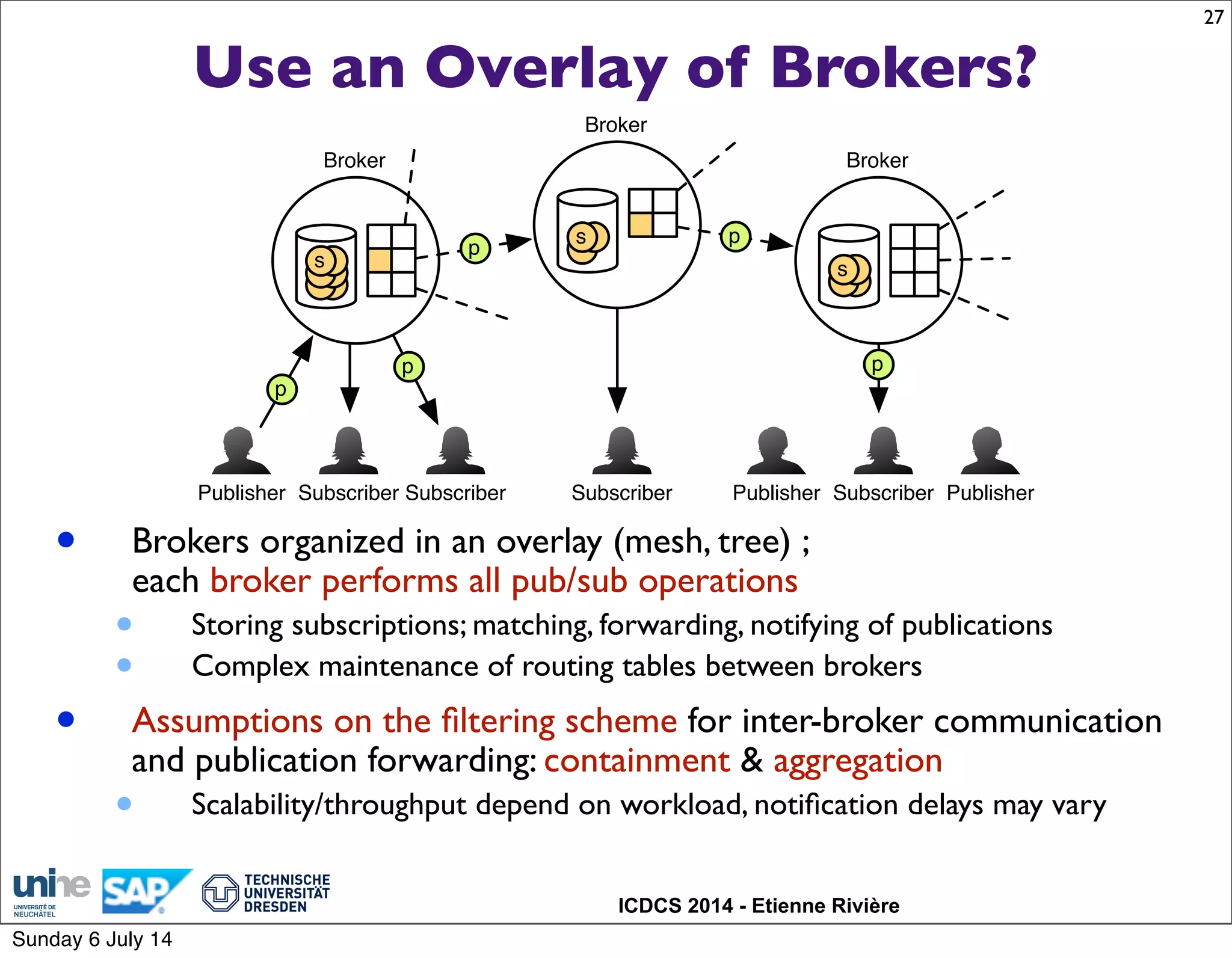

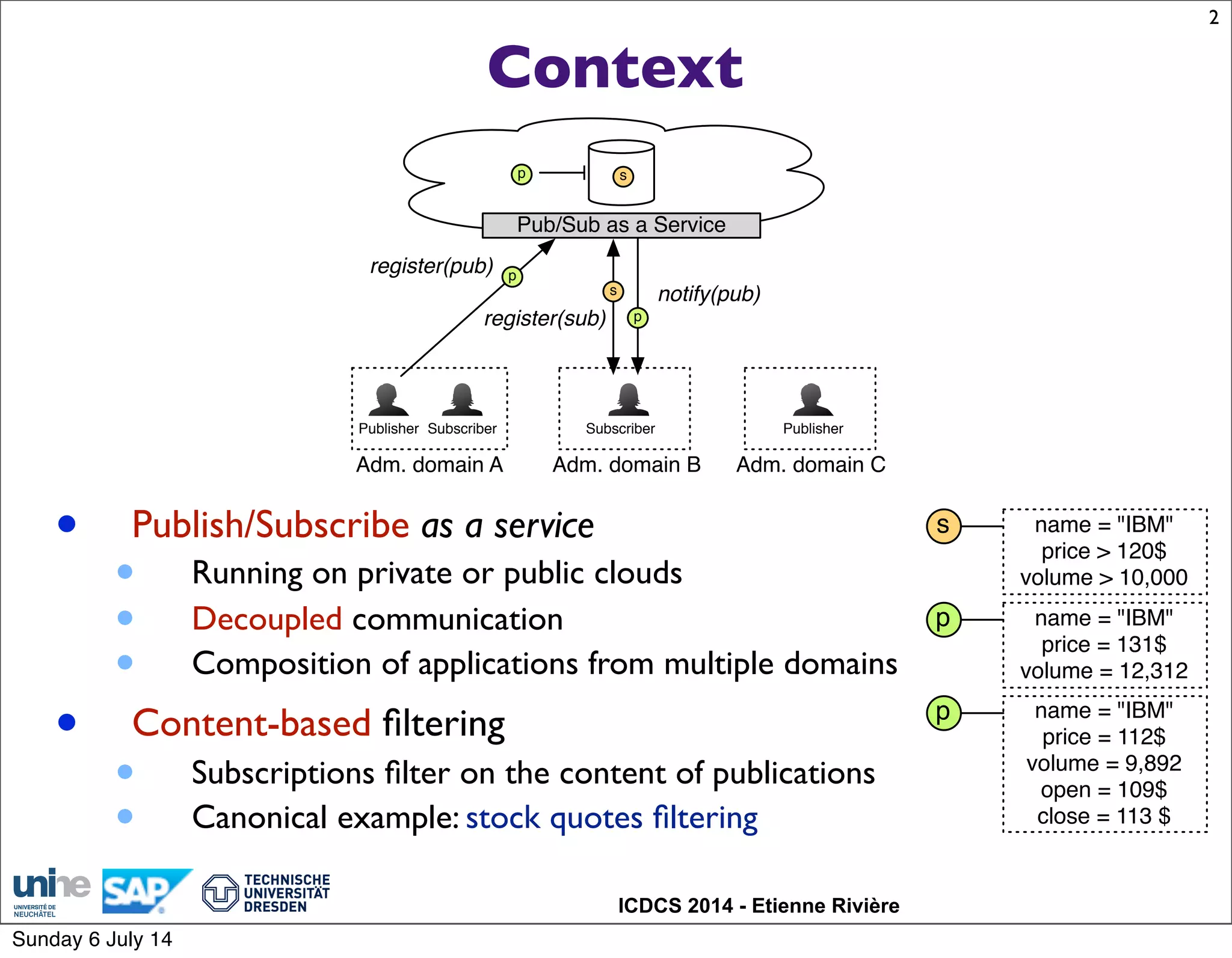

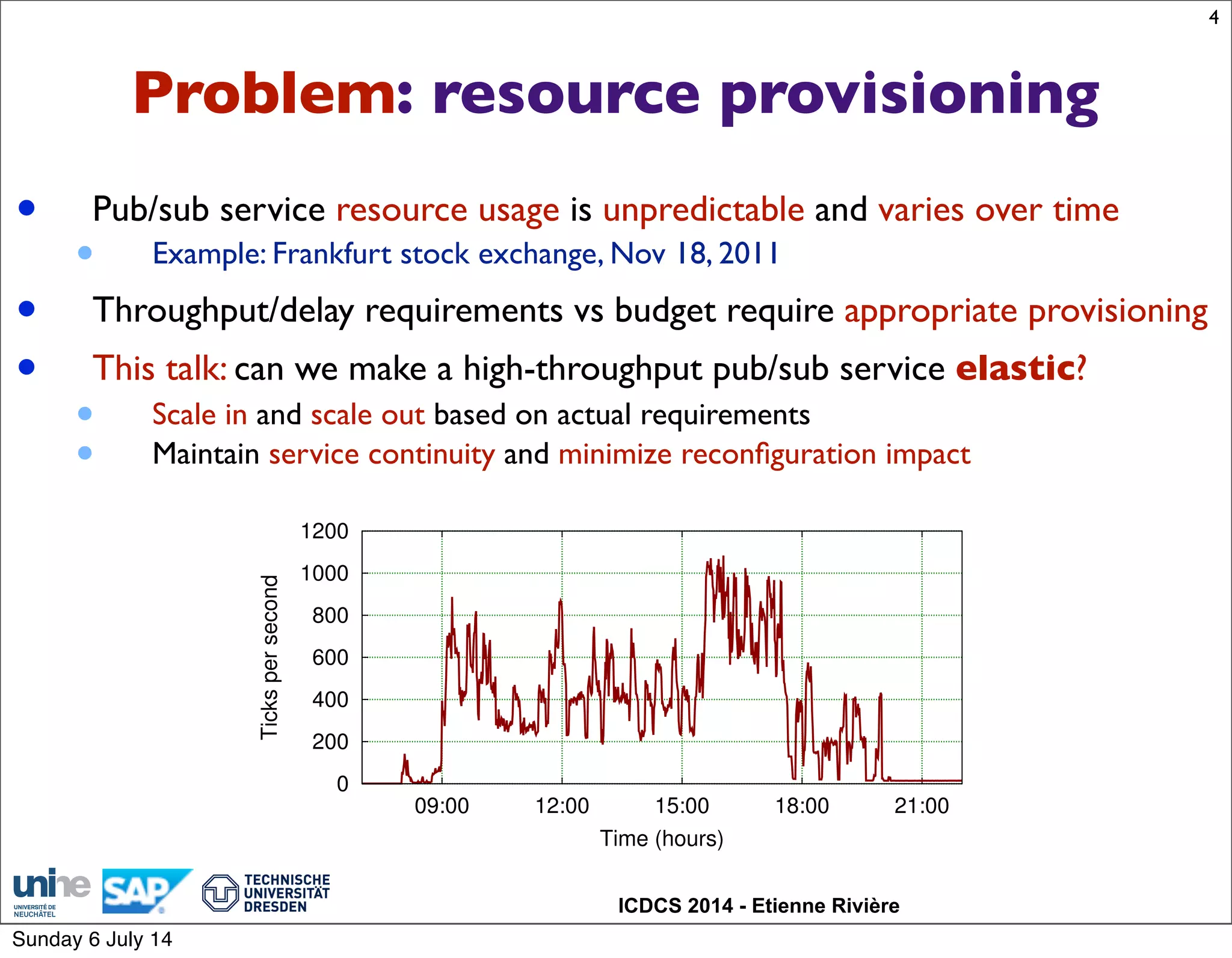

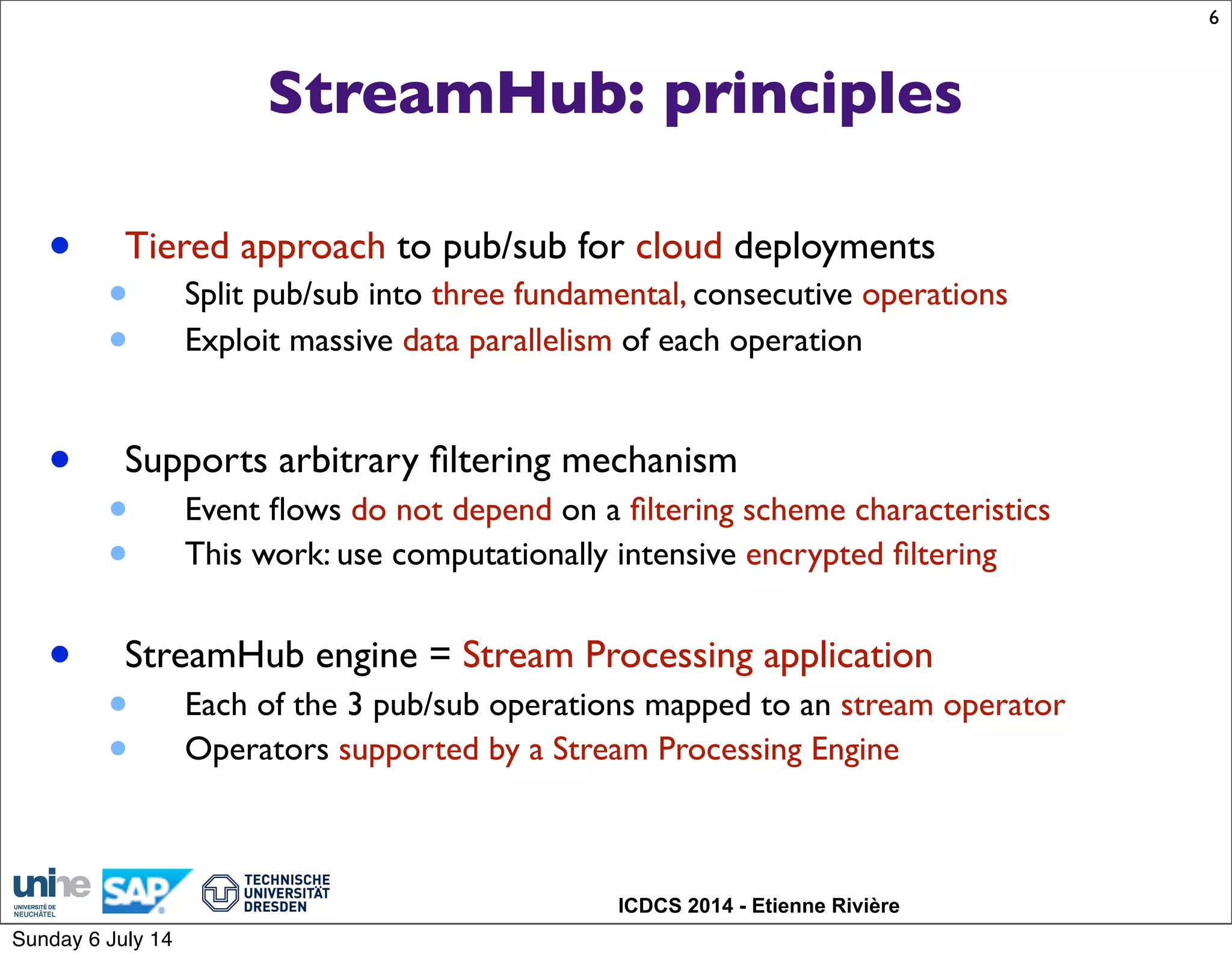

The document discusses the elastic scaling of StreamHub, a high-throughput content-based publish/subscribe engine, covering its architecture, scalability requirements and resource provisioning. It shows how the engine adjusts its resources to the current load, using efficient slice migration and elasticity policies, while preserving service continuity under high volumes of subscriptions and notifications. The evaluation assesses performance with real-world workloads and demonstrates the engine's effectiveness in cloud environments.
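The summary mentions elasticity policies and slice migration without detailing them. As a rough illustration only, the Python sketch below shows one generic form such a policy could take. It applies a threshold rule on average host CPU load. To scale out, it migrates operator slices to a newly provisioned host. To scale in, it migrates every slice off the least-loaded host and releases that host. The thresholds (`HIGH_CPU`, `LOW_CPU`) and all names (`Host`, `migrate`, `apply_policy`) are hypothetical and are not taken from StreamHub.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds for this sketch; not StreamHub's actual values.
HIGH_CPU = 0.7  # scale out when average host load exceeds this
LOW_CPU = 0.3   # scale in when average host load falls below this


@dataclass
class Host:
    name: str
    slices: dict = field(default_factory=dict)  # slice id -> CPU load it adds

    def load(self) -> float:
        return sum(self.slices.values())


def average_load(hosts):
    return sum(h.load() for h in hosts) / len(hosts)


def migrate(slice_id, src, dst):
    # Move one operator slice (and the load it contributes) from src to dst.
    dst.slices[slice_id] = src.slices.pop(slice_id)


def apply_policy(hosts, new_host_name):
    """One decision step of a hypothetical threshold-based elasticity policy."""
    avg = average_load(hosts)
    if avg > HIGH_CPU:
        # Scale out: add a host, then migrate the lightest slices off the
        # busiest host until the new host carries a fair share of the load.
        target = sum(h.load() for h in hosts) / (len(hosts) + 1)
        new = Host(new_host_name)
        busiest = max(hosts, key=Host.load)
        for sid in sorted(busiest.slices, key=busiest.slices.get):
            if new.load() >= target or len(busiest.slices) == 1:
                break
            migrate(sid, busiest, new)
        hosts.append(new)
    elif avg < LOW_CPU and len(hosts) > 1:
        # Scale in: release the least-loaded host after moving each of its
        # slices to whichever remaining host is currently least loaded.
        idlest = min(hosts, key=Host.load)
        hosts.remove(idlest)
        for sid in list(idlest.slices):
            migrate(sid, idlest, min(hosts, key=Host.load))
    return hosts
```

Moving the lightest slices first is only one possible choice. A real policy would also weigh migration cost and state size, for example the persistent subscriptions held by matching slices.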

![ICDCS 2014 - Etienne Rivière

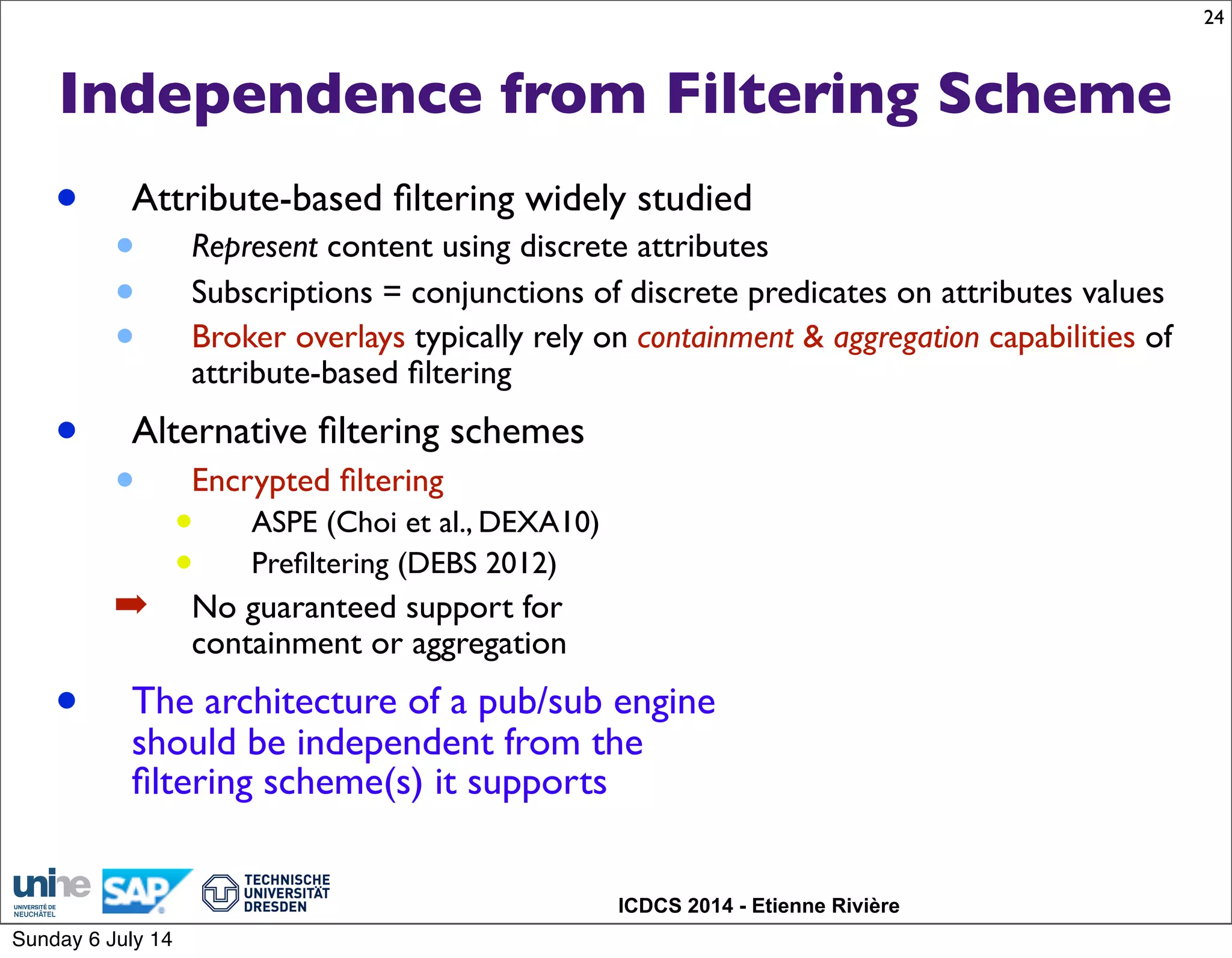

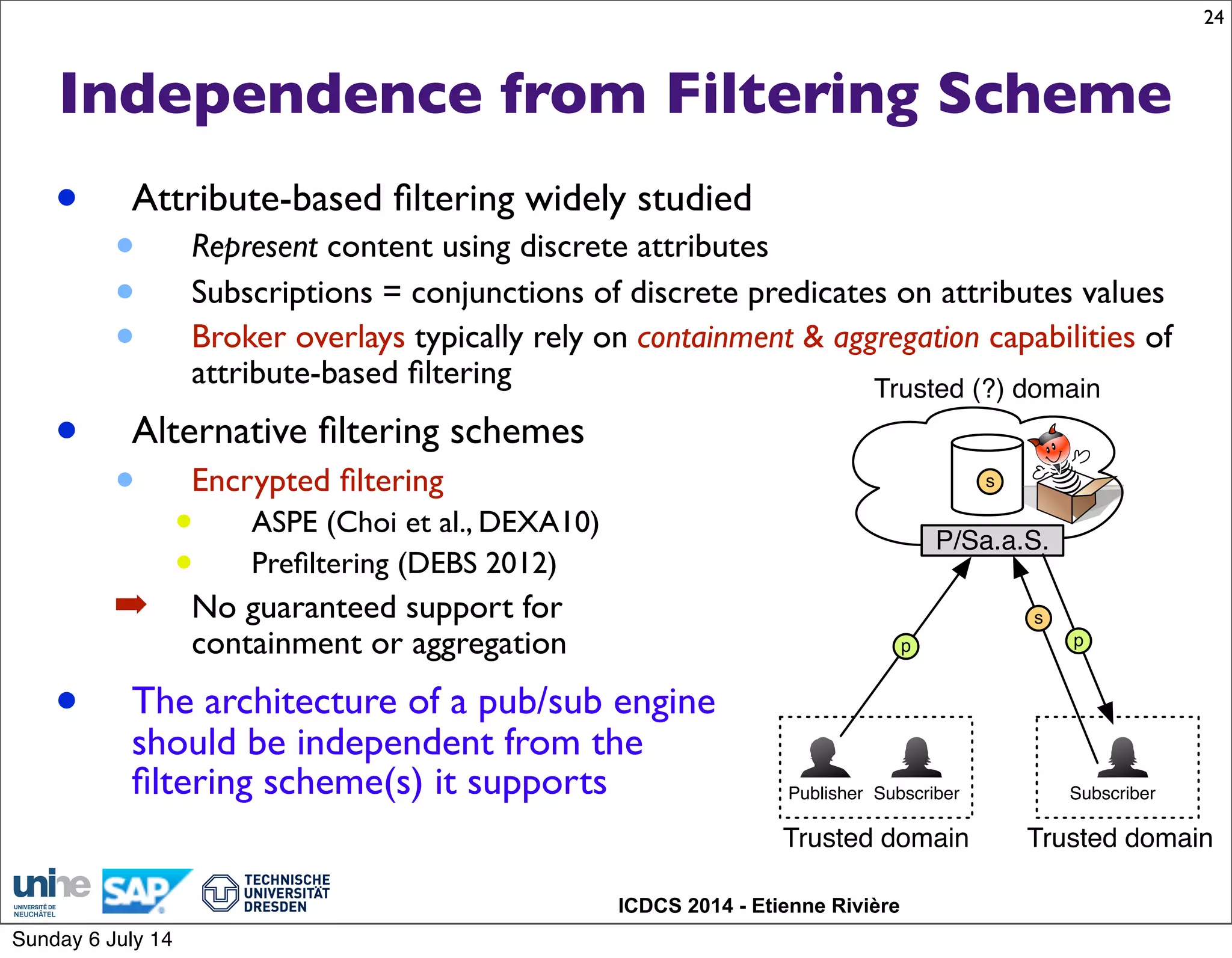

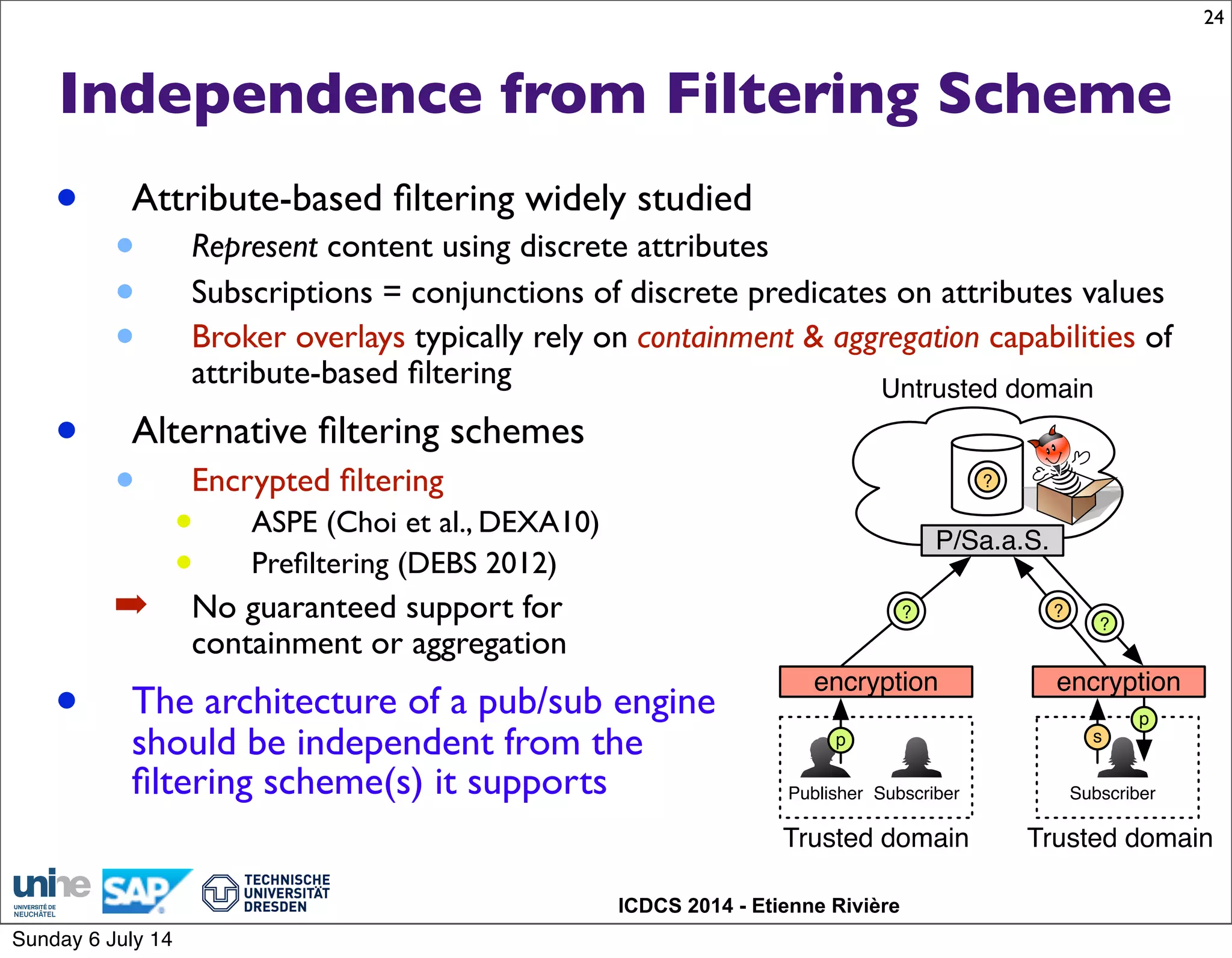

Pub/sub as a service: requirements

• Arbitrary representation of publications and subscriptions

  • Not limited to attribute-based filtering

  • Untrusted domains: encrypted filtering

• High throughput and scalability

  • Thousands of subscriptions per second

  • Thousands of publications per second

  • Thousands to millions of notifications per second

• Availability, dependability and low delays

• StreamHub [DEBS 2013]

  • Supports arbitrary filtering schemes, in particular encrypted filtering (ASPE, asymmetric scalar-product-preserving encryption)

  • Built on top of a stream processing engine

Figure: pub/sub as a service (P/SaaS). A publisher and subscribers in trusted domains encrypt their publications (p) and subscriptions (s) before sending them to the service, which runs in an untrusted domain.

Sunday 6 July 14](https://image.slidesharecdn.com/icdcs14-presented-140709015236-phpapp01/75/Elastic-Scaling-of-a-High-Throughput-Content-Based-Publish-Subscribe-Engine-3-2048.jpg)
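The encrypted filtering requirement relies on ASPE. Its key property is that the scalar product of an encrypted publication and an encrypted subscription predicate equals the scalar product of the plaintexts. The Python toy below illustrates only that property, using a fixed invertible matrix `M` as the secret key. A publication `p = (x, y, 1)` is encrypted as `M^T p` and a linear predicate `q` as `M^-1 q`. Because `(M^T p) . (M^-1 q) = p^T M M^-1 q = p . q`, the untrusted matcher can test the sign of the dot product without seeing plaintext values. This is a simplified sketch under these assumptions, not the ASPE scheme used by StreamHub. Real ASPE adds randomization, such as random scaling and vector splitting, so this version is not secure. All function names are hypothetical.

```python
# Toy illustration of the scalar-product-preserving idea behind ASPE.
# NOT secure: real ASPE adds randomization (e.g. random scaling, vector
# splitting) and uses secret random matrices. Names are hypothetical.

# Secret key: an invertible 3x3 matrix M and its inverse (det(M) = 1).
M = [[1, 1, 0],
     [0, 1, 1],
     [0, 0, 1]]
M_INV = [[1, -1, 1],
         [0, 1, -1],
         [0, 0, 1]]


def mat_vec(a, v):
    return [sum(a[i][j] * v[j] for j in range(len(v))) for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def encrypt_publication(x, y):
    # Publication point p = (x, y, 1) is encrypted as M^T p.
    return mat_vec(transpose(M), [x, y, 1])


def encrypt_predicate(a, b, c):
    # Linear predicate a*x + b*y + c (compared with 0) is encrypted as M^-1 q.
    return mat_vec(M_INV, [a, b, c])


def matches(enc_pub, enc_sub):
    # enc_sub is a list of (encrypted predicate, comparison) pairs.
    # (M^T p) . (M^-1 q) = p . q, so each predicate is evaluated on
    # ciphertexts only.
    ops = {">": lambda s: s > 0, "==": lambda s: s == 0}
    return all(ops[op](dot(enc_pub, q)) for q, op in enc_sub)


# Subscription "x > 3 & y == 5", encrypted predicate by predicate.
sub = [(encrypt_predicate(1, 0, -3), ">"), (encrypt_predicate(0, 1, -5), "==")]
```

With the subscription `x > 3 & y == 5`, the publication `x = 7, y = 10` passes the first predicate (scalar product 4) but fails the second (scalar product 5 rather than 0), so it does not match. The publication `x = 7, y = 5` matches.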

![ICDCS 2014 - Etienne Rivière

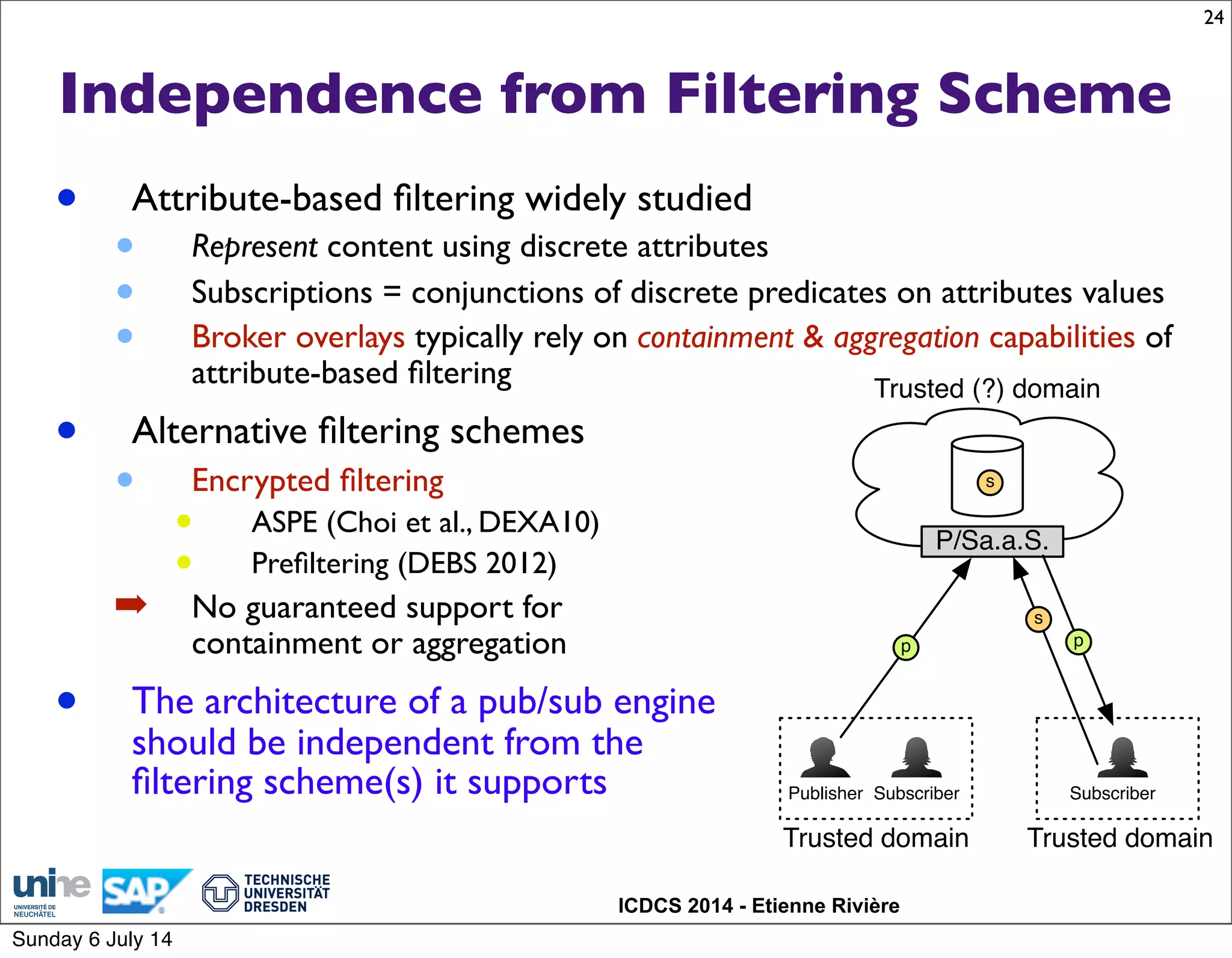

StreamHub Engine [DEBS13]

8

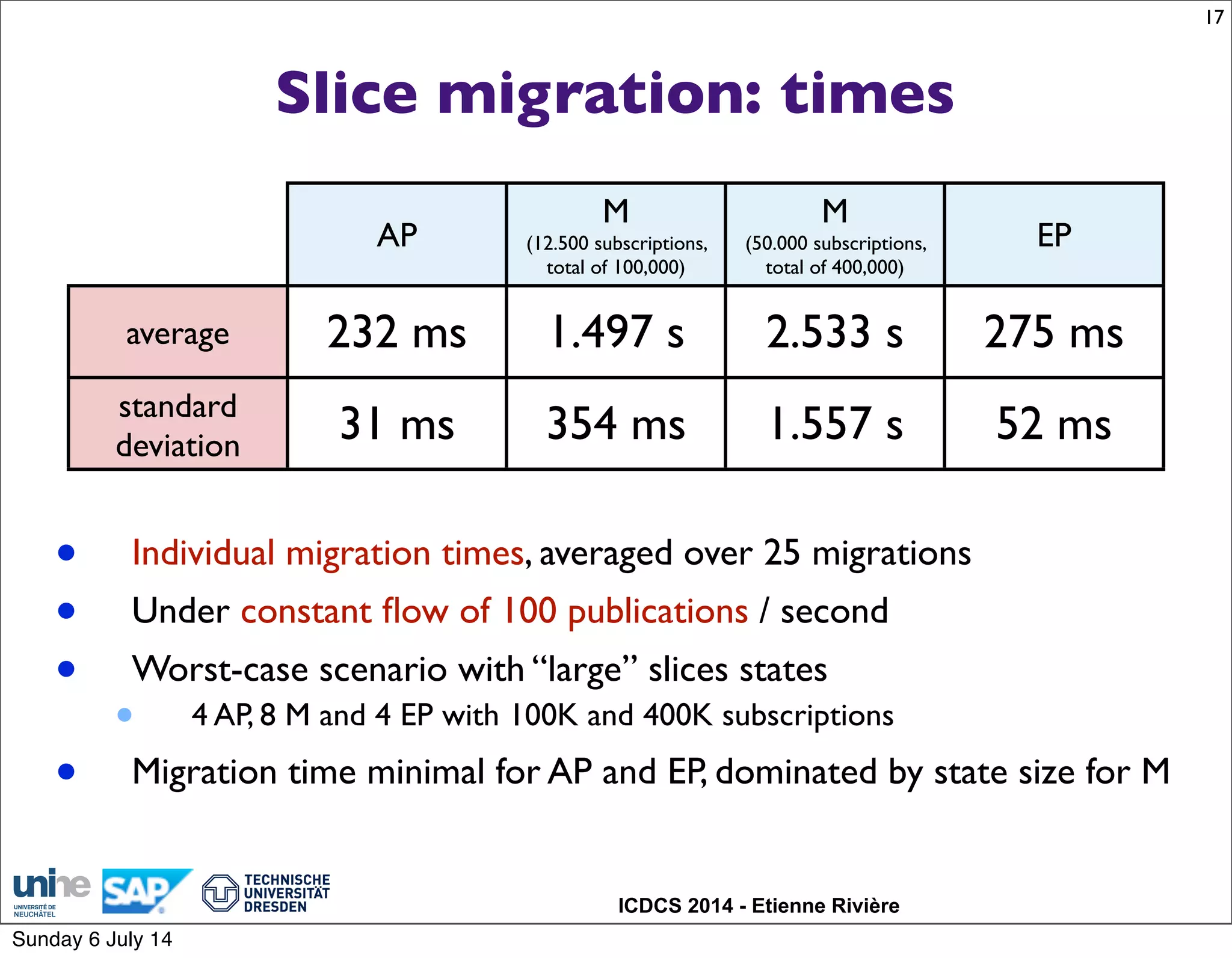

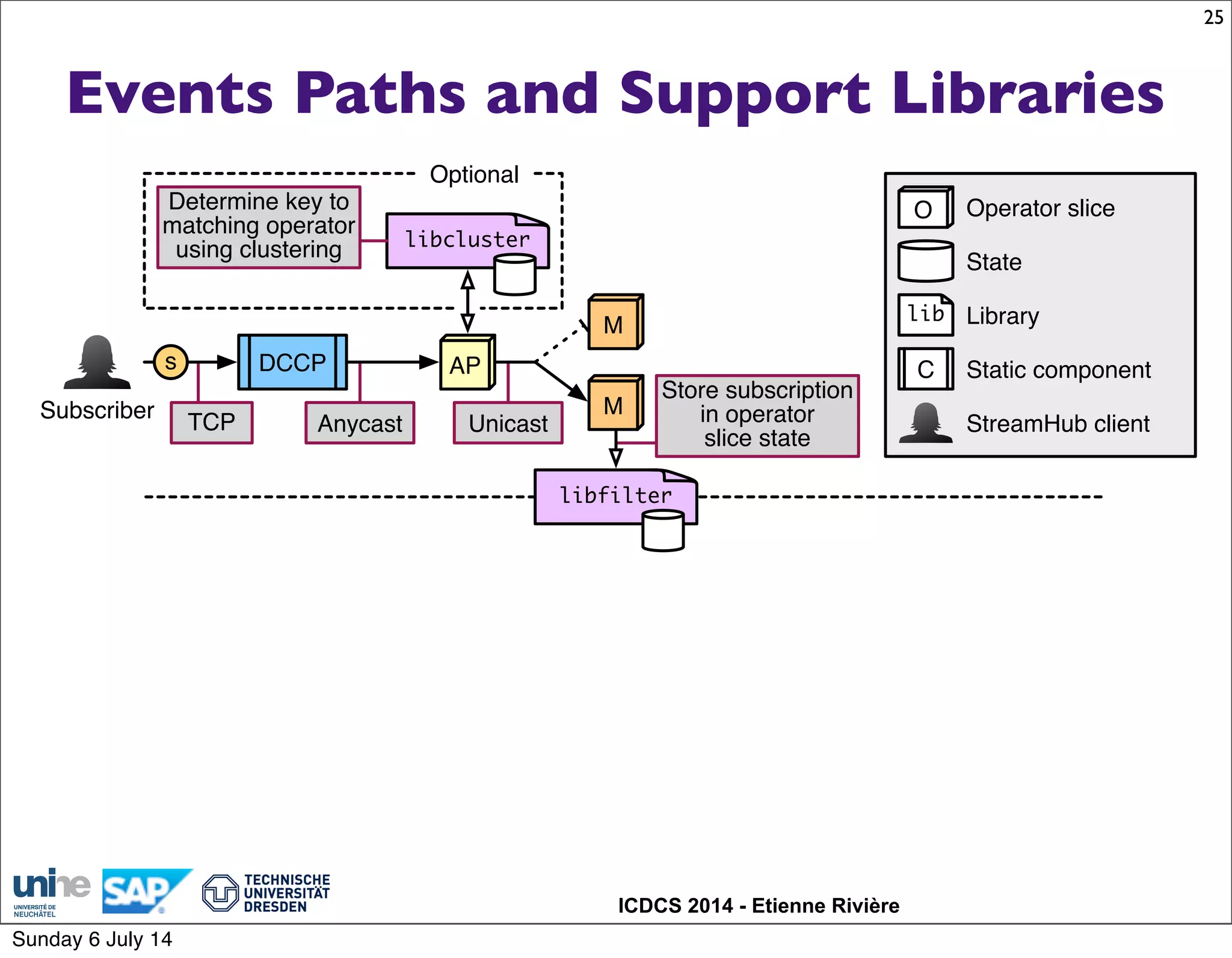

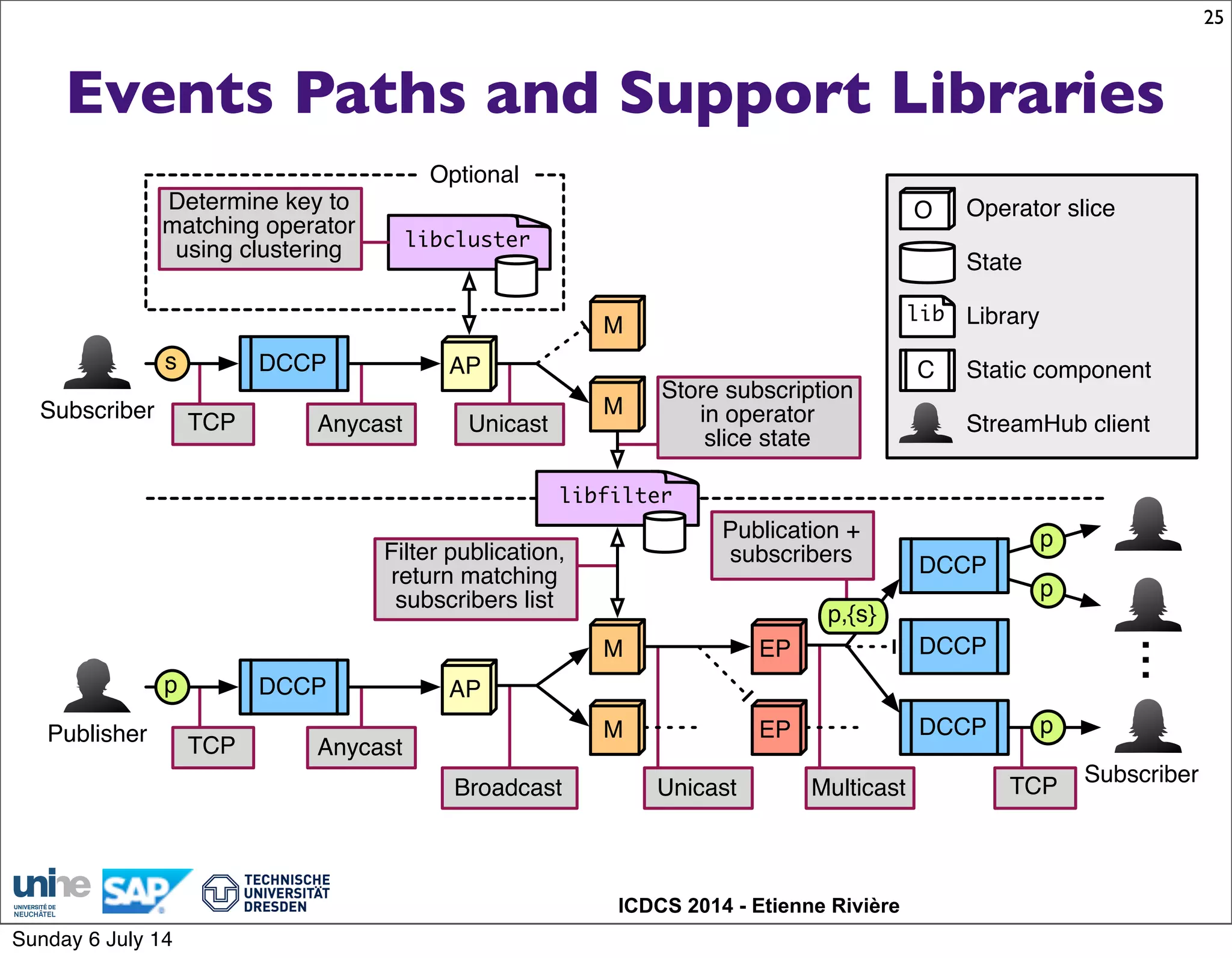

| Operator | Access Point (AP) | Matching (M) | Exit Point (EP) |
|---|---|---|---|
| Function | Subscription partitioning | Publication filtering | Publication dispatching |
| State | Stateless | Stateful (persistent) | Stateful (transient) |
| Role | Decide where to store subs; broadcast pubs to the next operator | Store subs; filter incoming pubs and create the list of matching subscriber ids | Aggregate lists of matching subscriber ids; prepare and dispatch notifications |

Figure: e-StreamHub engine, public cloud deployment (same DCCP). The AP, M and EP operators each run as six slices (AP:1 to AP:6, M:1 to M:6, EP:1 to EP:6) between two DCCP/ConnectionPoint endpoints. A subscriber encrypts the subscription `x > 3 & y == 5` with ASPE (ciphertext shown as `C5F80 BA363`); a publisher encrypts the publication `x = 7, y = 10` (ciphertext shown as `88F3B 2A09C`). AP slices unicast subscriptions (s) to the M slice that stores them and broadcast publications (p) to all M slices; further links between operators are labeled unicast, multicast and anycast. The engine sends subscribers notifications of matching encrypted publications.

](https://image.slidesharecdn.com/icdcs14-presented-140709015236-phpapp01/75/Elastic-Scaling-of-a-High-Throughput-Content-Based-Publish-Subscribe-Engine-8-2048.jpg)
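The AP, M and EP roles listed above imply that each subscription is stored once and each publication is matched everywhere. The following is a minimal, single-process Python sketch of that flow. It assumes six slices as on the slide, plaintext predicates written as Python callables, and a CRC32 hash for the AP's placement decision. It also collapses the EP slices into one aggregation step. All of these choices, and the names `Engine`, `MatchingSlice` and `partition`, are hypothetical. StreamHub itself runs distributed on a stream processing engine and may place subscriptions differently.

```python
import zlib

NUM_SLICES = 6  # mirrors AP:1..6 and M:1..6 on the slide (illustrative)


class MatchingSlice:
    """M operator slice (hypothetical): stores the subscriptions assigned to it."""

    def __init__(self):
        self.subs = {}  # subscriber id -> predicate over a publication

    def store(self, sub_id, predicate):
        self.subs[sub_id] = predicate

    def filter(self, pub):
        # Return the ids of subscribers whose stored predicate matches pub.
        return [sid for sid, pred in self.subs.items() if pred(pub)]


def partition(sub_id):
    # AP operator: decide which M slice stores a subscription.
    # CRC32 gives a stable hash across runs (Python's str hash is randomized).
    return zlib.crc32(sub_id.encode()) % NUM_SLICES


class Engine:
    def __init__(self):
        self.m_slices = [MatchingSlice() for _ in range(NUM_SLICES)]

    def subscribe(self, sub_id, predicate):
        # AP -> M: unicast the subscription to exactly one M slice.
        self.m_slices[partition(sub_id)].store(sub_id, predicate)

    def publish(self, pub):
        # AP -> M: broadcast the publication to every M slice.
        partial_lists = [m.filter(pub) for m in self.m_slices]
        # EP (collapsed into one step here): aggregate the partial lists of
        # matching subscriber ids and prepare one notification per subscriber.
        matching = sorted(sid for lst in partial_lists for sid in lst)
        return [(sid, pub) for sid in matching]
```

Each subscription lives on exactly one M slice, while each publication visits all of them. Adding M slices therefore spreads both subscription storage and matching work. The EP step only merges the partial lists.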