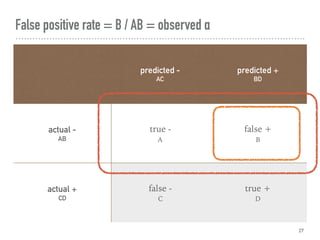

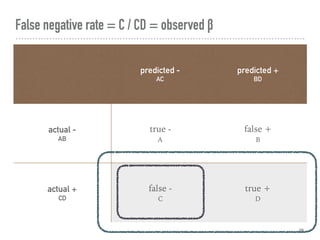

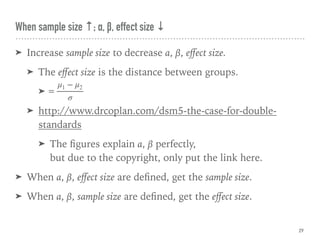

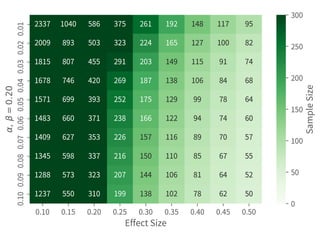

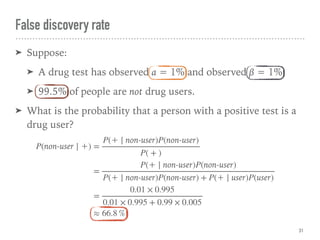

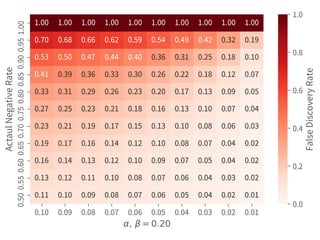

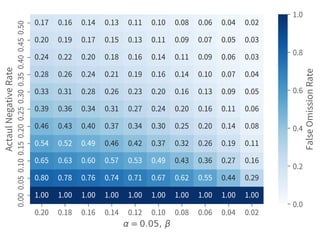

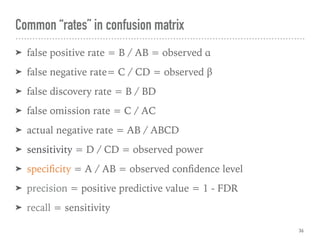

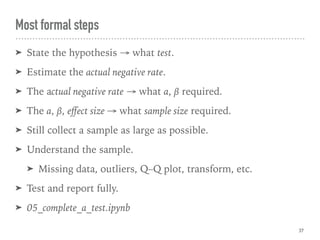

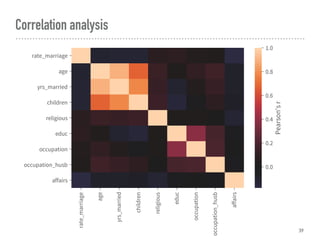

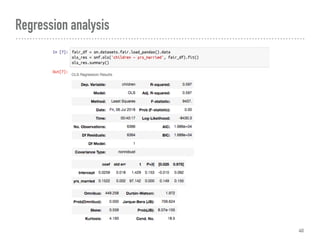

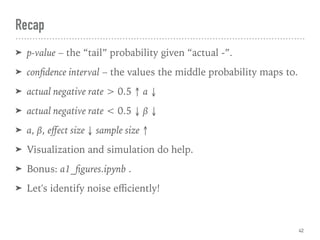

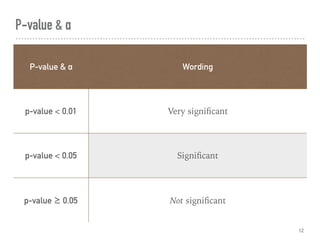

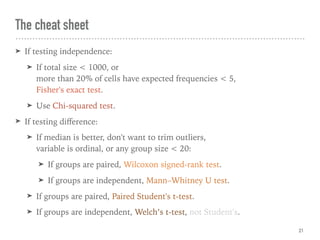

This document introduces performing and interpreting hypothesis tests in Python. It covers simulating and analyzing test datasets, how hypothesis tests work, common statistical tests such as t-tests and chi-squared tests, and the steps for completing a hypothesis test. Key points include defining the null and alternative hypotheses, estimating error rates from a confusion matrix, determining the necessary sample size from the desired alpha and beta levels, and reporting test results in full. Other statistical analyses, such as correlation and regression, are mentioned briefly.
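As a rough illustration of how alpha and beta levels translate into a sample size, the sketch below uses the standard normal-approximation formula for a two-sided, two-sample comparison of means with equal group sizes. The function name `sample_size_per_group` and the standardized effect size `effect_size` (Cohen's d) are assumptions made for this example and do not come from the slides; an exact t-based calculation would give a slightly larger number.

```python
import math
from statistics import NormalDist  # standard library, Python 3.8+


def sample_size_per_group(alpha, beta, effect_size):
    """Approximate n per group for a two-sided, two-sample test of means.

    Normal approximation: n = 2 * ((z_{1 - alpha/2} + z_{1 - beta}) / d)^2,
    where d is the standardized effect size (an assumption of this sketch).
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the two-sided test
    z_beta = z.inv_cdf(1 - beta)        # quantile matching the desired power
    n = 2 * ((z_alpha + z_beta) / effect_size) ** 2
    return math.ceil(n)  # round up to a whole number of observations


# alpha = 0.05, beta = 0.20 (power 0.80), medium effect size d = 0.5
n_per_group = sample_size_per_group(alpha=0.05, beta=0.20, effect_size=0.5)
print(n_per_group)
```

Lowering either alpha or beta, or targeting a smaller effect, increases the required sample size.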

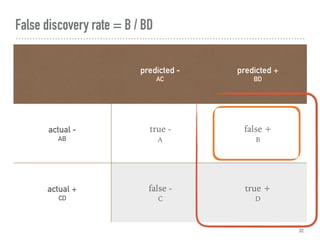

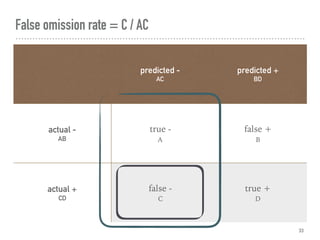

![Confusion matrix, where A = C[0, 0]](https://image.slidesharecdn.com/hypothesistestingwithpython-180710010048/85/Hypothesis-Testing-With-Python-26-320.jpg)

|          | predicted - | predicted + | total |
|----------|-------------|-------------|-------|
| actual - | A (true -)  | B (false +) | A + B |
| actual + | C (false -) | D (true +)  | C + D |
| total    | A + C       | B + D       |       |
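The error rates mentioned in the summary can be estimated from the four cells of the confusion matrix. The following is a minimal sketch, assuming binary labels coded 0 (negative) and 1 (positive) and the layout in the figure, where `C[0][0]` holds the true negatives. The helper names and the toy labels are made up for illustration. It uses plain nested lists, so the figure's `C[0, 0]` becomes `C[0][0]` here.

```python
def confusion_matrix(actual, predicted):
    """Return a 2x2 nested list C where C[i][j] counts actual == i and predicted == j."""
    c = [[0, 0], [0, 0]]
    for a, p in zip(actual, predicted):
        c[a][p] += 1
    return c


def error_rates(c):
    """Estimate the false positive and false negative rates from a 2x2 matrix."""
    (a, b), (c_fn, d) = c              # A = true -, B = false +, C = false -, D = true +
    alpha_hat = b / (a + b)            # share of actual negatives flagged positive
    beta_hat = c_fn / (c_fn + d)       # share of actual positives that were missed
    return alpha_hat, beta_hat


# Toy labels, invented for illustration: 8 actual negatives, 4 actual positives.
actual = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
predicted = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 0]

C = confusion_matrix(actual, predicted)
alpha_hat, beta_hat = error_rates(C)
print(C, alpha_hat, beta_hat)
```

Here `alpha_hat` is B / (A + B) and `beta_hat` is C / (C + D), which is the reason the row totals of the matrix are the relevant denominators.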