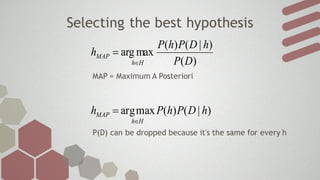

Bayesian learning allows systems to classify new examples based on a model learned from prior examples using probabilities. It reasons by calculating the posterior probability of a hypothesis given new evidence using Bayes' rule. The maximum a posteriori (MAP) hypothesis that best explains the evidence is selected. Naive Bayes classifiers make a strong independence assumption between attributes. They classify new examples by calculating the posterior probability of each class and choosing the class with the highest value. Overfitting can occur if the learned model is too complex for the data. Model selection aims to avoid overfitting by evaluating models on separate training and test datasets.