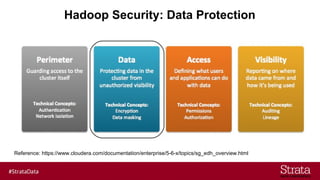

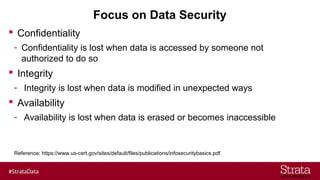

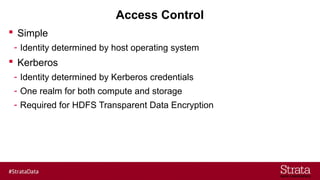

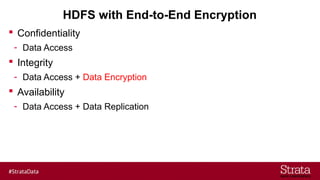

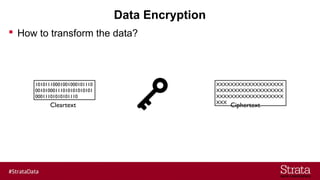

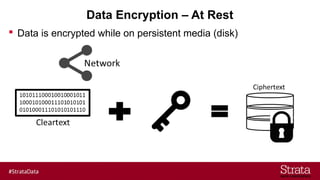

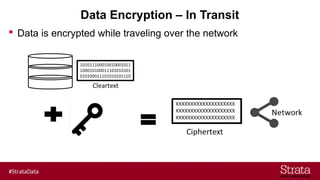

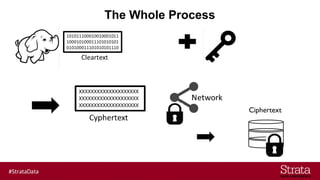

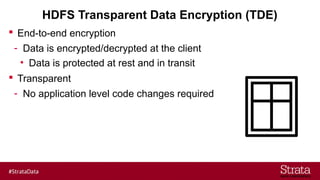

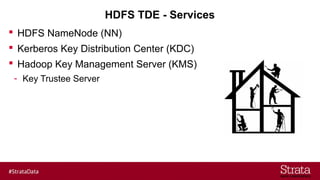

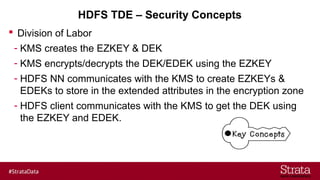

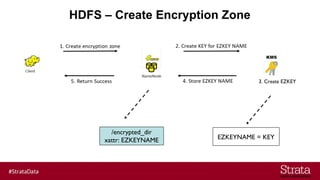

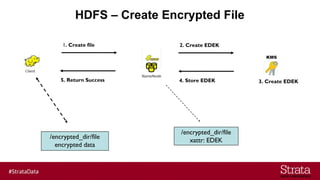

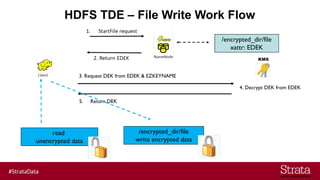

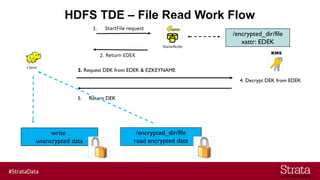

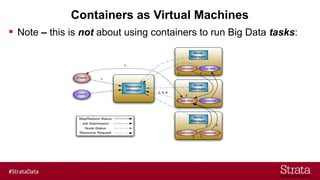

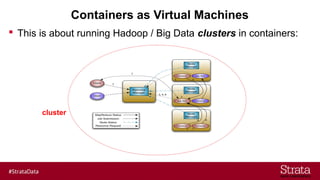

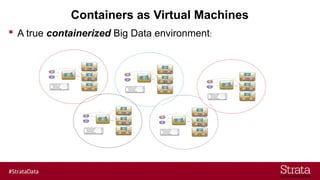

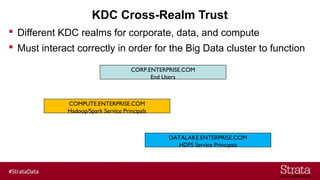

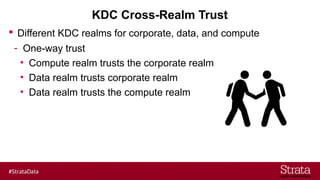

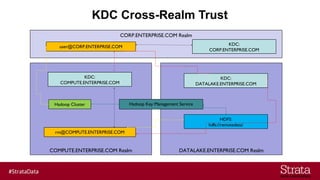

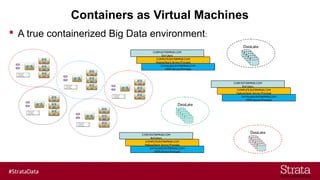

The document outlines the importance of securing big data environments, particularly in containerized architectures, with a focus on Hadoop's data protection mechanisms: access control, authentication, and transparent data encryption (TDE). It explains how TDE allows data to be encrypted both at rest and in transit without changes to application-level code, which makes it an important part of maintaining the confidentiality, integrity, and availability of data. It also discusses the complexity of managing Kerberos key distribution centers (KDCs) across different realms, and highlights the challenges that virtualization and containerization introduce in big data settings.