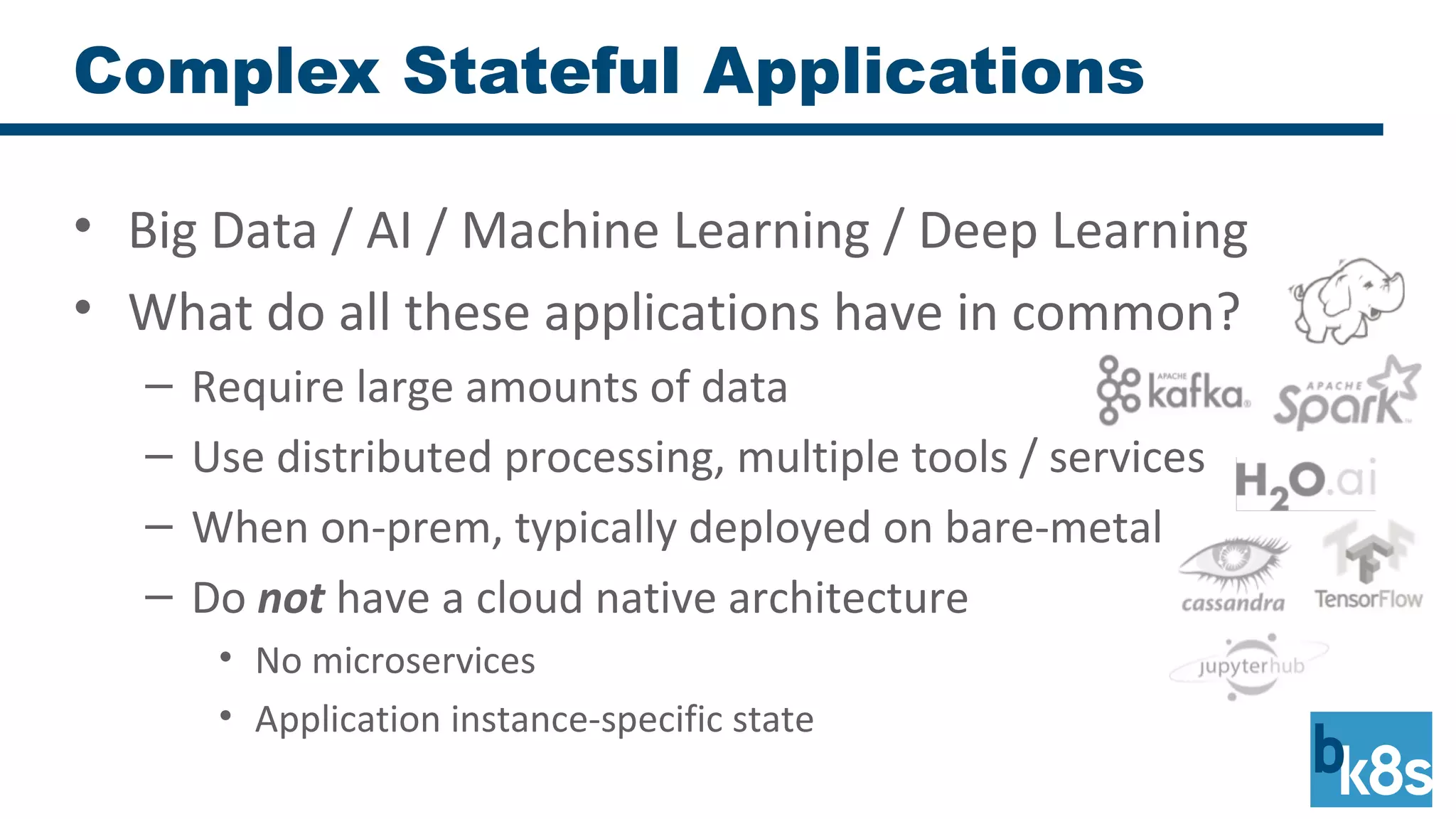

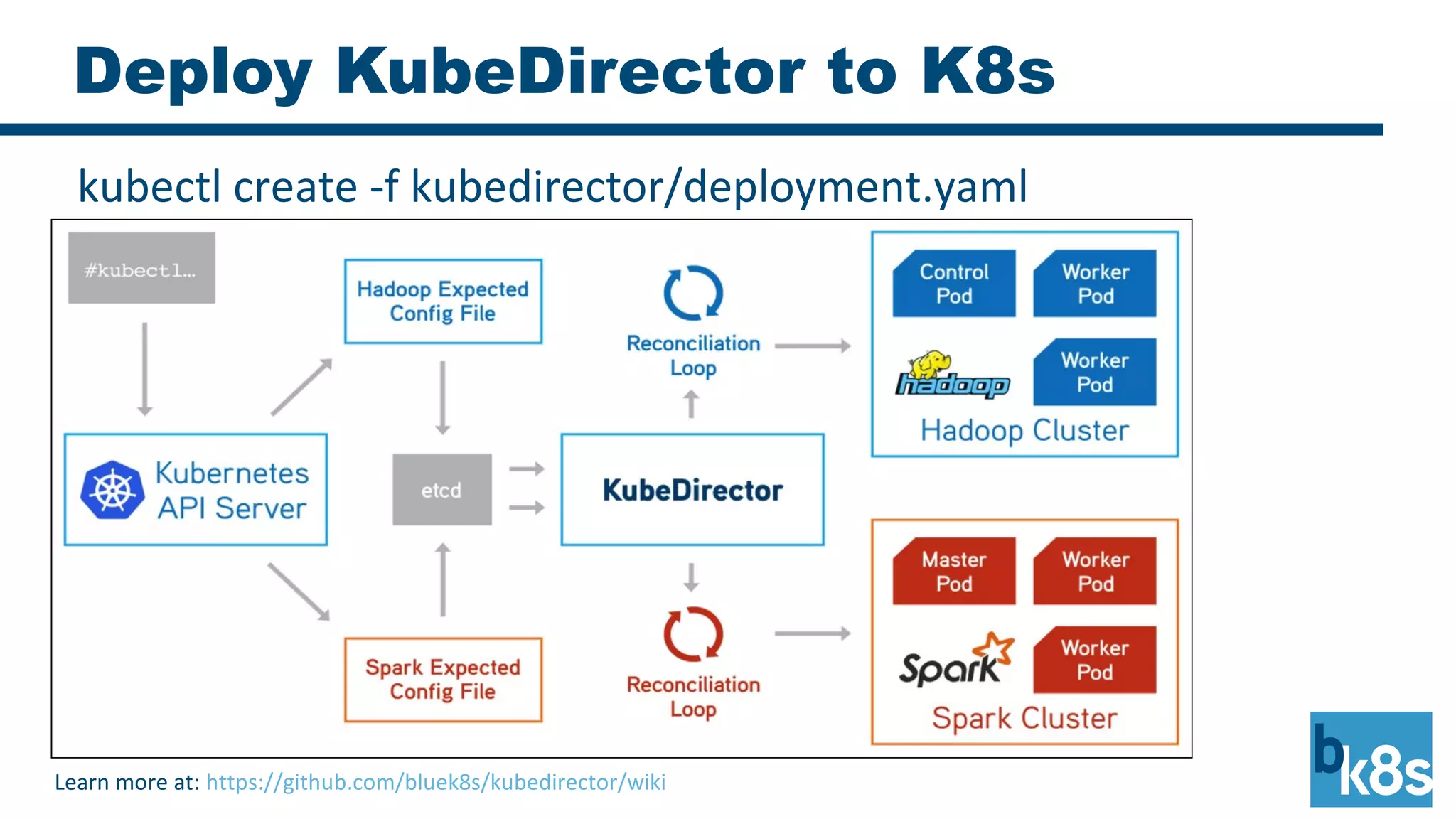

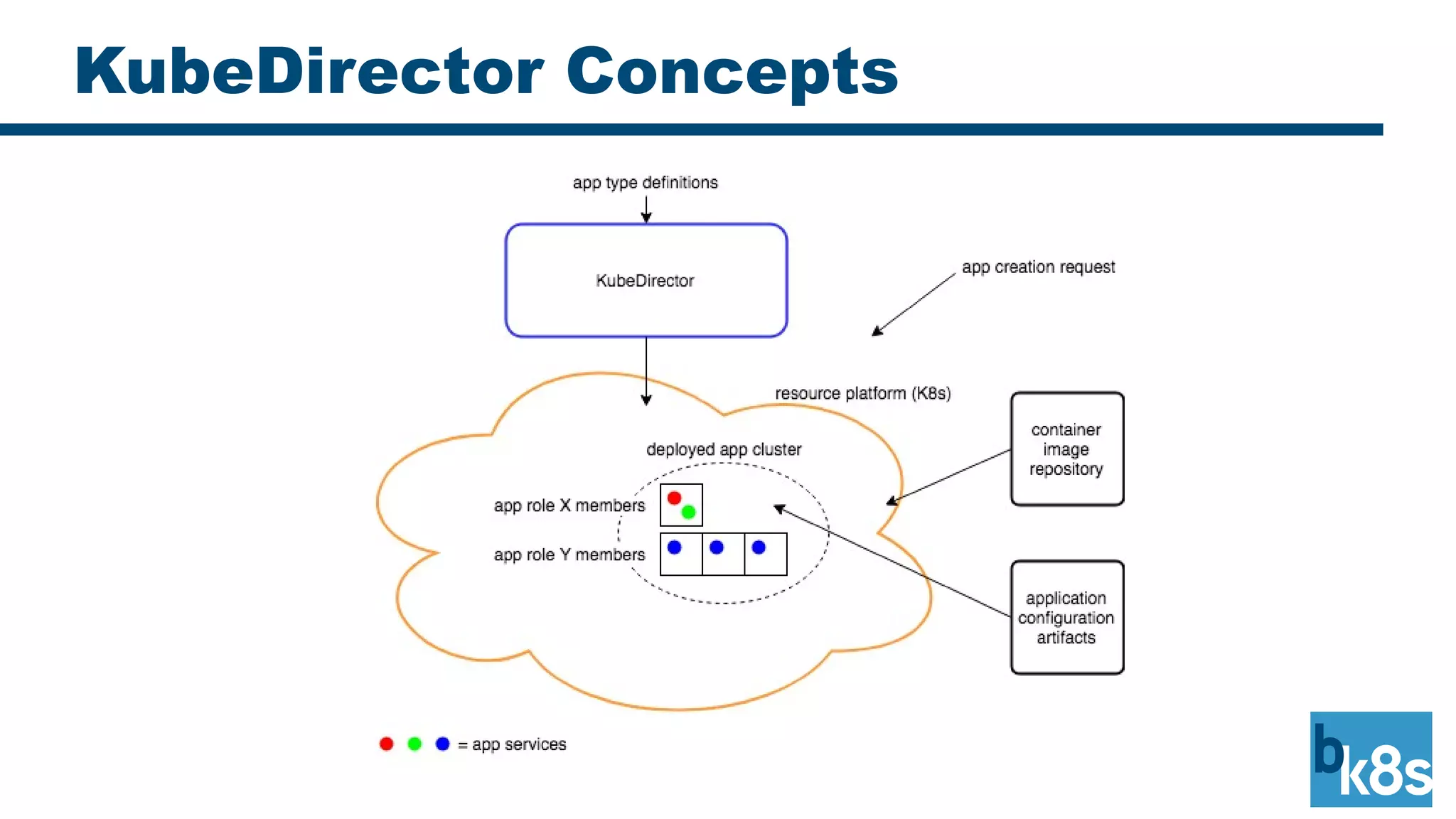

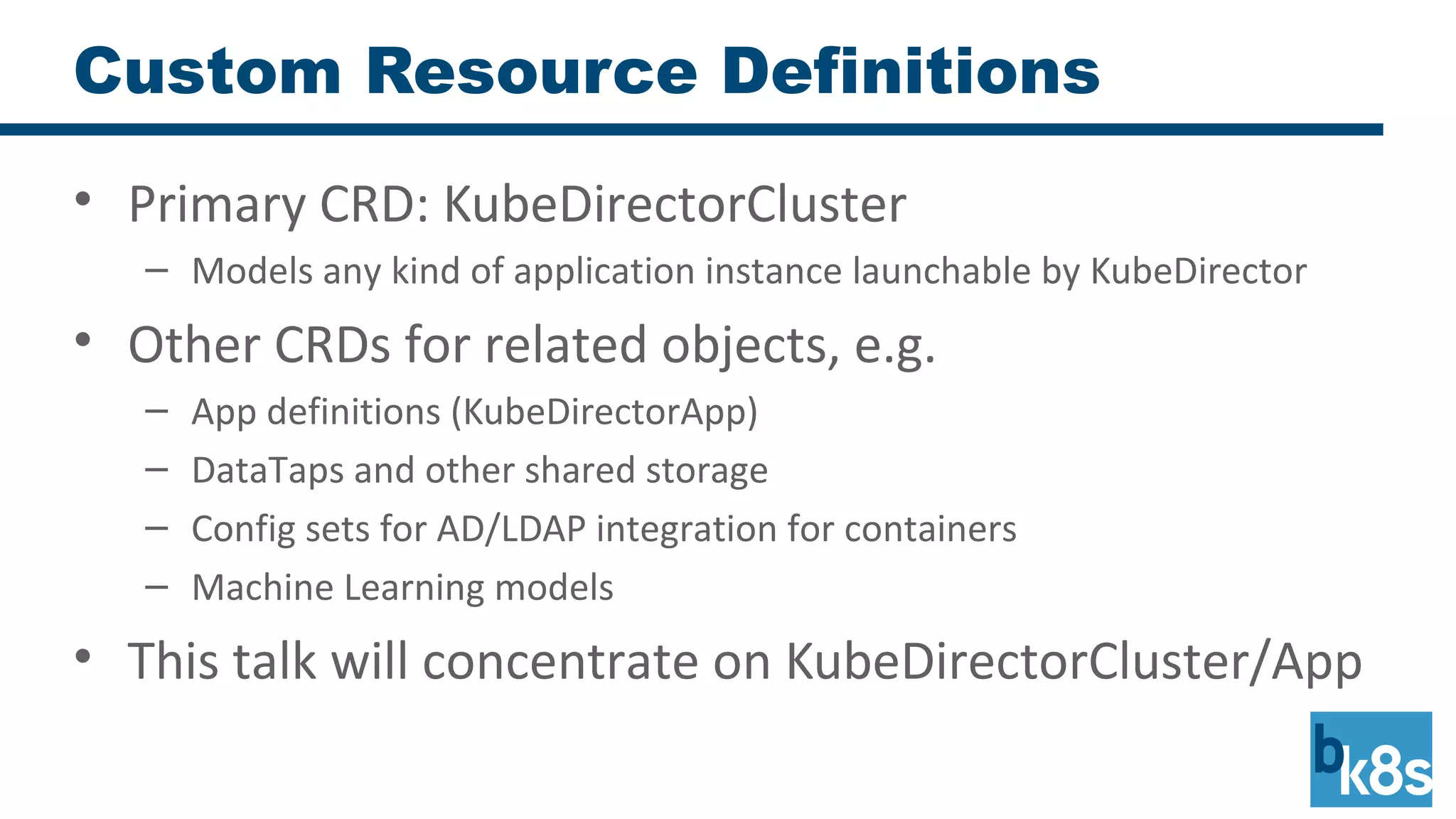

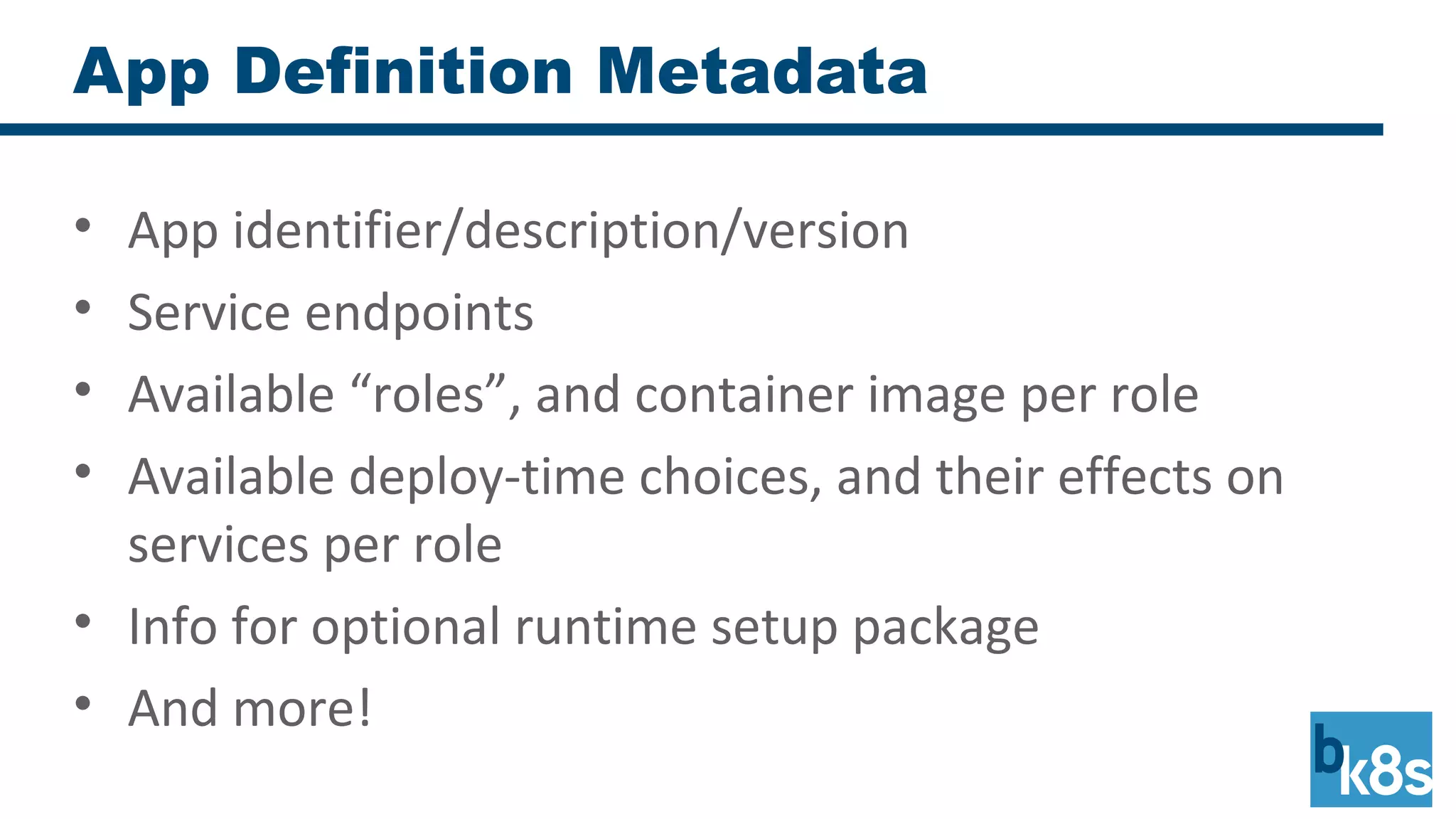

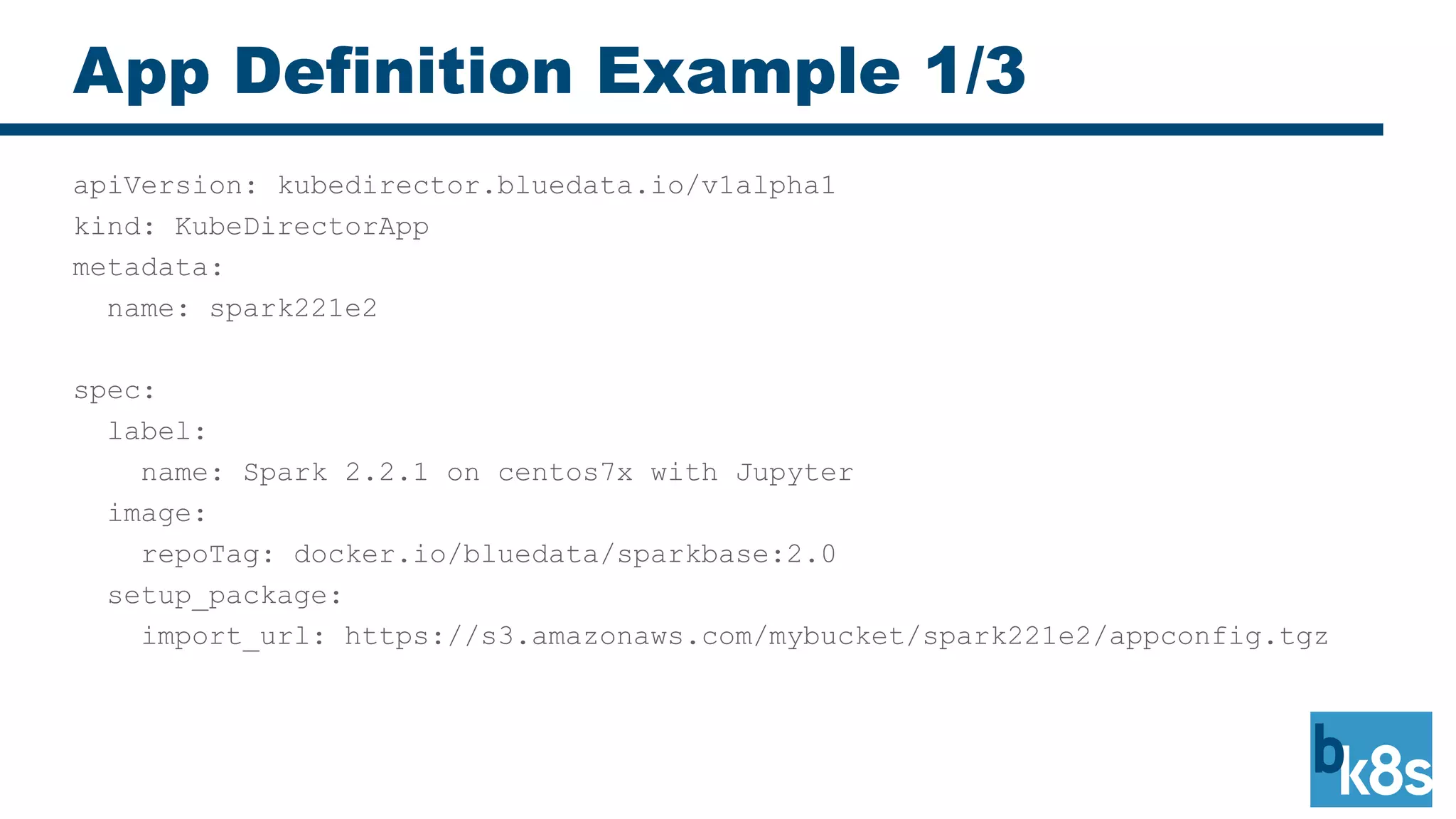

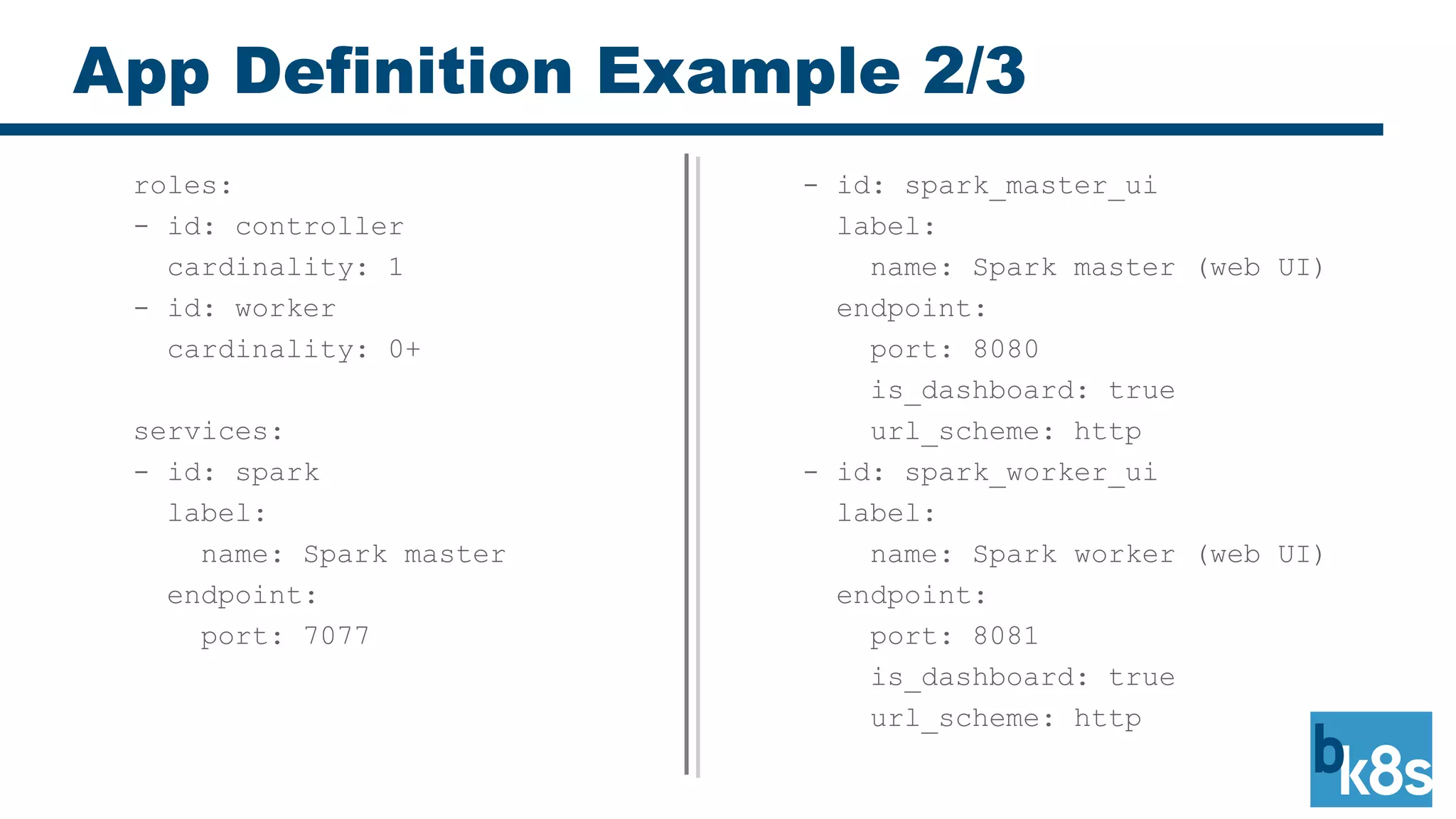

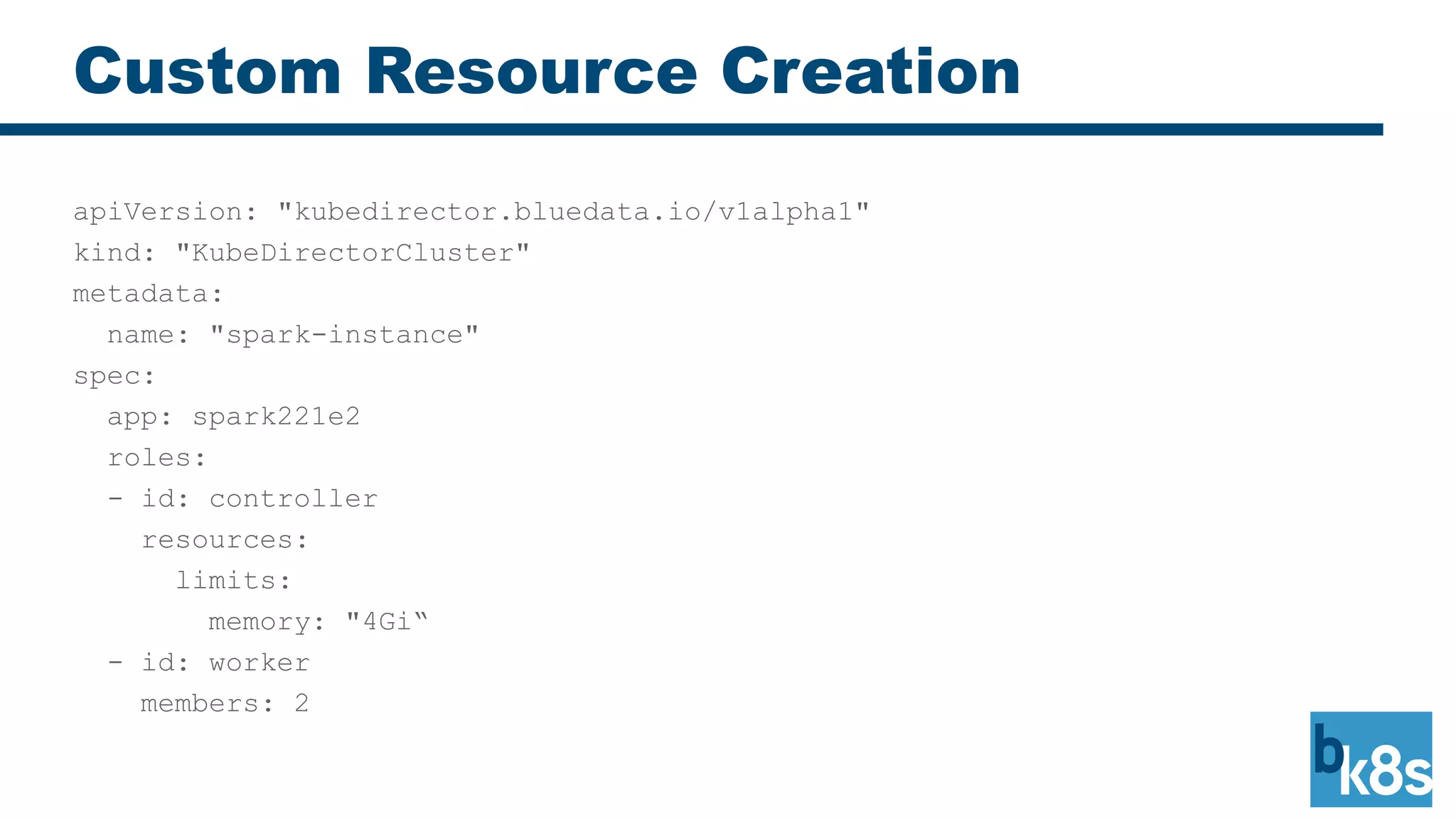

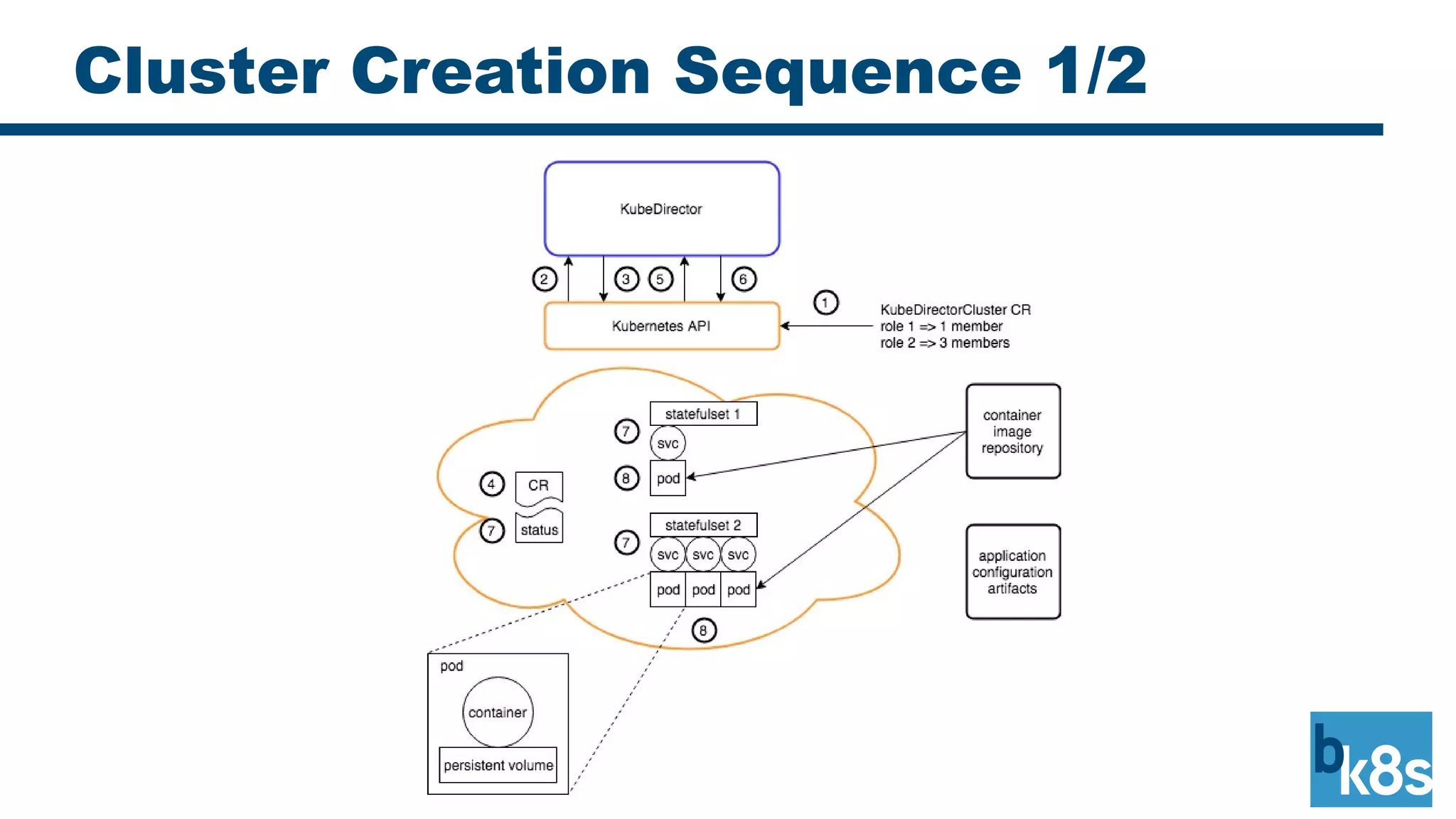

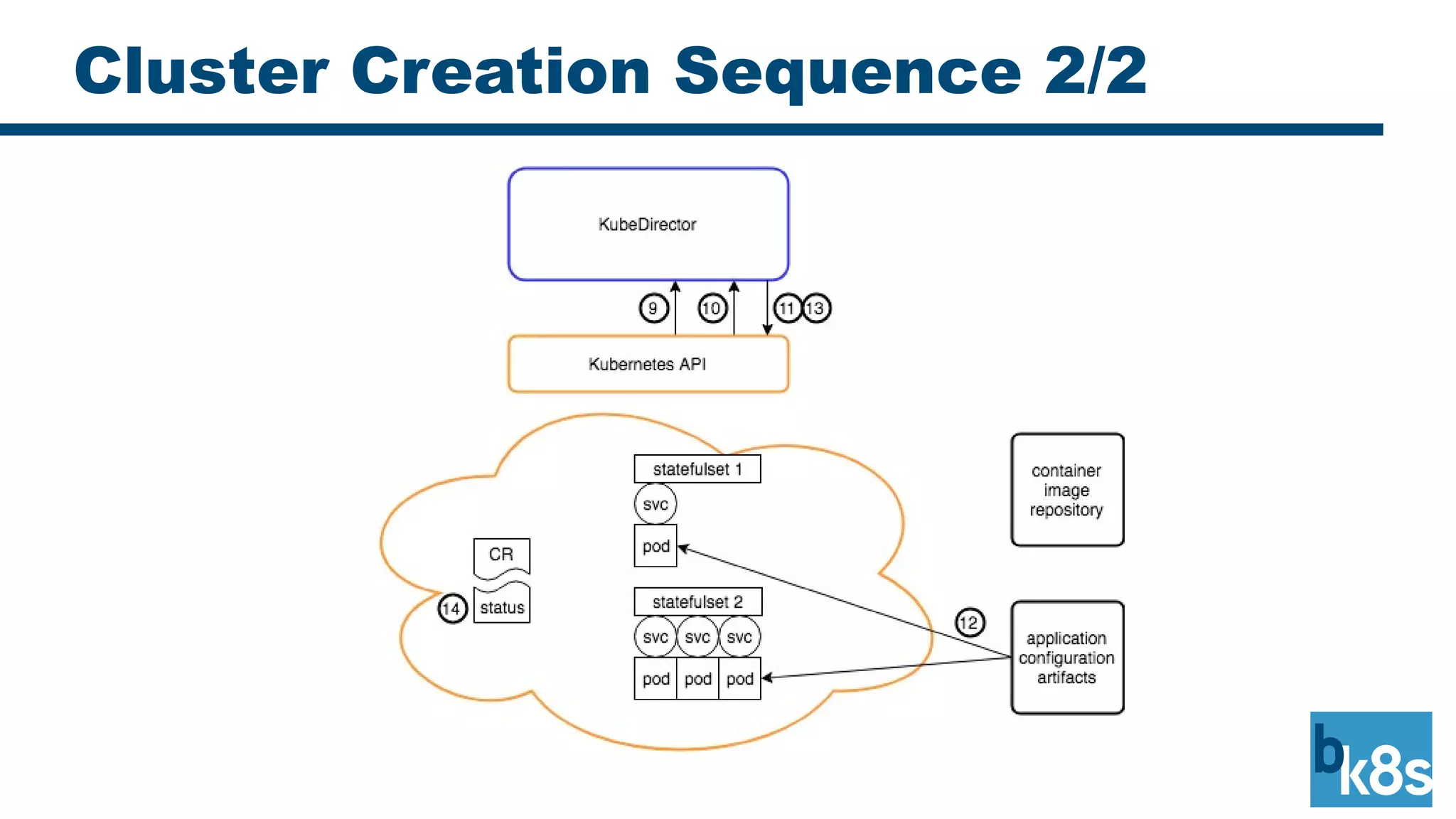

KubeDirector is an open-source project for running stateful applications on Kubernetes, with a focus on complex big data, AI, and machine learning deployments. It is implemented as a custom controller that works with custom resource definitions (CRDs). Application experts and administrators deploy and manage applications through those resources, which gives each group of users its own area of concern. KubeDirector is data-driven: applications are described to it as data, so its own code contains no app-specific logic. This simplifies deployment and makes it easier for different applications to interact.
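To make the data-driven idea concrete, here is a minimal sketch in Python. It is not KubeDirector's code or its CRD schema. The names `RoleSpec`, `plan_workloads`, and `spark_app`, the field layout, and the image names are all hypothetical and chosen only for illustration. The sketch shows one generic function that turns an application description (data from an application expert) and a deployment request (data from a user) into a plan, without any branch that depends on which application it is.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RoleSpec:
    """A requested role and its member count (hypothetical, simplified shape)."""
    role_id: str
    members: int


def plan_workloads(app_definition: dict, requested_roles: list[RoleSpec]) -> list[dict]:
    """Generic planner: everything app-specific is read from app_definition."""
    known_roles = {role["id"]: role for role in app_definition["roles"]}
    plans = []
    for request in requested_roles:
        if request.role_id not in known_roles:
            raise ValueError(
                f"role {request.role_id!r} is not defined by app {app_definition['name']!r}"
            )
        role = known_roles[request.role_id]
        plans.append({
            "name": f"{app_definition['name']}-{request.role_id}",
            "image": role["image"],
            "replicas": request.members,
        })
    return plans


# Application expert's contribution: pure data describing the app (assumed layout).
spark_app = {
    "name": "spark",
    "roles": [
        {"id": "controller", "image": "example/spark-controller:1.0"},
        {"id": "worker", "image": "example/spark-worker:1.0"},
    ],
}

# User's contribution: which roles to run and how many members of each.
request = [RoleSpec("controller", 1), RoleSpec("worker", 3)]

for plan in plan_workloads(spark_app, request):
    print(plan)
```

Supporting a second application in this sketch means supplying another dictionary like `spark_app`; `plan_workloads` itself stays unchanged, which is the property the paragraph above attributes to a data-driven controller.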