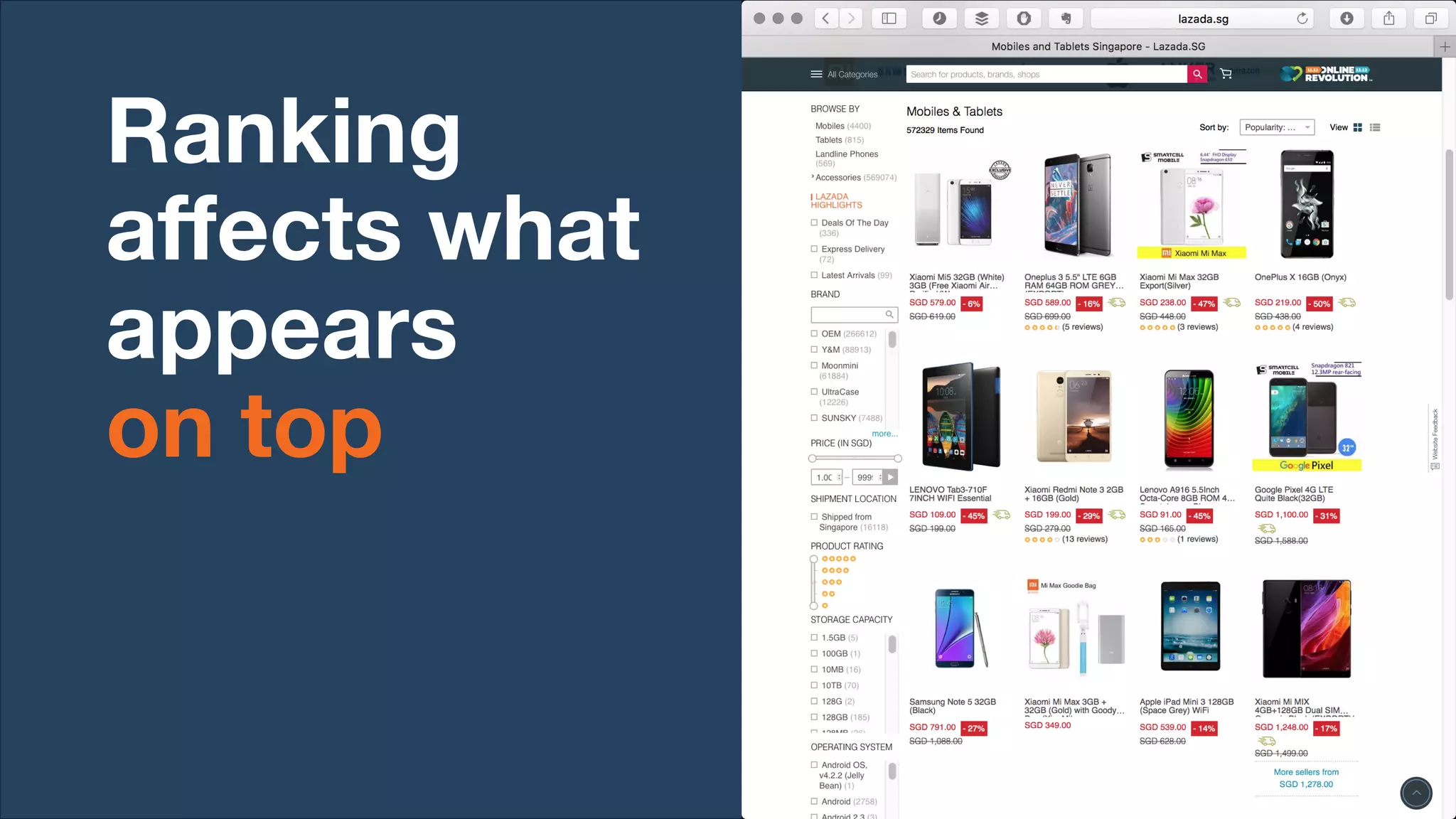

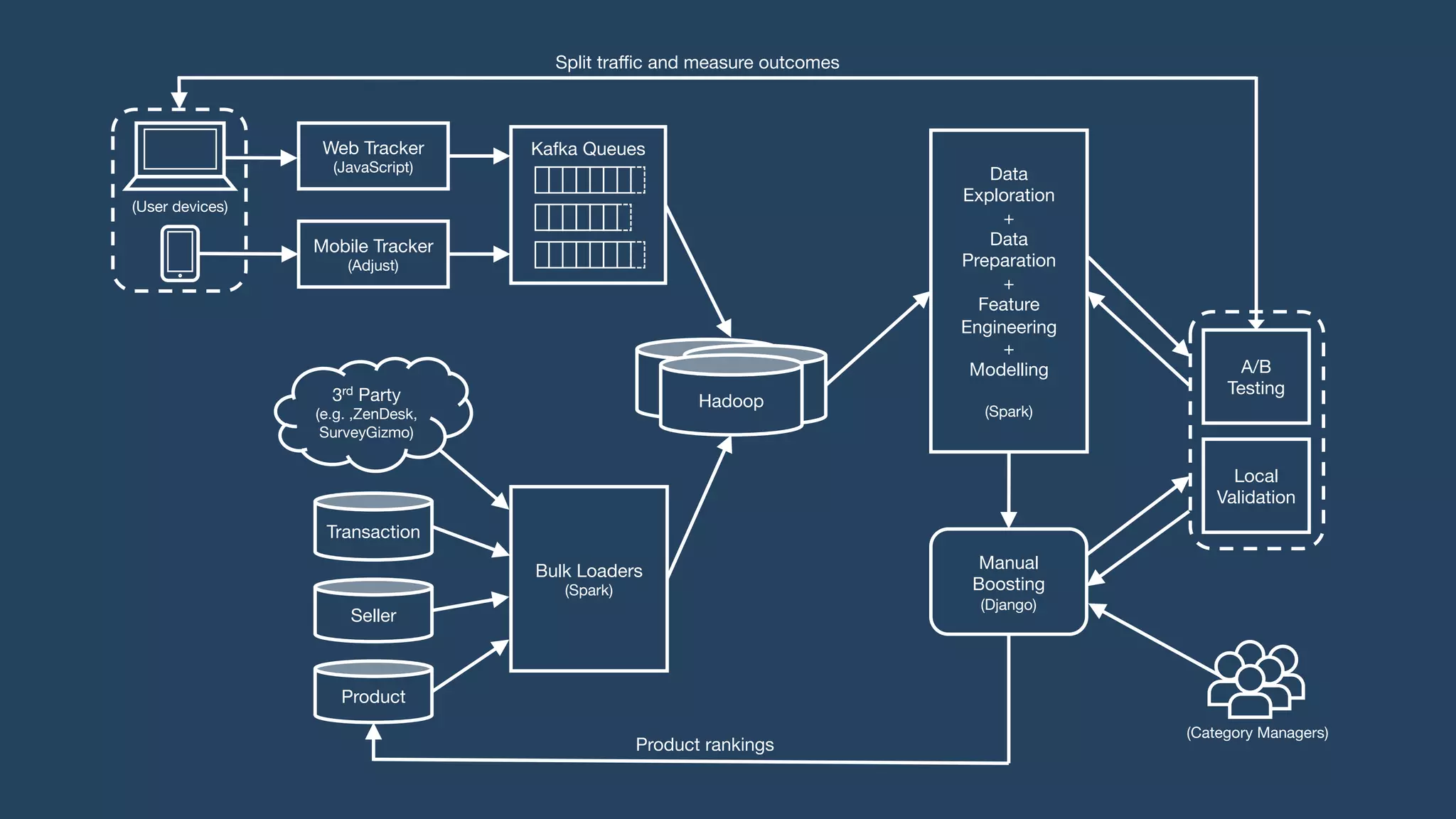

Lazada improves its product rankings, and with them customer experience and conversion, through data-driven methods that analyze user behavior and product engagement. This document describes how Lazada uses machine learning and a range of metrics to identify and boost new and high-quality products, which increased conversion rates and user engagement. The key methods are measuring shopper interest, product similarity, and product quality, and testing each change to confirm that it works as intended.