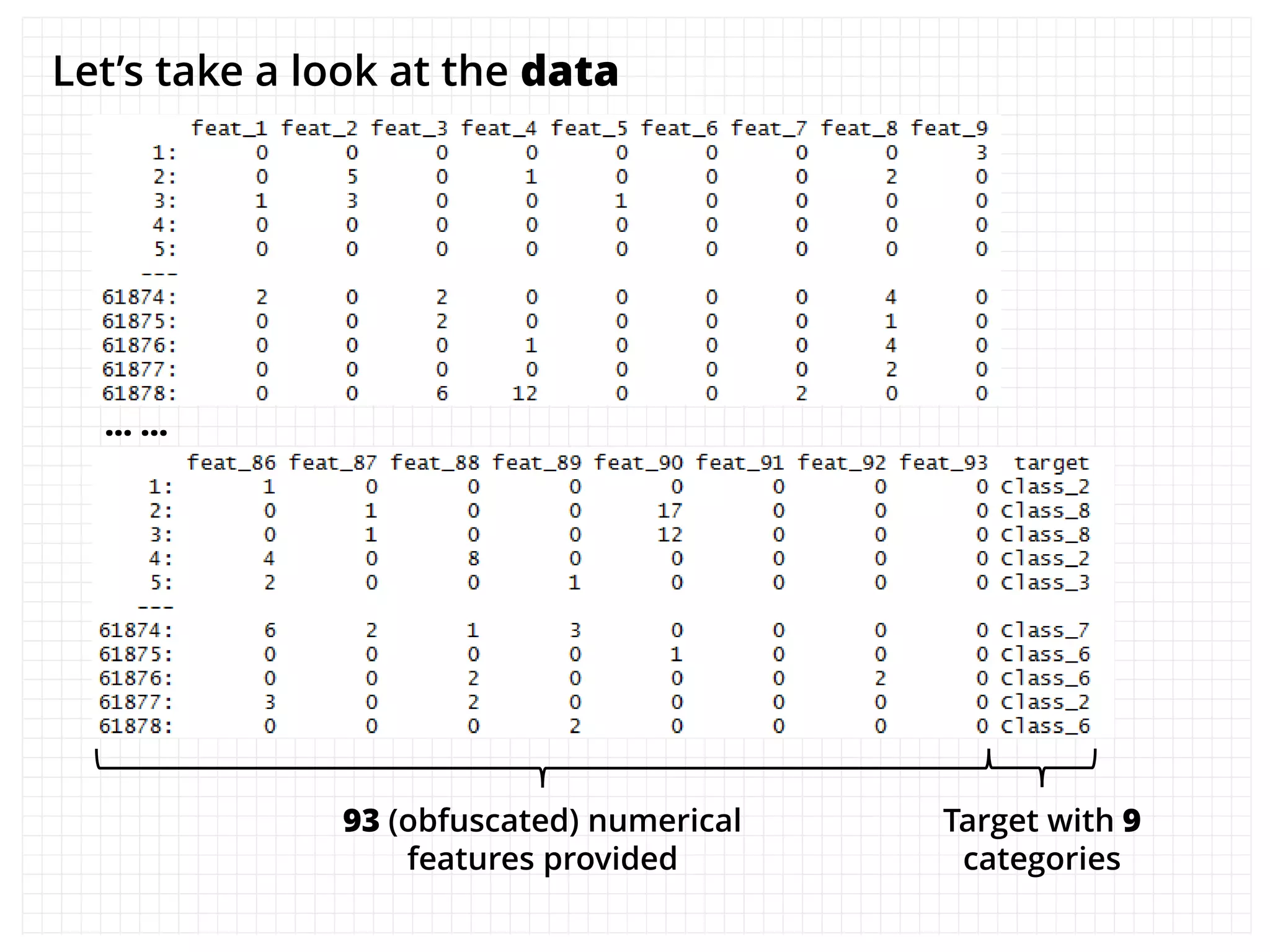

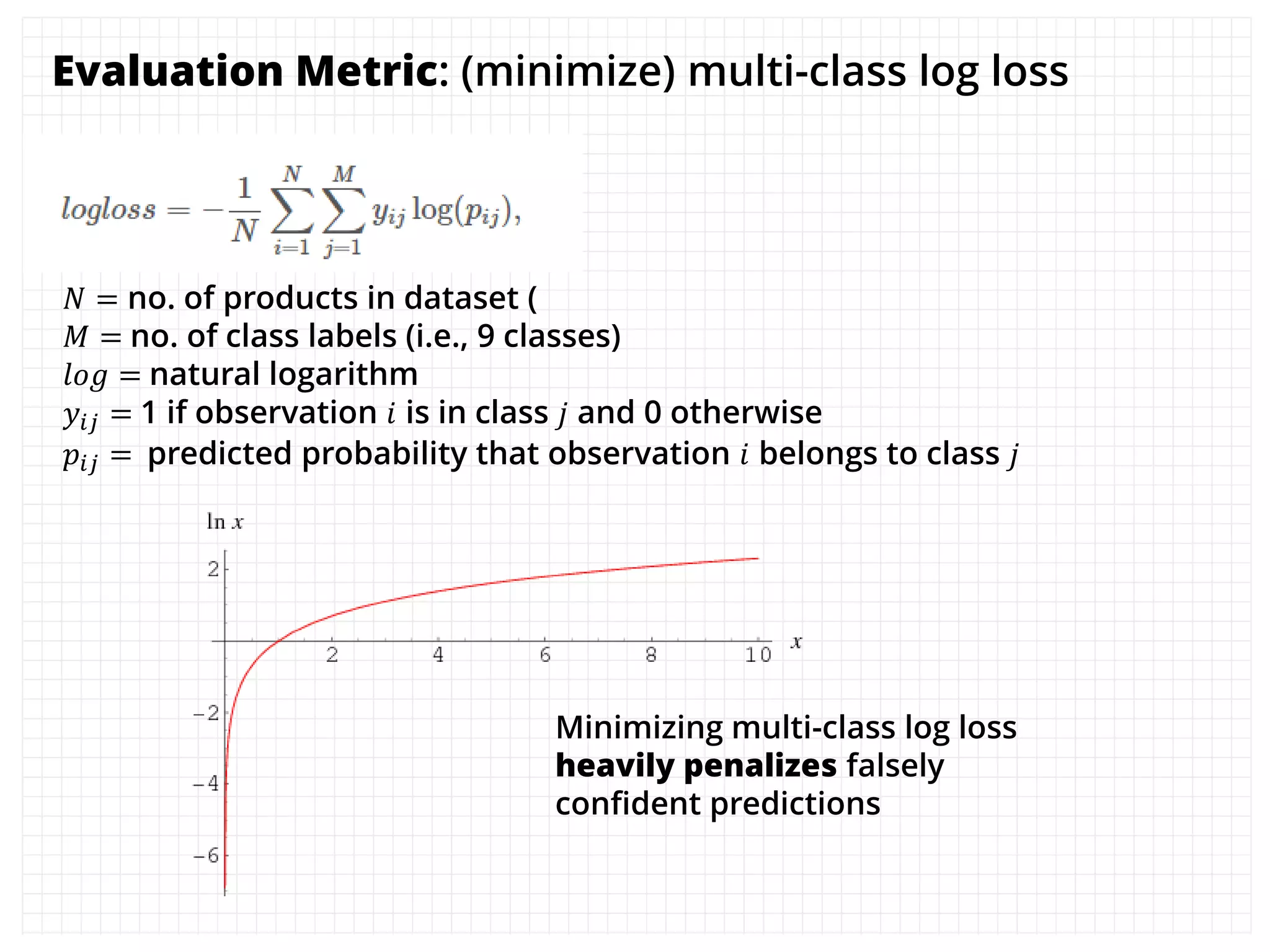

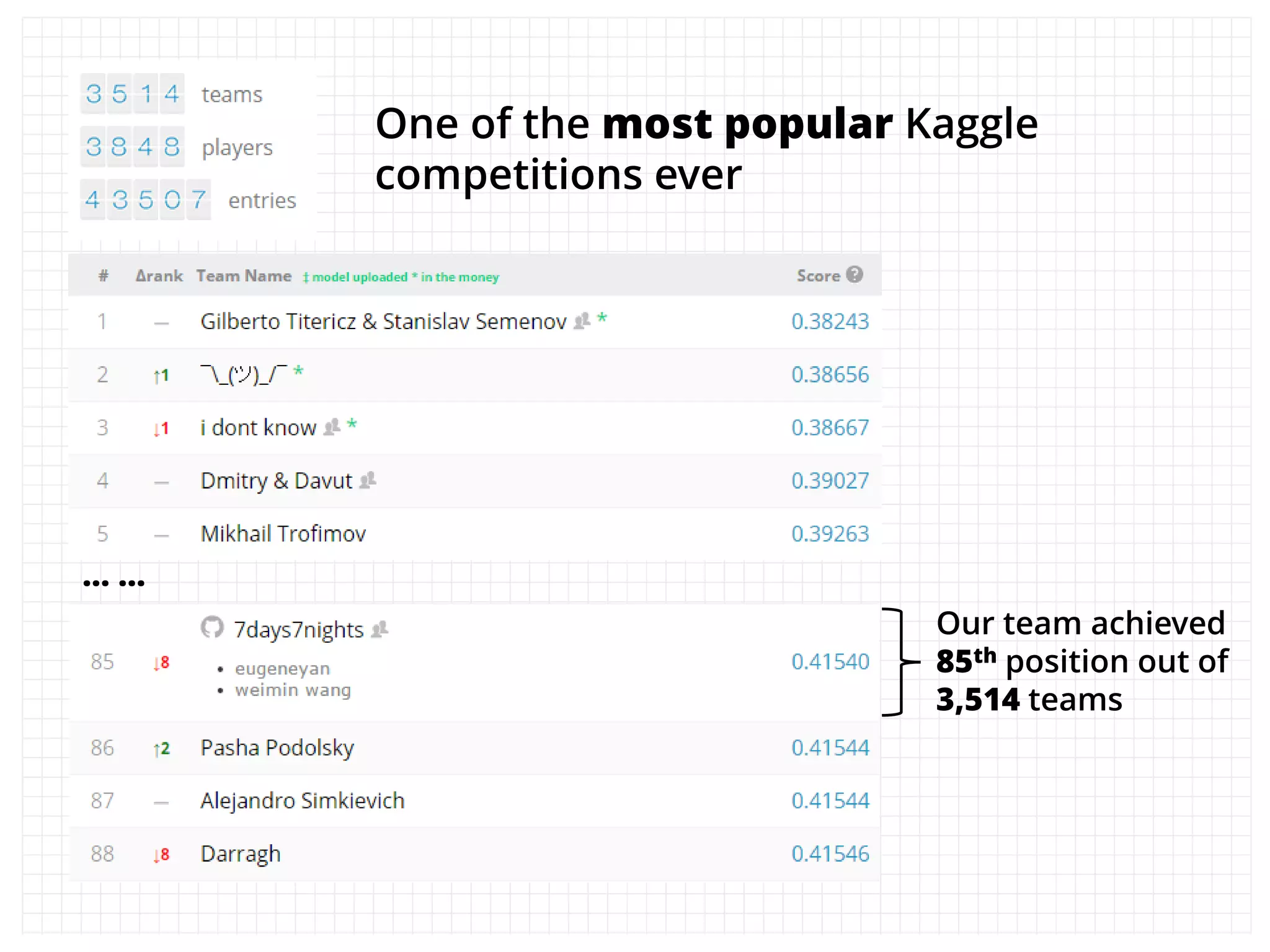

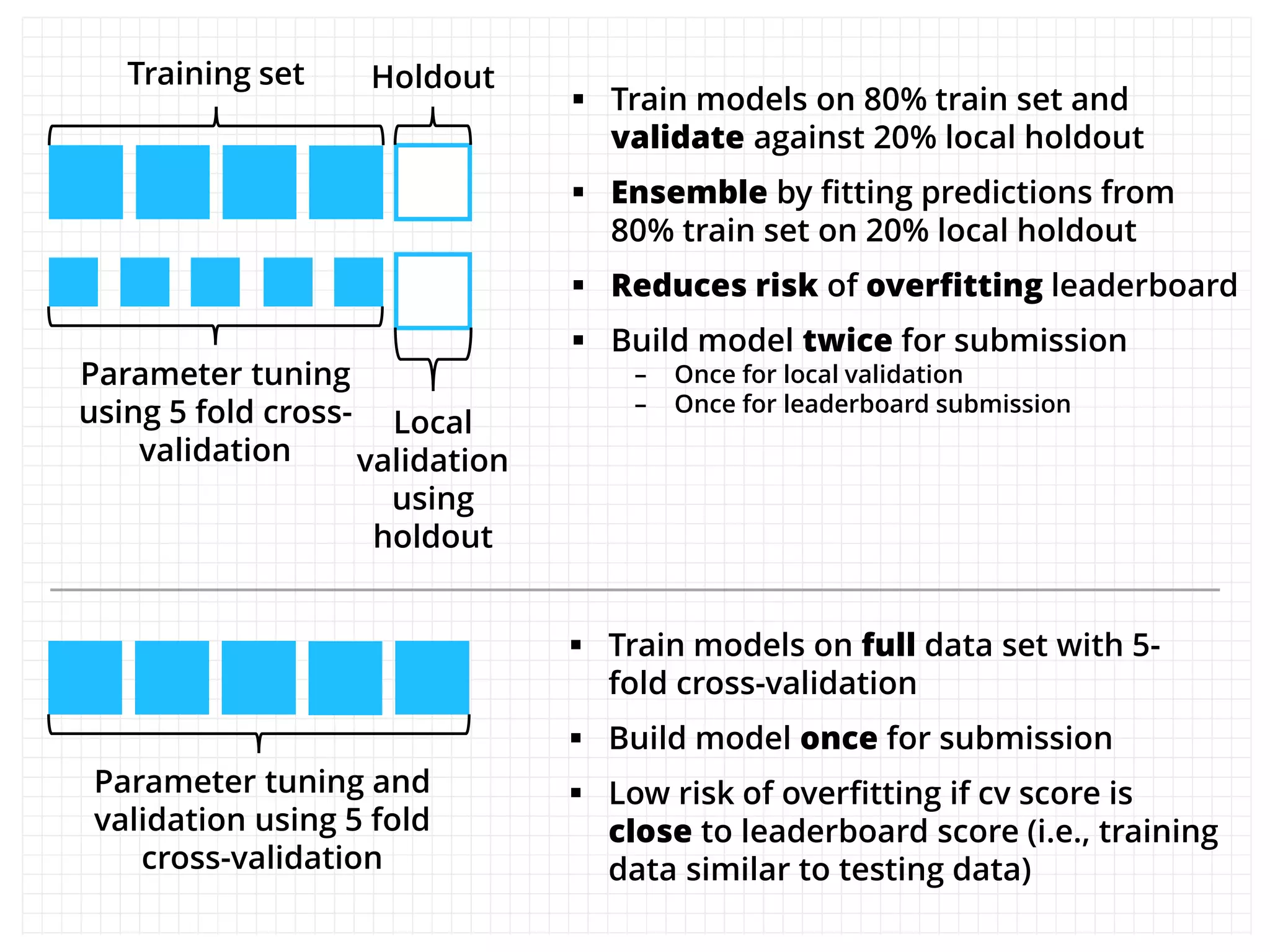

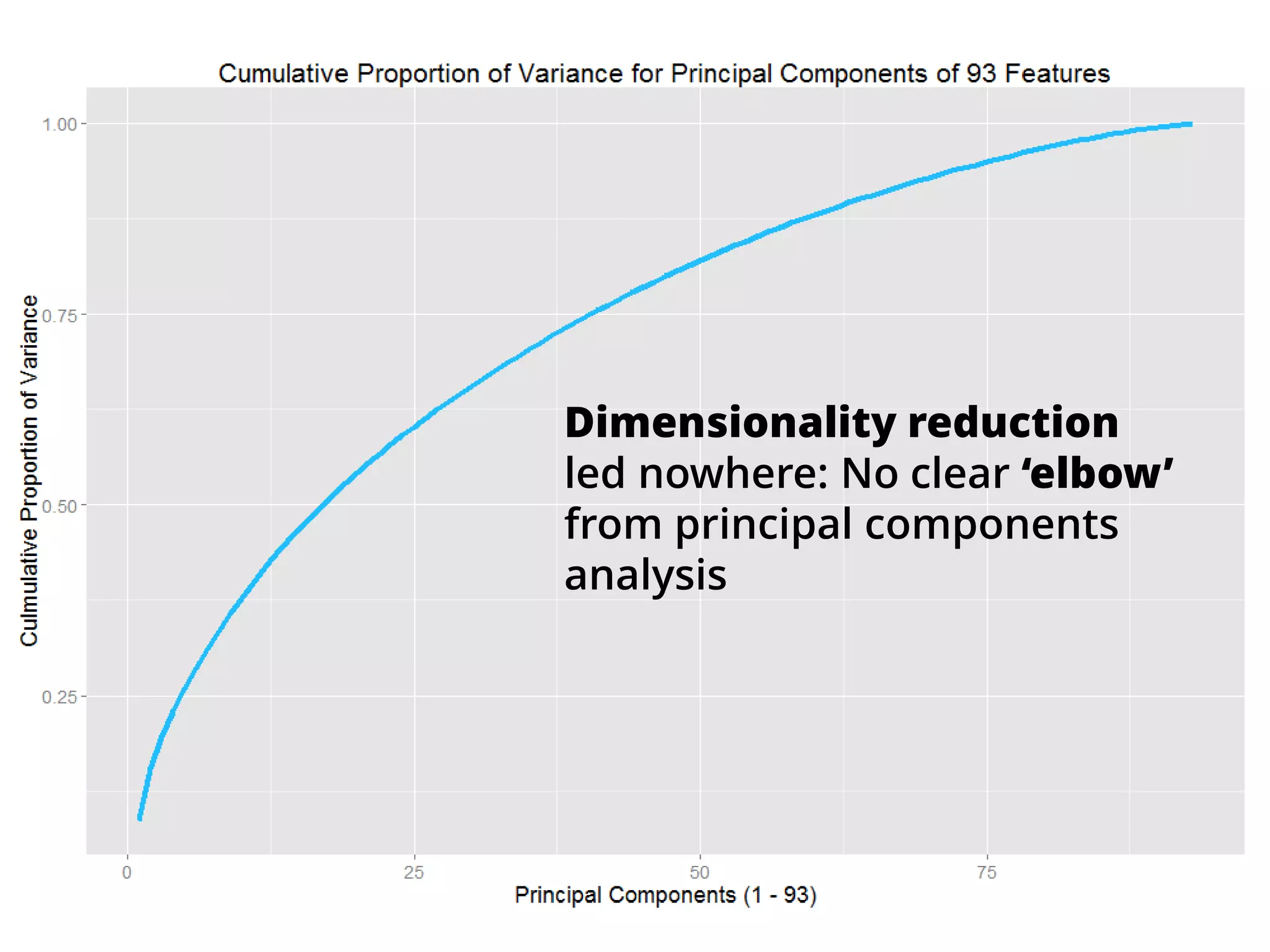

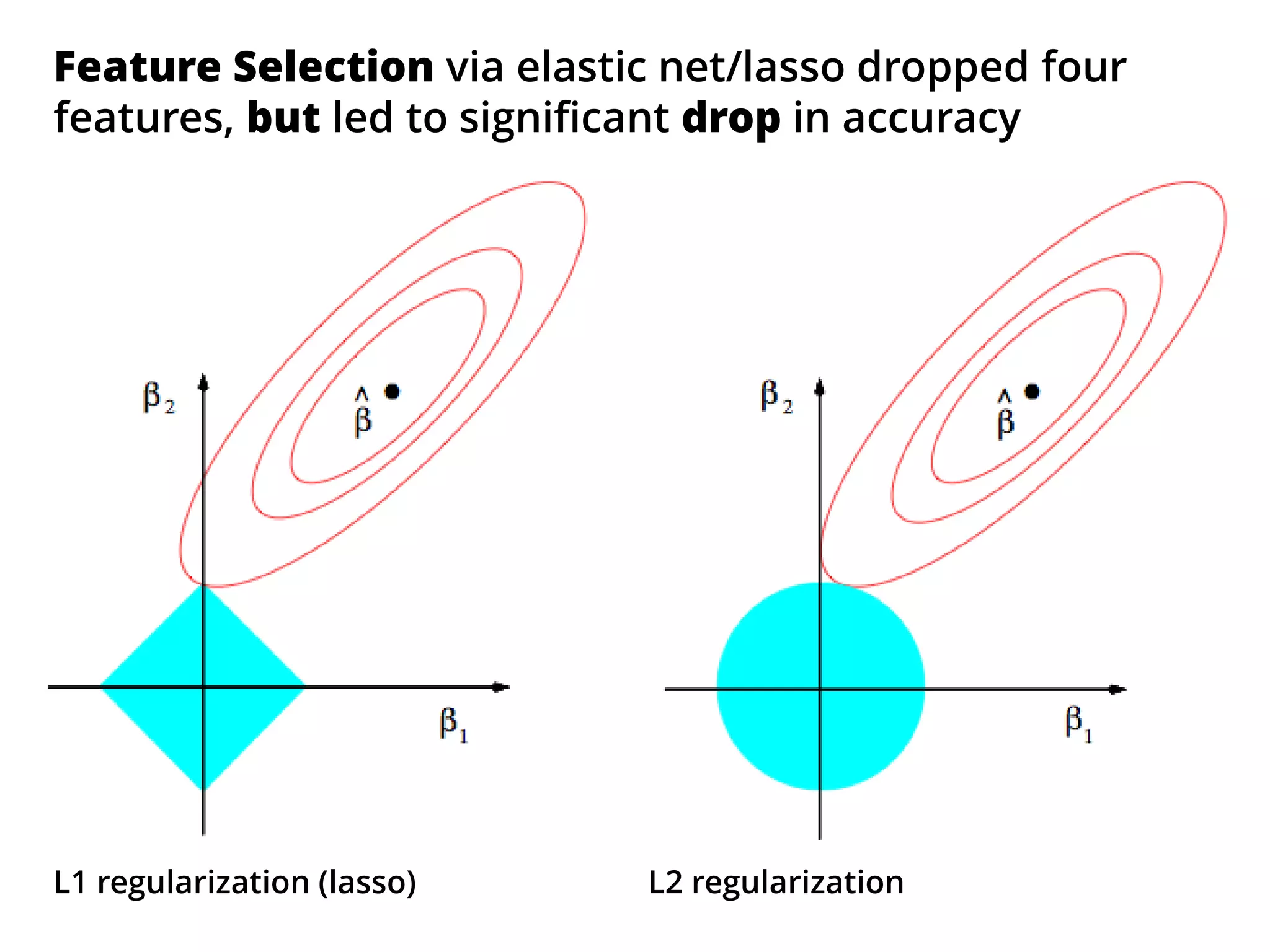

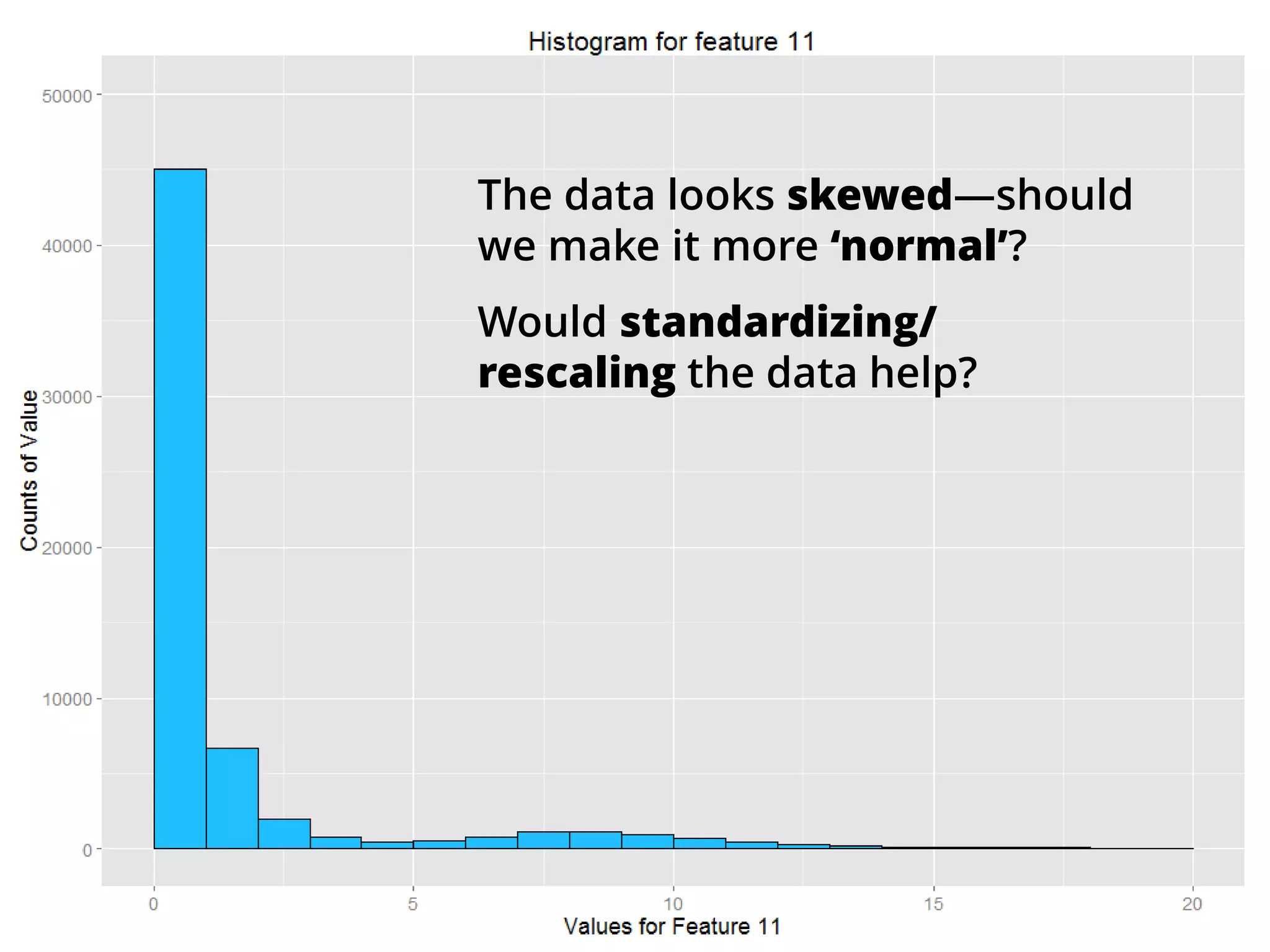

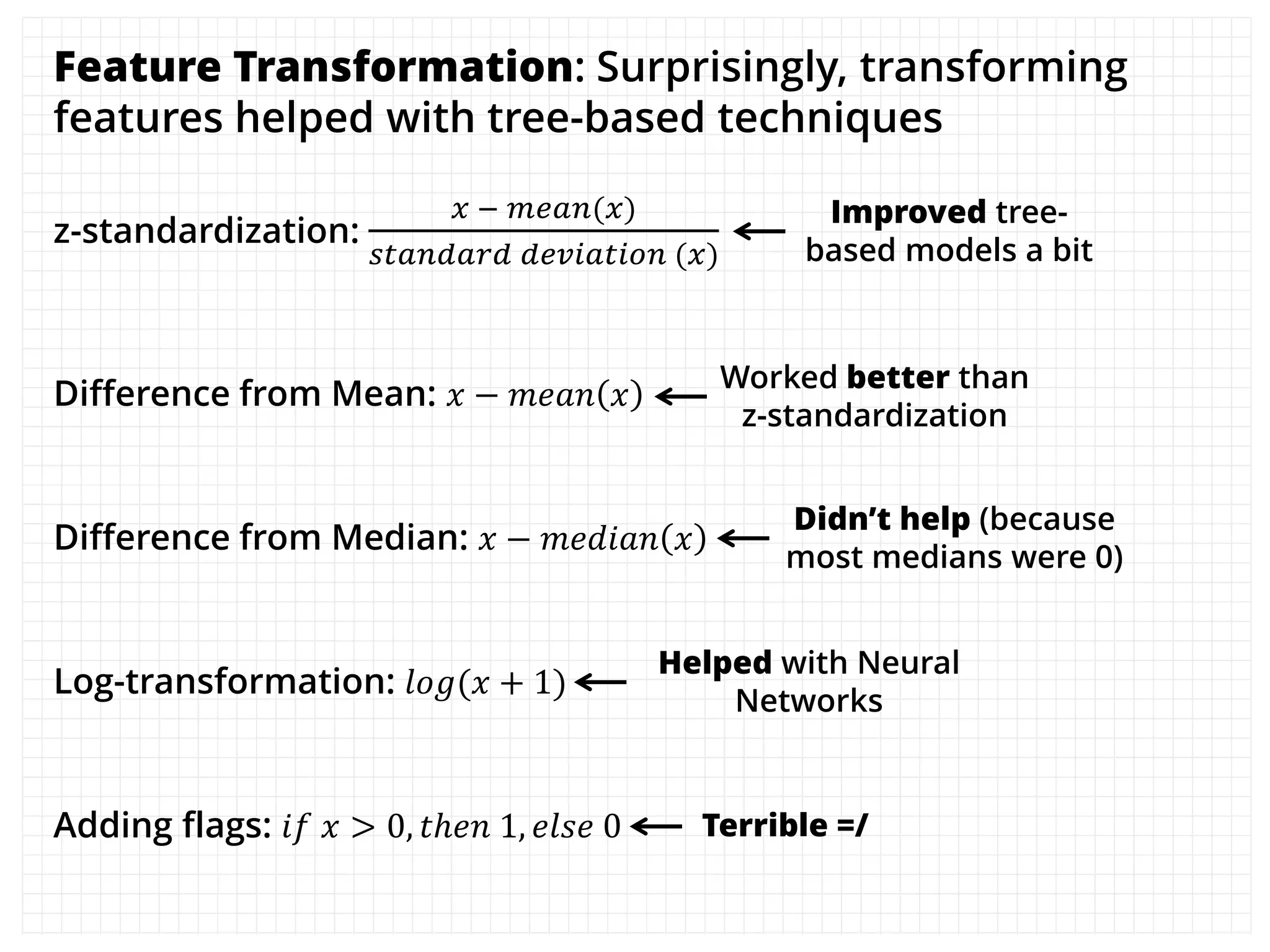

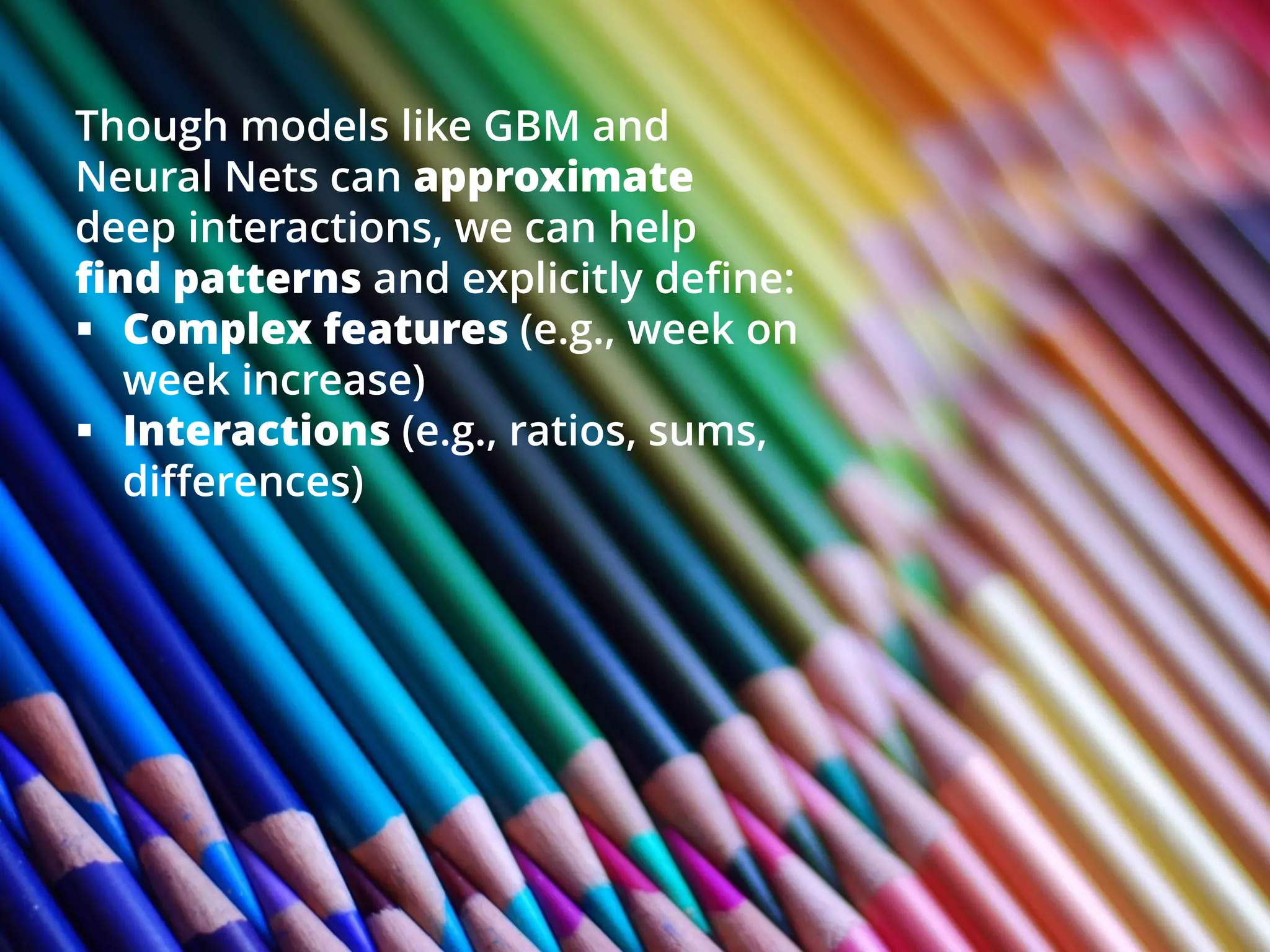

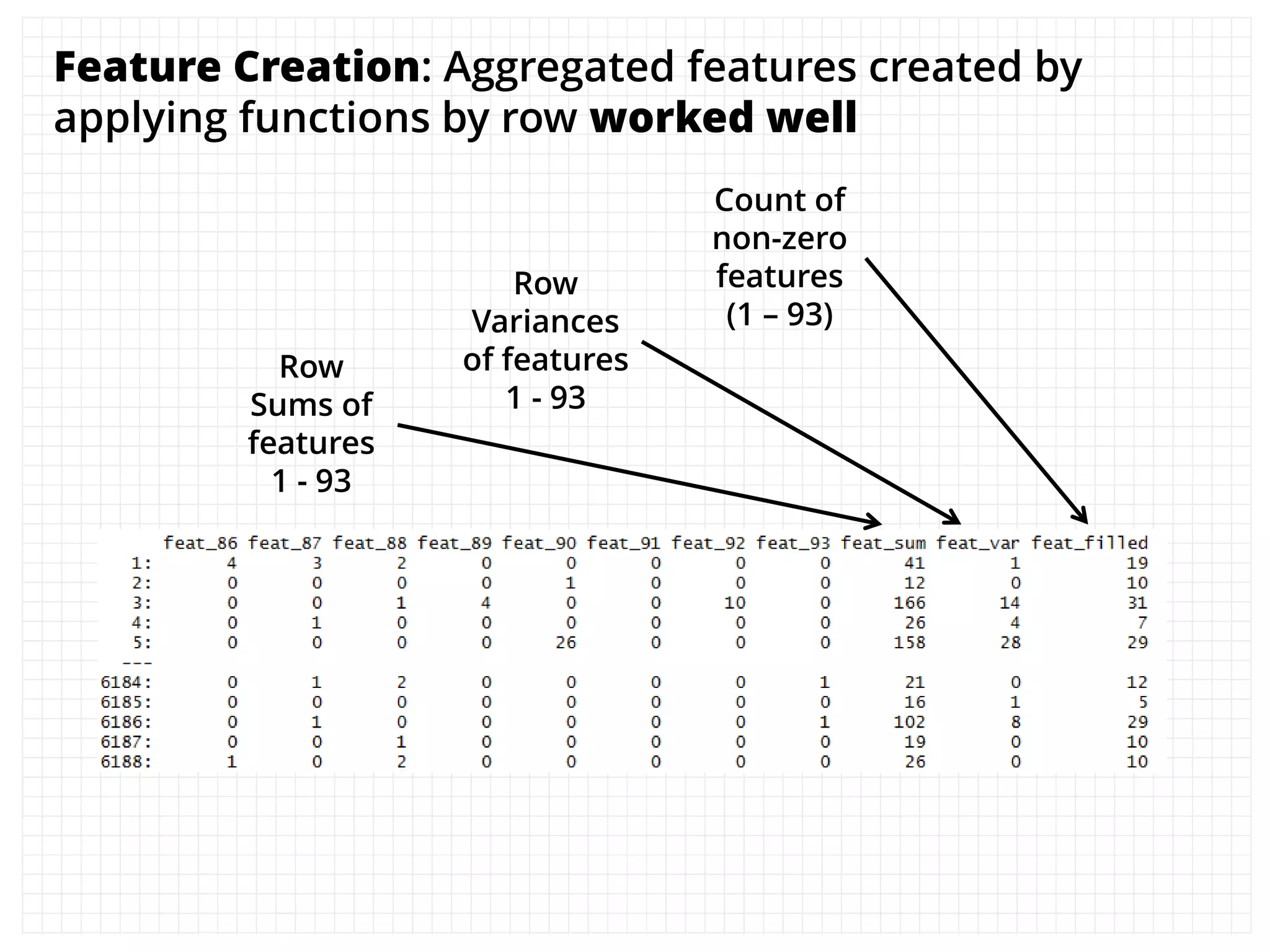

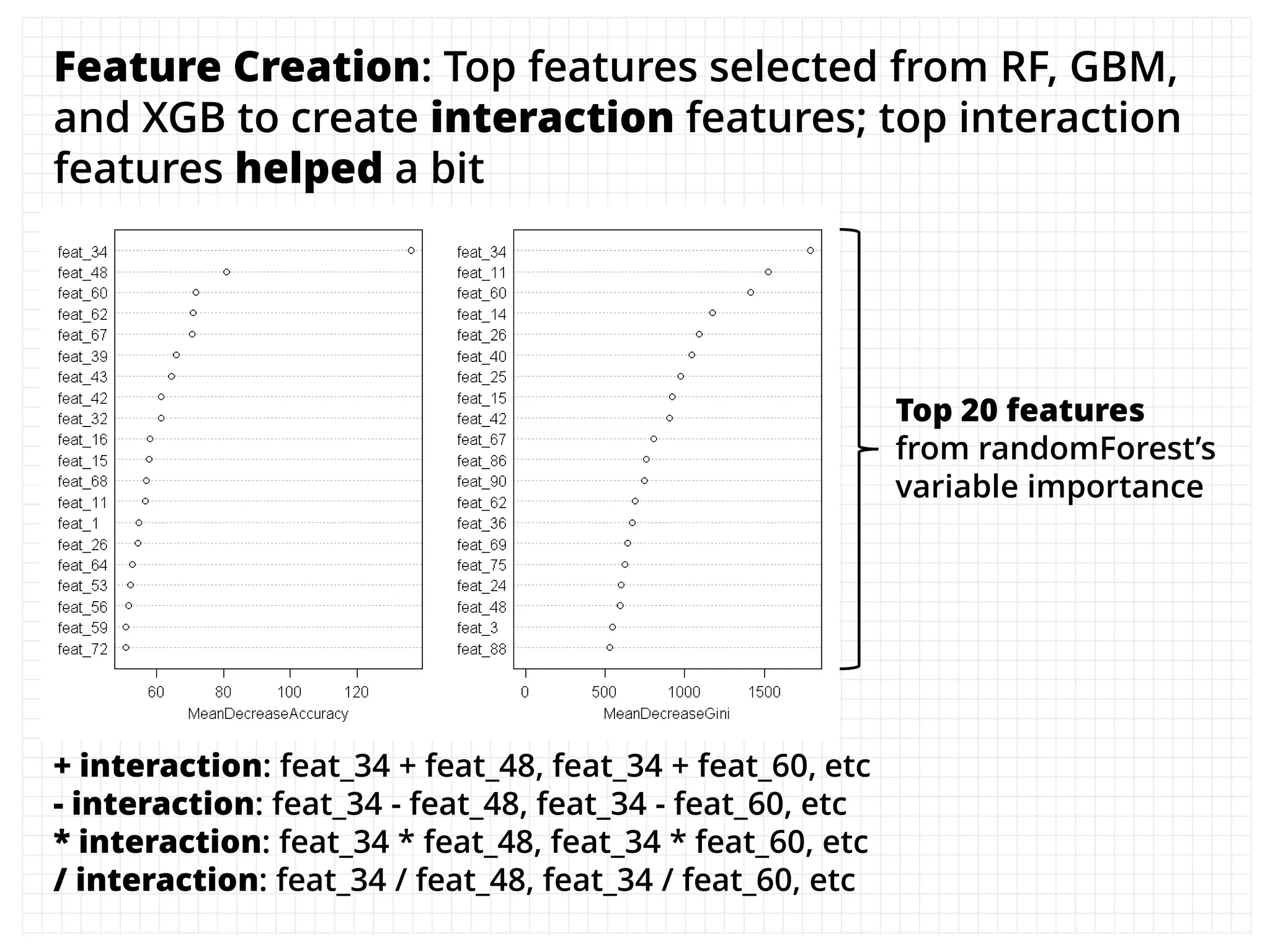

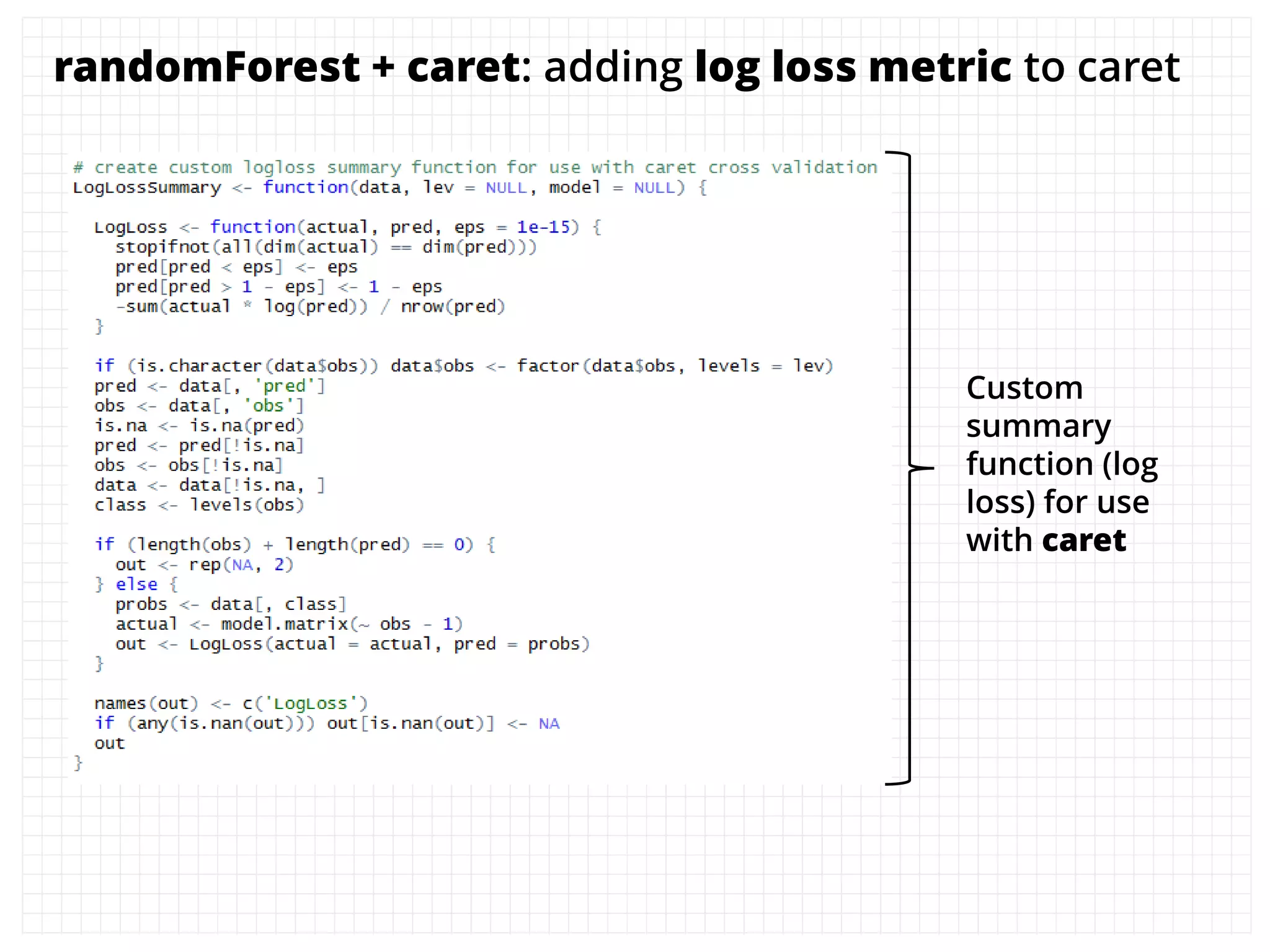

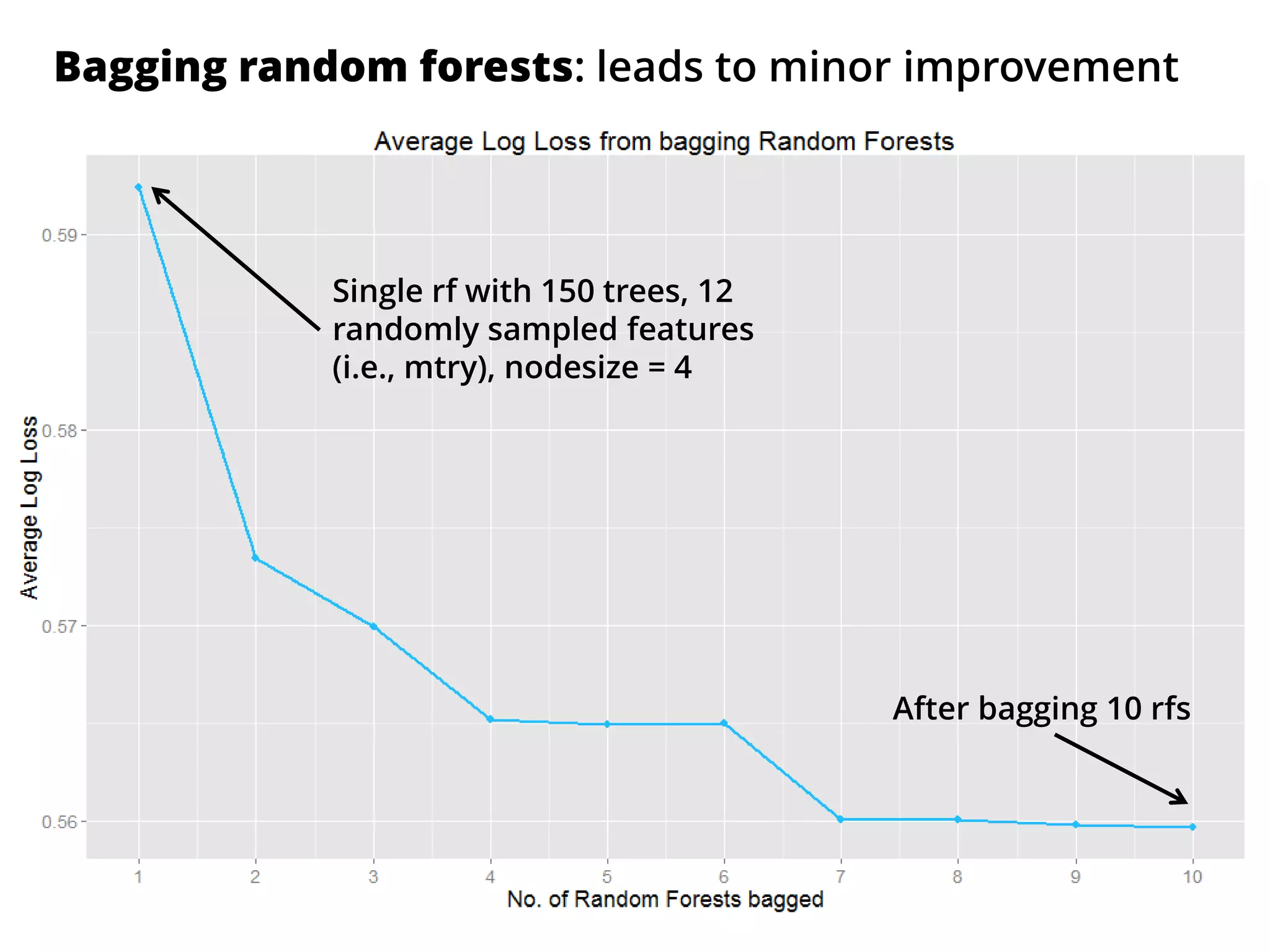

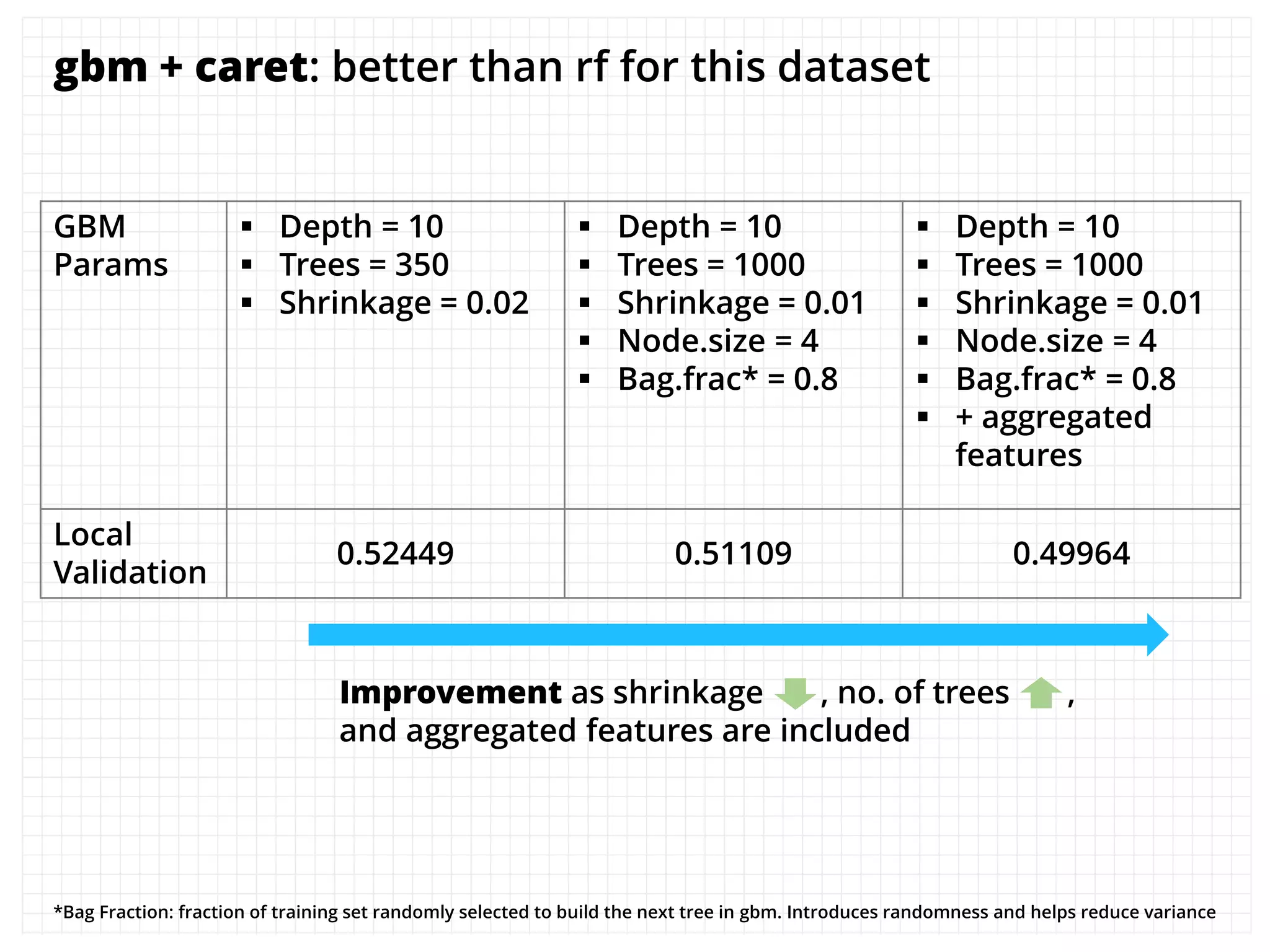

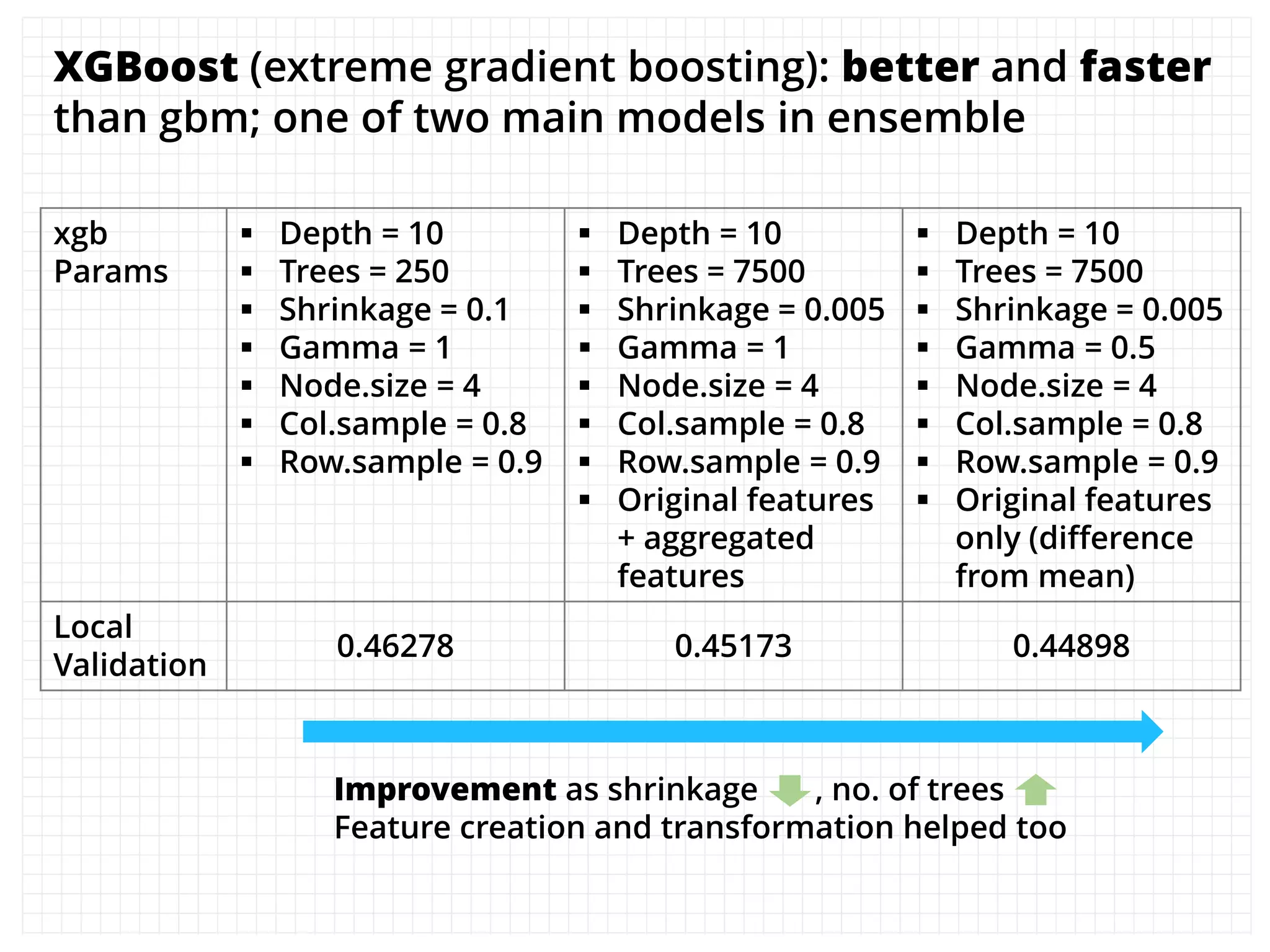

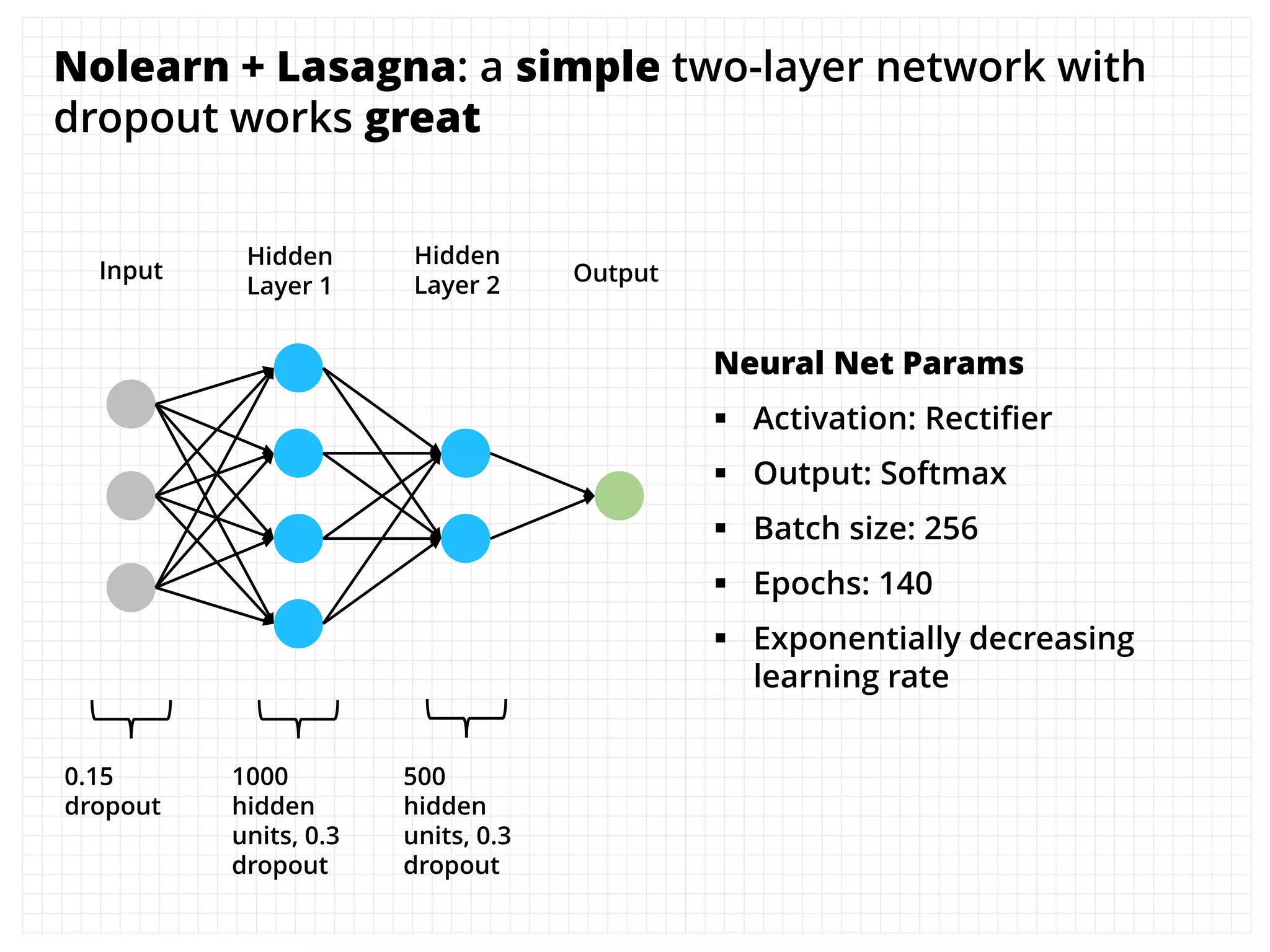

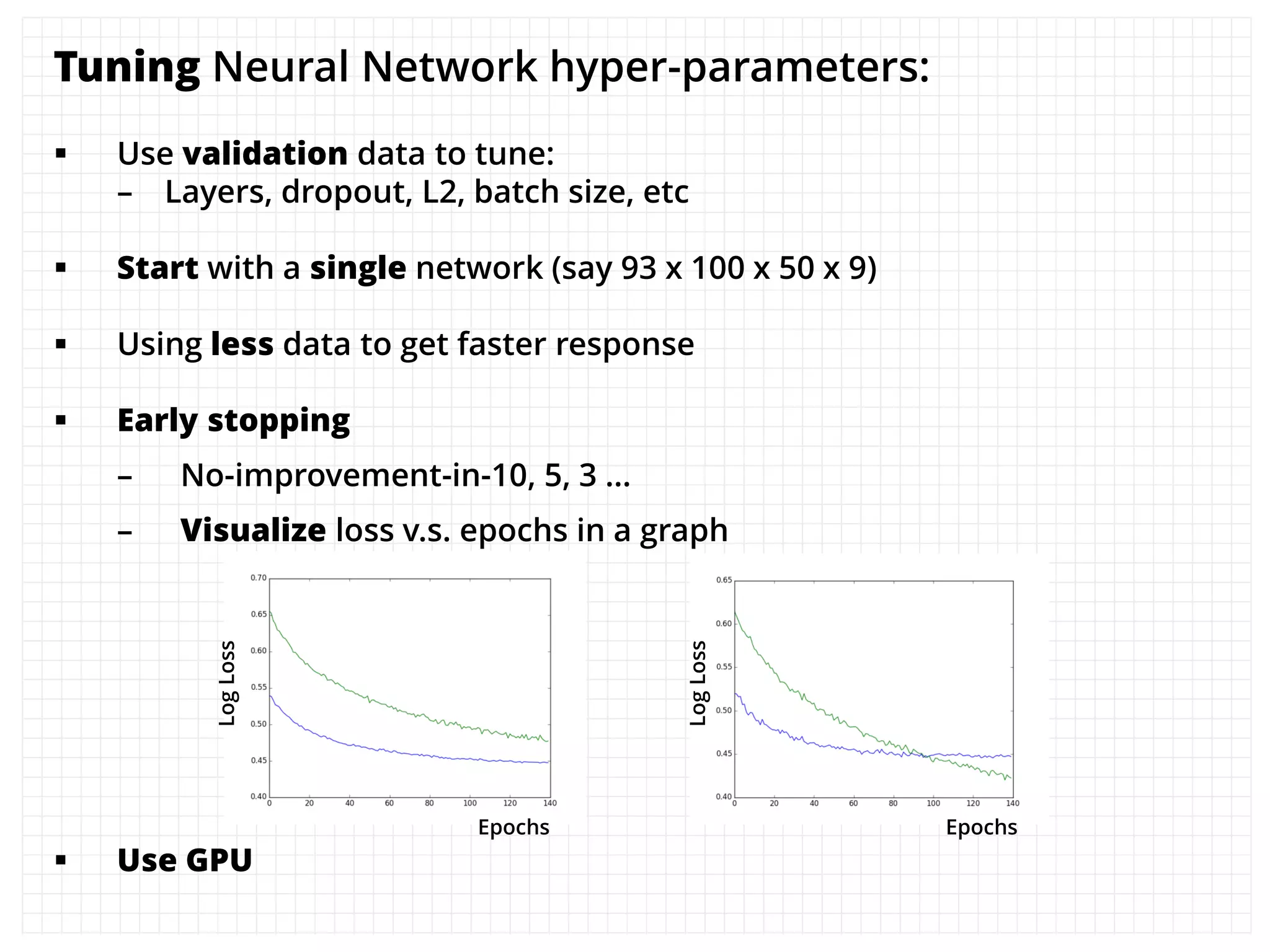

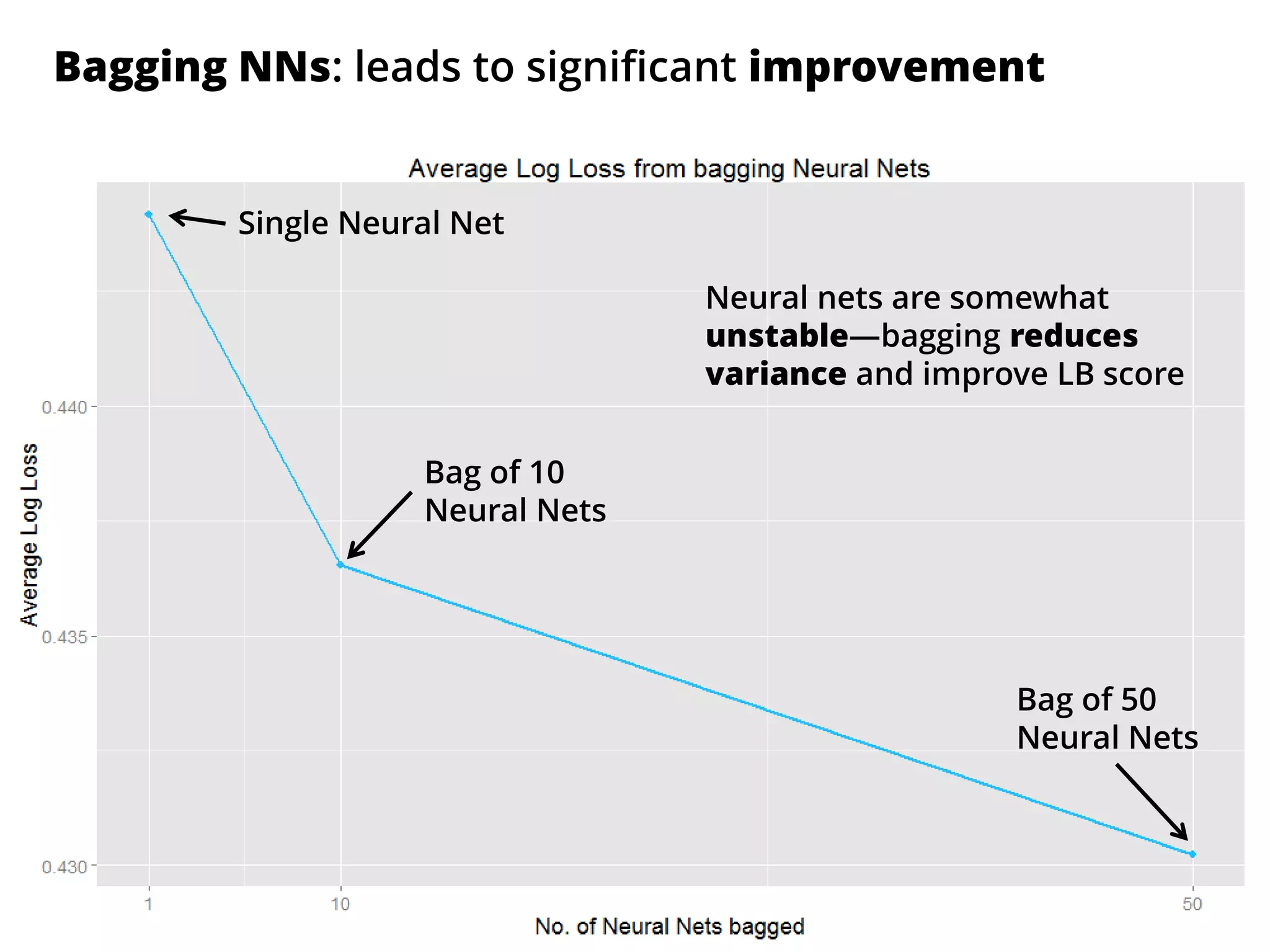

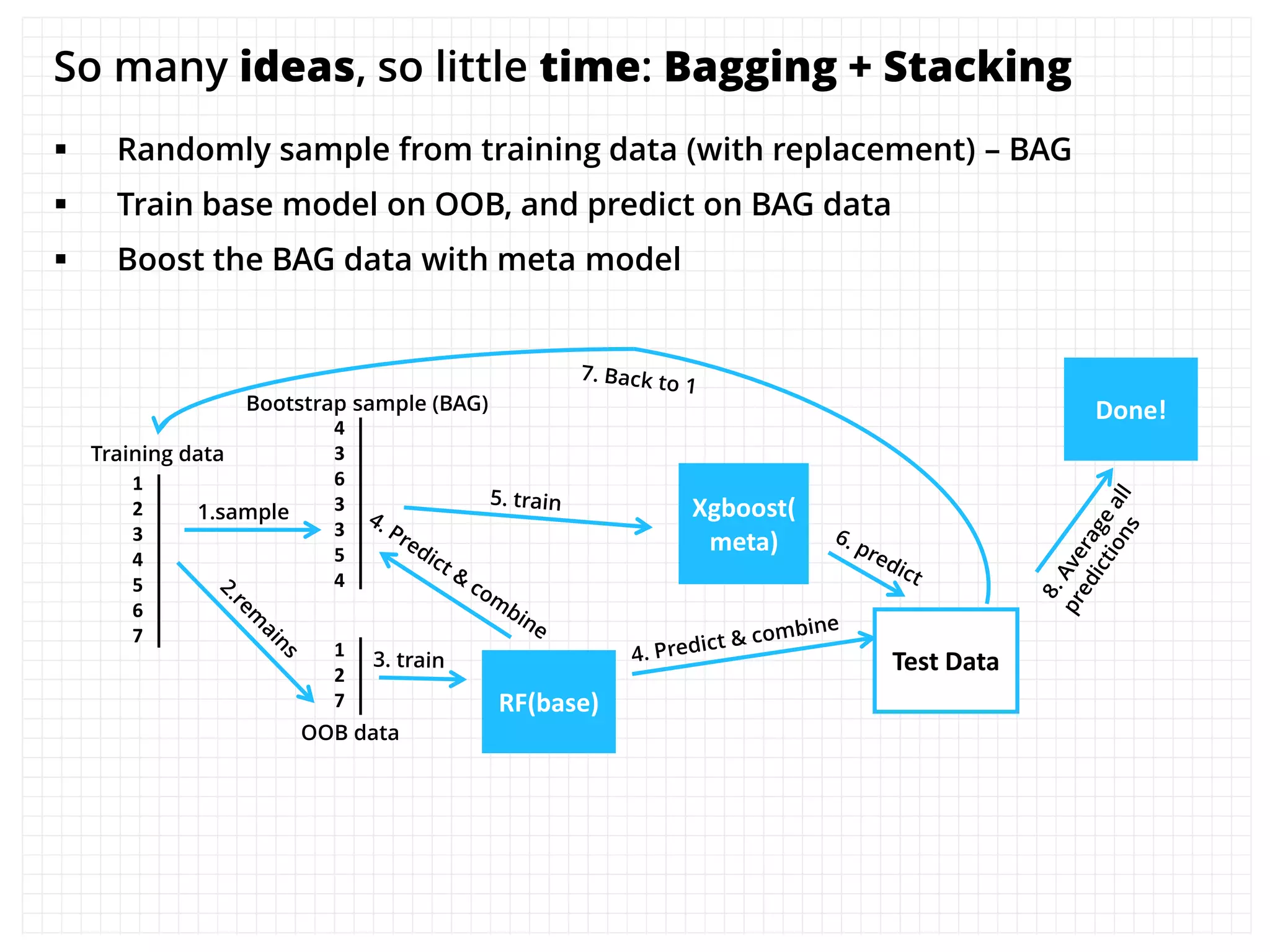

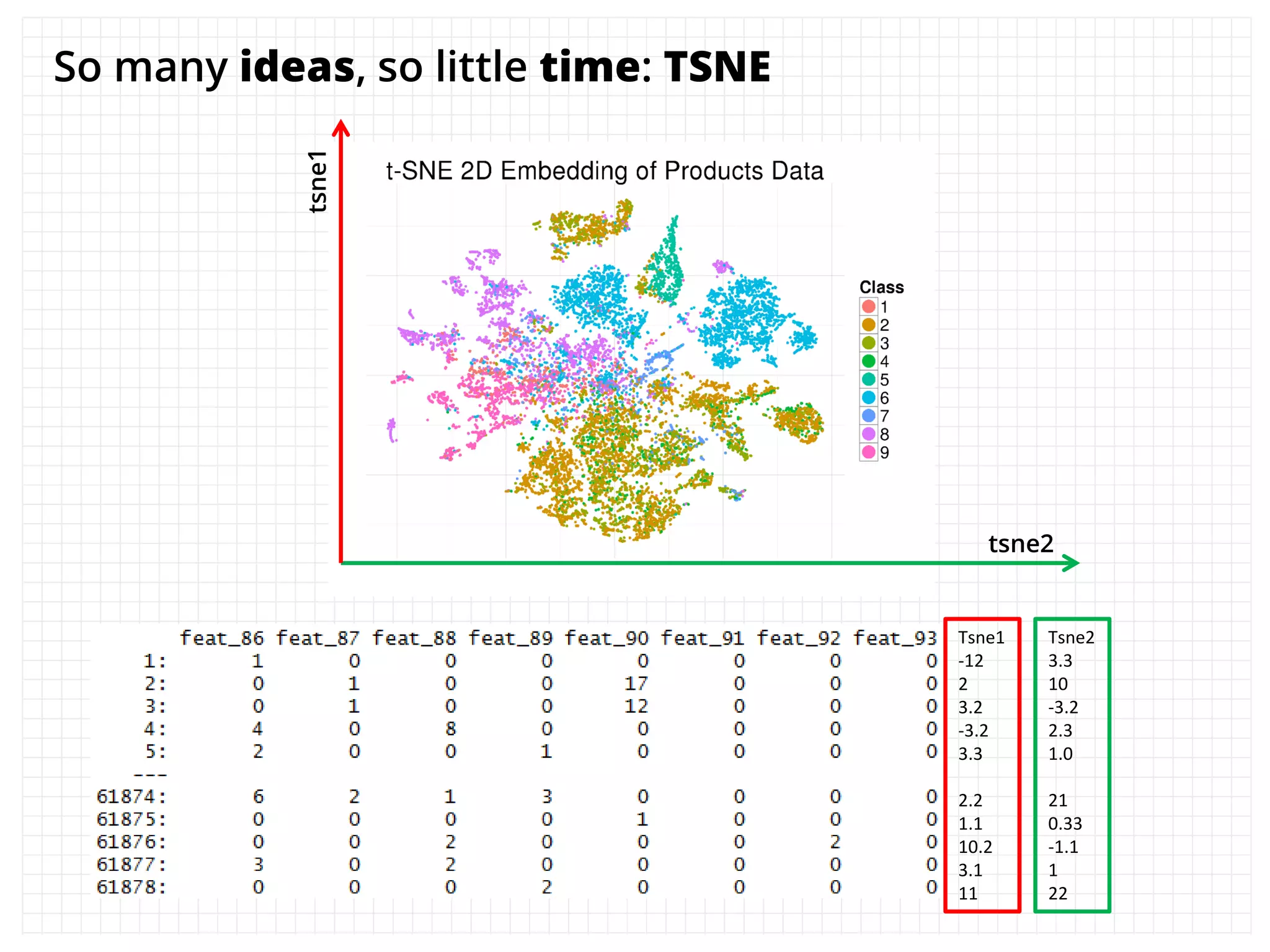

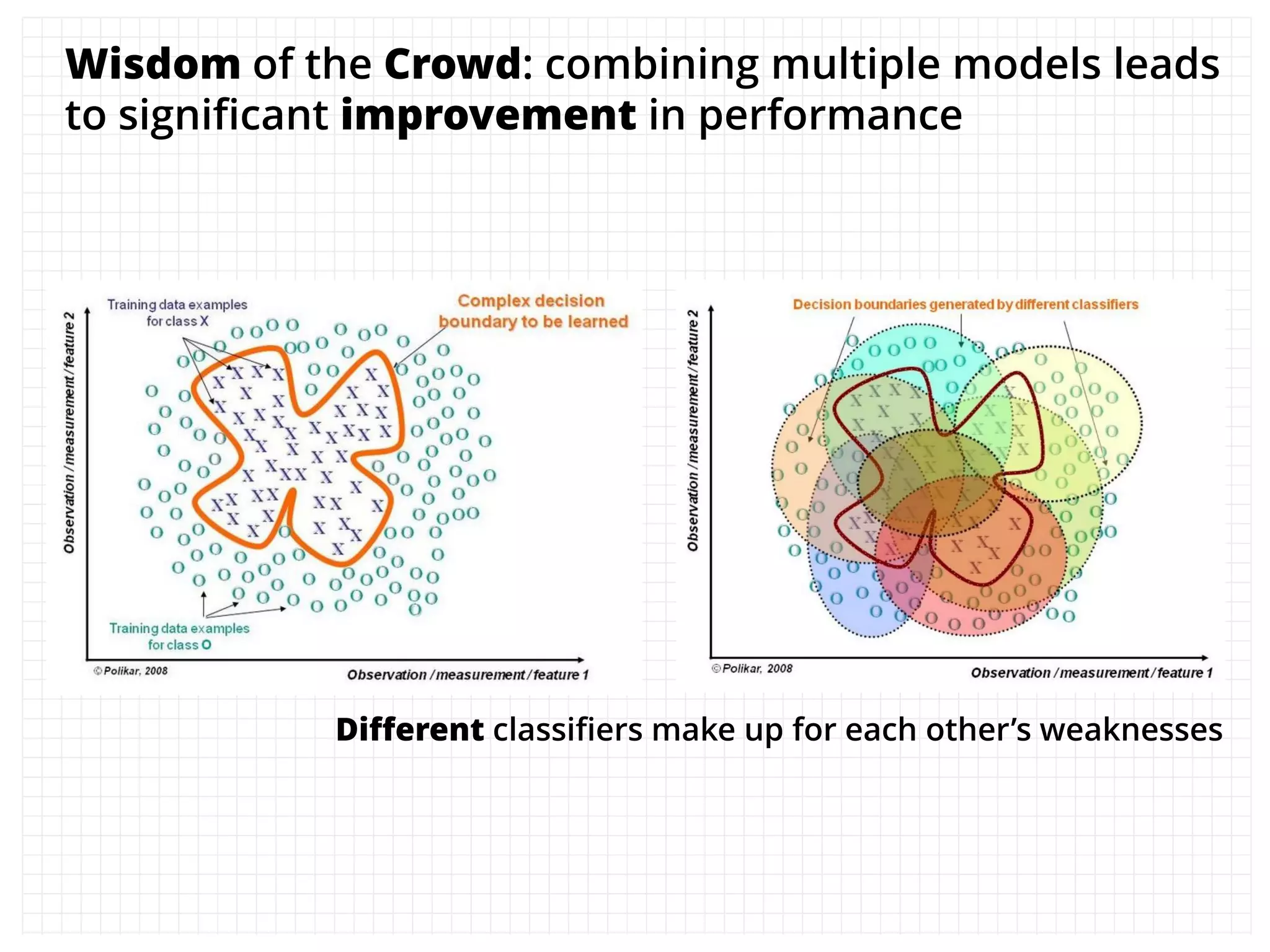

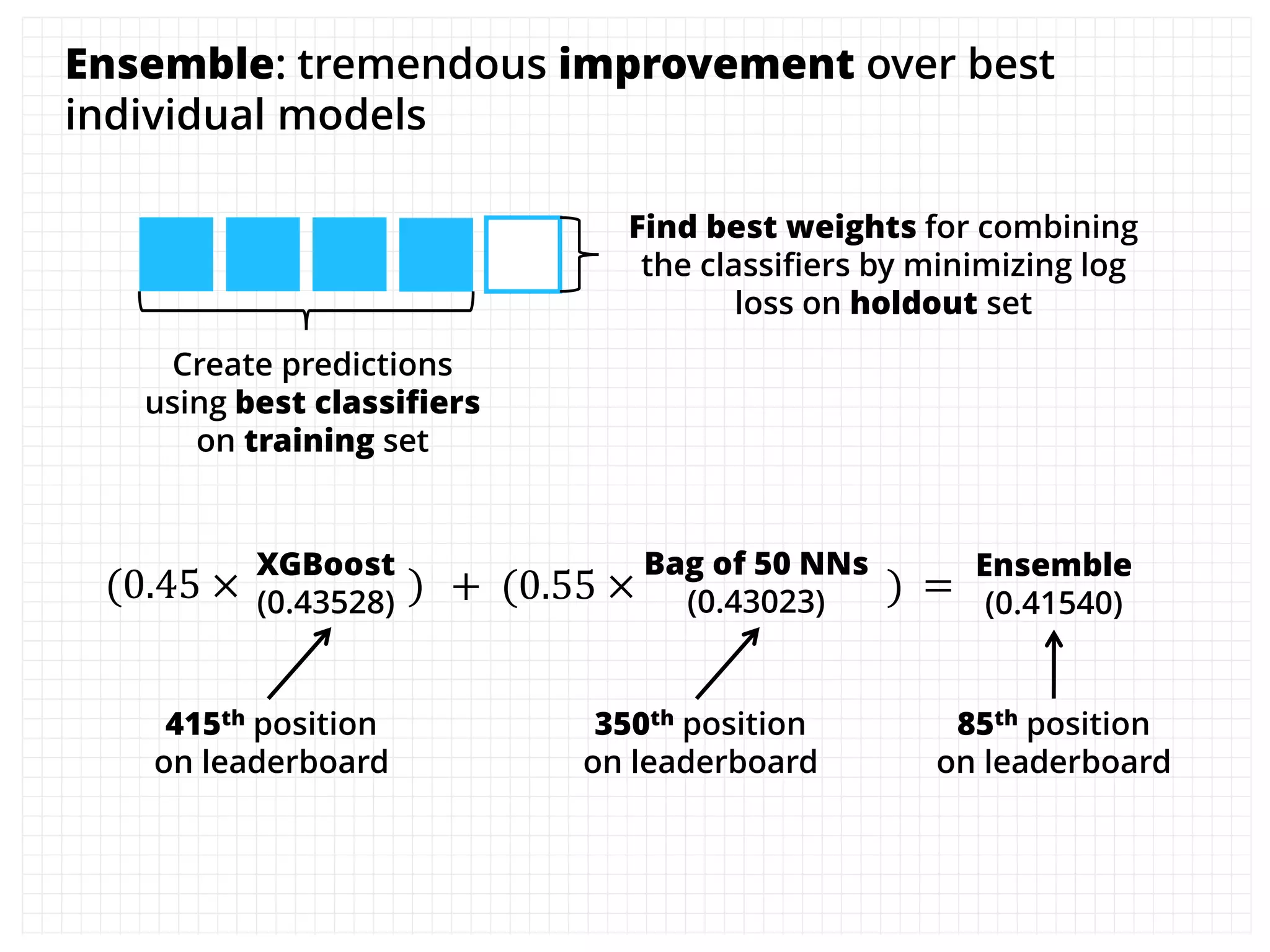

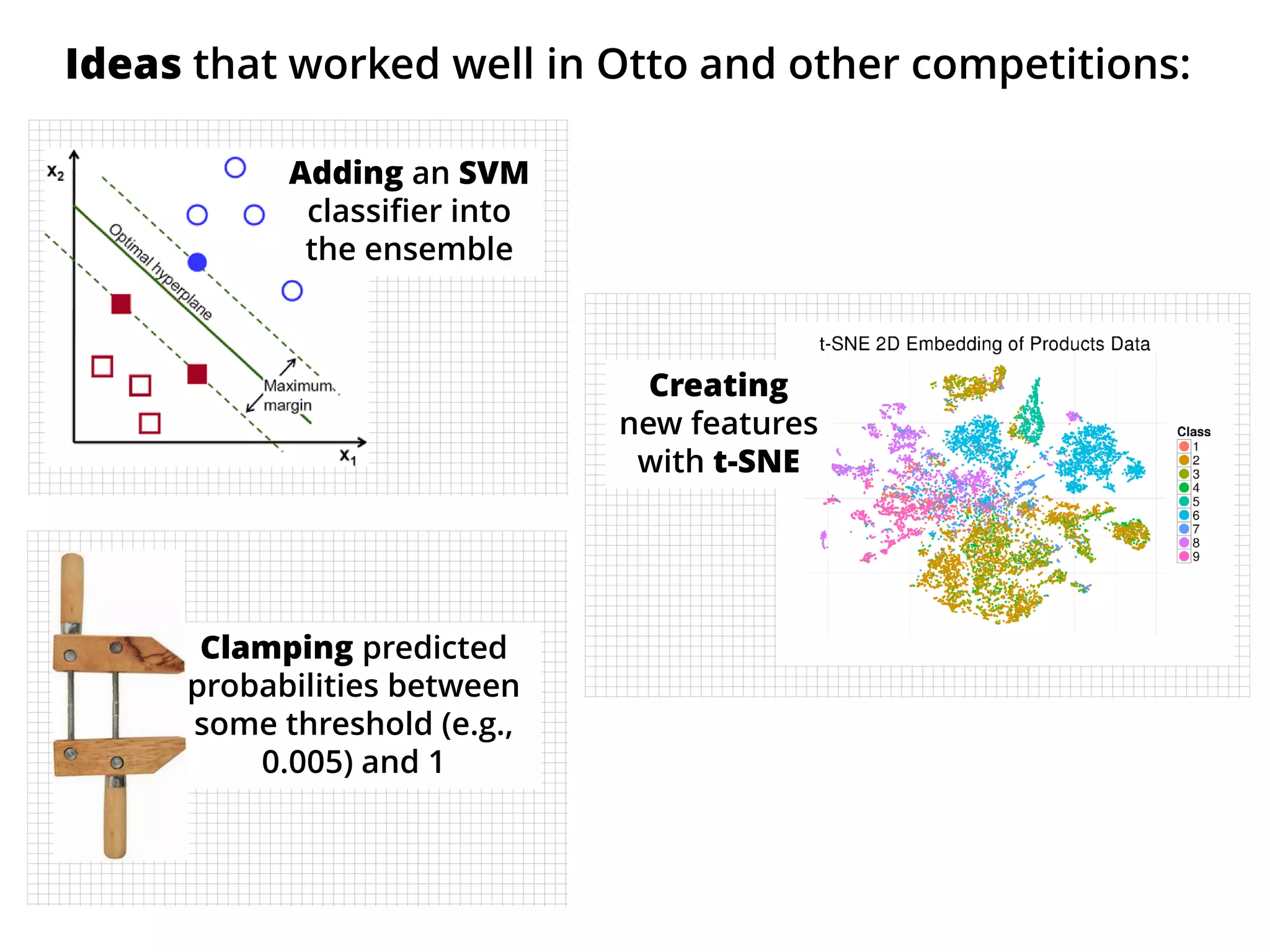

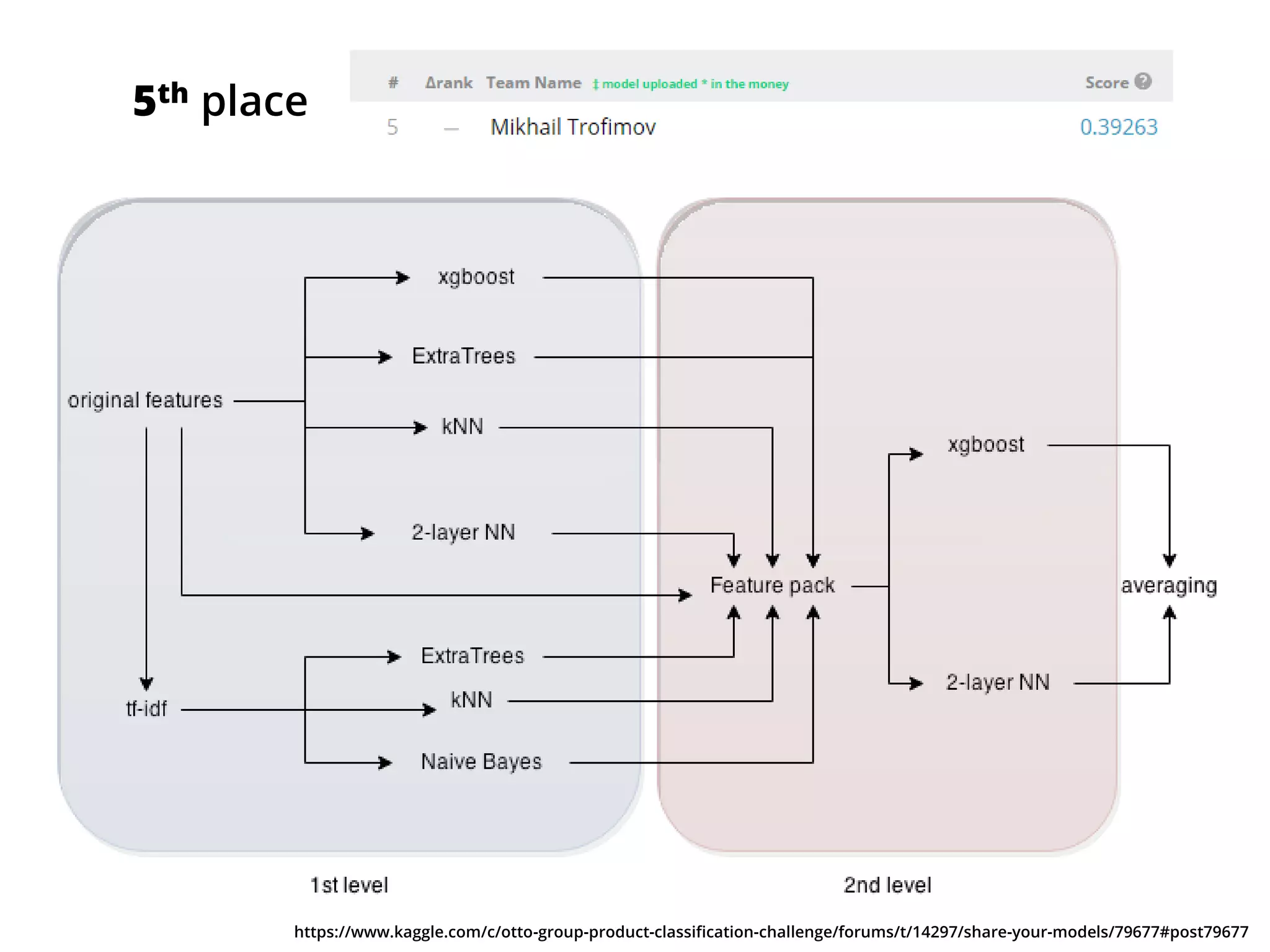

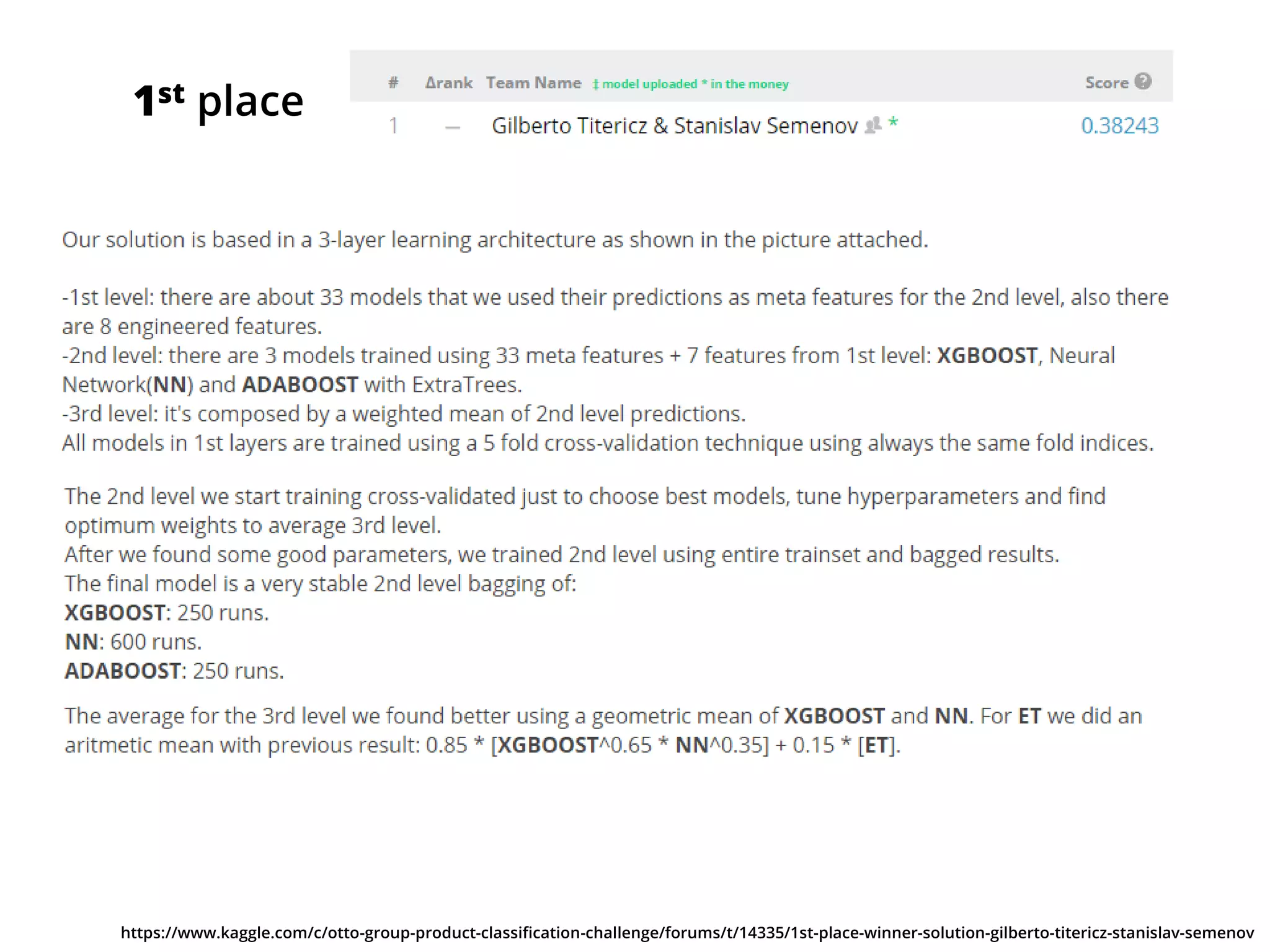

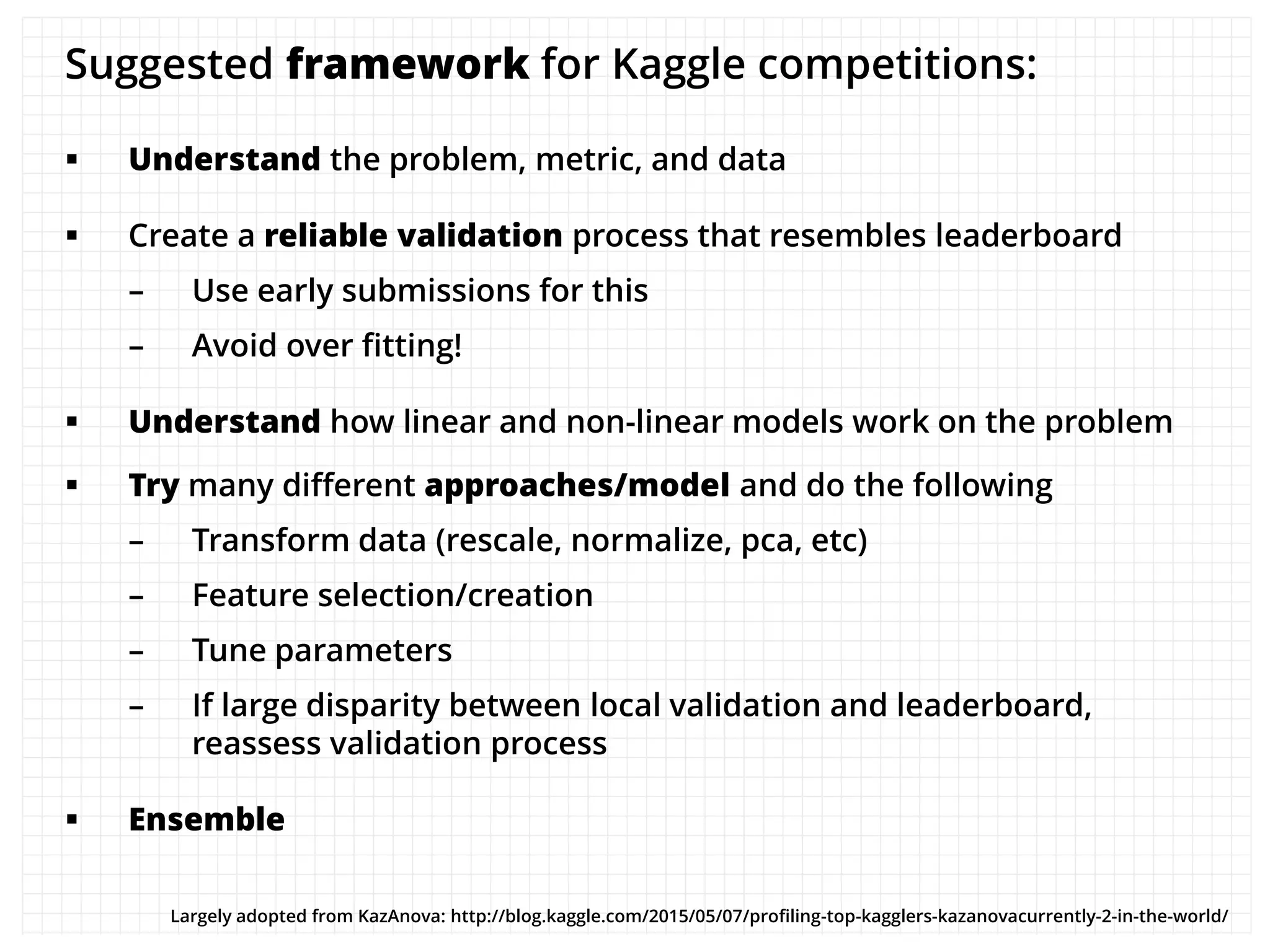

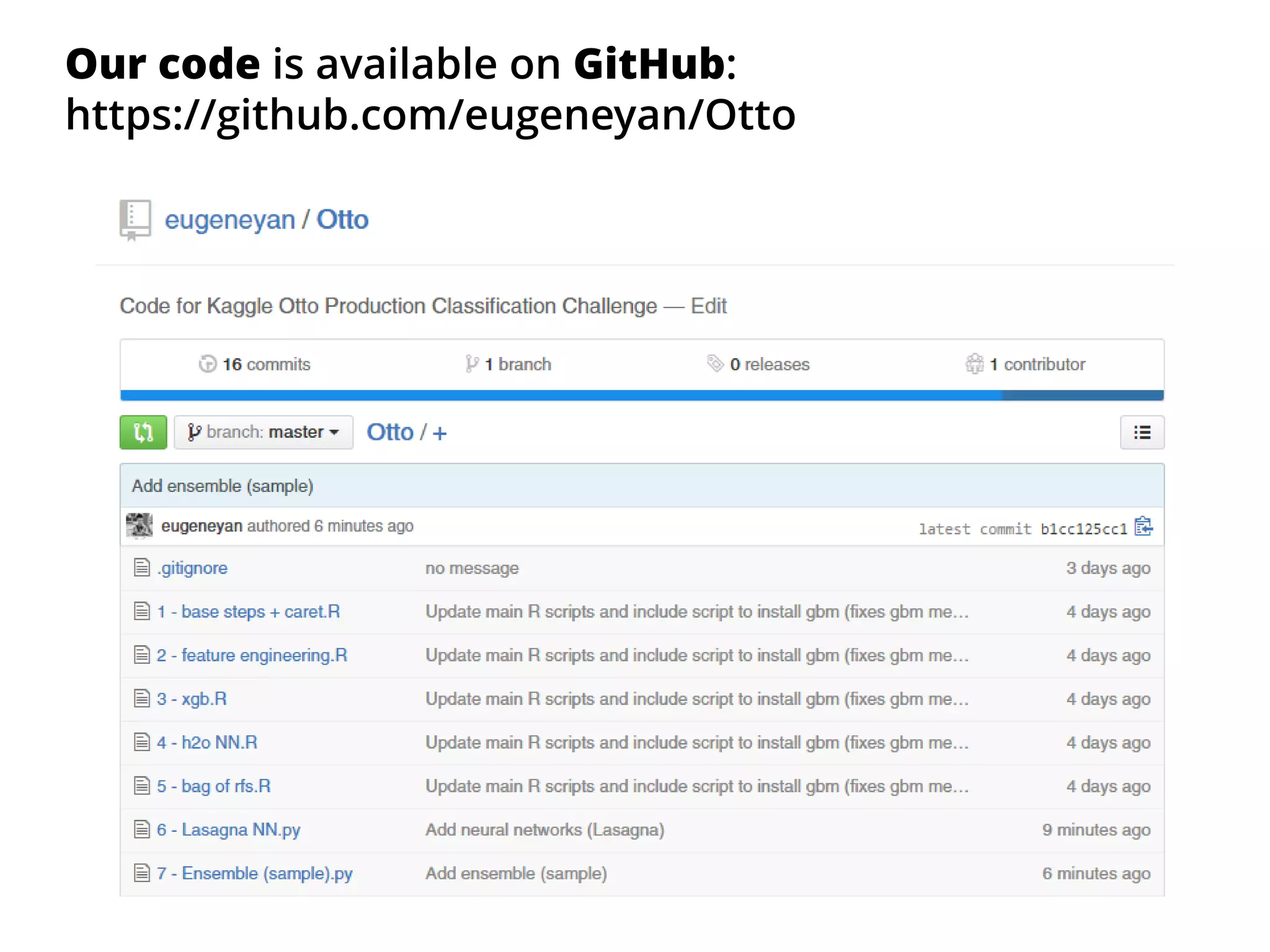

The document describes how the authors finished 85th out of 3,514 teams in the Kaggle Otto product classification challenge, whose goal was to minimize multi-class log loss. They cover feature engineering, model validation, and the performance of different algorithms, including tree-based models and neural networks, and they stress the value of ensembling. The authors also share general strategies for Kaggle competitions, such as building a reliable validation process and transforming features.
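Multi-class log loss, the competition metric mentioned above, is the average negative log of the probability a model assigns to each row's true class. The sketch below computes it with NumPy. It also shows one simple form of ensembling: a weighted average of predicted probability matrices. The clipping constant `eps=1e-15`, the row renormalization, and the helper names `multiclass_log_loss` and `blend` are assumptions made for illustration. They are not taken from the authors' slides, and the toy probabilities are invented.

```python
import numpy as np

def multiclass_log_loss(y_true, probs, eps=1e-15):
    """Mean negative log-likelihood of the true class.

    y_true: integer labels of shape (n,), values in [0, k).
    probs:  predicted probabilities of shape (n, k).
    """
    y_true = np.asarray(y_true)
    p = np.asarray(probs, dtype=float)
    # Clip so log(0) never occurs (eps is an assumed value).
    p = np.clip(p, eps, 1 - eps)
    # Rescale each row to sum to 1 (assumed scoring convention).
    p = p / p.sum(axis=1, keepdims=True)
    # Probability assigned to the true class of each row.
    true_class_probs = p[np.arange(len(y_true)), y_true]
    return float(-np.mean(np.log(true_class_probs)))

def blend(prob_matrices, weights=None):
    """Weighted average of several models' (n, k) probability matrices."""
    stacked = np.stack([np.asarray(m, dtype=float) for m in prob_matrices])
    if weights is None:
        weights = np.ones(len(prob_matrices))
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    # Contract the model axis; the result has shape (n, k).
    return np.tensordot(w, stacked, axes=1)

# Toy example: each hypothetical model is confidently wrong on a different row.
y = np.array([0, 1, 2])
model_a = np.array([[0.8, 0.1, 0.1], [0.1, 0.8, 0.1], [0.45, 0.45, 0.1]])
model_b = np.array([[0.1, 0.45, 0.45], [0.1, 0.8, 0.1], [0.1, 0.1, 0.8]])

print(f"{multiclass_log_loss(y, model_a):.4f}")  # 0.9163
print(f"{multiclass_log_loss(y, model_b):.4f}")  # 0.9163
print(f"{multiclass_log_loss(y, blend([model_a, model_b])):.4f}")  # 0.6067
```

Log loss penalizes confident mistakes very heavily, because the loss on a row grows without bound as the true-class probability approaches 0. Averaging two models that make their mistakes on different rows therefore pulls those extreme probabilities back toward safer values. In this toy case, that lowers the loss even though neither model improves on its own.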