1. The Trans-Pacific Grid Datafarm testbed provides 70 terabytes of disk capacity and 13 gigabytes per second of disk I/O performance across clusters in Japan, the US, and Thailand.

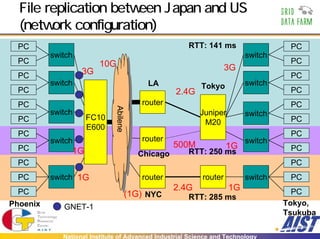

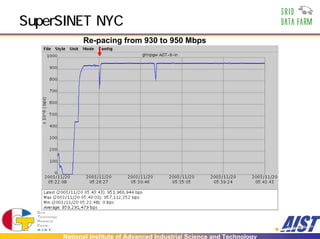

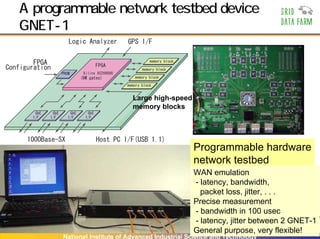

2. Using the GNET-1 network testbed device, the Trans-Pacific Grid Datafarm achieved stable transfer rates of up to 3.79 gigabits per second during a file replication experiment between Japan and the US, near the theoretical maximum of 3.9 gigabits per second.

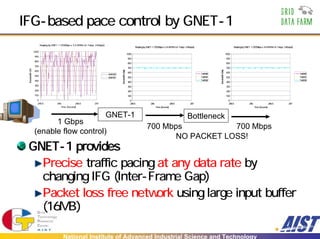

3. Precise pacing of network traffic flows using inter-frame gap controls on the GNET-1 device allowed for high-speed, lossless utilization of long-haul trans-Pacific network links.

![Key points of this talk

Trans-pacific Grid file system and testbed

70 TBytes disk capacity, 13 GB/sec disk I/O performance

Trans-pacific file replication [SC2003 Bandwidth Challenge]

1.5TB data transferred in an hour

Multiple high-speed Trans-Pacific networks;

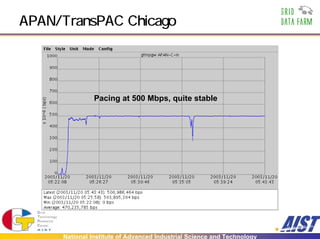

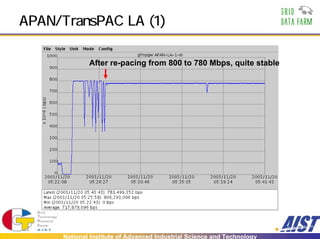

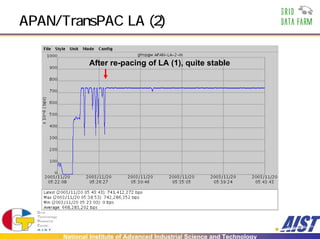

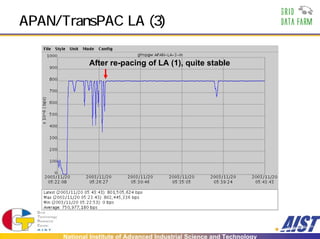

APAN/TransPAC (2.4 Gbps OC48 POS, 500 Mbps OC-12

ATM), SuperSINET (2.4 Gbps x 2, 1 Gbps available)

6,000 miles

stable 3.79 Gbps out of theoretical peak 3.9 Gbps (97%)

using 11 node pairs (MTU 6000B)

We won the "Distributed Infrastructure" award!

National Institute of Advanced Industrial Science and Technology](https://image.slidesharecdn.com/gfarmfstatebe-tip2004-090926211935-phpapp01/85/Gfarm-Fs-Tatebe-Tip2004-2-320.jpg)
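The headline numbers on this slide can be cross-checked with a little arithmetic. The sketch below assumes that the 3.9 Gbps theoretical peak is the sum of the three usable trans-Pacific capacities listed on the slide (2.4 + 0.5 + 1.0 Gbps) and that 1 TB means 10^12 bytes; the variable names are illustrative only.

```python
# Usable trans-Pacific capacities listed on the slide, in Gbps.
# Treating their sum as the "theoretical peak" is an assumption.
links_gbps = {
    "APAN/TransPAC OC-48 POS": 2.4,
    "APAN/TransPAC OC-12 ATM": 0.5,
    "SuperSINET (available share)": 1.0,
}
peak_gbps = sum(links_gbps.values())

# Stable rate reported on the slide.
stable_gbps = 3.79
utilization = stable_gbps / peak_gbps

# Average rate implied by moving 1.5 TB in one hour
# (assumes decimal units: 1 TB = 10**12 bytes).
avg_gbps = 1.5e12 * 8 / 3600 / 1e9

print(f"peak: {peak_gbps:.1f} Gbps, utilization: {utilization:.0%}")
print(f"average over the hour: {avg_gbps:.2f} Gbps")
```

Under these assumptions the stable rate is about 97% of the peak, matching the slide, while the hour-long average of roughly 3.3 Gbps sits somewhat below the stable 3.79 Gbps figure.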

![[Background] Petascale Data Intensive

Computing

High Energy Physics

CERN LHC, KEK Belle

~MB/collision, 100 collisions/sec

~PB/year

2000 physicists, 35 countries

[Photos: detector for the LHCb experiment; detector for the ALICE experiment]

Astronomical Data Analysis

Data analysis of the whole data set

TB~PB/year/telescope

SUBARU telescope

10 GB/night, 3 TB/year

National Institute of Advanced Industrial Science and Technology](https://image.slidesharecdn.com/gfarmfstatebe-tip2004-090926211935-phpapp01/85/Gfarm-Fs-Tatebe-Tip2004-3-320.jpg)
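The orders of magnitude on this slide follow from simple rate arithmetic. The sketch below assumes exactly 1 MB per collision, continuous data taking for a full year, and decimal units; none of these assumptions are stated on the slide, so the result is an upper-end estimate rather than a measured volume.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600

# High energy physics: ~MB/collision at 100 collisions/sec.
mb_per_collision = 1.0          # assumed value for "~MB"
collisions_per_sec = 100
hep_mb_per_sec = mb_per_collision * collisions_per_sec
hep_pb_per_year = hep_mb_per_sec * SECONDS_PER_YEAR / 1e9  # MB -> PB

# SUBARU telescope: 10 GB/night and 3 TB/year imply a number of
# observing nights per year.
subaru_gb_per_night = 10
subaru_tb_per_year = 3
observing_nights = subaru_tb_per_year * 1000 / subaru_gb_per_night

print(f"HEP: {hep_mb_per_sec:.0f} MB/s, about {hep_pb_per_year:.1f} PB/year")
print(f"SUBARU: about {observing_nights:.0f} observing nights/year")
```

A sustained 100 MB/s stream lands in the petabyte-per-year range, and the SUBARU figures are mutually consistent if the telescope observes on roughly 300 nights a year.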

![[Background 2] Large-scale File Sharing

P2P – exclusive and special-purpose approach

Napster, Gnutella, Freenet, . . .

Grid technology – file transfer, metadata management

GridFTP, Replica Location Service

Storage Resource Broker (SRB)

Large-scale file system – general approach

Legion, Avaki [Grid, no replica management]

Grid Datafarm [Grid]

Farsite, OceanStore [P2P]

AFS, DFS, . . .

National Institute of Advanced Industrial Science and Technology](https://image.slidesharecdn.com/gfarmfstatebe-tip2004-090926211935-phpapp01/85/Gfarm-Fs-Tatebe-Tip2004-4-320.jpg)

![Grid Datafarm (1): Gfarm file system -

World-wide virtual file system [CCGrid 2002]

Transparent access to dispersed file data in a Grid

POSIX I/O APIs, and native Gfarm APIs for extended file

view semantics and replications

Map from virtual directory tree to physical file

Automatic and transparent replica access for fault

tolerance and access-concentration avoidance

[Diagram: a virtual directory tree rooted at /grid (entries ggf, jp, aist, gtrc, file1, file2) is mapped through file system metadata to physical files file1 to file4 in the Gfarm file system, with file replica creation]

National Institute of Advanced Industrial Science and Technology](https://image.slidesharecdn.com/gfarmfstatebe-tip2004-090926211935-phpapp01/85/Gfarm-Fs-Tatebe-Tip2004-6-320.jpg)
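The mapping from a virtual directory tree to physical replicas can be pictured as a small metadata catalog. The following is a toy sketch, not the Gfarm API: the class `VirtualFS`, its methods, the path `/grid/jp/file1`, and the host names are all hypothetical, and the replica-selection rule (skip unreachable hosts, then prefer the least-loaded one) is only one plausible way to get fault tolerance and access-concentration avoidance.

```python
class VirtualFS:
    """Toy metadata catalog: virtual path -> hosts holding a replica."""

    def __init__(self):
        self.replicas = {}  # virtual path -> list of host names

    def register(self, path, host):
        """Record that `host` stores the file behind `path`."""
        self.replicas.setdefault(path, []).append(host)

    def replicate(self, path, dst_host):
        """Record an additional replica of an existing file."""
        if path not in self.replicas:
            raise FileNotFoundError(path)
        if dst_host not in self.replicas[path]:
            self.replicas[path].append(dst_host)

    def resolve(self, path, down=(), load=None):
        """Pick a reachable replica, preferring the least-loaded host."""
        load = load or {}
        live = [h for h in self.replicas.get(path, []) if h not in down]
        if not live:
            raise FileNotFoundError(f"no reachable replica for {path}")
        return min(live, key=lambda h: load.get(h, 0))


fs = VirtualFS()
fs.register("/grid/jp/file1", "node-tsukuba")   # hypothetical host names
fs.replicate("/grid/jp/file1", "node-sdsc")

primary = fs.resolve("/grid/jp/file1")
failover = fs.resolve("/grid/jp/file1", down={"node-tsukuba"})
balanced = fs.resolve("/grid/jp/file1",
                      load={"node-tsukuba": 9, "node-sdsc": 2})
print(primary, failover, balanced)
```

The caller only ever names the virtual path; which physical copy serves the request is decided by the catalog, which is the sense in which replica access is transparent.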

![Grid Datafarm (2): High-performance data access

and processing support [CCGrid 2002]

World-wide parallel and distributed processing

Aggregate of files = superfile

Data processing of superfiles = parallel and

distributed data processing of member files

Local file view (SPMD parallel file access)

File-affinity scheduling (“Owner-computes”)

[Diagram: a world-wide virtual CPU performs parallel and distributed processing on the Grid file system; example: astronomic archival data treated as a superfile, 365 parallel analyses in a year]

National Institute of Advanced Industrial Science and Technology](https://image.slidesharecdn.com/gfarmfstatebe-tip2004-090926211935-phpapp01/85/Gfarm-Fs-Tatebe-Tip2004-7-320.jpg)
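File-affinity ("owner-computes") scheduling can be illustrated with a short sketch: each member file of a superfile is processed on a host that already stores a copy, so no data moves before computation starts. The function name, the tie-breaking rule (least-loaded replica holder), and the file and host names below are assumptions made for illustration, not the Gfarm scheduler itself.

```python
def schedule_owner_computes(members):
    """Assign each member file to a host that holds one of its replicas.

    `members` maps a member file name to the hosts storing it. Among the
    hosts holding a replica, the one with the fewest tasks so far wins.
    """
    load = {}
    assignment = {}
    for name, hosts in members.items():
        host = min(hosts, key=lambda h: load.get(h, 0))
        assignment[name] = host
        load[host] = load.get(host, 0) + 1
    return assignment


# A hypothetical superfile with four member files on two hosts.
superfile = {
    "night001": ["nodeA"],
    "night002": ["nodeA", "nodeB"],
    "night003": ["nodeB"],
    "night004": ["nodeA", "nodeB"],
}
plan = schedule_owner_computes(superfile)
for name, host in plan.items():
    print(f"{name} -> {host}")
```

Every task in the resulting plan runs where its input already resides, and files with more than one replica give the scheduler room to even out the load.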

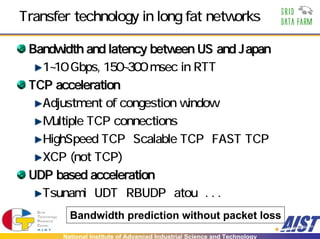

![Multiple TCP streams sometimes

considered harmful . . .

Multiple TCP streams achieve good bandwidth, but

excessively congest the network. In fact would

“shoot oneself in the foot”.

[Plot: APAN/TransPAC LA-Tokyo (2.4 Gbps); bandwidth (Mbps, 0 to 2800) versus time (seconds, 375.5 to 378), 10 msec average; series TxTotal, TxBW0, TxBW1, TxBW2. Annotations: too much congestion; high oscillation, not stable; streams compensate each other; too much network flow]

Need to limit bandwidth appropriately

National Institute of Advanced Industrial Science and Technology](https://image.slidesharecdn.com/gfarmfstatebe-tip2004-090926211935-phpapp01/85/Gfarm-Fs-Tatebe-Tip2004-9-320.jpg)

![Summary of technologies for performance

improvement

[Disk I/O performance] Grid Datafarm – A Grid file system with high-performance data-intensive computing support

A world-wide virtual file system that federates local file systems of multiple clusters

It provides scalable disk I/O performance for file replication via high-speed network links and large-scale data-intensive applications

Trans-Pacific Grid Datafarm testbed

5 clusters in Japan, 3 clusters in the US, and 1 cluster in Thailand provide 70 TBytes disk capacity and 13 GB/sec disk I/O performance

It supports file replication for fault tolerance and access-concentration avoidance

[World-wide high-speed network efficient utilization] GNET-1 – a gigabit network testbed device

Provides IFG-based precise rate-controlled flow at any rate

Enables stable and efficient Trans-Pacific network use of HighSpeed TCP

National Institute of Advanced Industrial Science and Technology](https://image.slidesharecdn.com/gfarmfstatebe-tip2004-090926211935-phpapp01/85/Gfarm-Fs-Tatebe-Tip2004-12-320.jpg)
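IFG-based rate control works by stretching the idle gap between Ethernet frames so that frames leave at evenly spaced intervals. The sketch below shows the underlying arithmetic using standard Ethernet framing constants (8 bytes of preamble and start-of-frame delimiter, a 12-byte minimum gap). How GNET-1 itself defines and programs the rate is not described in the slides, so the function names and the choice to count whole frames toward the rate are assumptions.

```python
PREAMBLE_BYTES = 8    # preamble + start-of-frame delimiter
MIN_IFG_BYTES = 12    # standard minimum inter-frame gap


def ifg_for_rate(frame_bytes, line_rate_bps, target_bps):
    """Inter-frame gap (in byte times) that paces frames to target_bps."""
    if not 0 < target_bps <= line_rate_bps:
        raise ValueError("target rate must be in (0, line rate]")
    # Each frame occupies frame + preamble + gap byte times on the wire;
    # solve line_rate * frame / (frame + preamble + gap) = target for gap.
    gap = frame_bytes * line_rate_bps / target_bps - frame_bytes - PREAMBLE_BYTES
    return max(gap, MIN_IFG_BYTES)


def paced_rate(frame_bytes, line_rate_bps, ifg_bytes):
    """Frame-level throughput obtained with a given inter-frame gap."""
    wire_bytes = frame_bytes + PREAMBLE_BYTES + ifg_bytes
    return line_rate_bps * frame_bytes / wire_bytes


# Example: a 6000-byte MTU frame (plus 14-byte header and 4-byte FCS)
# paced to 500 Mbps on a 1 Gbps port.
frame = 6000 + 14 + 4
gap = ifg_for_rate(frame, 1e9, 500e6)
rate = paced_rate(frame, 1e9, gap)
print(f"gap: {gap:.0f} byte times, rate: {rate / 1e6:.1f} Mbps")
```

Because the gap is applied per frame, the flow never bursts above the target rate even over very short intervals, which is what distinguishes this kind of pacing from a long-term average rate limit.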

![Trans-Pacific Grid Datafarm testbed:

Network and cluster configuration

Trans-Pacific theoretical peak 3.9 Gbps

Gfarm disk capacity 70 TBytes

disk read/write 13 GB/sec

[Network diagram. Sites and exchange points: Titech, Univ Tsukuba, KEK, Maffin, AIST, NII, Tokyo XP, Los Angeles, Chicago, New York, Phoenix (SC2003), SDSC, Indiana Univ, Kasetsart Univ (Thailand). Networks: SuperSINET, Tsukuba WAN, APAN, APAN/TransPAC, Abilene. Cluster labels: 147 nodes, 16 TBytes, 4 GB/sec; 10 nodes, 1 TBytes, 300 MB/sec; 7 nodes, 3.7 TBytes, 200 MB/sec; 32 nodes, 23.3 TBytes, 2 GB/sec; 16 nodes, 11.7 TBytes, 1 GB/sec (two clusters). Link labels: 10G, 5G, 2.4G, 2.4G(1G), 1G, OC-12 ATM 622M. Bracketed rates: 2.34 Gbps, 950 Mbps, 500 Mbps]

National Institute of Advanced Industrial Science and Technology](https://image.slidesharecdn.com/gfarmfstatebe-tip2004-090926211935-phpapp01/85/Gfarm-Fs-Tatebe-Tip2004-13-320.jpg)
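The three bracketed rates on this diagram (2.34 Gbps, 950 Mbps, 500 Mbps) add up to the 3.79 Gbps stable rate quoted in the talk. The sketch below checks that sum and compares each rate with a link capacity. Pairing each bracketed rate with a particular route is an assumption inferred from where the labels sit in the diagram, and the route names are shorthand chosen here.

```python
# (bracketed rate in Mbps, usable capacity in Mbps) per route.
# The pairing of rates with routes is assumed, not stated on the slide.
routes = {
    "APAN/TransPAC OC-48 (Los Angeles)": (2340, 2400),
    "SuperSINET (1 Gbps available)": (950, 1000),
    "APAN/TransPAC OC-12 ATM (Chicago)": (500, 500),
}

total_mbps = sum(rate for rate, _ in routes.values())
capacity_mbps = sum(cap for _, cap in routes.values())

for name, (rate, cap) in routes.items():
    print(f"{name}: {rate} / {cap} Mbps ({rate / cap:.1%})")
print(f"total: {total_mbps / 1000:.2f} of {capacity_mbps / 1000:.1f} Gbps")
```

If the pairing is right, every route runs within a few percent of its usable capacity, and no single link accounts for the gap between 3.79 and 3.9 Gbps.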

![Scientific Data for Bandwidth Challenge

Trans-Pacific File Replication of scientific data

For transparent, high-performance, and fault-tolerant access

Astronomical Object Survey on Grid Datafarm [HPC Challenge participant]

World-wide data analysis on the whole archive

652 GBytes data observed by SUBARU telescope

N. Yamamoto (AIST)

Large configuration data from Lattice QCD

Three sets of hundreds of gluon field configurations on a 24^3*48 4-D space-time lattice (3 sets x 364.5 MB x 800 = 854.3 GB)

Generated by the CP-PACS parallel computer at the Center for Computational Physics, Univ. of Tsukuba (300 Gflops x years of CPU time) [Univ Tsukuba Booth]

National Institute of Advanced Industrial Science and Technology](https://image.slidesharecdn.com/gfarmfstatebe-tip2004-090926211935-phpapp01/85/Gfarm-Fs-Tatebe-Tip2004-14-320.jpg)
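The Lattice QCD data volume can be reproduced from the lattice dimensions. The sketch below assumes that each configuration stores one full 3x3 complex matrix in double precision for each of the four link directions at every site; the slide does not state the storage format, but this assumption yields exactly 364.5 MB per configuration when MB and GB are read as binary units (2^20 and 2^30 bytes).

```python
# Lattice dimensions from the slide: 24^3 x 48.
sites = 24**3 * 48
links = sites * 4                      # four link directions per site

# Assumed storage: full 3x3 complex matrix, double precision.
bytes_per_link = 3 * 3 * 2 * 8         # 9 complex numbers, 16 bytes each
config_bytes = links * bytes_per_link

config_mib = config_bytes / 2**20
total_gib = 3 * 800 * config_mib / 1024   # 3 sets x 800 configurations

print(f"sites: {sites}, per configuration: {config_mib} MiB")
print(f"total: {total_gib:.1f} GiB")
```

Under this assumption both the per-configuration size and the 854.3 GB total on the slide are recovered, which suggests the slide uses binary prefixes for this data set.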

![Network bandwidth in APAN/TransPAC

LA route

RTT: 141 ms

[Diagram: PCs behind switches on both the LA and Tokyo sides, connected through GNET-1, an FC10 E600 switch, and a Juniper M20 router; link labels 3G, 10G, 2.4G]

Stable transfer rate of 2.3 Gbps

[Plot: bandwidth in Gbps, no pacing versus pacing at 2.3 Gbps (900 + 900 + 500)]

National Institute of Advanced Industrial Science and Technology](https://image.slidesharecdn.com/gfarmfstatebe-tip2004-090926211935-phpapp01/85/Gfarm-Fs-Tatebe-Tip2004-15-320.jpg)
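With a 141 ms round-trip time, the amount of data in flight at these rates is large, which helps explain why unpaced TCP streams oscillate on this route. The sketch below computes the bandwidth-delay product for the three paced flows shown on the slide (900 + 900 + 500 Mbps). Relating this to TCP window size is standard reasoning rather than something stated in the talk, and the variable names are illustrative.

```python
rtt_s = 0.141                     # round-trip time from the slide
flows_mbps = [900, 900, 500]      # paced flows from the slide

total_mbps = sum(flows_mbps)

# Bandwidth-delay product: bytes that must be in flight to keep a
# flow running at its paced rate for one round trip.
bdp_mb = [rate * 1e6 * rtt_s / 8 / 1e6 for rate in flows_mbps]
total_bdp_mb = sum(bdp_mb)

for rate, bdp in zip(flows_mbps, bdp_mb):
    print(f"{rate} Mbps flow: about {bdp:.1f} MB in flight")
print(f"total: {total_mbps / 1000:.1f} Gbps, about {total_bdp_mb:.1f} MB in flight")
```

Each 900 Mbps flow needs roughly 16 MB in flight, so a single loss event that halves a window removes a large amount of throughput and takes many round trips to recover; pacing the flows to a sum just below the 2.4 Gbps link rate avoids triggering such losses in the first place.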