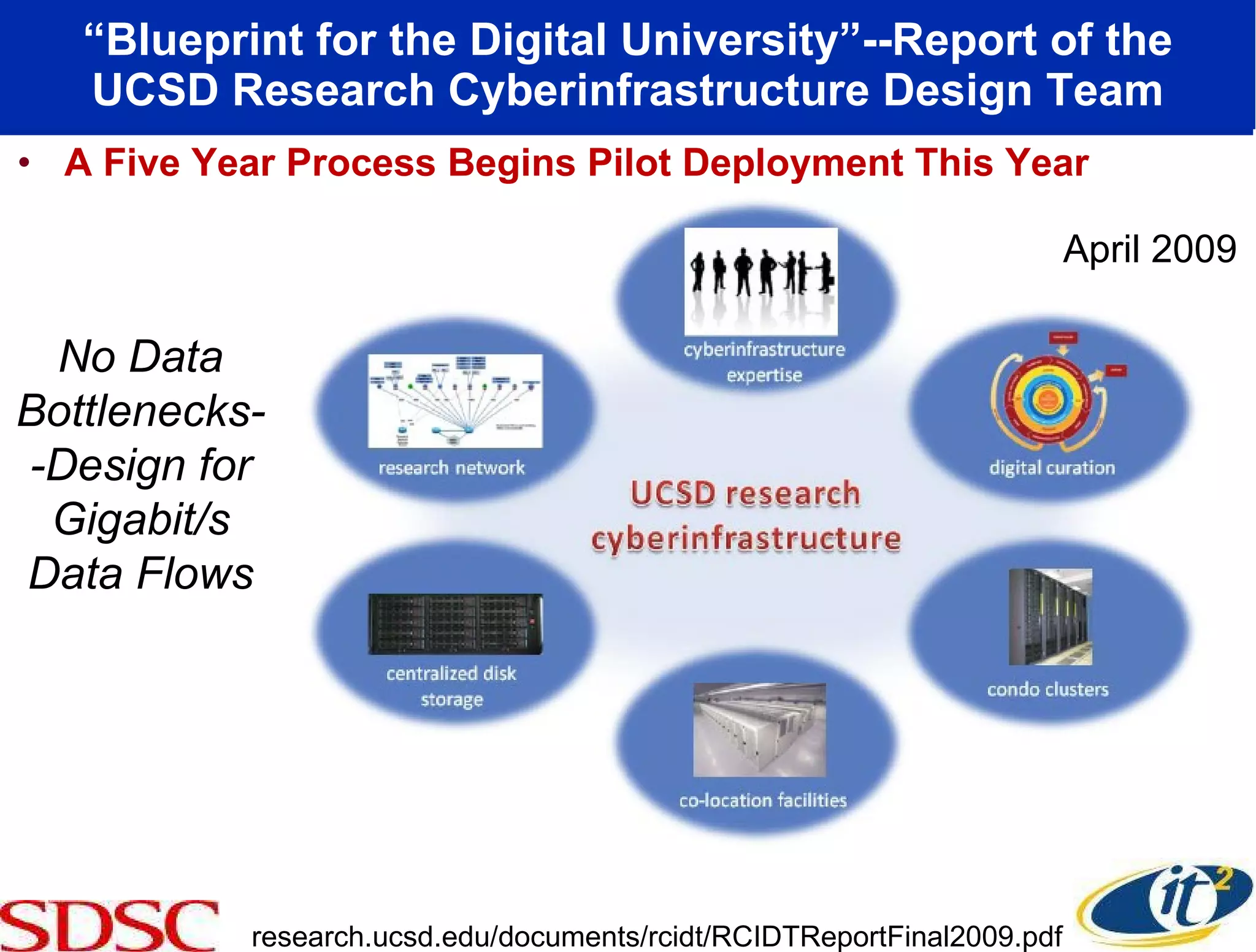

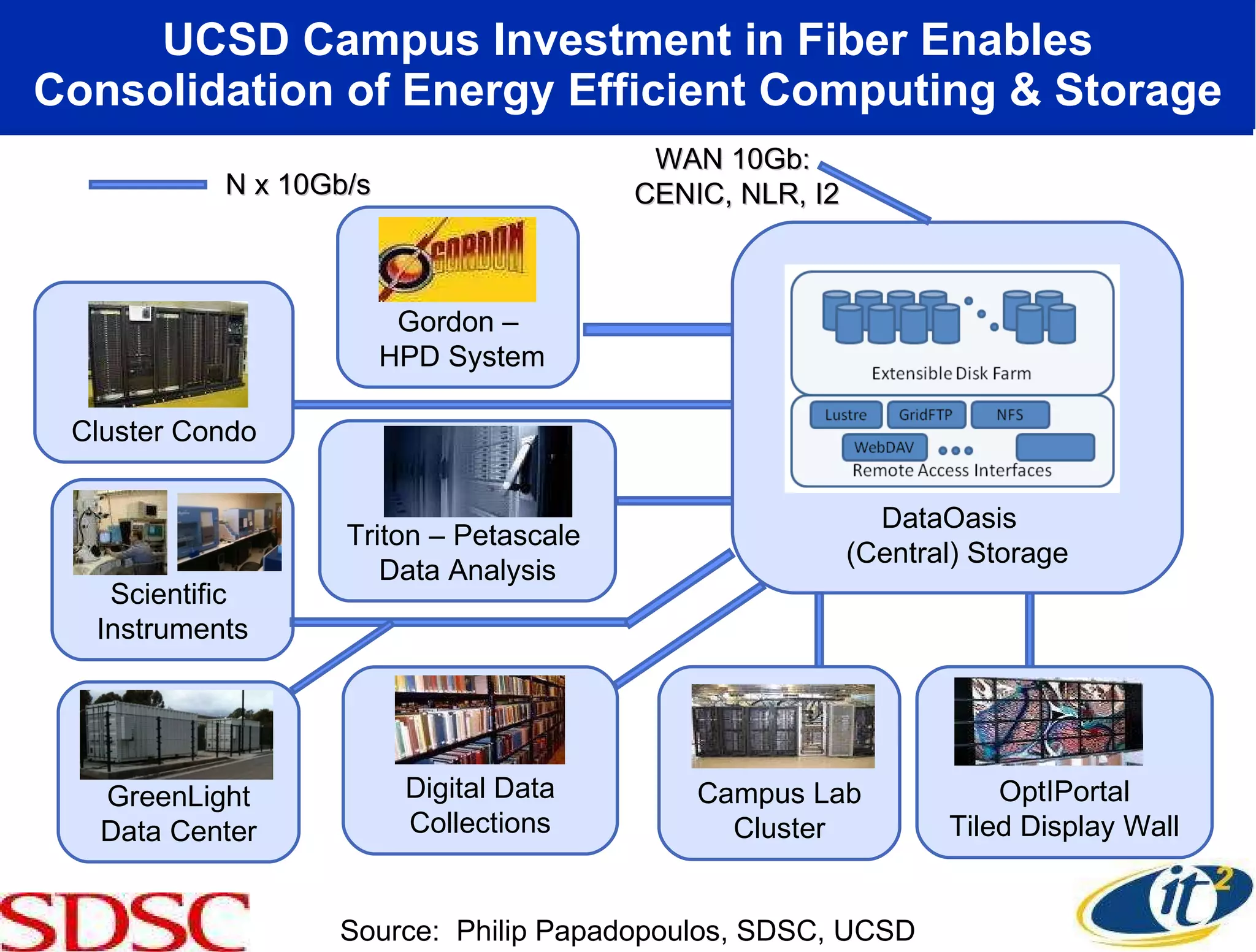

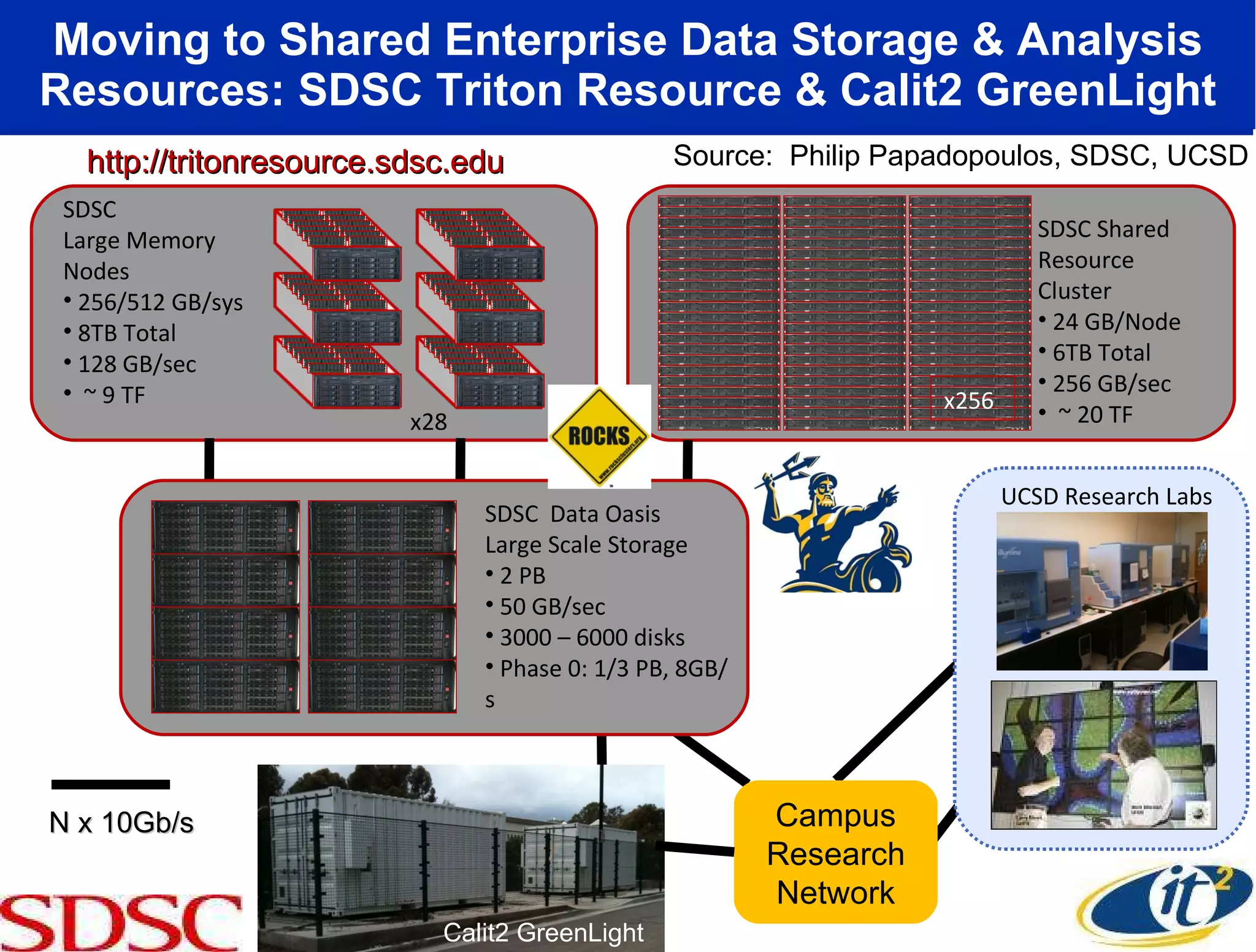

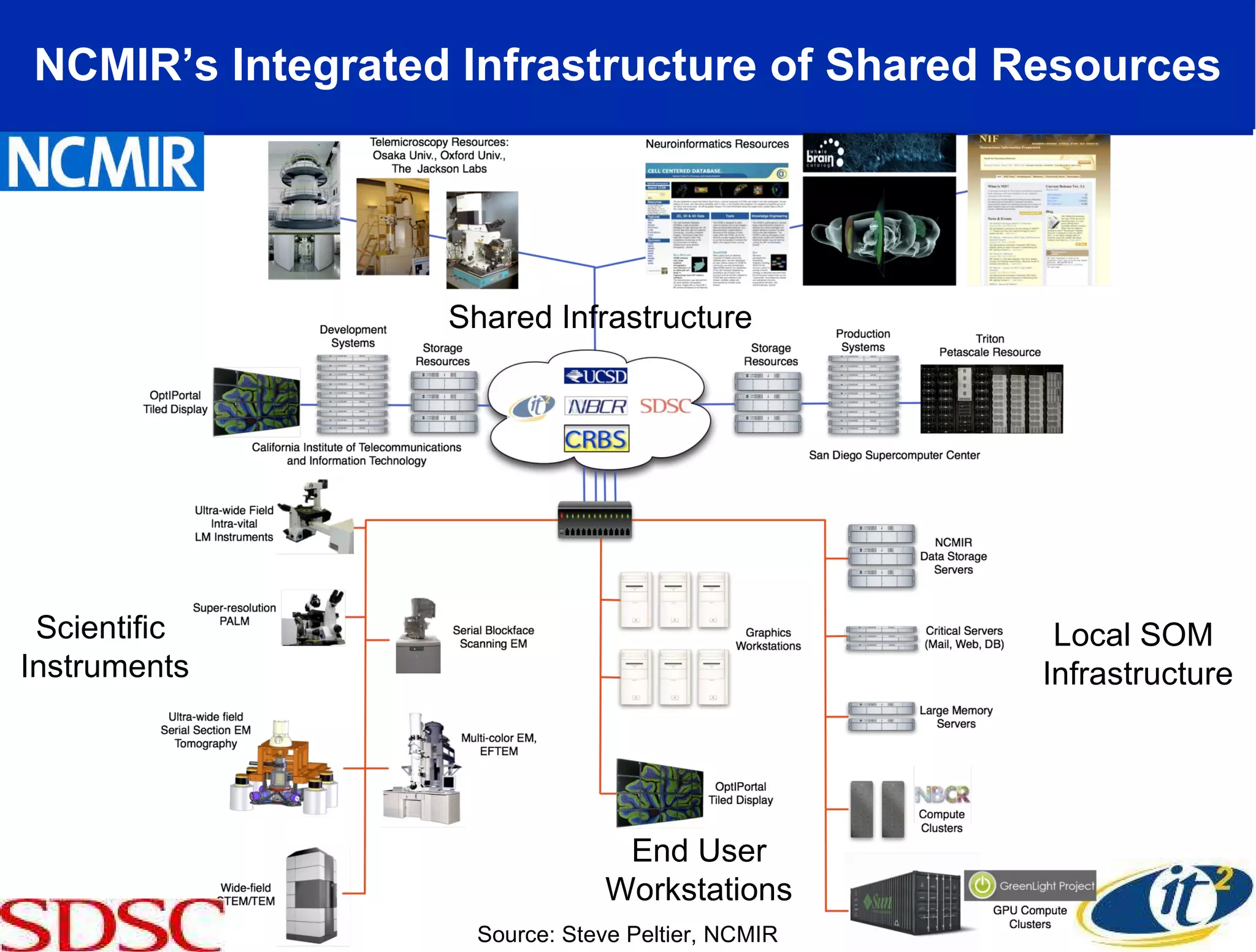

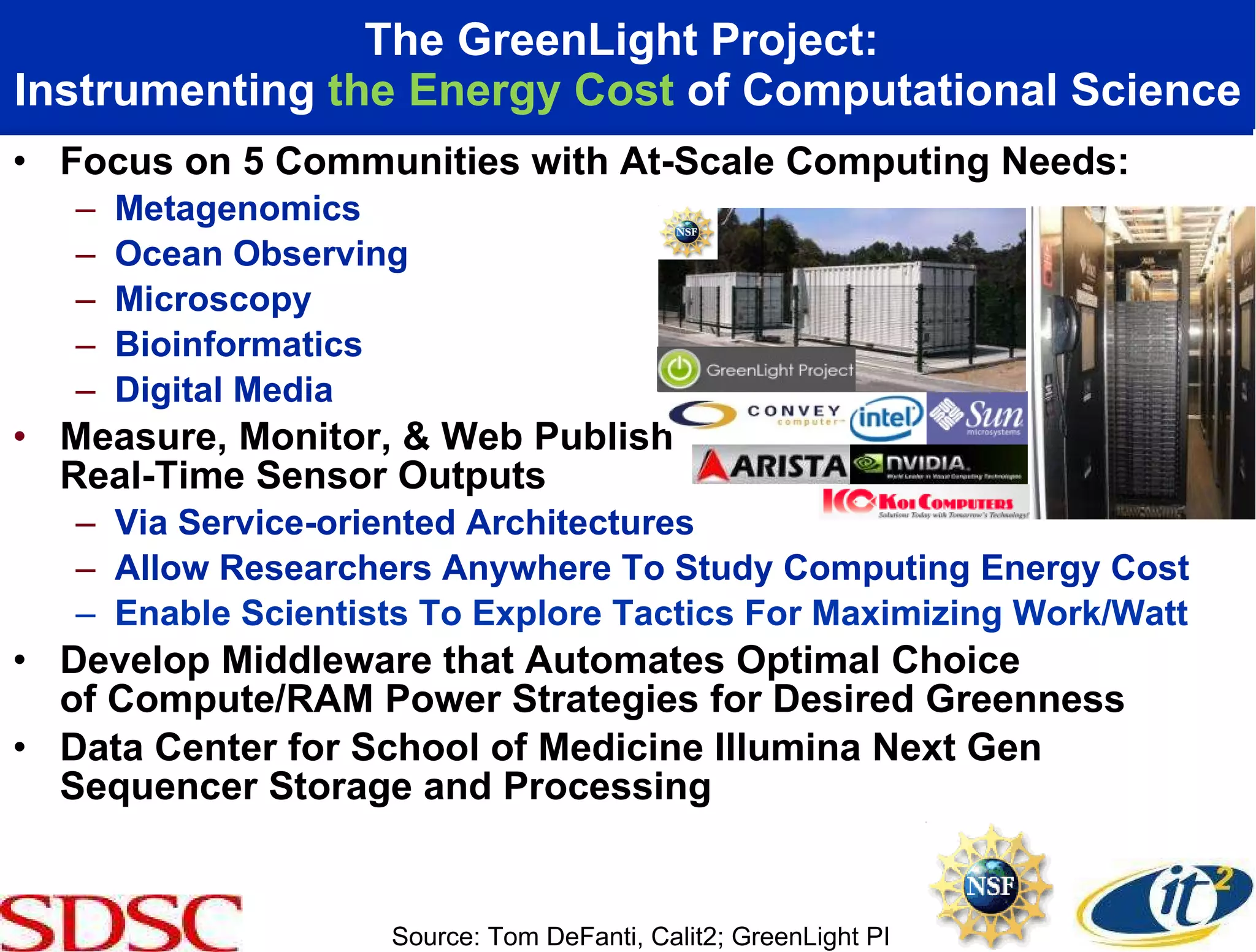

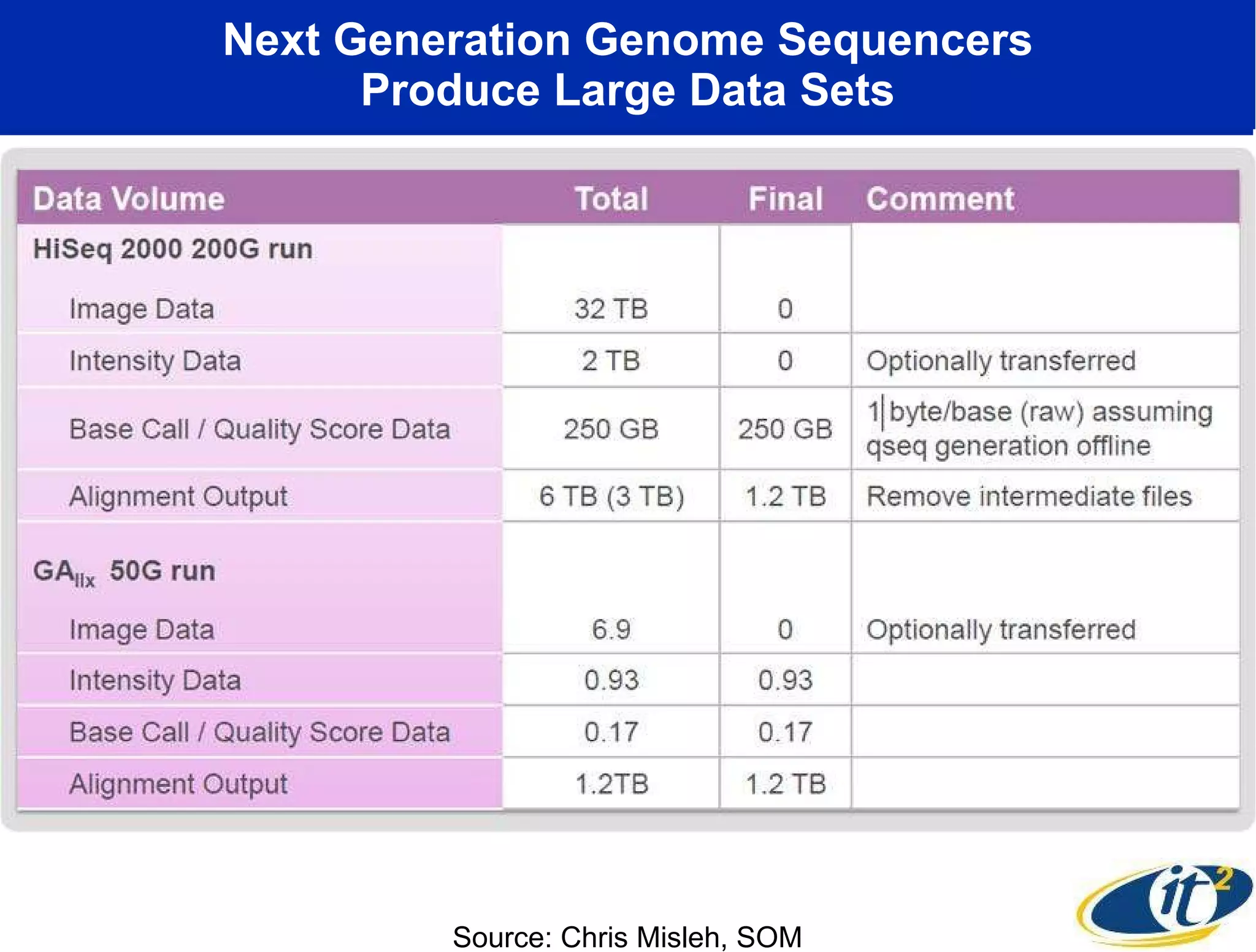

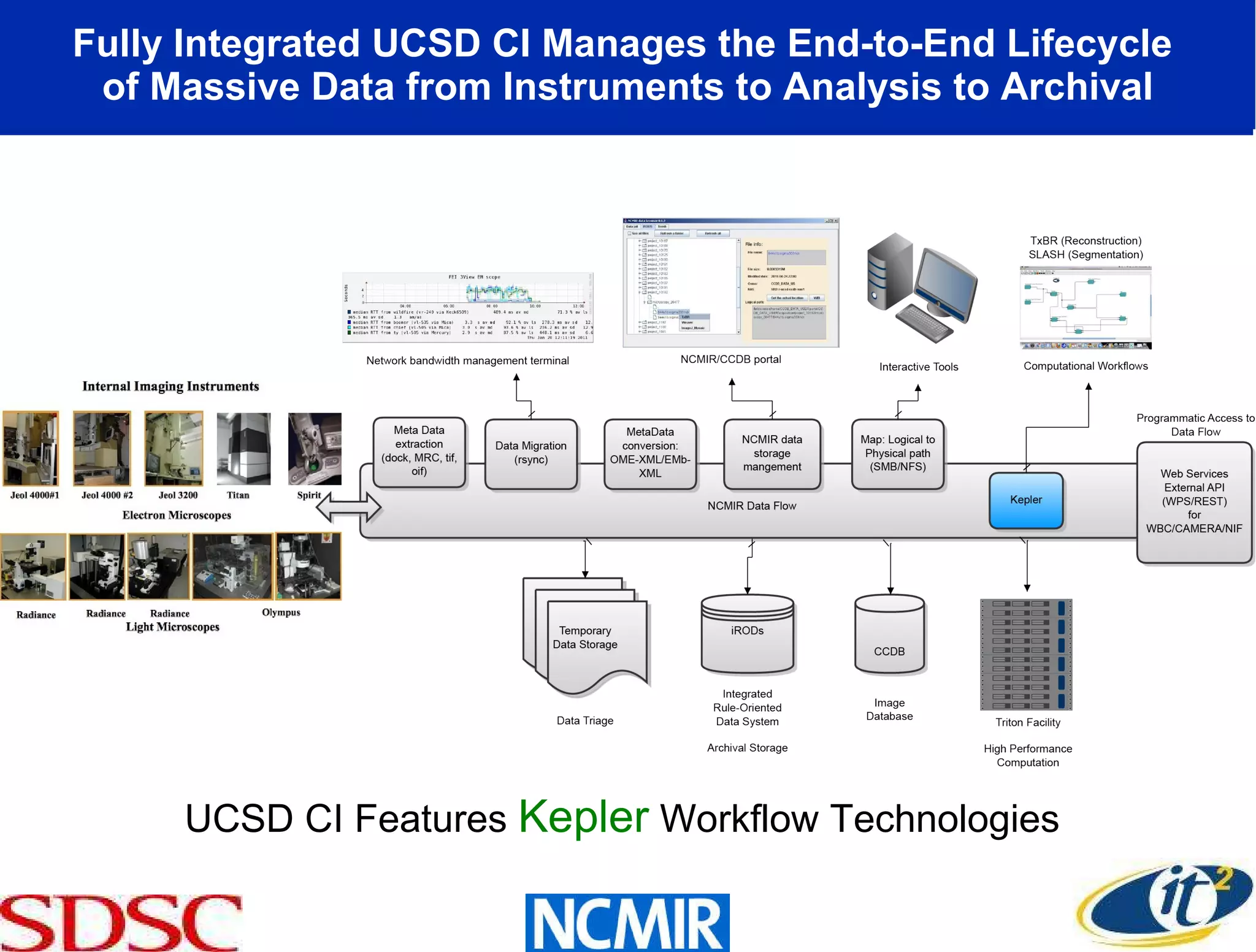

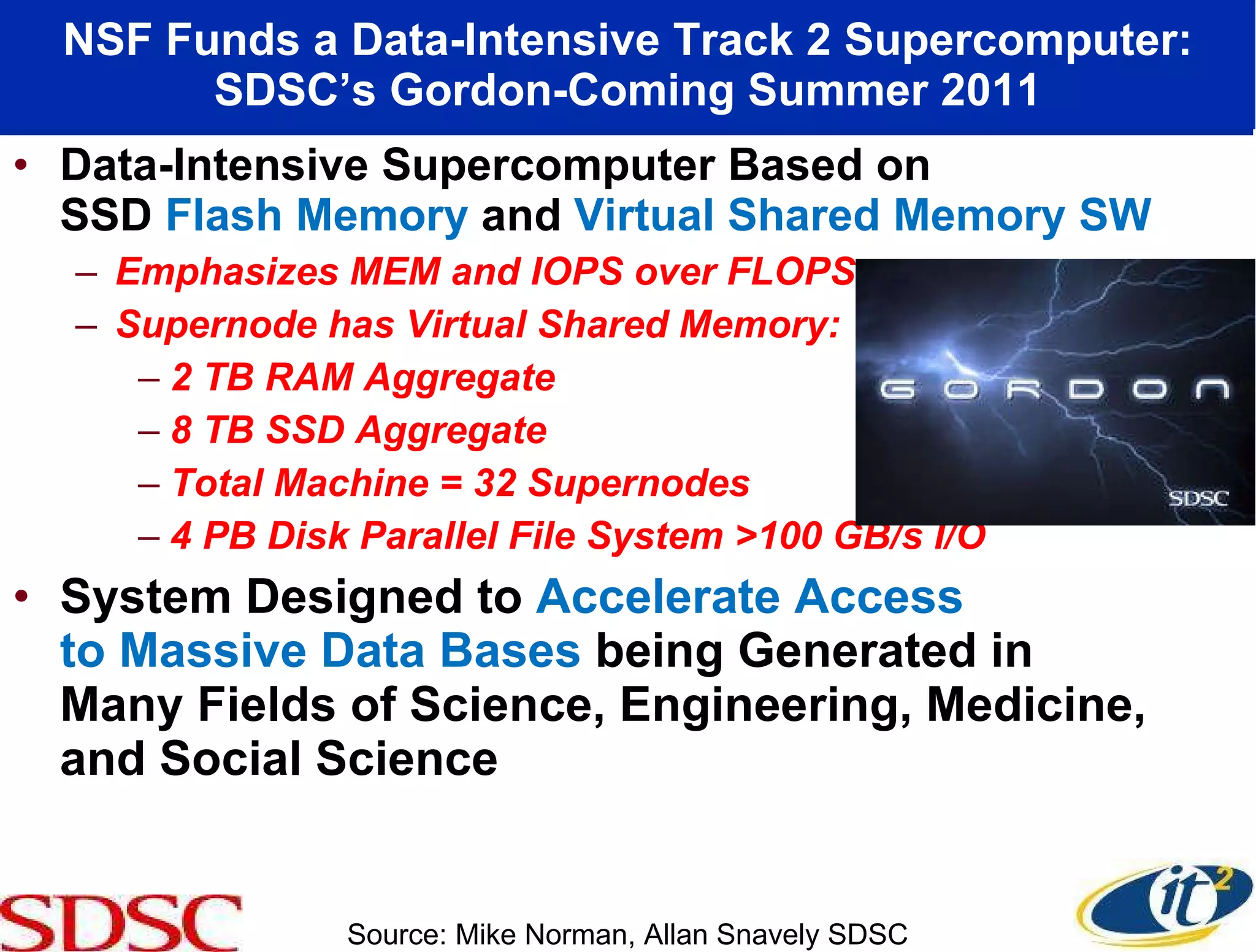

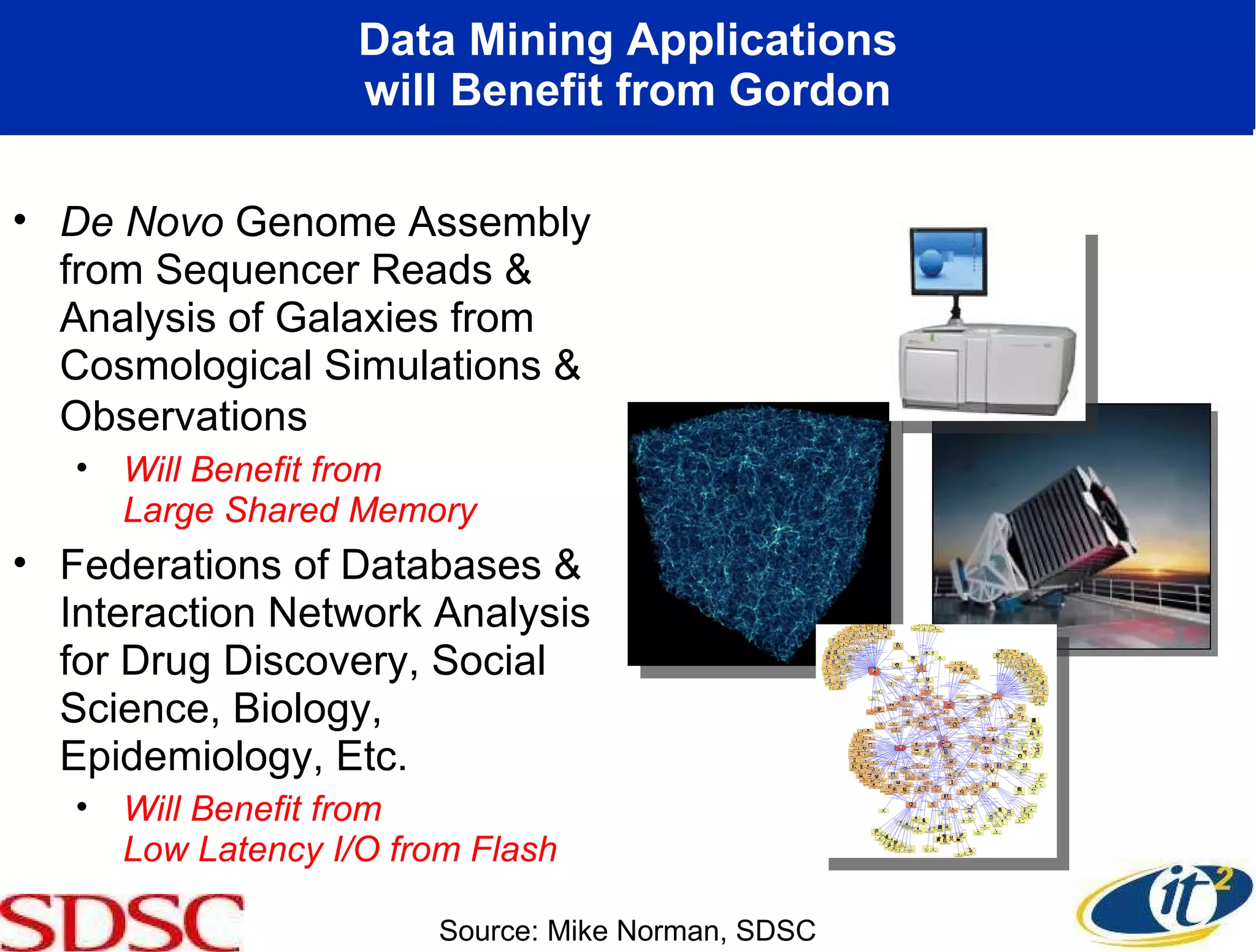

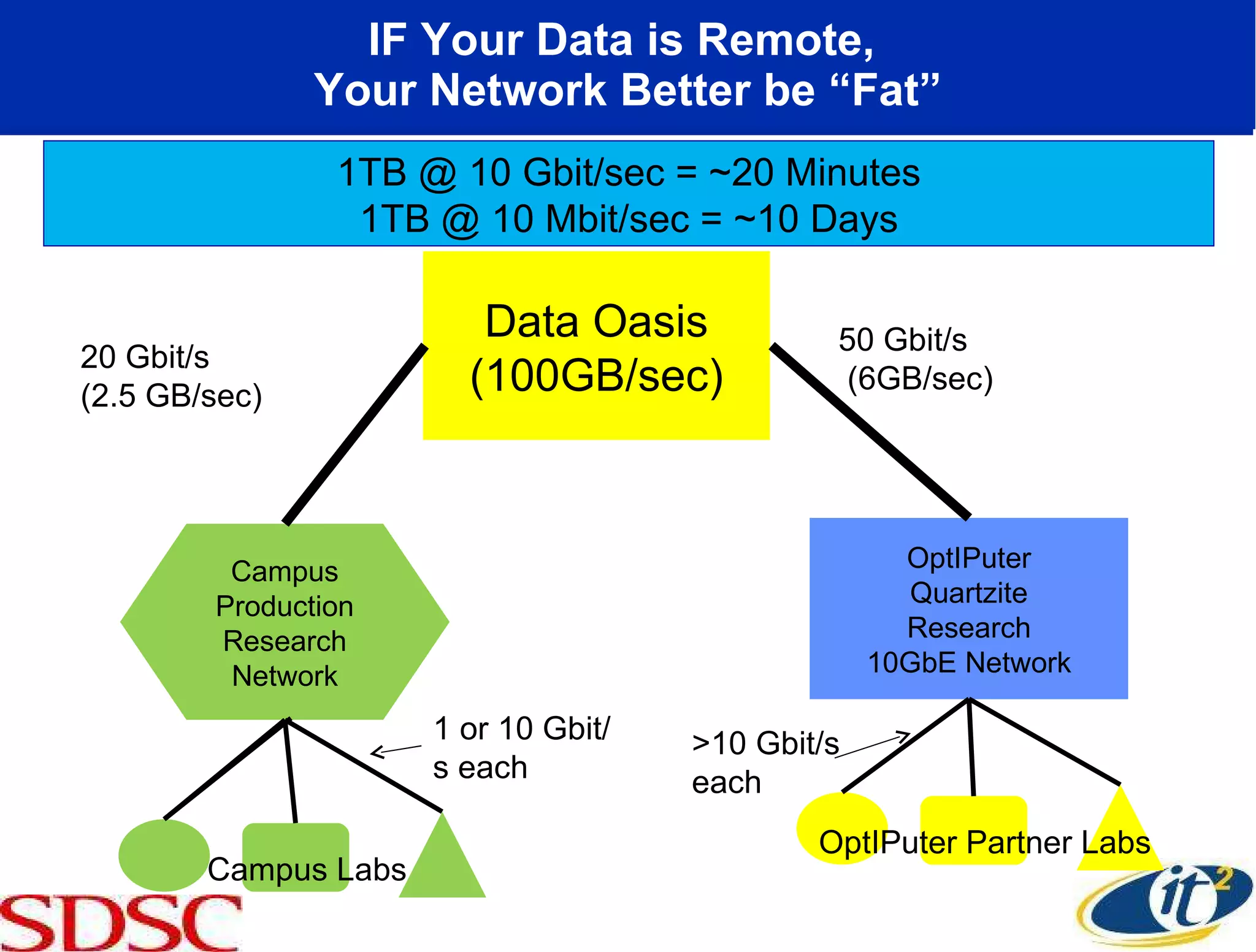

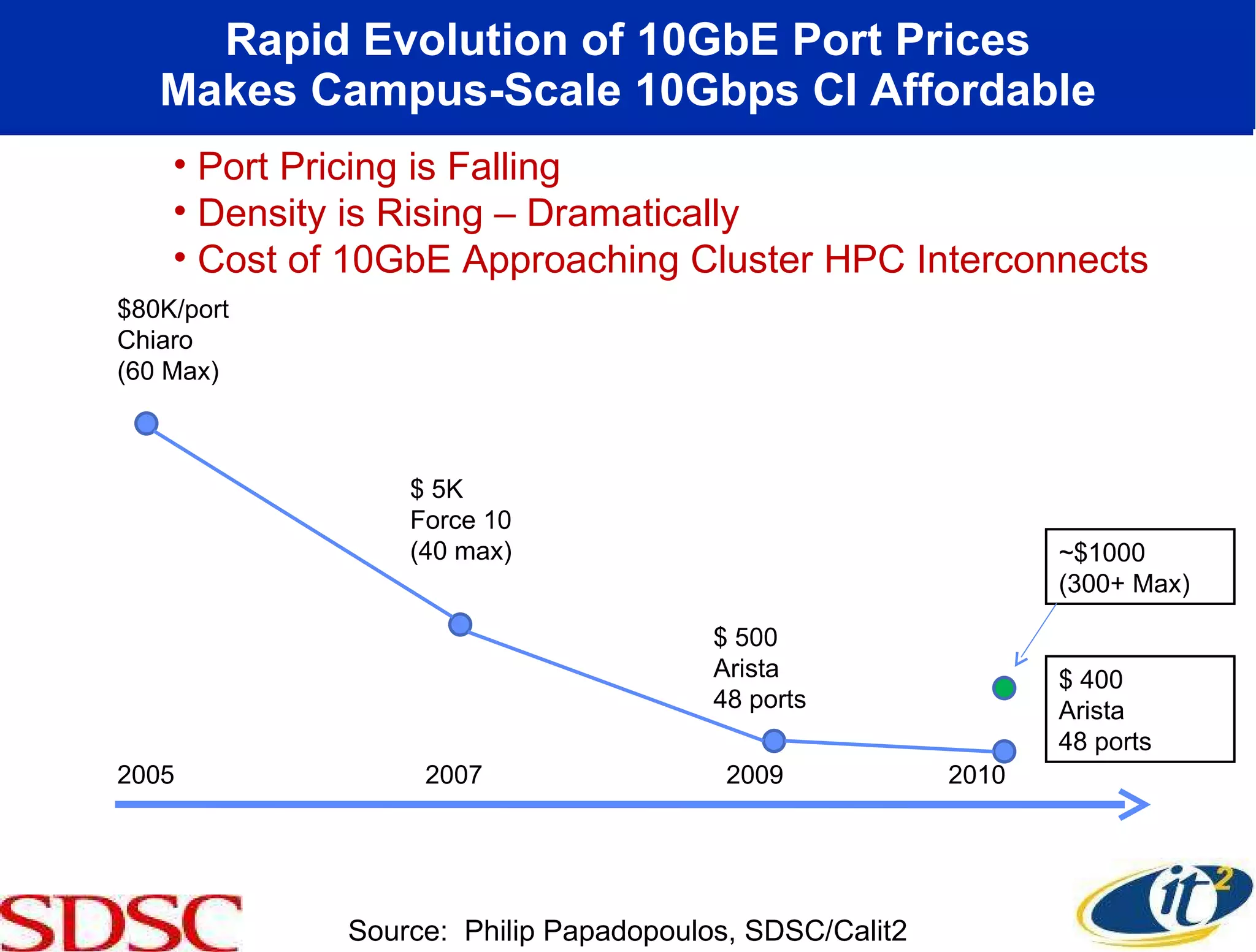

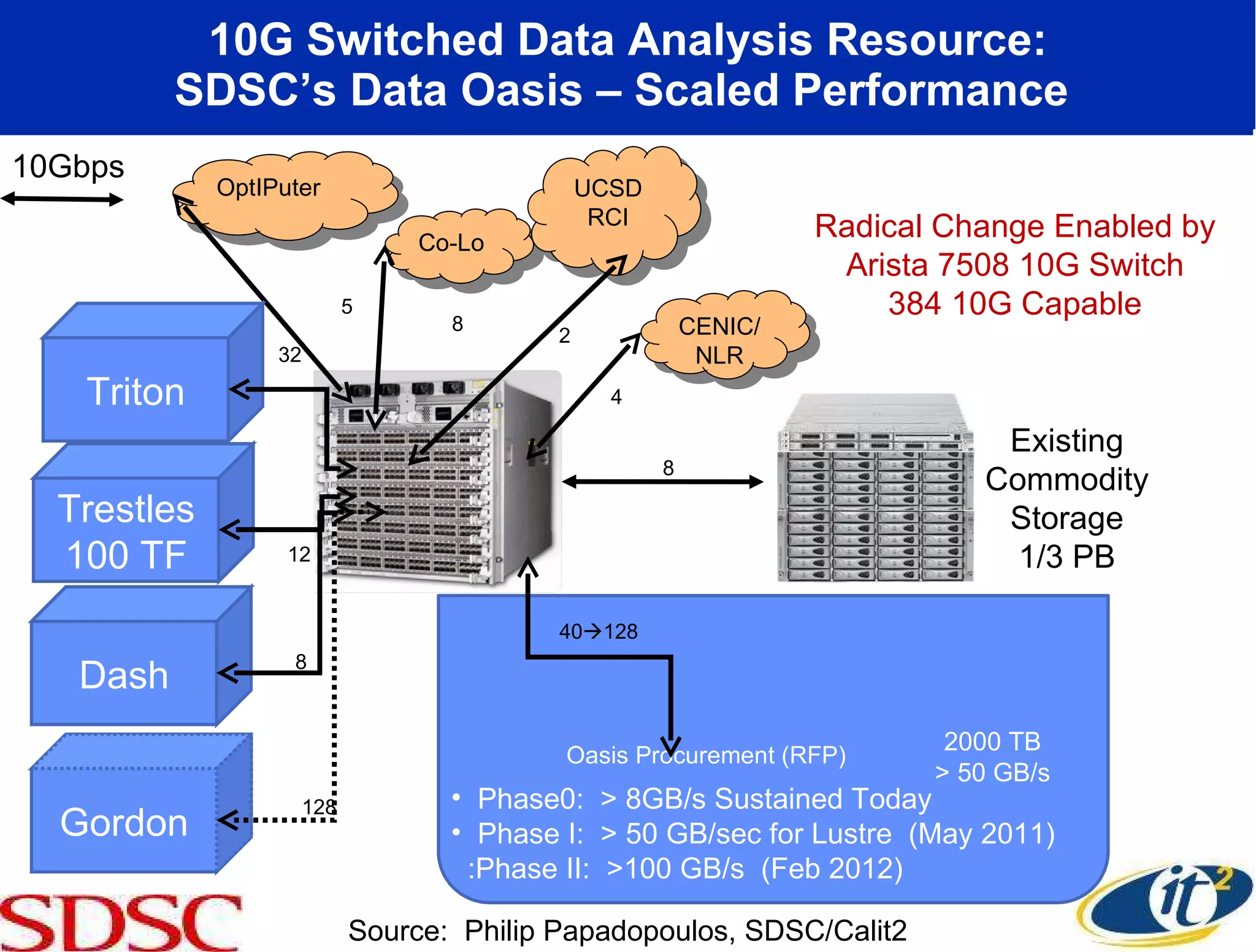

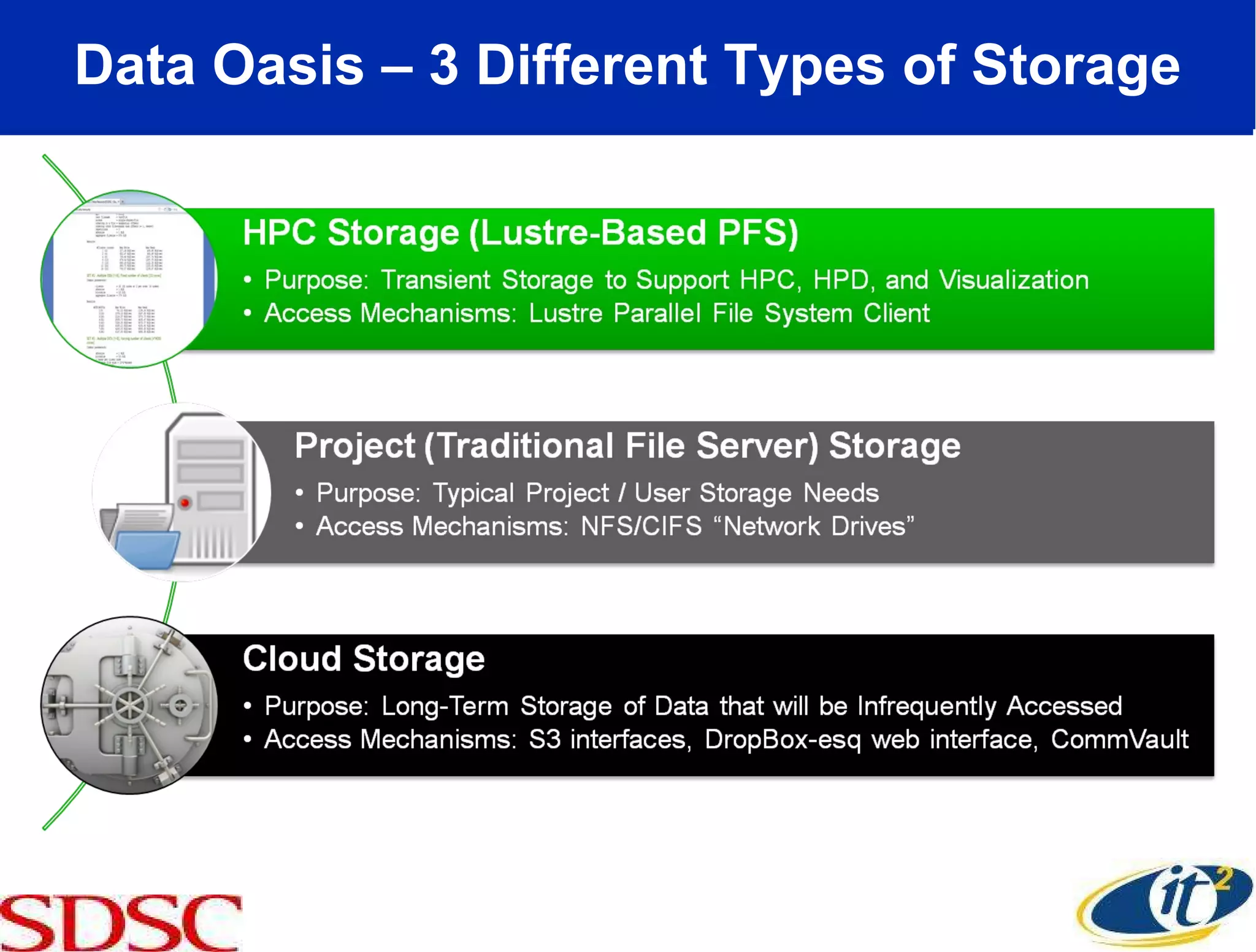

The document describes advances in high-performance cyberinfrastructure at UC San Diego that aim to enable data-driven science in stem cell research and other fields. It highlights the deployment of a 10 Gbps network alongside computational resources, such as the Triton data analysis facility and shared storage, that address the challenge of processing massive datasets. It also outlines collaborative efforts and ongoing projects intended to strengthen research capabilities through optimized data storage and analysis.