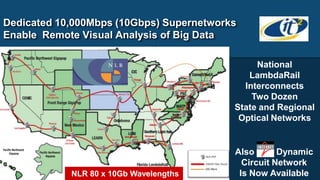

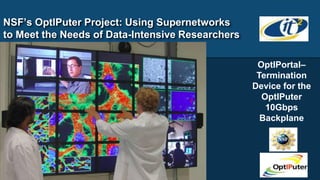

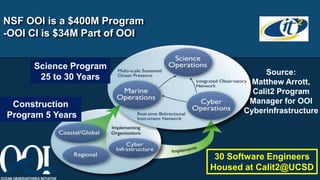

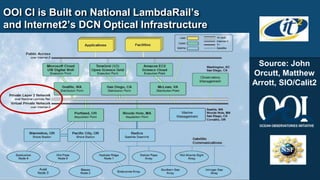

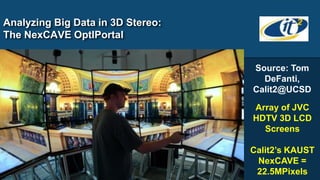

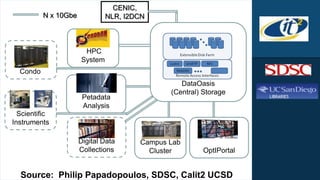

This document discusses how high-performance cyberinfrastructure and dedicated 10 Gbps networks enable new levels of discovery in data-intensive research. It gives examples of universities using these resources for projects in fields such as cosmology, ocean observing, and microbial analysis. Specifically, it describes how networks such as National LambdaRail connect campuses and commercial clouds, and how tools such as OptIPortals let researchers remotely analyze large datasets in real time through visualization.