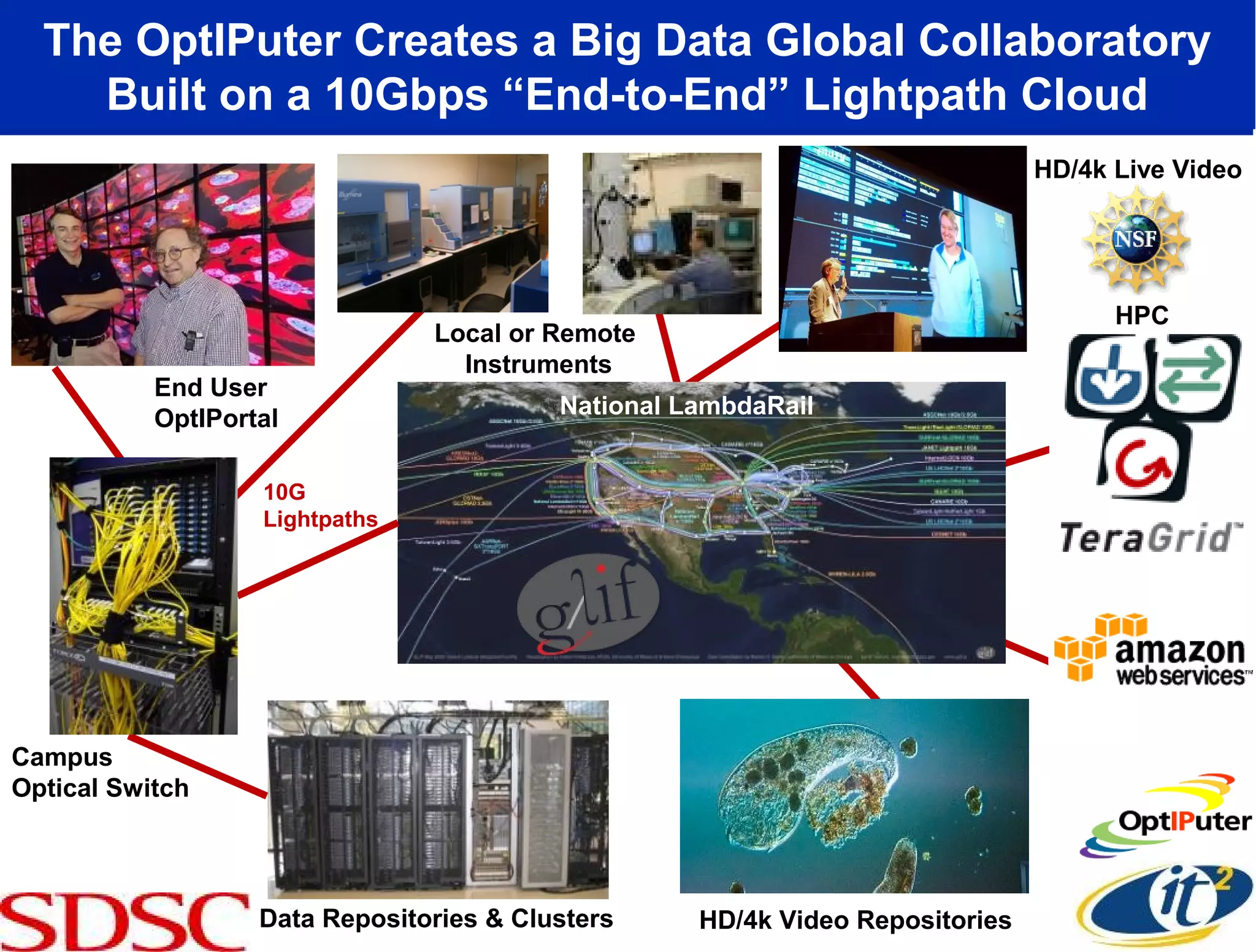

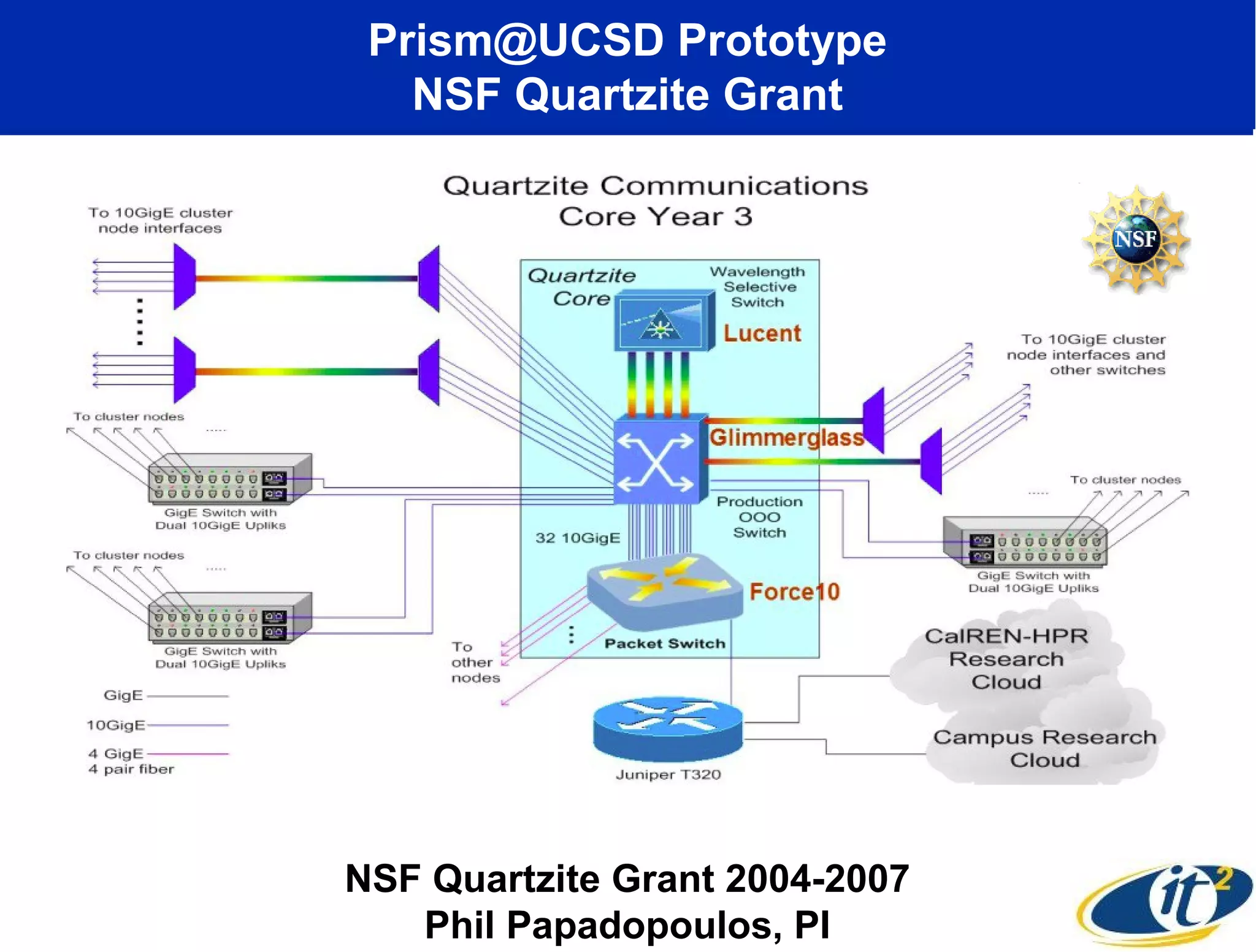

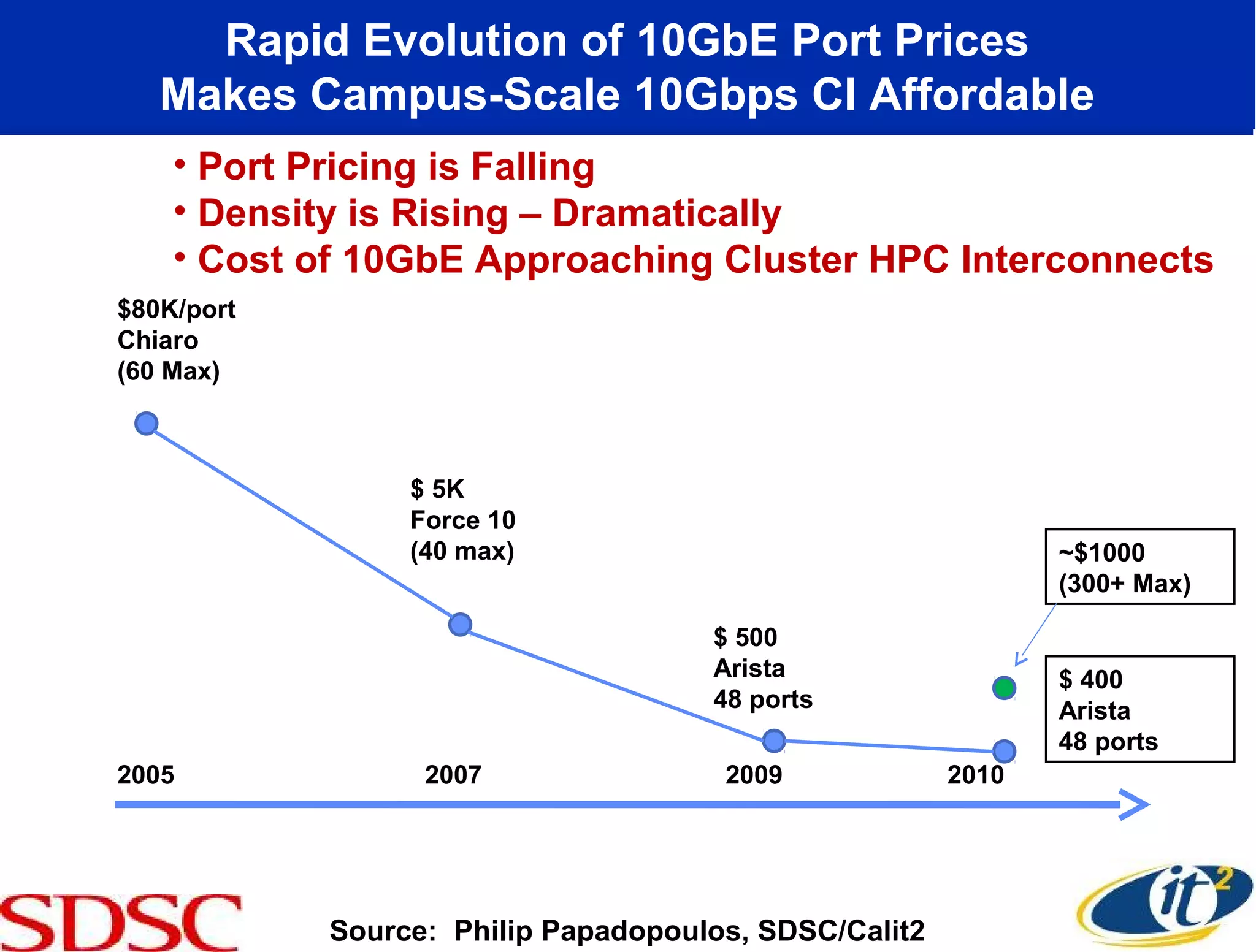

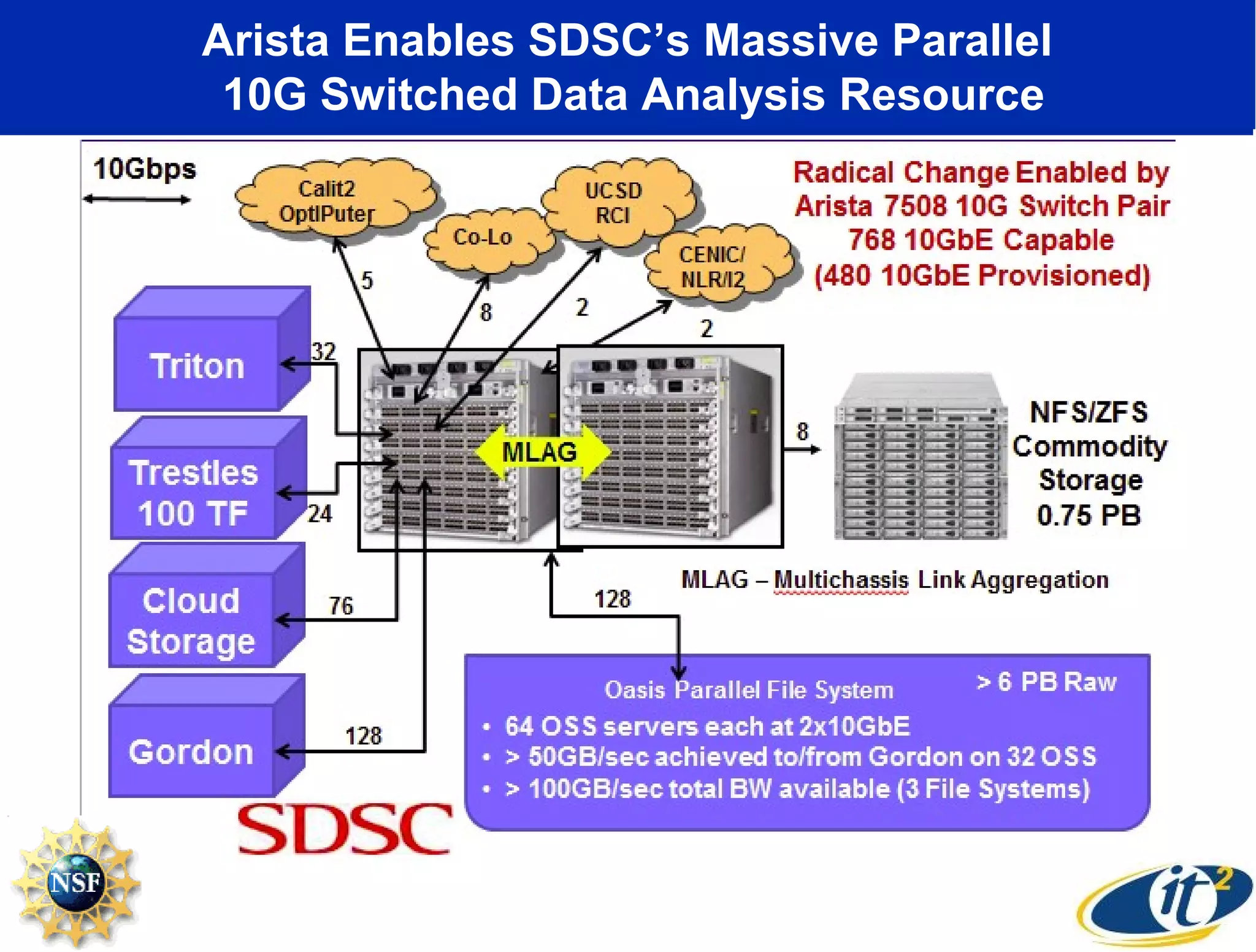

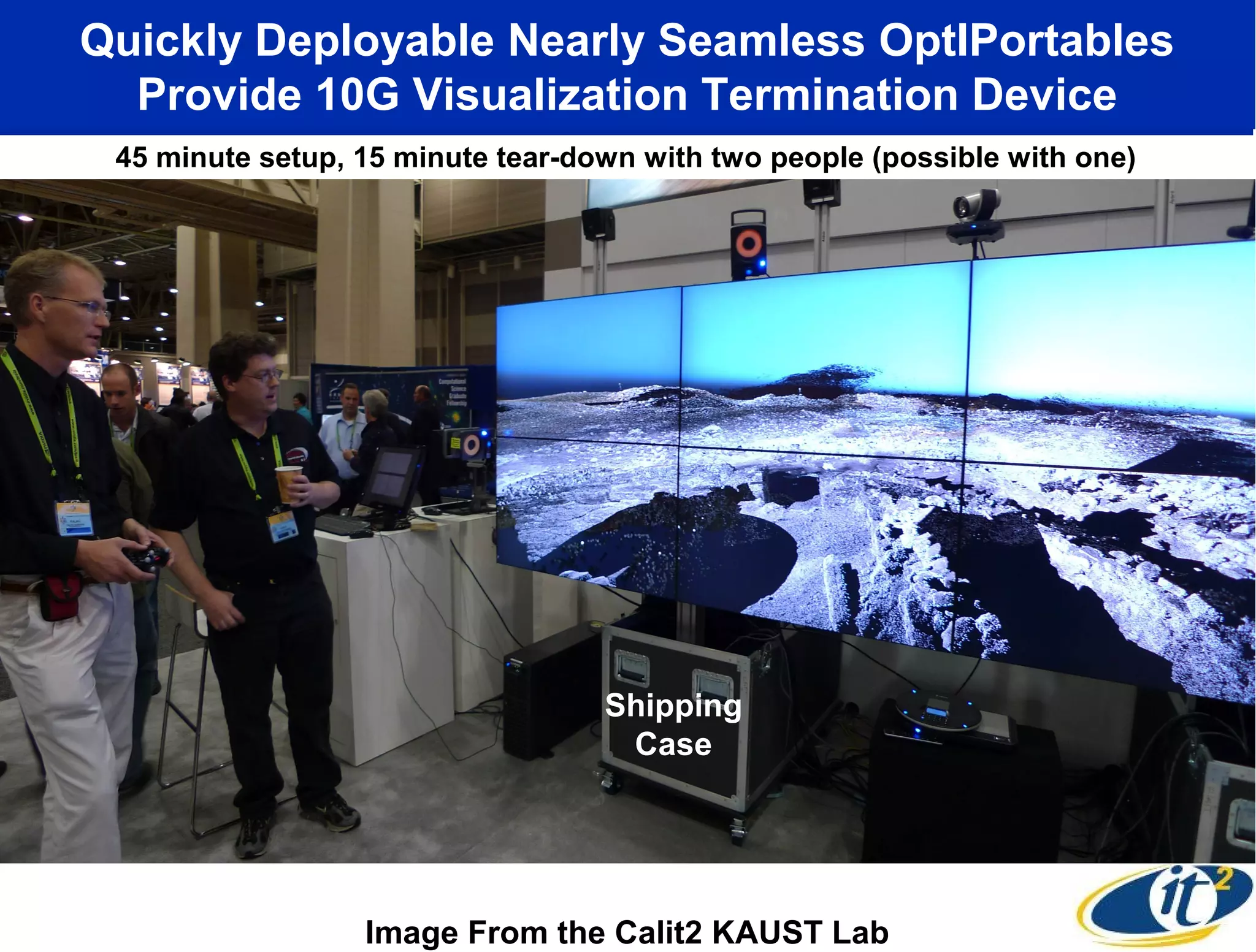

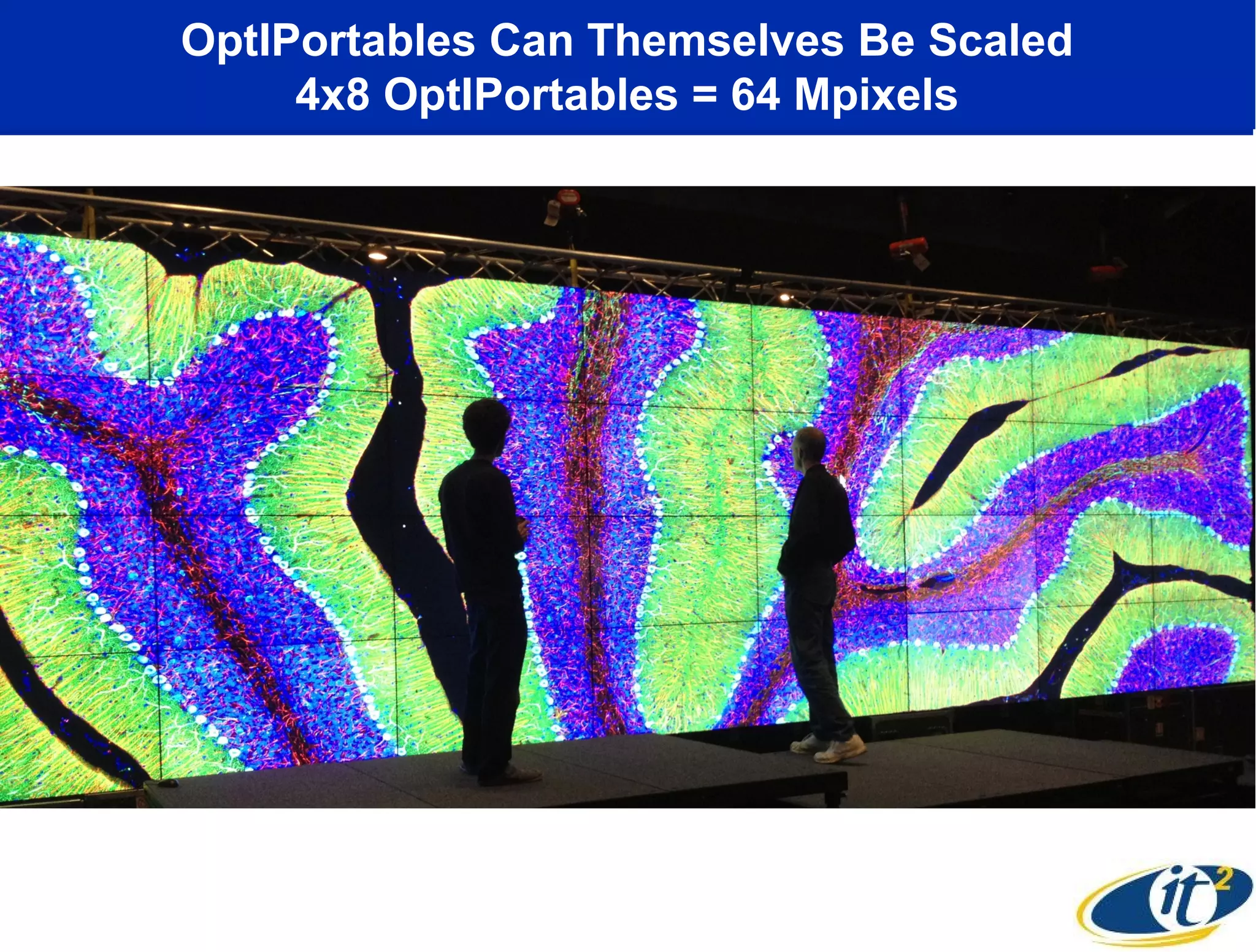

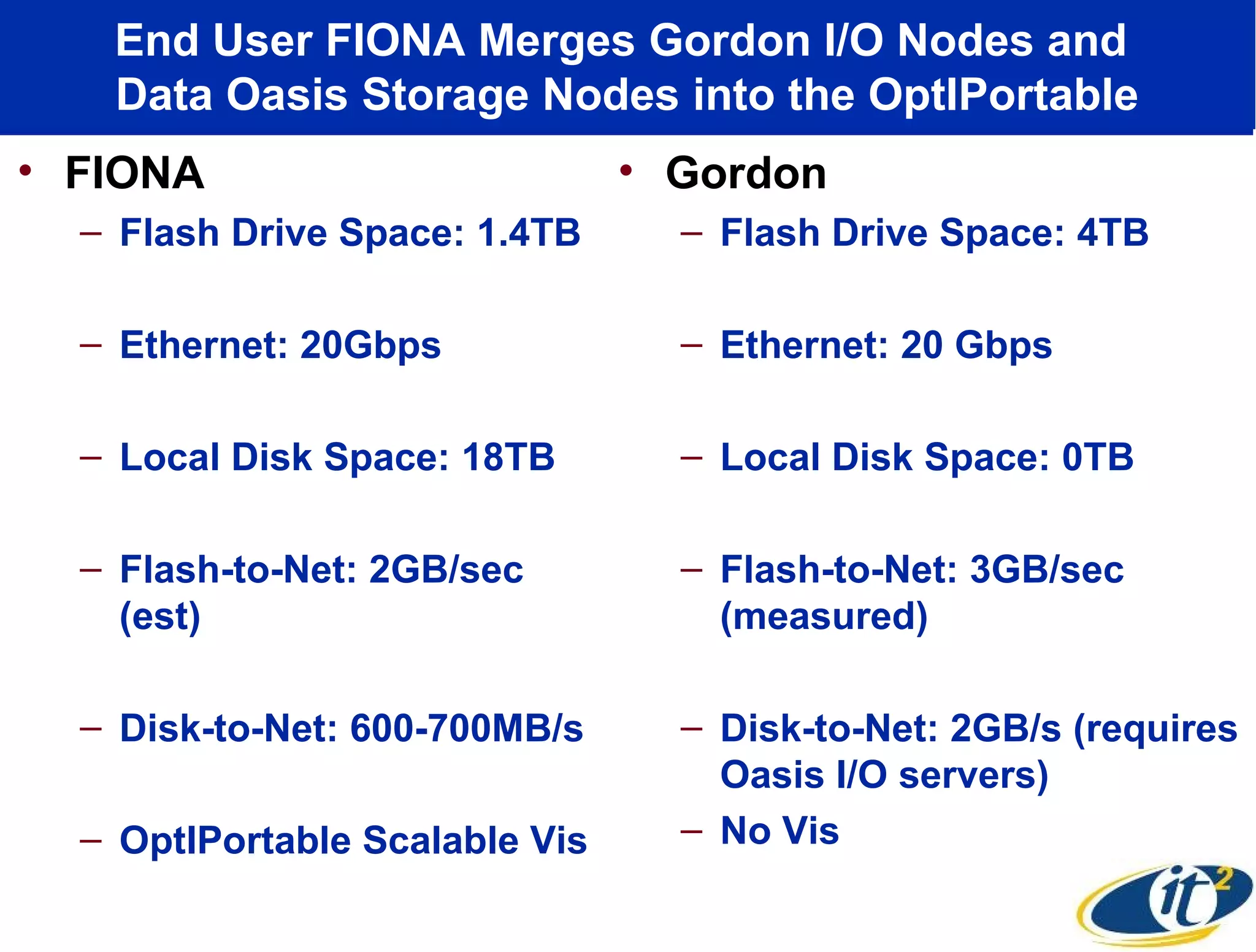

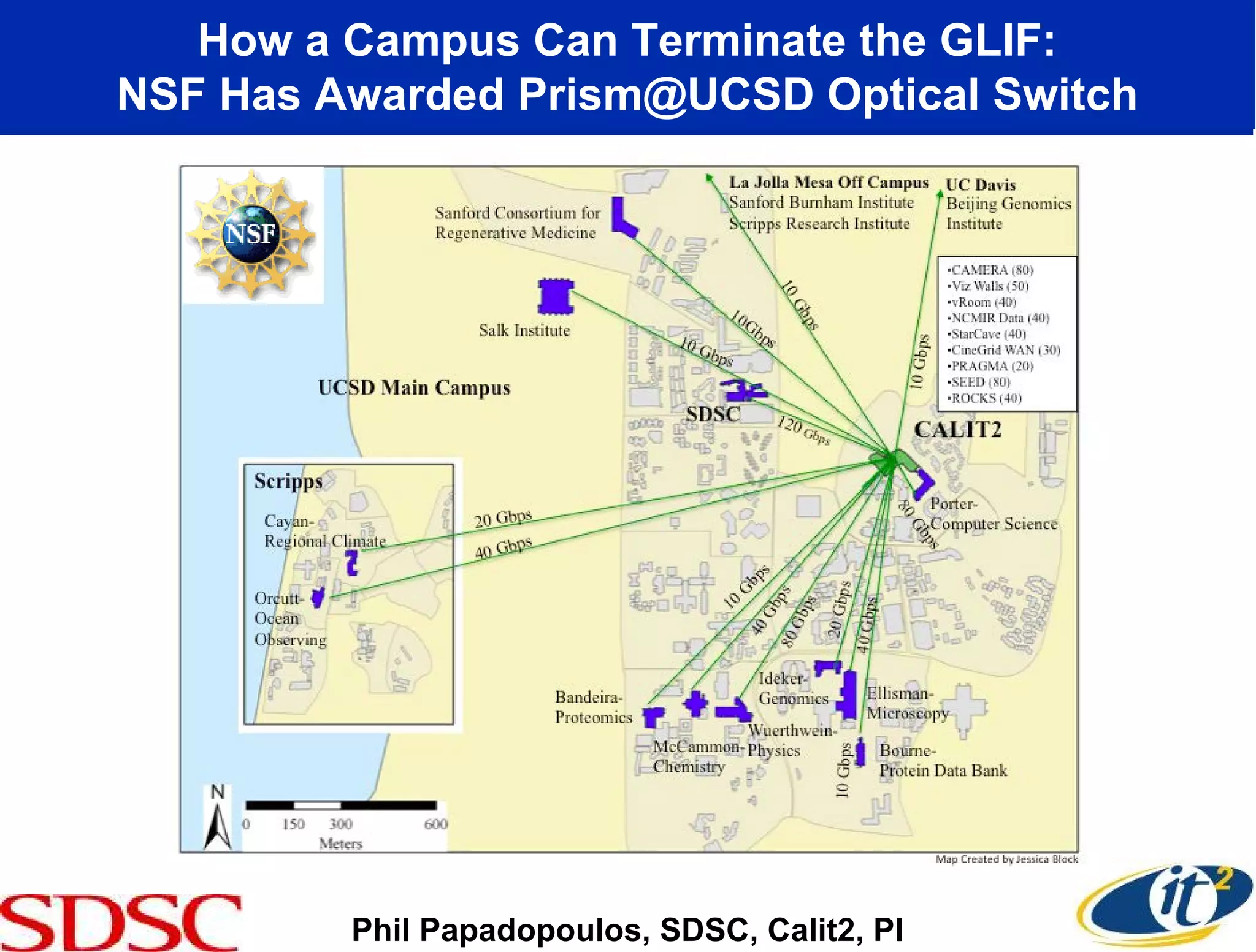

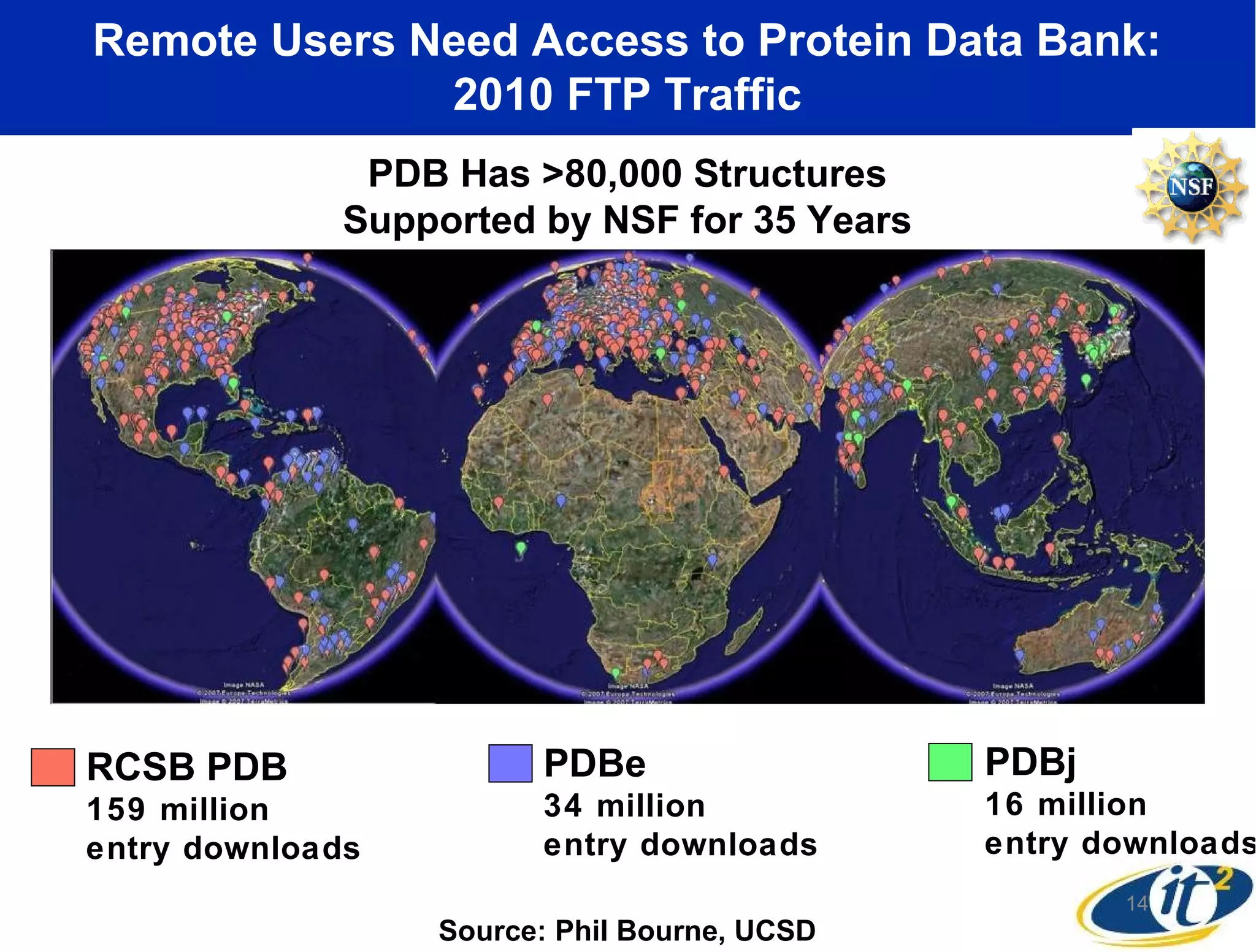

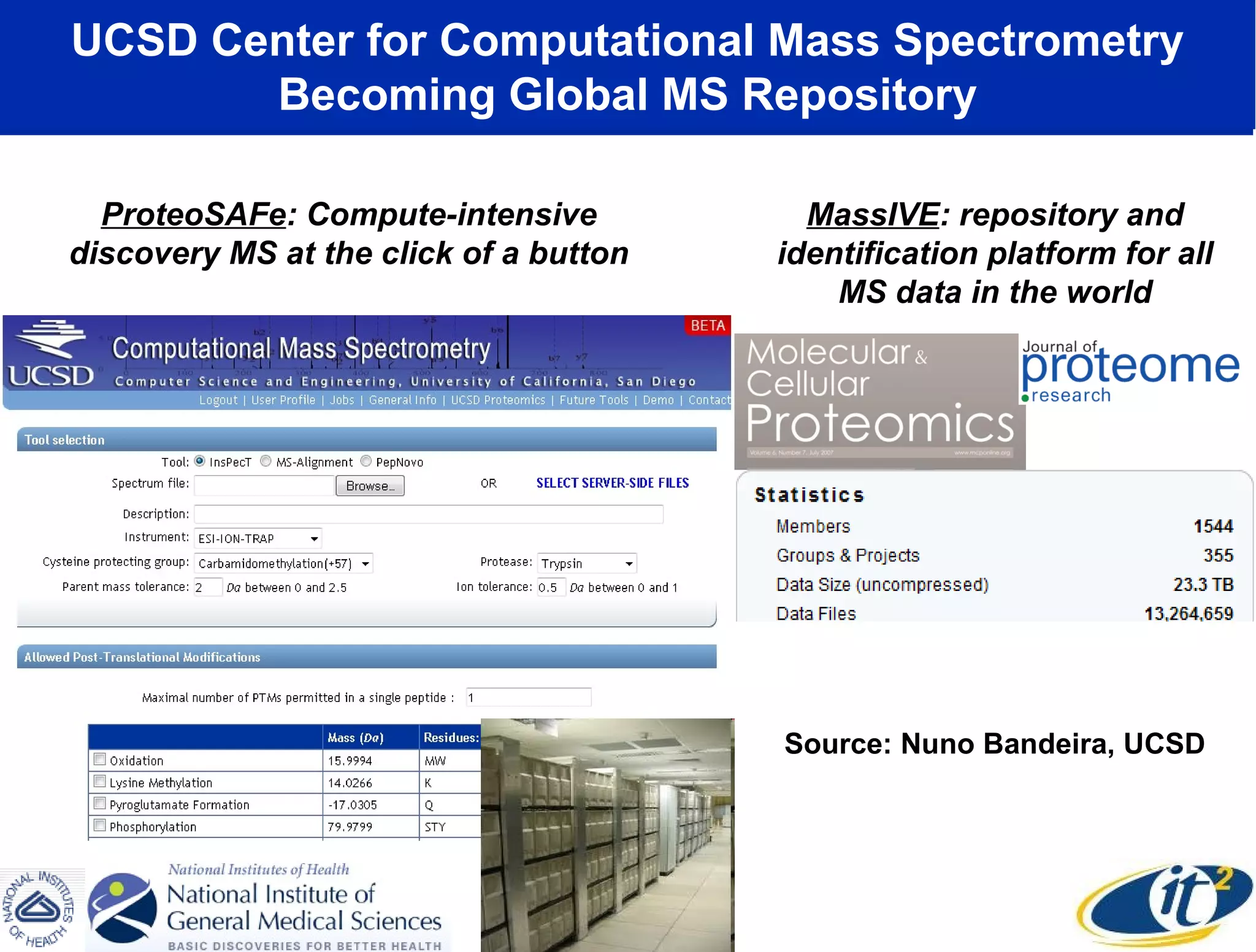

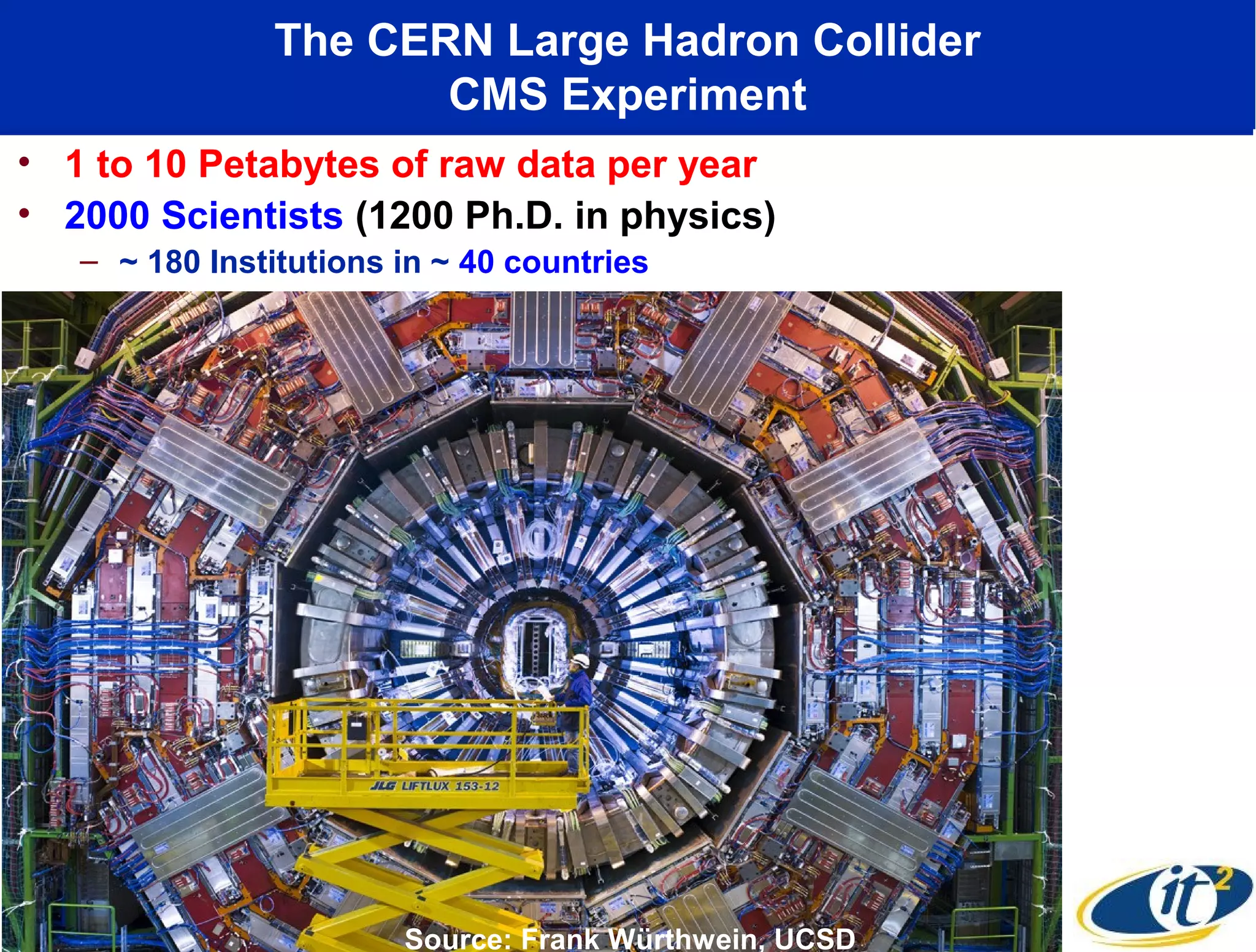

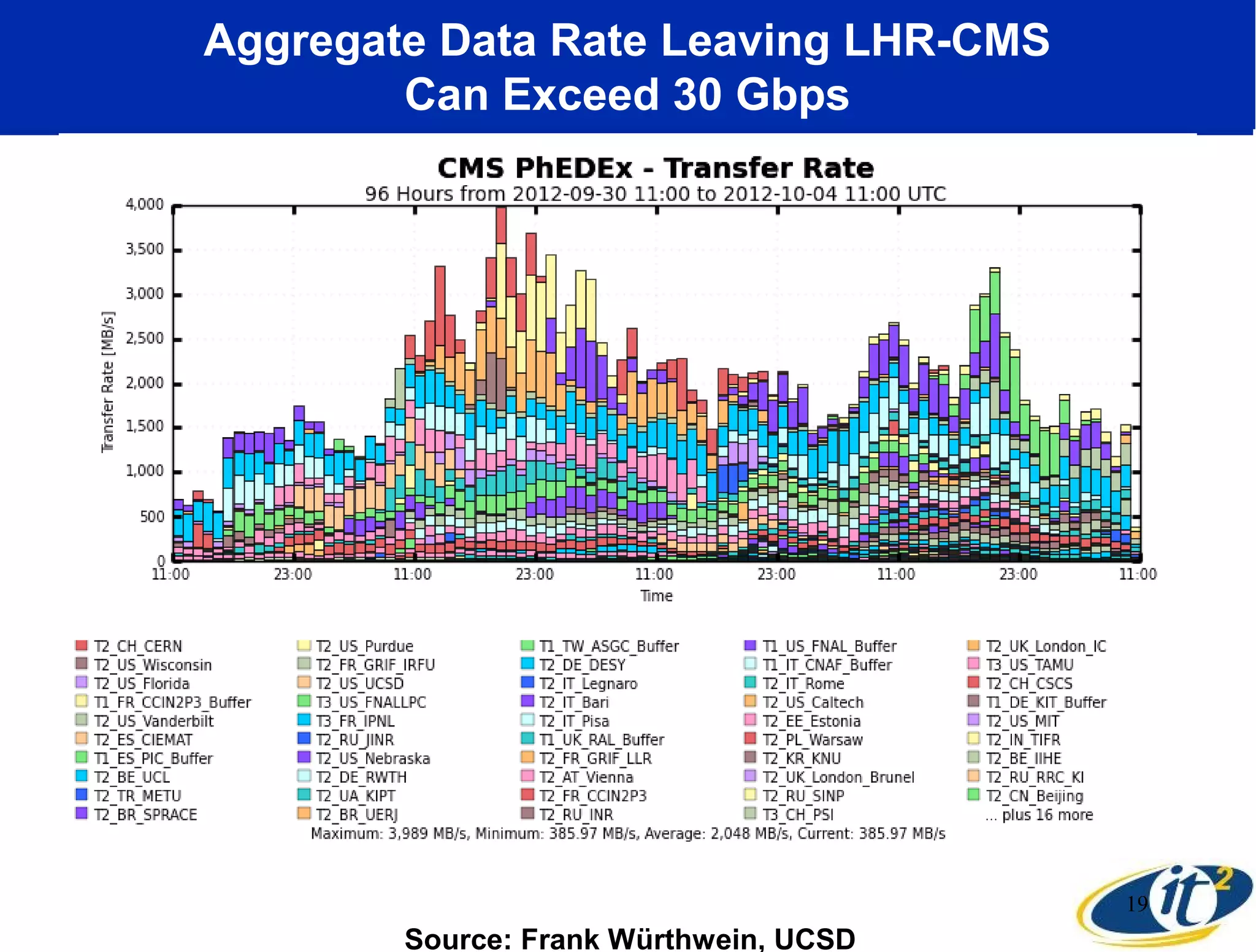

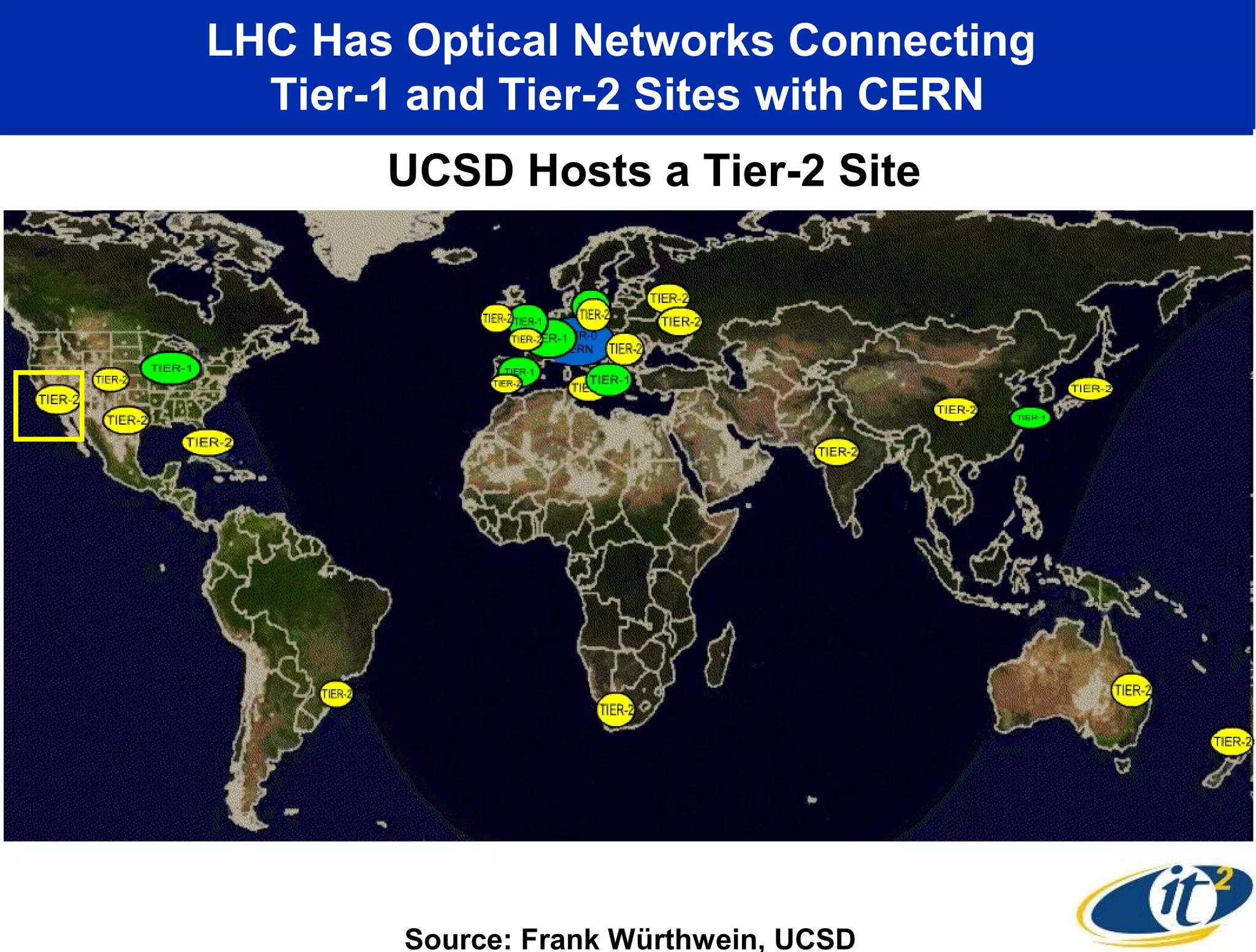

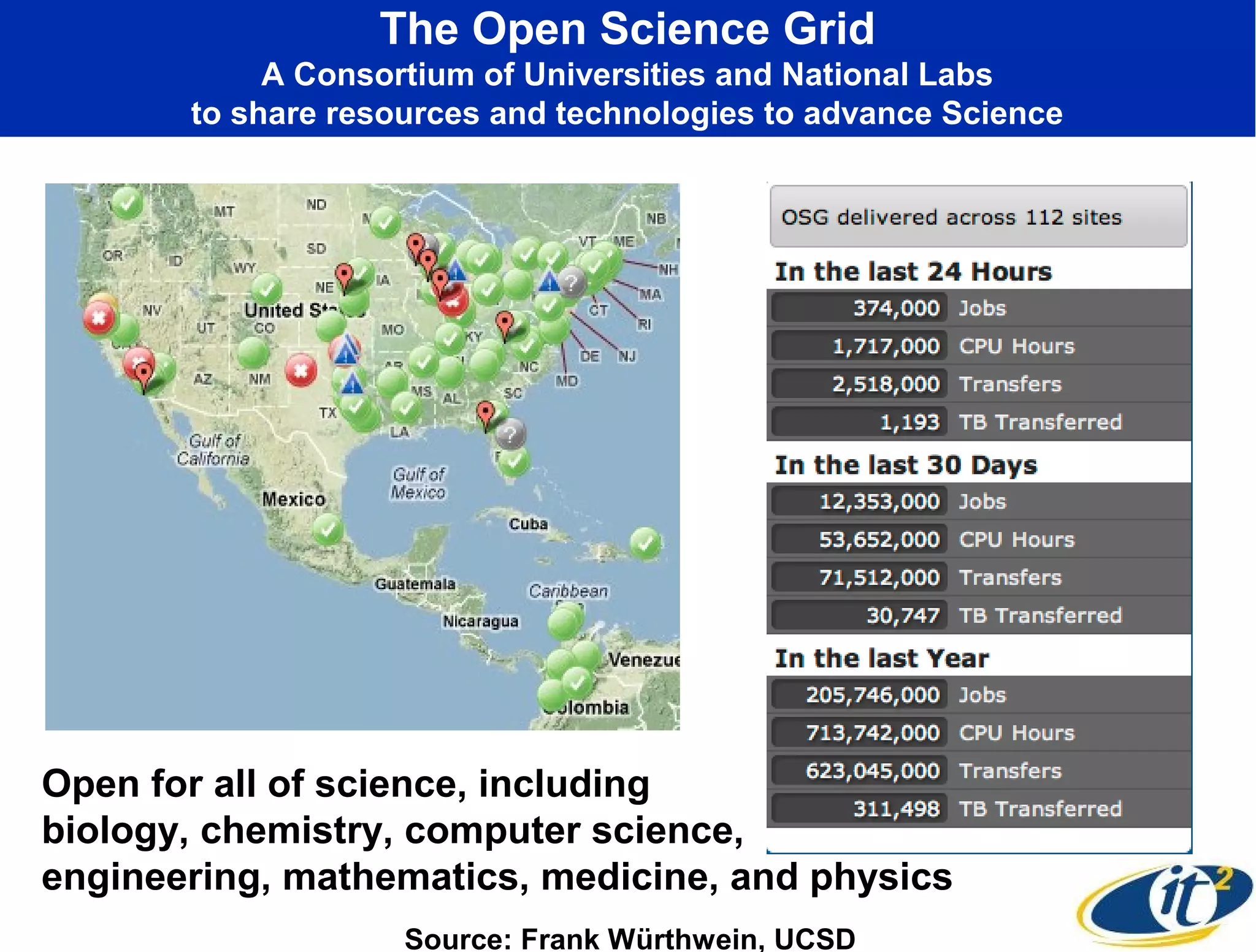

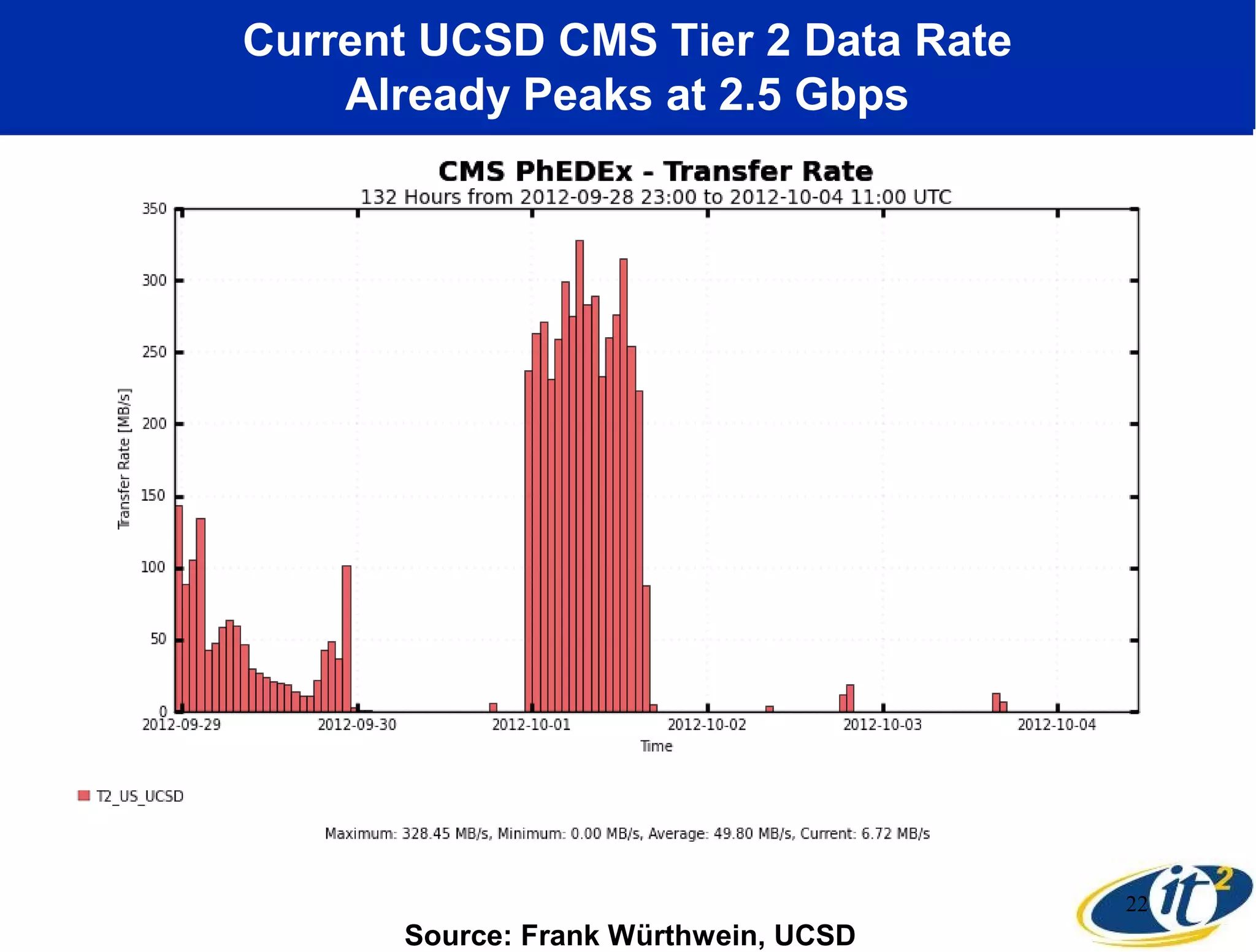

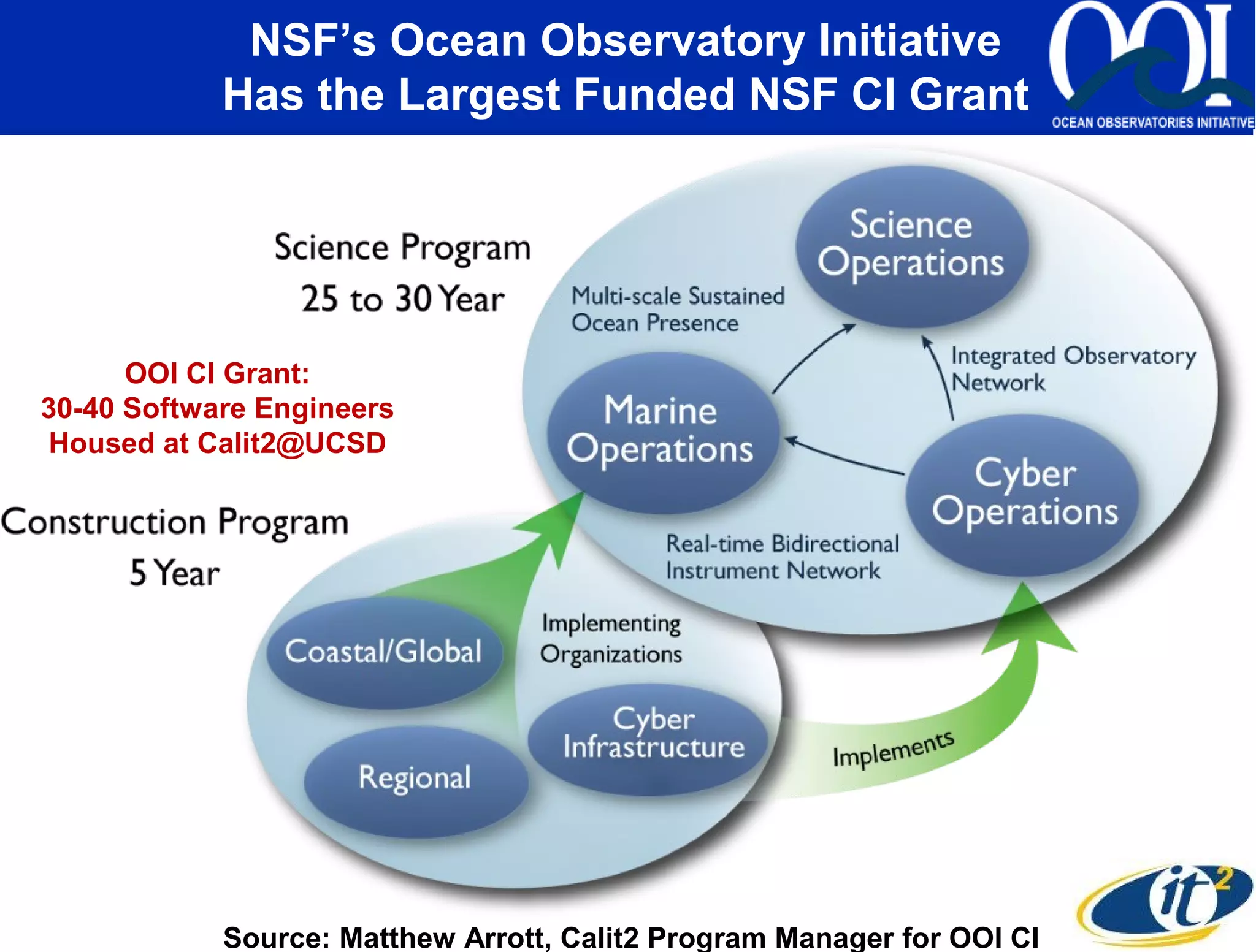

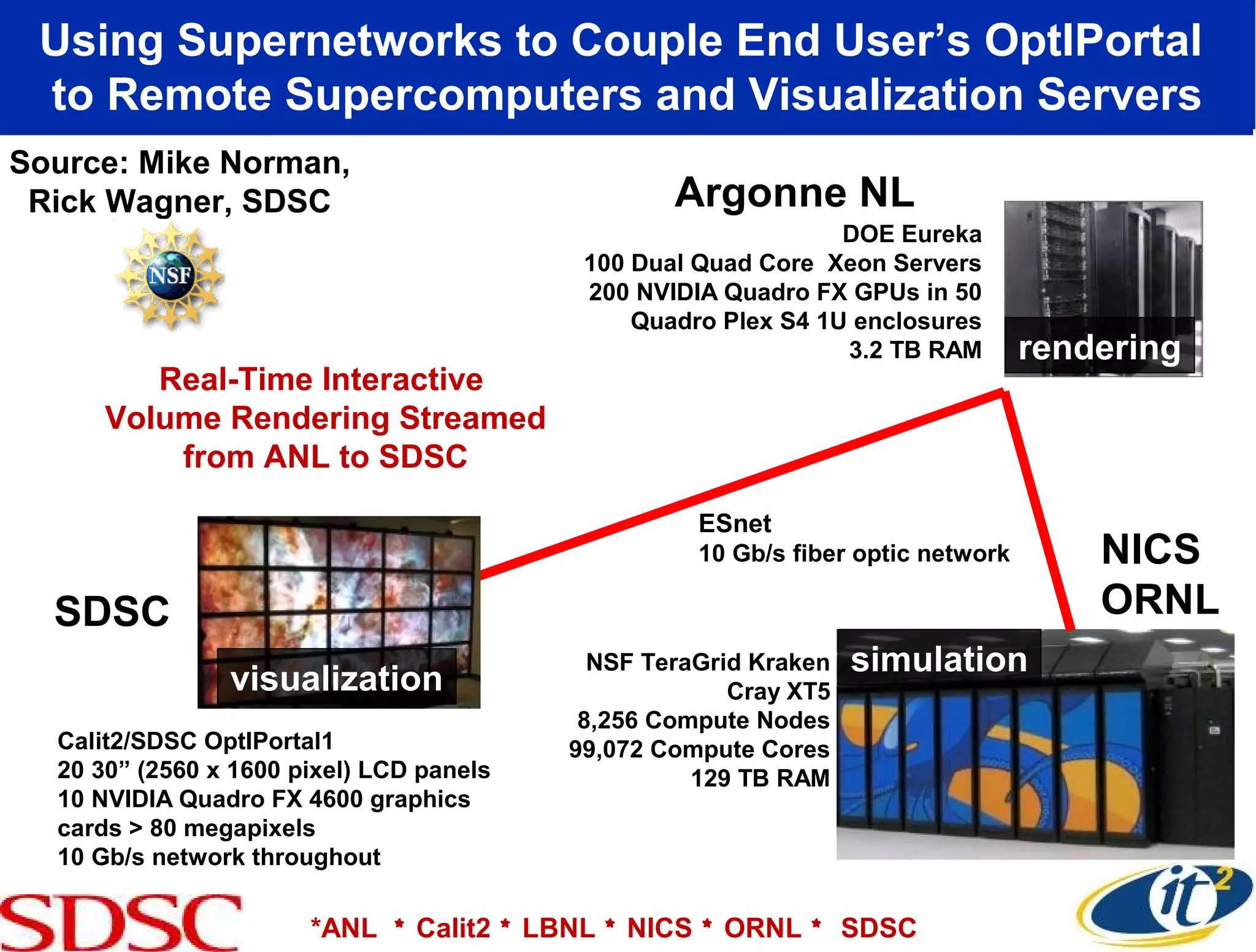

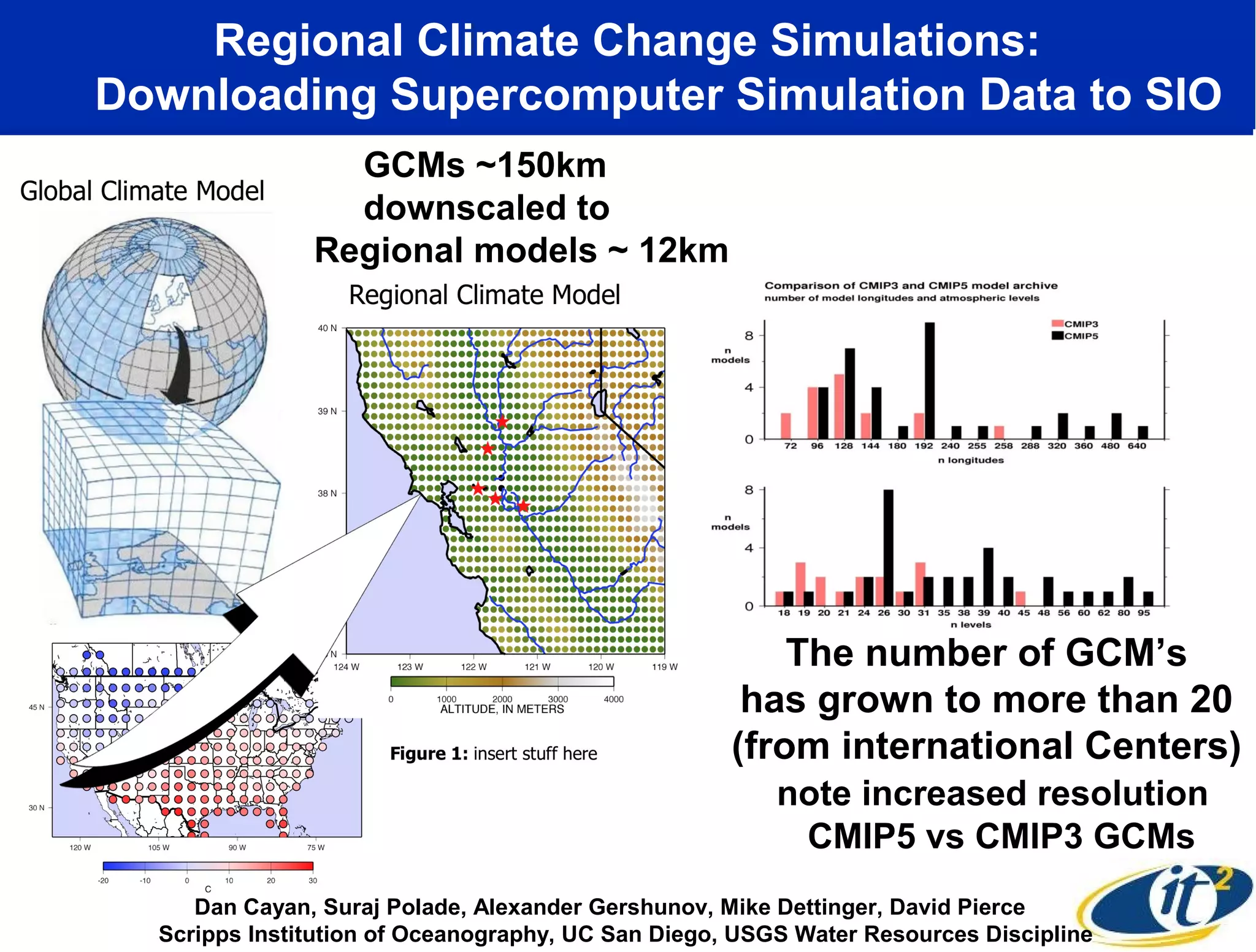

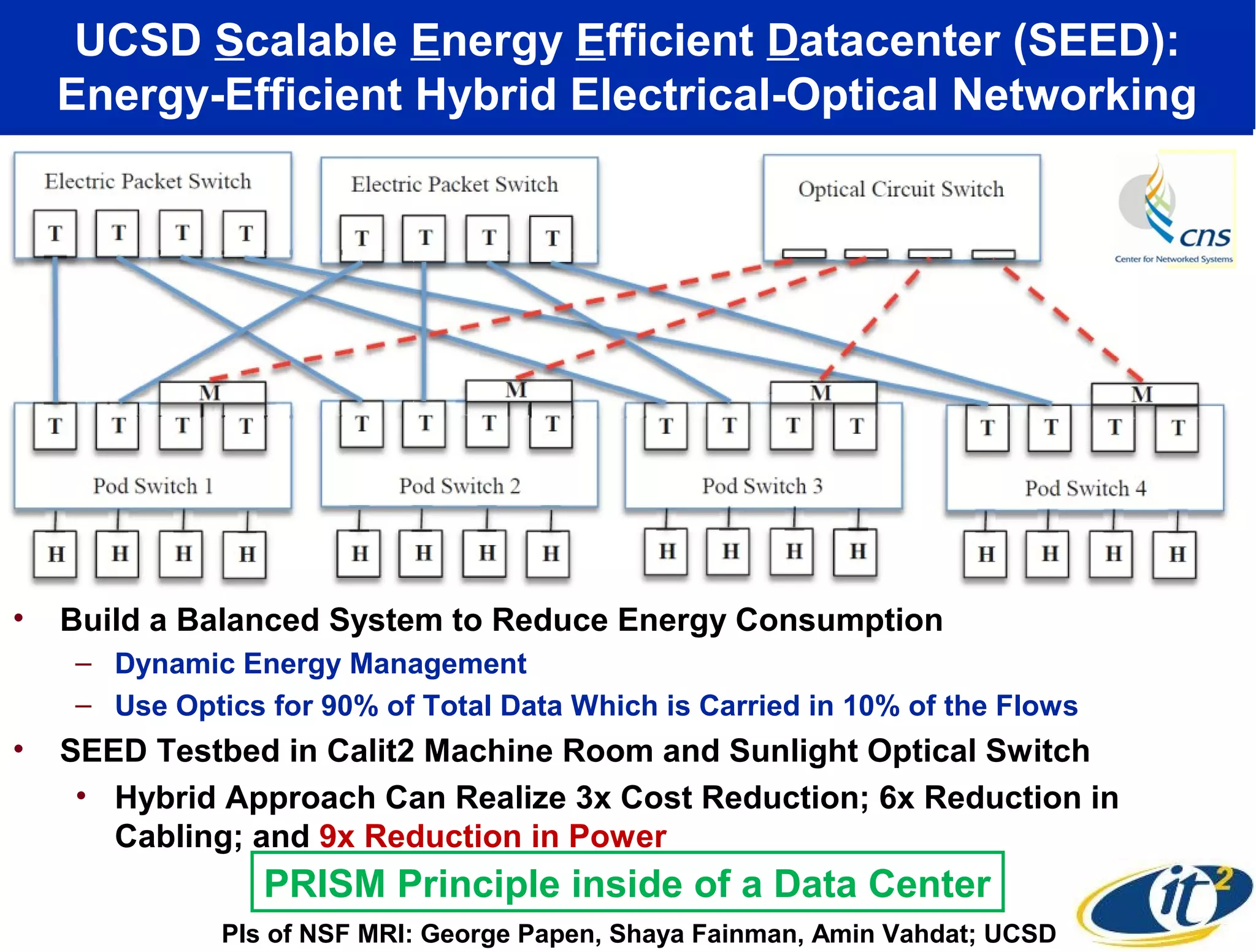

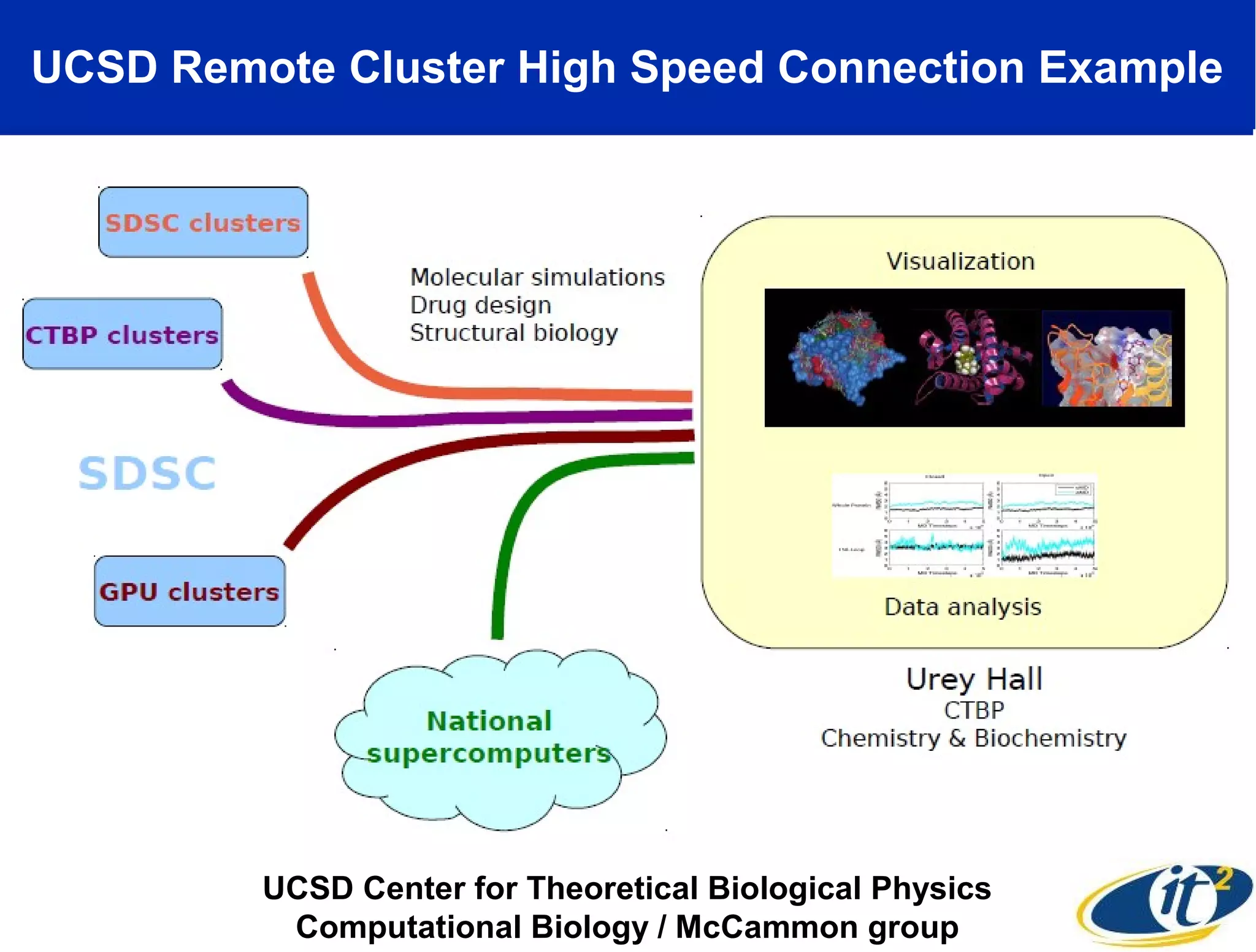

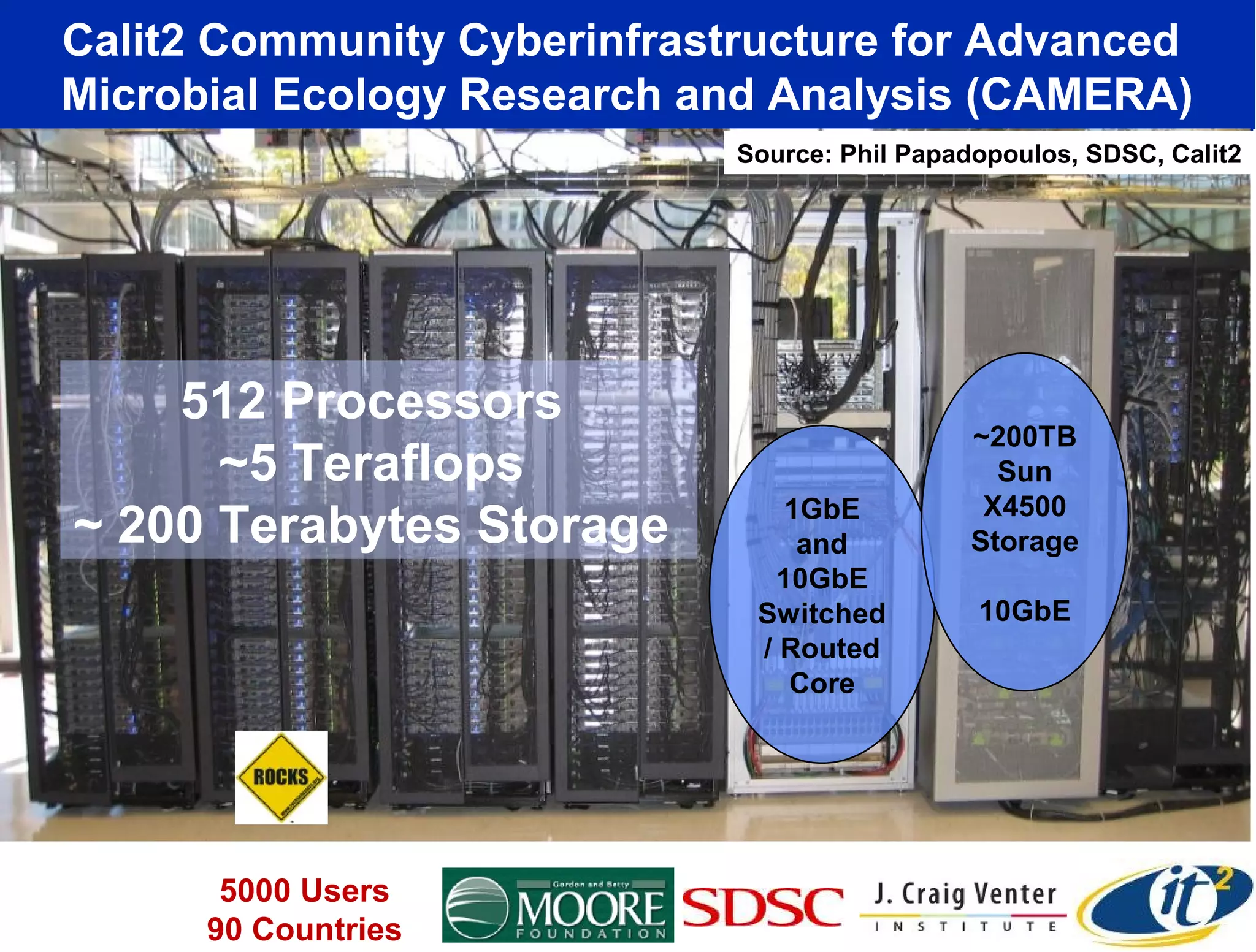

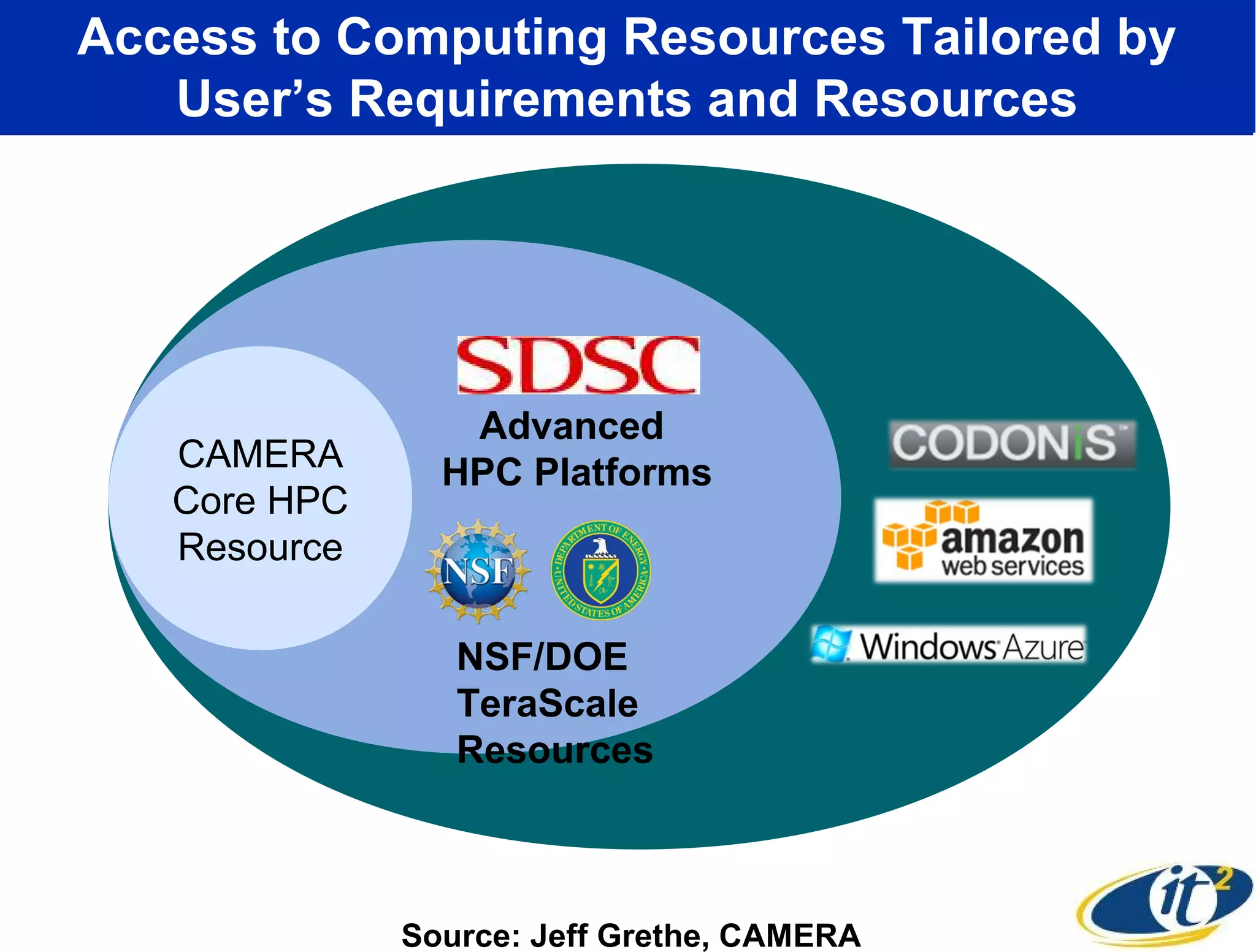

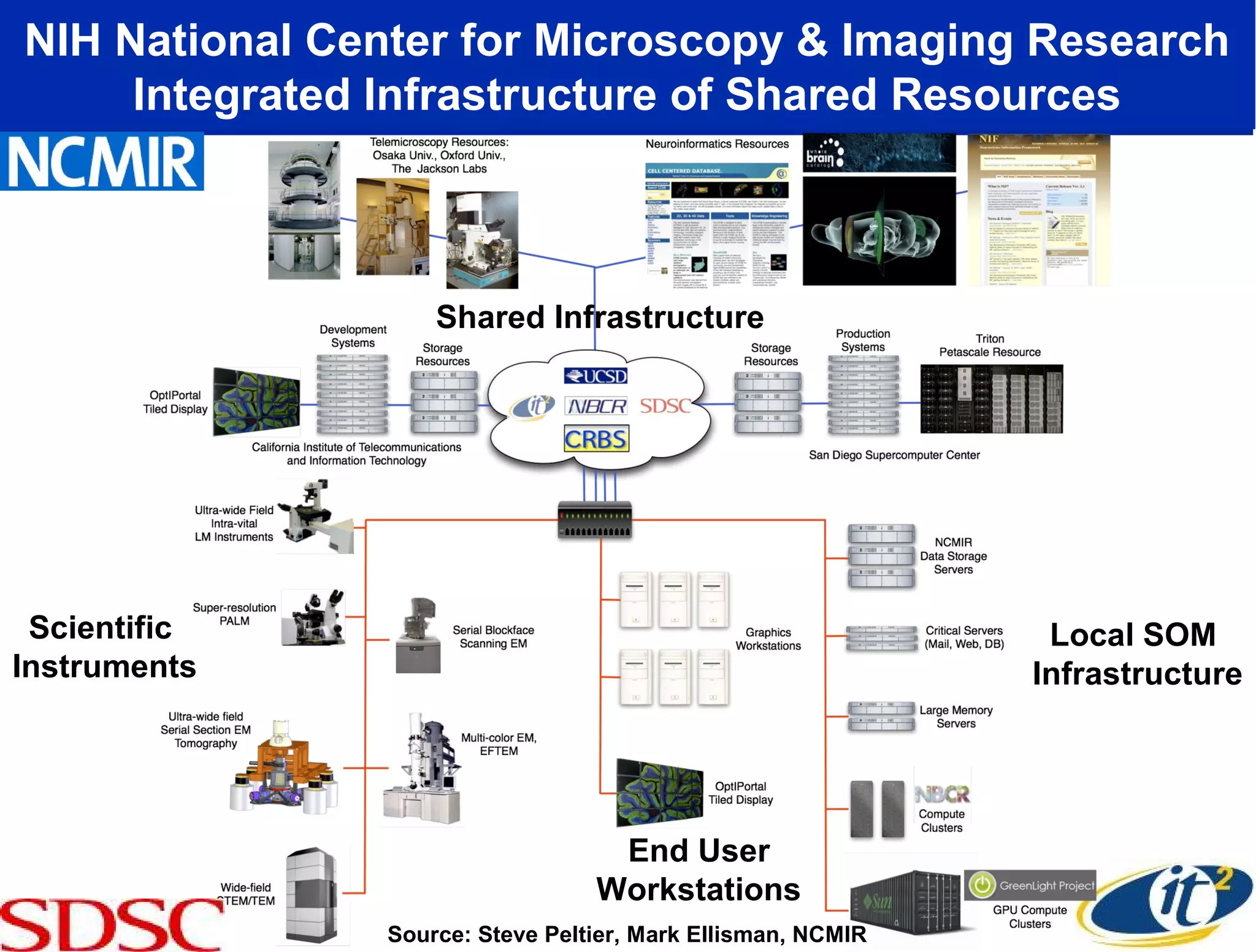

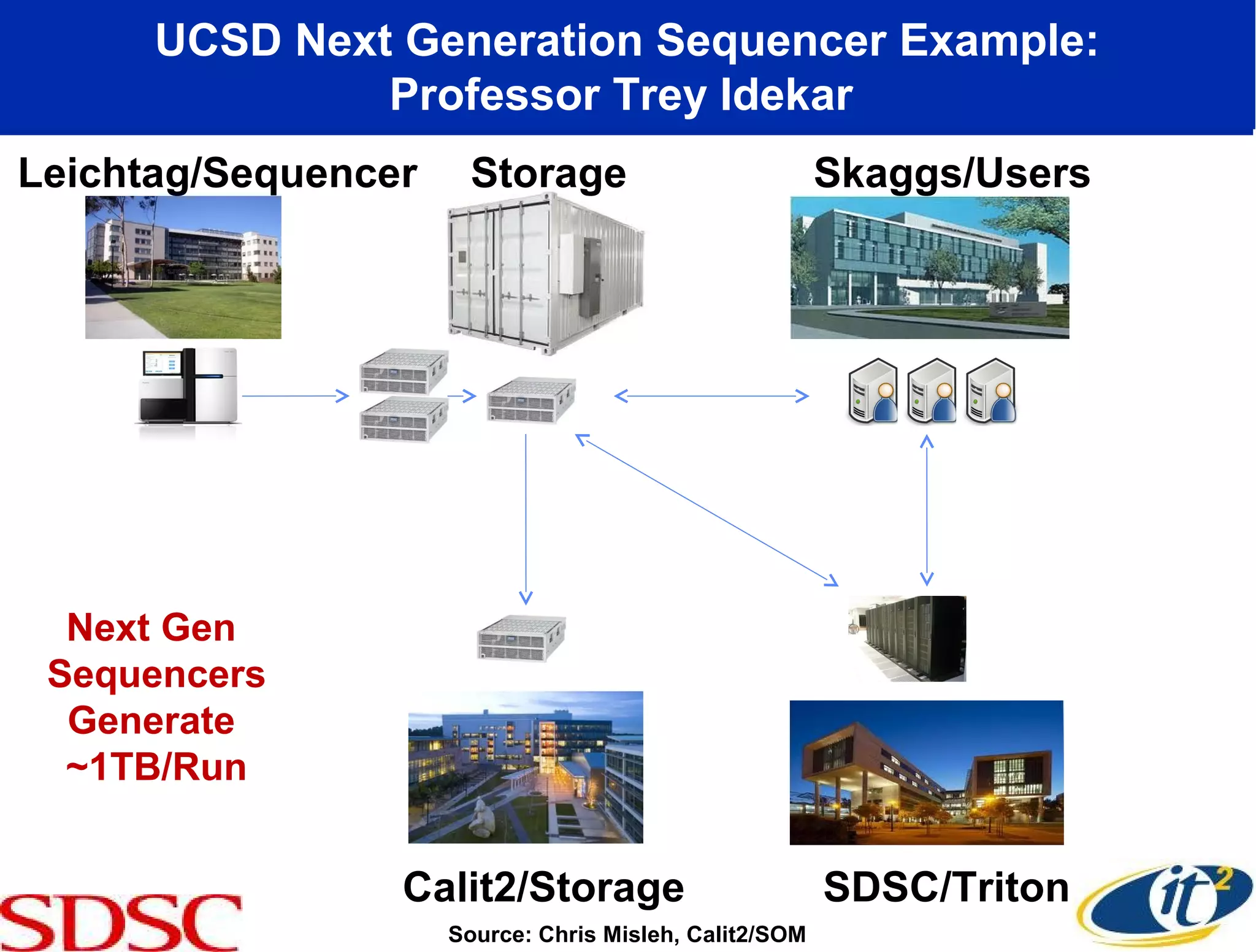

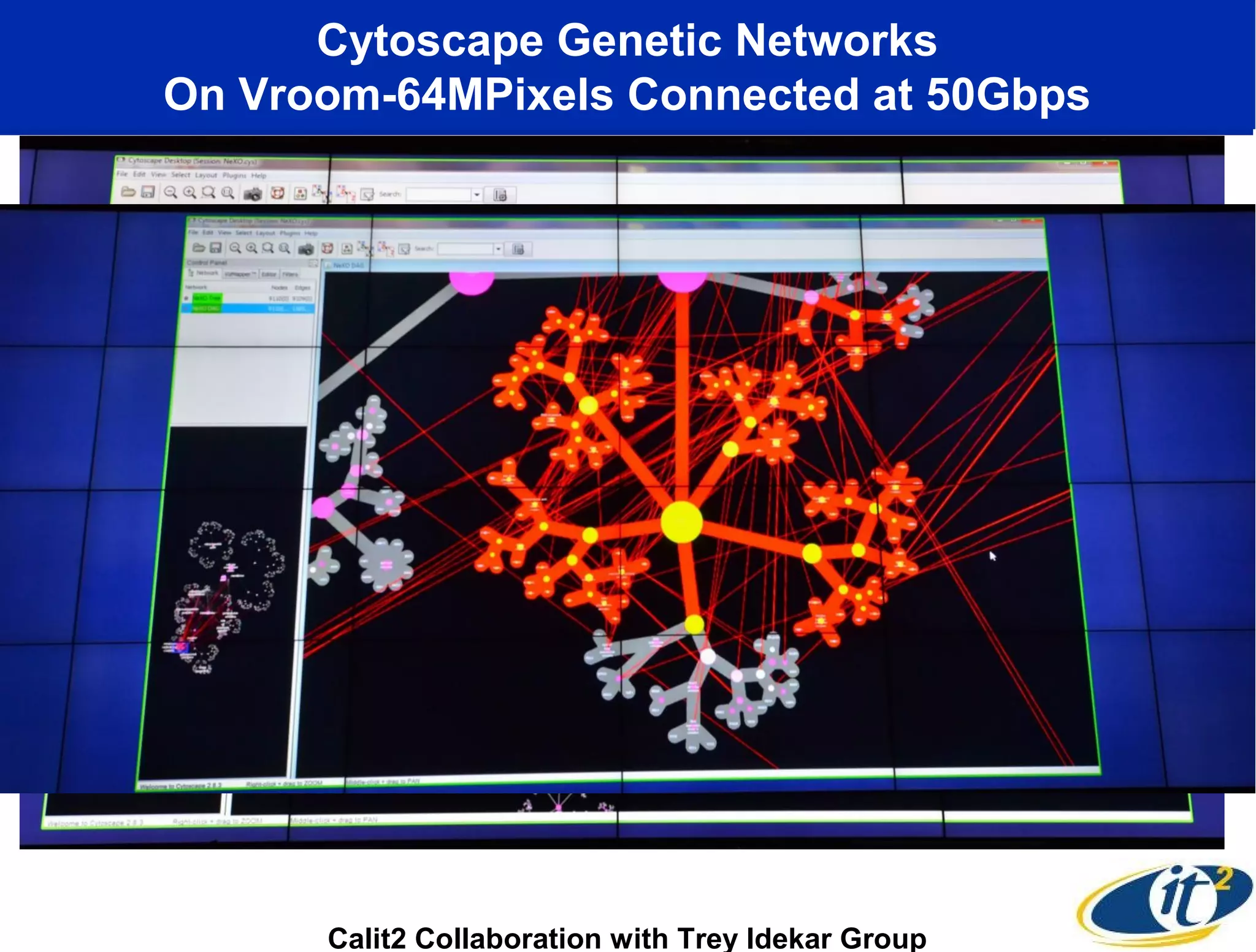

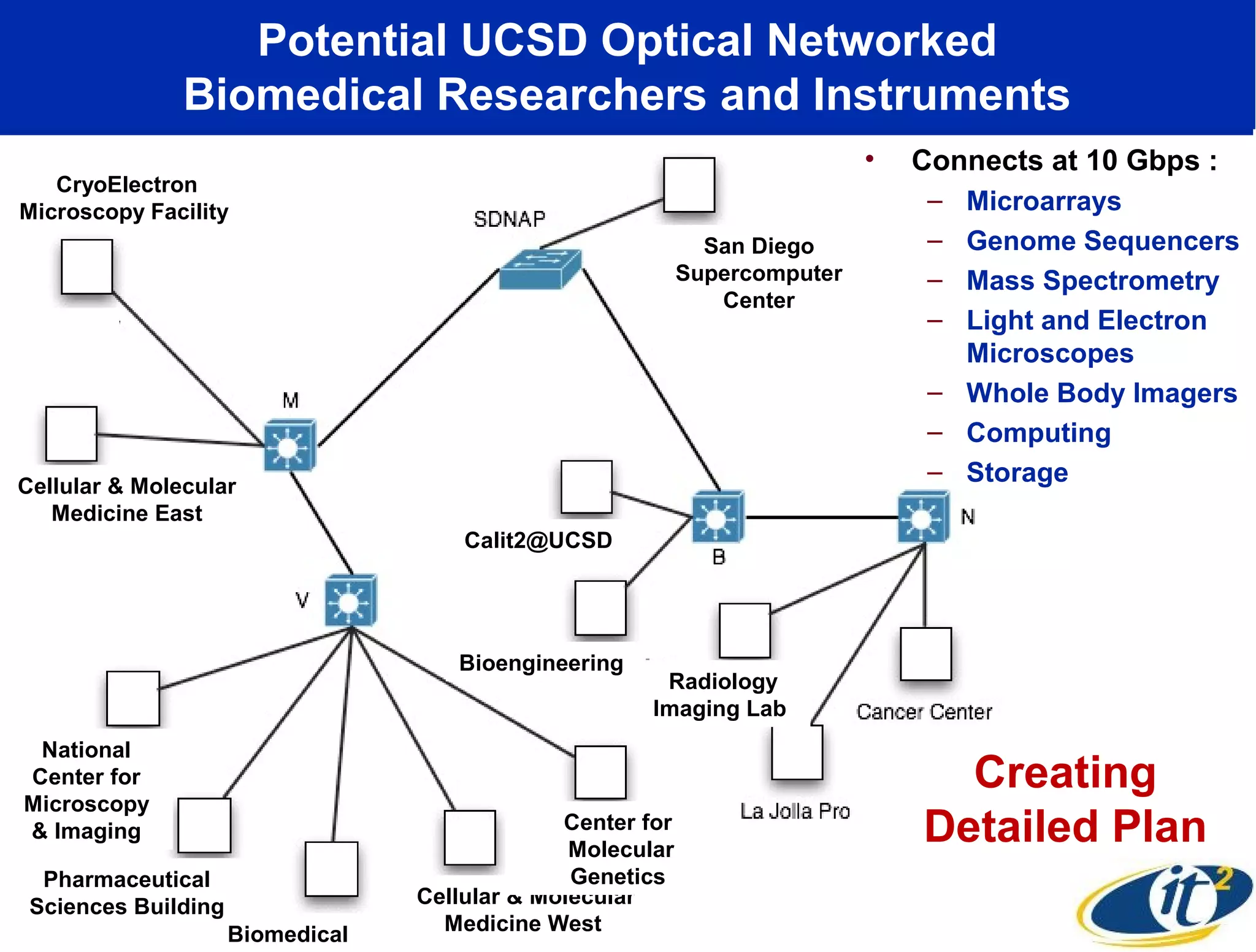

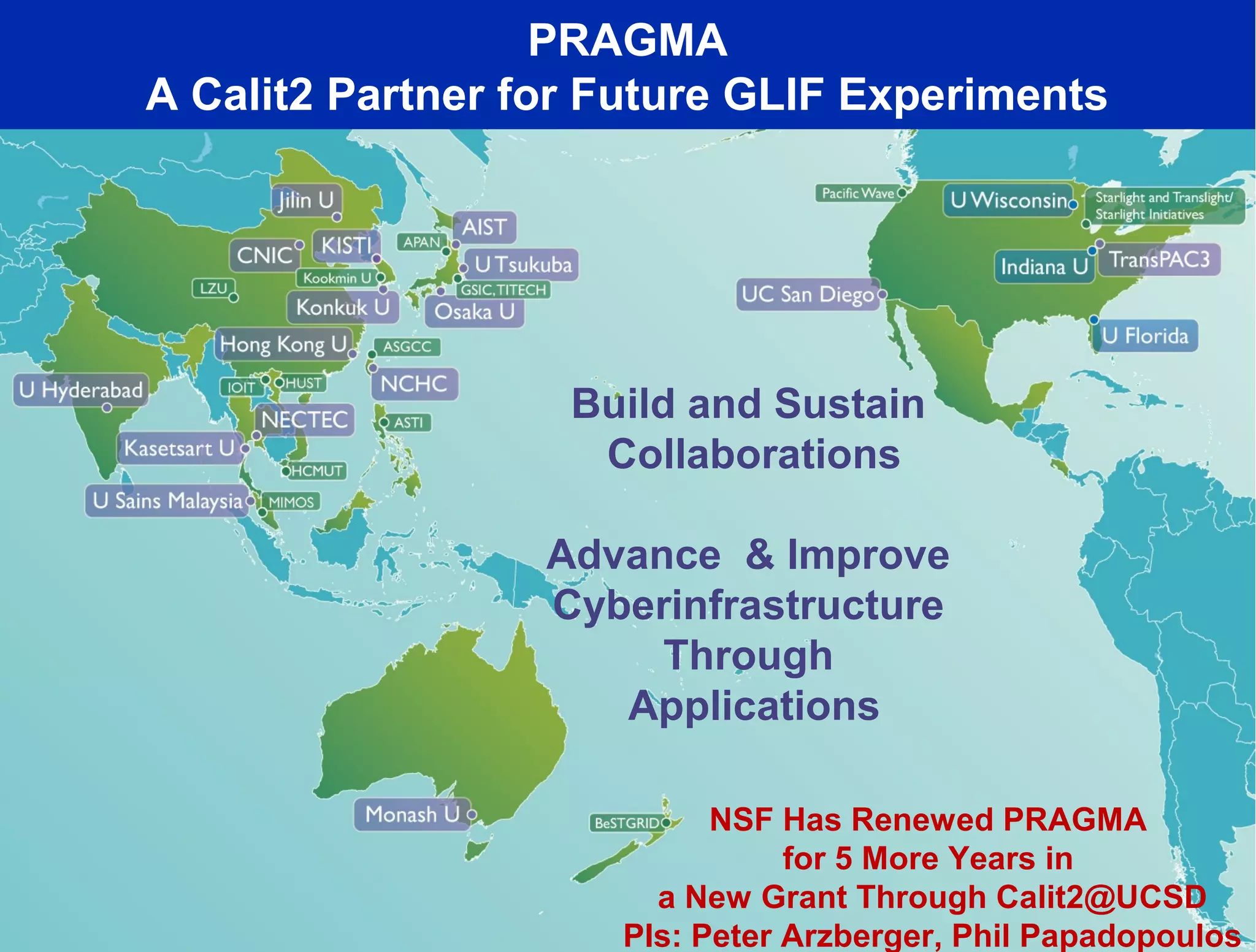

In this keynote lecture, Dr. Larry Smarr discusses upgrading campus cyberinfrastructure (CI) with a 10 Gbps optical network to support big data collaboration in scientific research. Key topics include the affordability and deployment of optical switches, the integration of remote supercomputing resources, and the use of advanced visualization tools. The infrastructure benefits fields such as genomics, climate modeling, and high-energy physics.