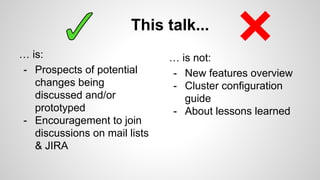

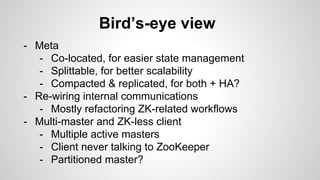

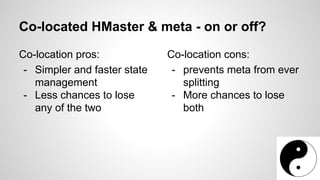

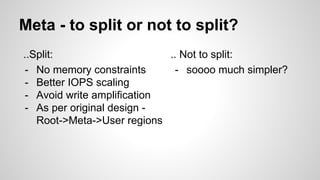

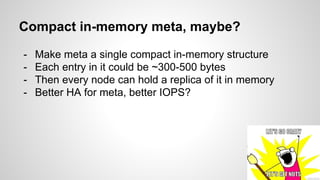

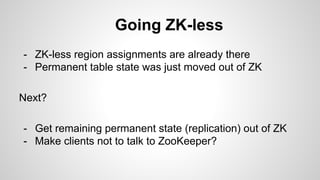

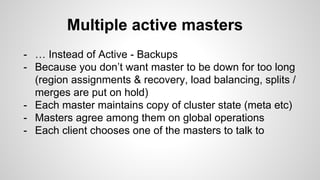

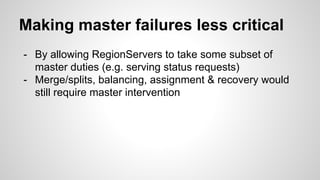

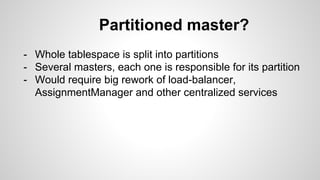

This presentation discusses potential future changes to the topology and architecture of HBase clusters. It describes the drivers for these changes, such as supporting clusters with more than 1 million regions and improving high availability. The specific proposals include co-locating the HMaster with the metadata, deciding whether or not to split the metadata region, compacting the in-memory metadata, removing the dependency on ZooKeeper, running multiple active masters, and partitioning the master's responsibilities. The talk encourages the audience to join the online discussions and provide input on these proposals.