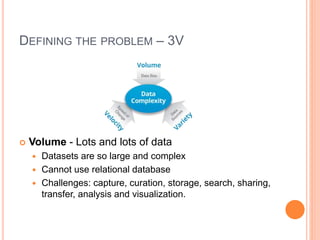

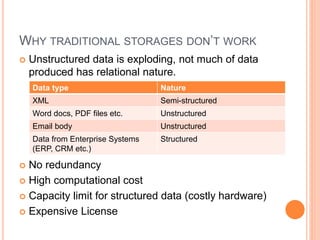

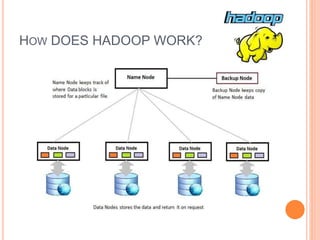

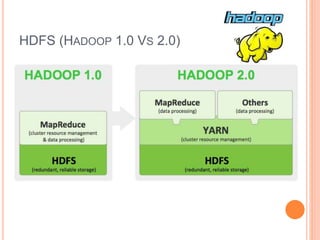

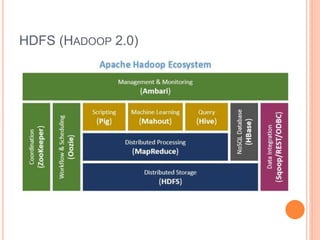

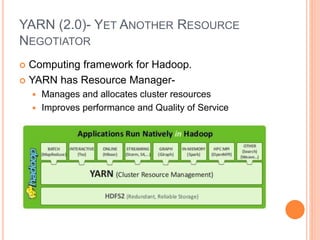

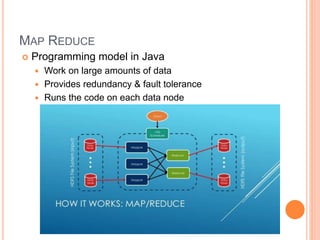

This document discusses big data solutions and introduces Hadoop. It defines common big data problems in terms of the volume, velocity, and variety of data, and explains why traditional storage systems handle this largely unstructured data poorly. Hadoop addresses these problems with HDFS for distributed storage, MapReduce for parallel processing, and additional tools such as HBase, Pig, Hive, ZooKeeper, and Spark that serve different data and analytics needs. Each tool is described briefly in terms of its purpose and how it works with Hadoop.
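To make the MapReduce processing model more concrete, here is a minimal single-process sketch of the classic word-count job. This is an illustration only, not Hadoop's actual Java API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical. On a real cluster, the framework runs many mappers and reducers in parallel over HDFS blocks and performs the shuffle over the network.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    # Mapper (hypothetical name): emit a (word, 1) pair for every word in one input line.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Group all intermediate values by key, as the framework does between map and reduce.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    # Reducer (hypothetical name): combine every count emitted for one word.
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # Chain the three phases; the results are ordered by key, as reducer input is sorted.
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in sorted(grouped.items()))


if __name__ == "__main__":
    docs = ["big data needs big storage", "Hadoop stores big data in HDFS"]
    print(word_count(docs))
```

Because each mapper sees only its own lines and each reducer sees only one key's values, both phases can be distributed across machines without sharing state. That independence is what lets MapReduce scale with data volume.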