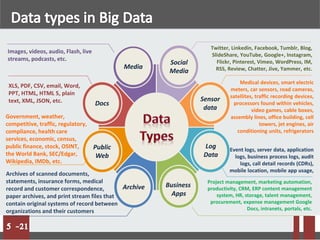

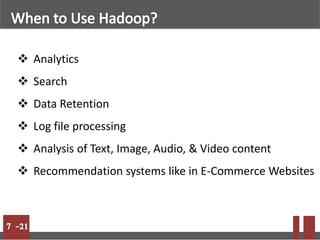

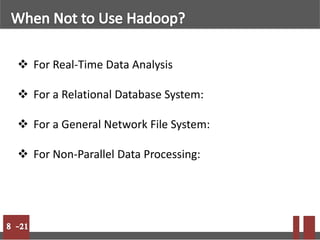

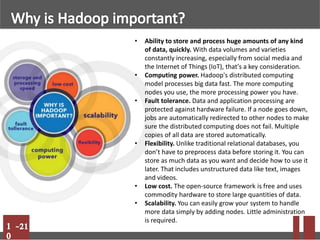

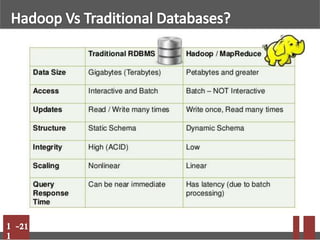

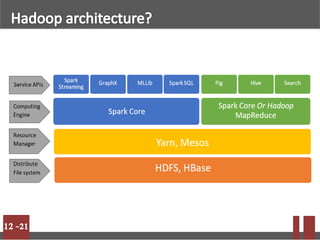

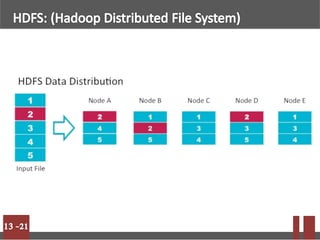

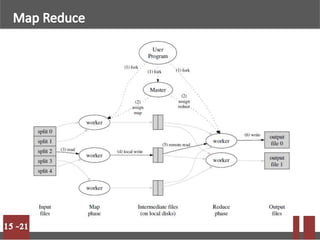

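Big data refers to datasets, structured and unstructured, whose volume makes them difficult to store and process with a traditional single-server database. Hadoop is an open-source framework for distributed storage and processing of such data across clusters of commodity hardware. It uses HDFS (the Hadoop Distributed File System) for storage and MapReduce as its programming model. HDFS splits each file into blocks and replicates those blocks across nodes, so the data survives the failure of an individual node. MapReduce processes large datasets in parallel: a map step runs independently on each portion of the input, and a reduce step combines the intermediate results by key.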
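
The MapReduce model can be illustrated with a small word count, the usual introductory example. The sketch below is plain Python that runs in a single process and does not use Hadoop's API. The function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration. On a real cluster the framework would run the map step on many nodes at once and handle the grouping step itself.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Map step: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, mimicking what the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce step: combine all counts emitted for one word."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence (illustrative, single process)."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    # Each string stands in for one input split stored on a different node.
    splits = ["big data needs big clusters", "hadoop stores data in blocks"]
    print(word_count(splits))
```

Because each call to `map_phase` depends only on its own line, the map work can be spread across machines without coordination. Only the grouping step needs to move data between nodes.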