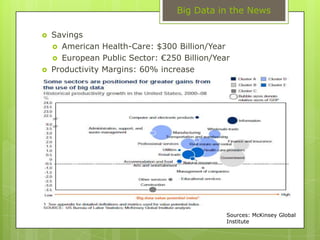

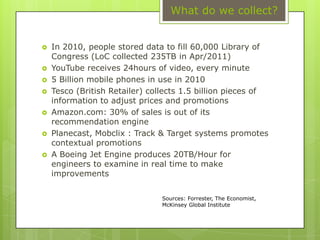

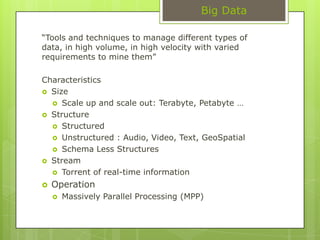

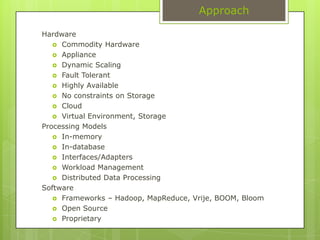

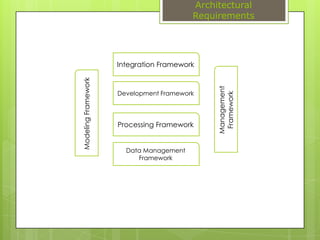

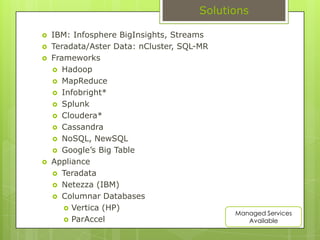

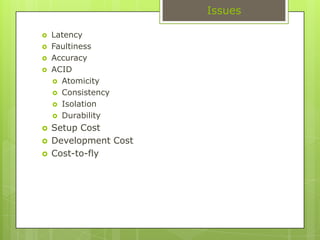

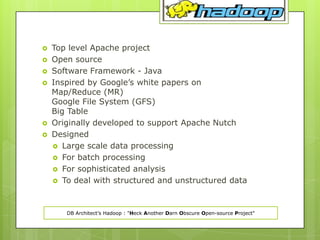

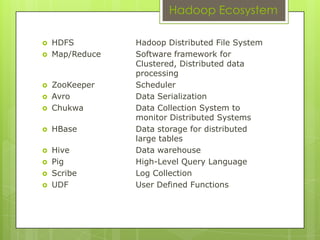

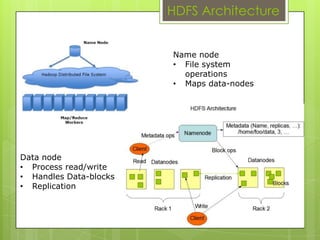

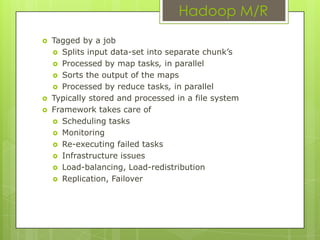

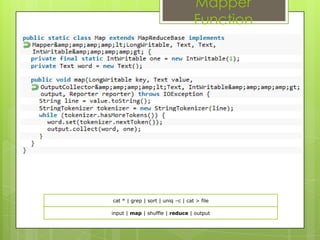

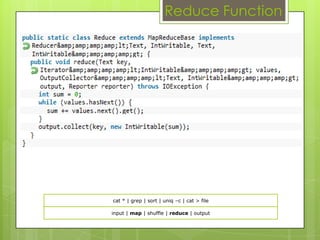

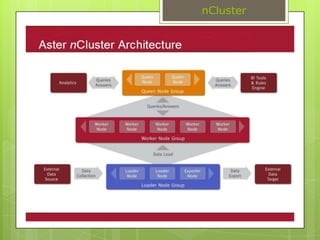

This document discusses big data: how much data is now being collected, the challenges with traditional database management systems, and the need for new approaches such as Hadoop and Aster Data. It details the characteristics of big data, architectural requirements, analysis techniques, and solutions from companies such as IBM, Teradata, and Aster Data. Hadoop is covered in depth, including how it works, its ecosystem, and example users. Aster Data is also summarized, with a focus on its massively parallel SQL layer and in-database analytics capabilities.