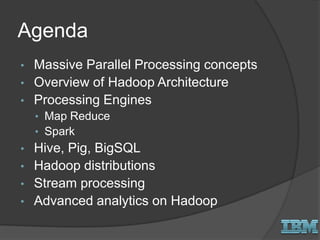

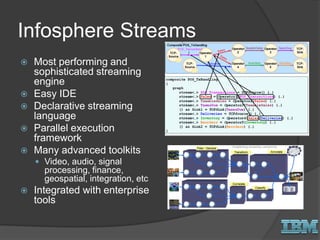

The document covers big data concepts and Hadoop technologies. It gives an overview of massively parallel processing (MPP) and the Hadoop architecture, and describes common processing engines such as MapReduce, Spark, Hive, Pig, and BigSQL. It also covers Hadoop distributions from Hortonworks, Cloudera, and IBM, along with stream processing and advanced analytics on Hadoop platforms.