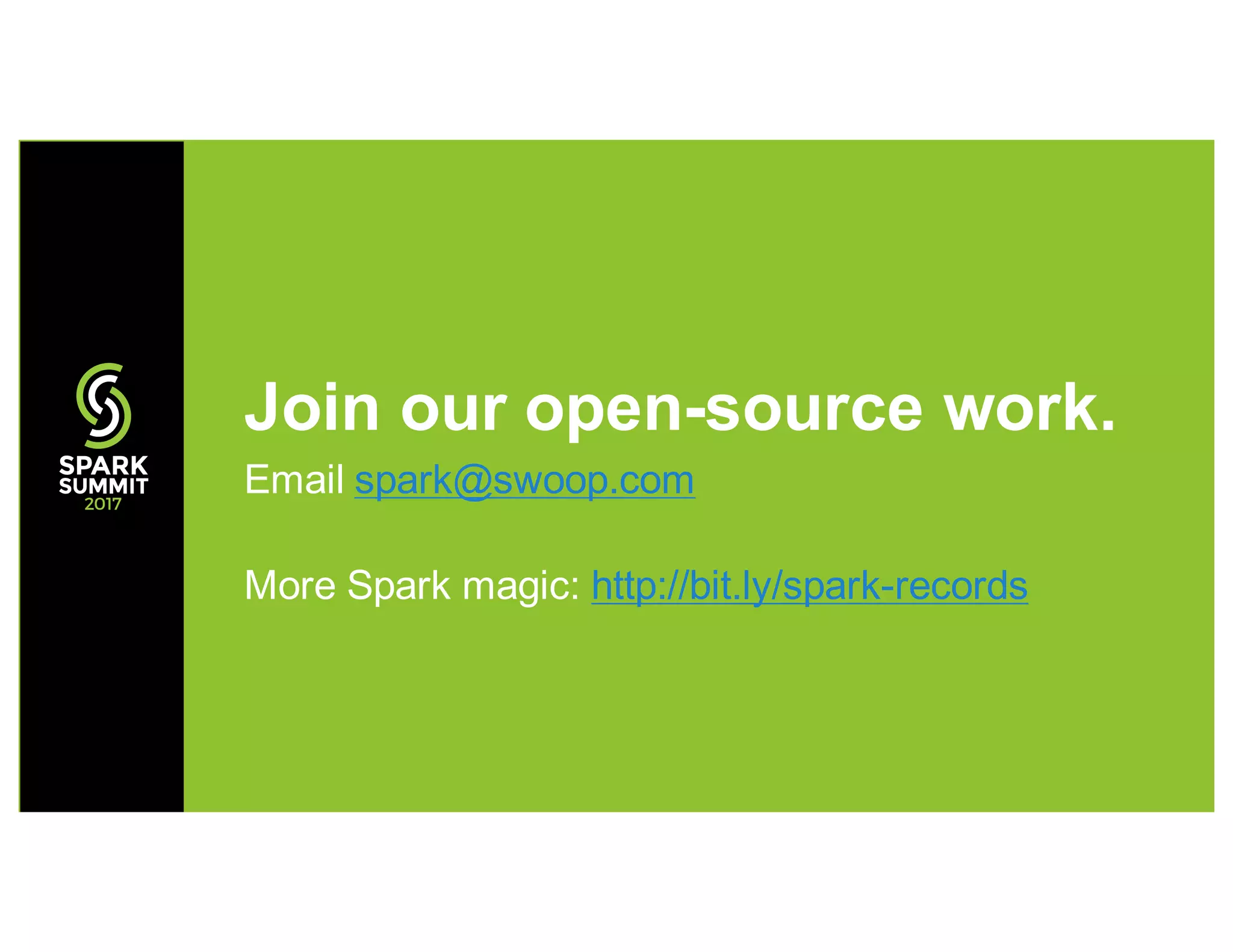

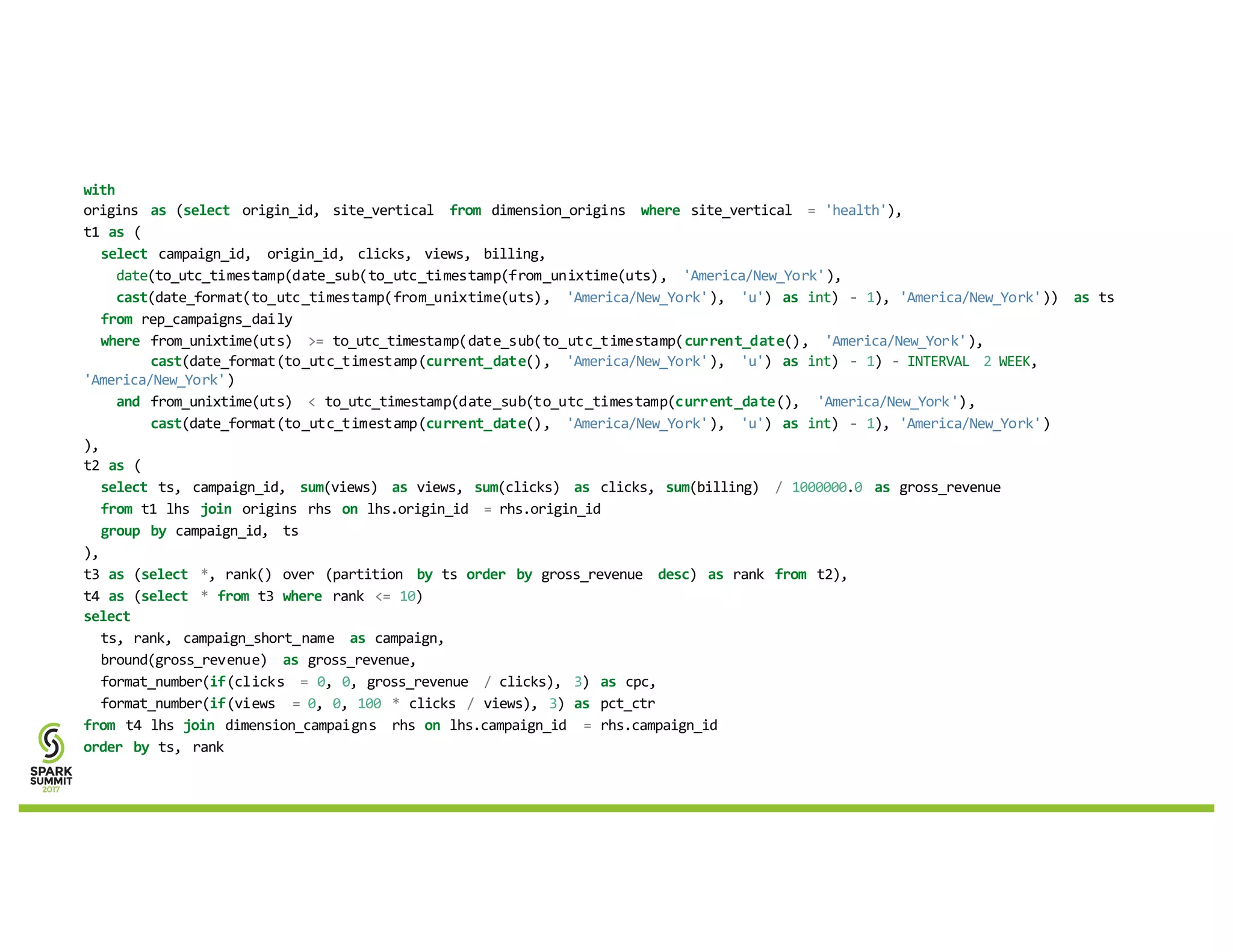

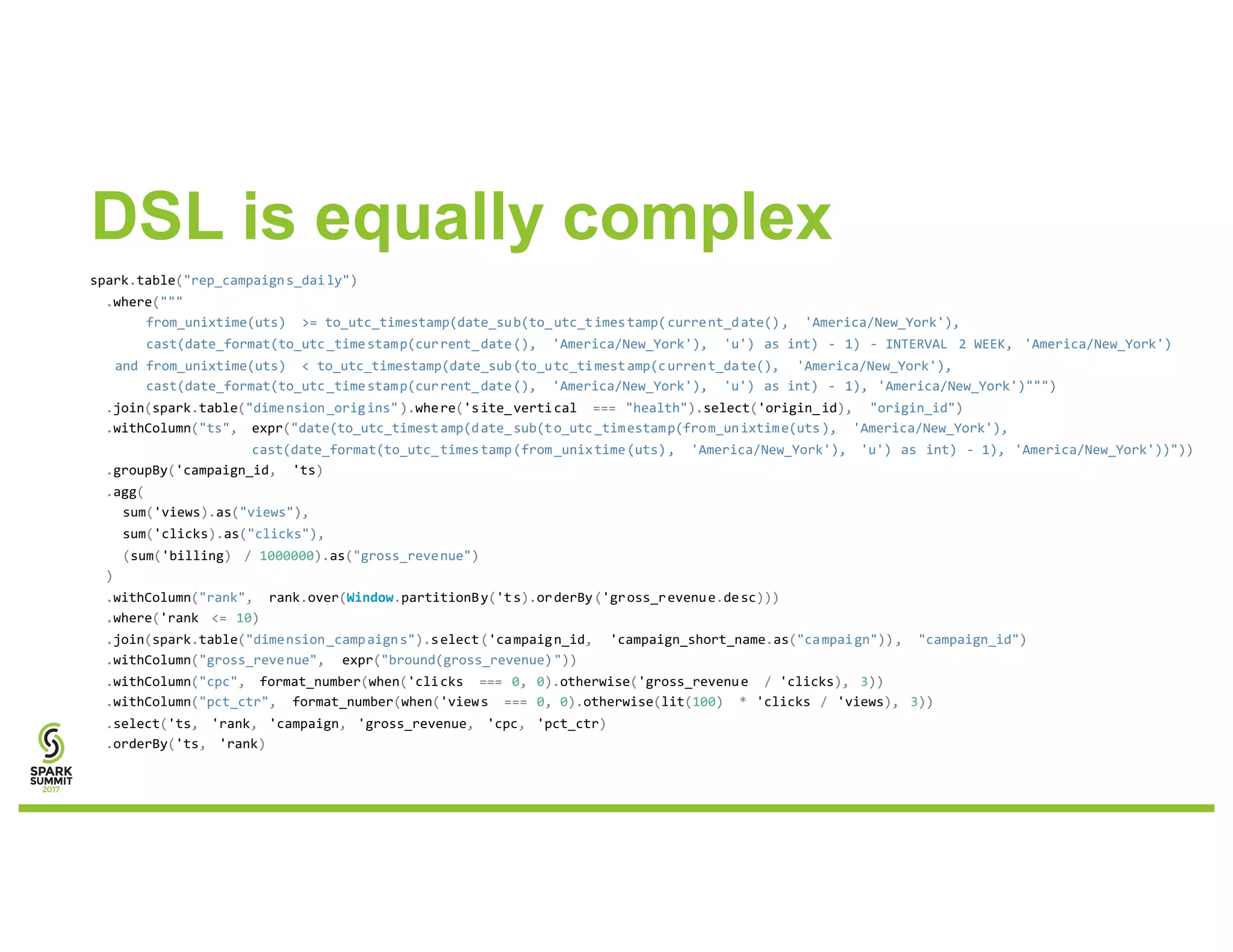

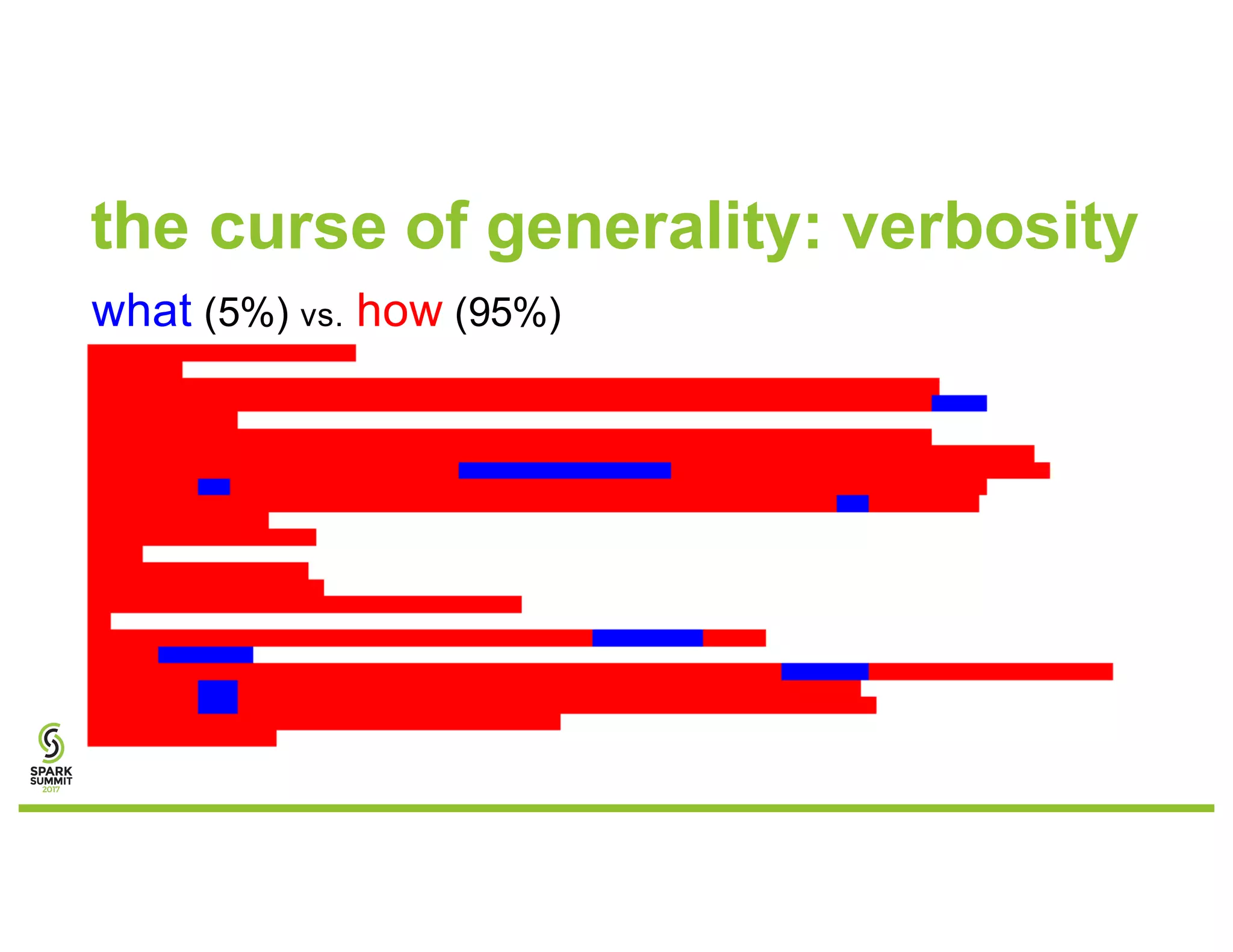

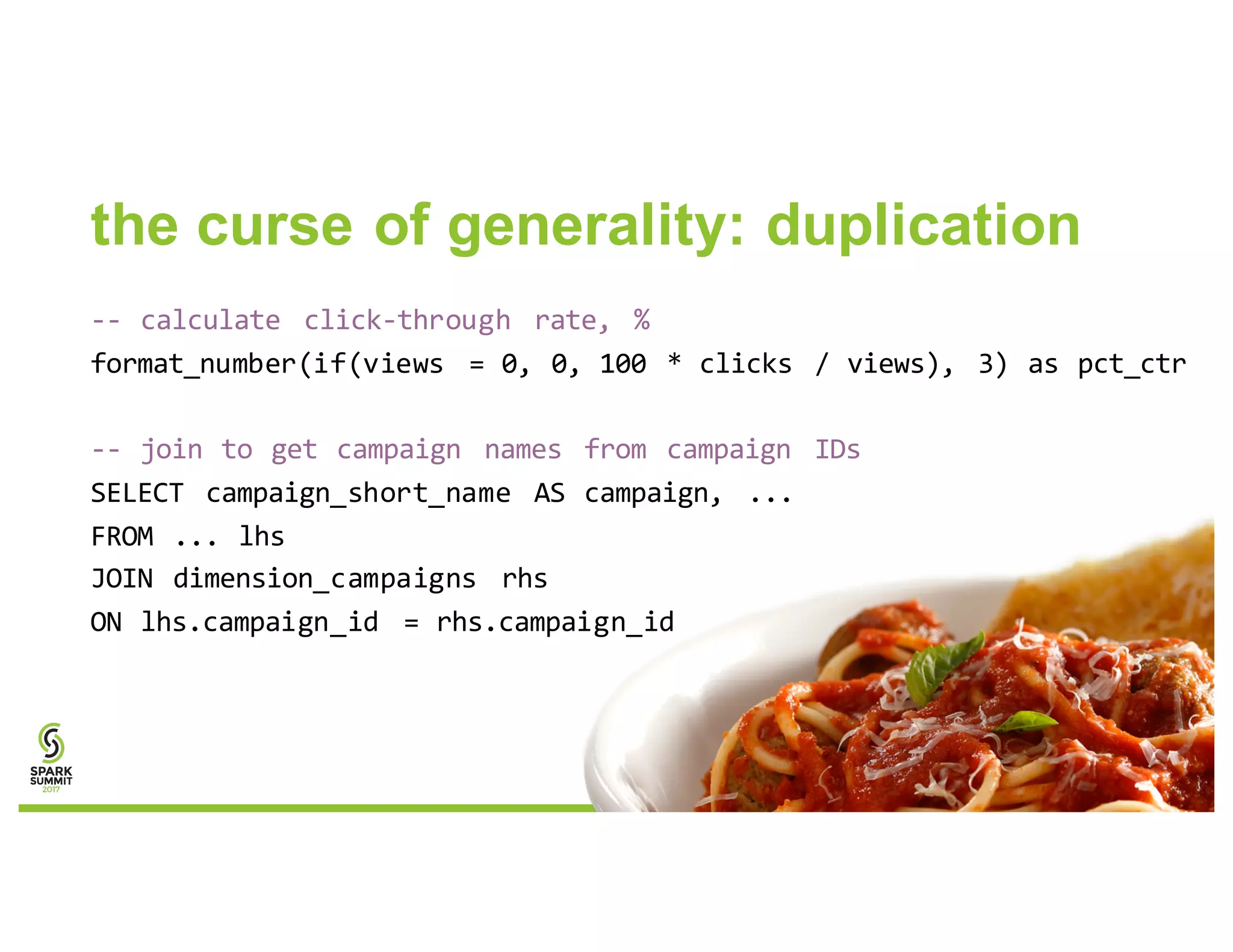

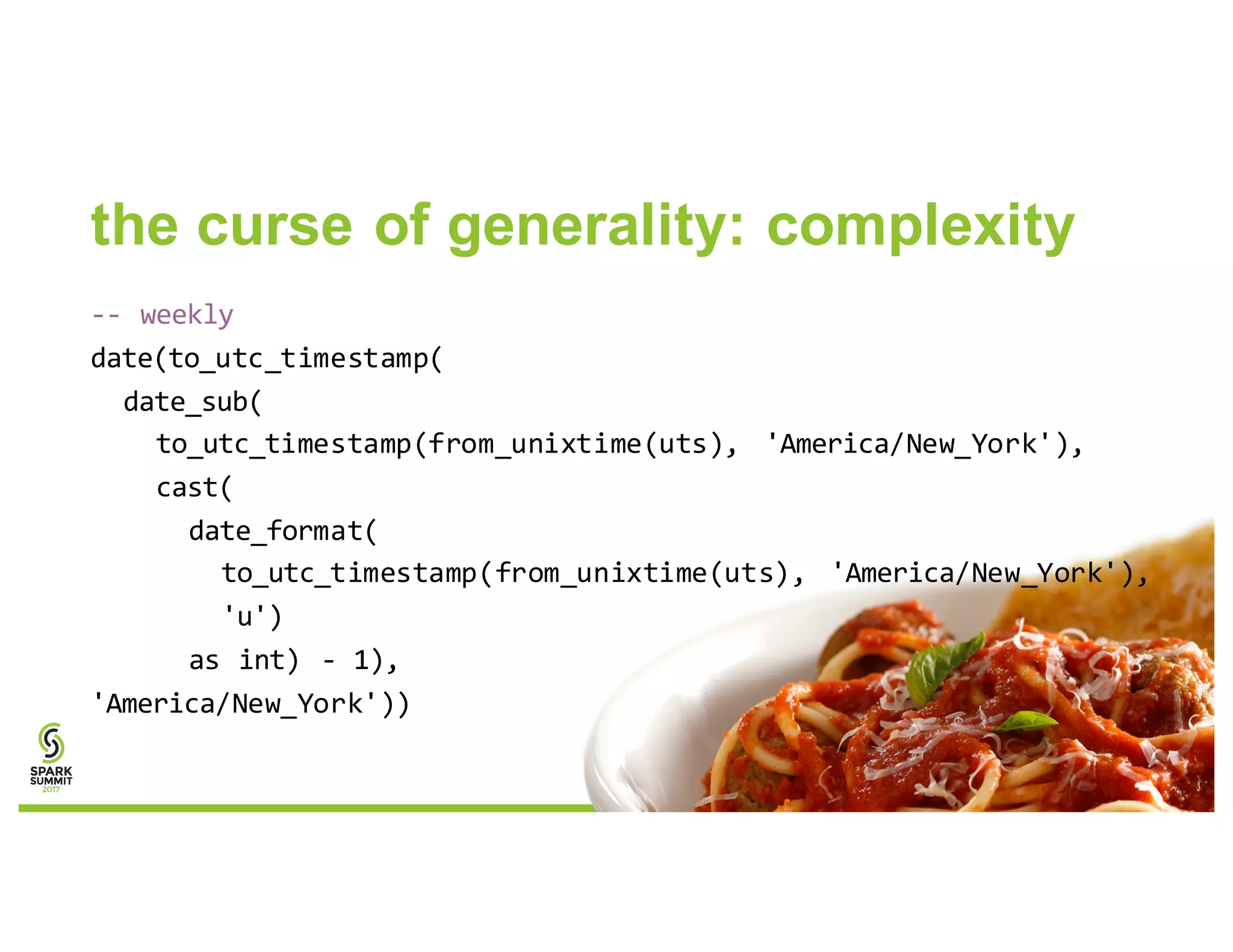

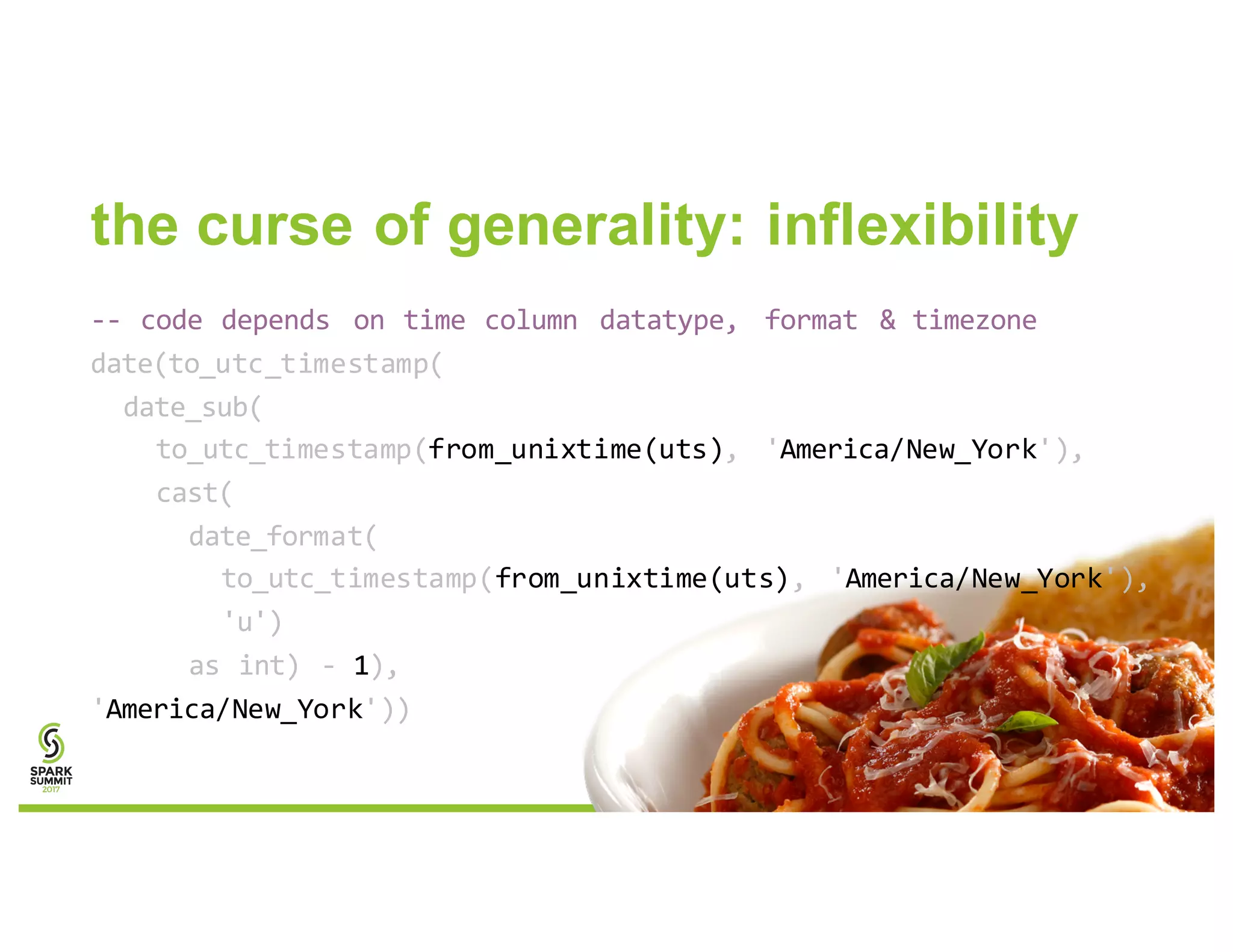

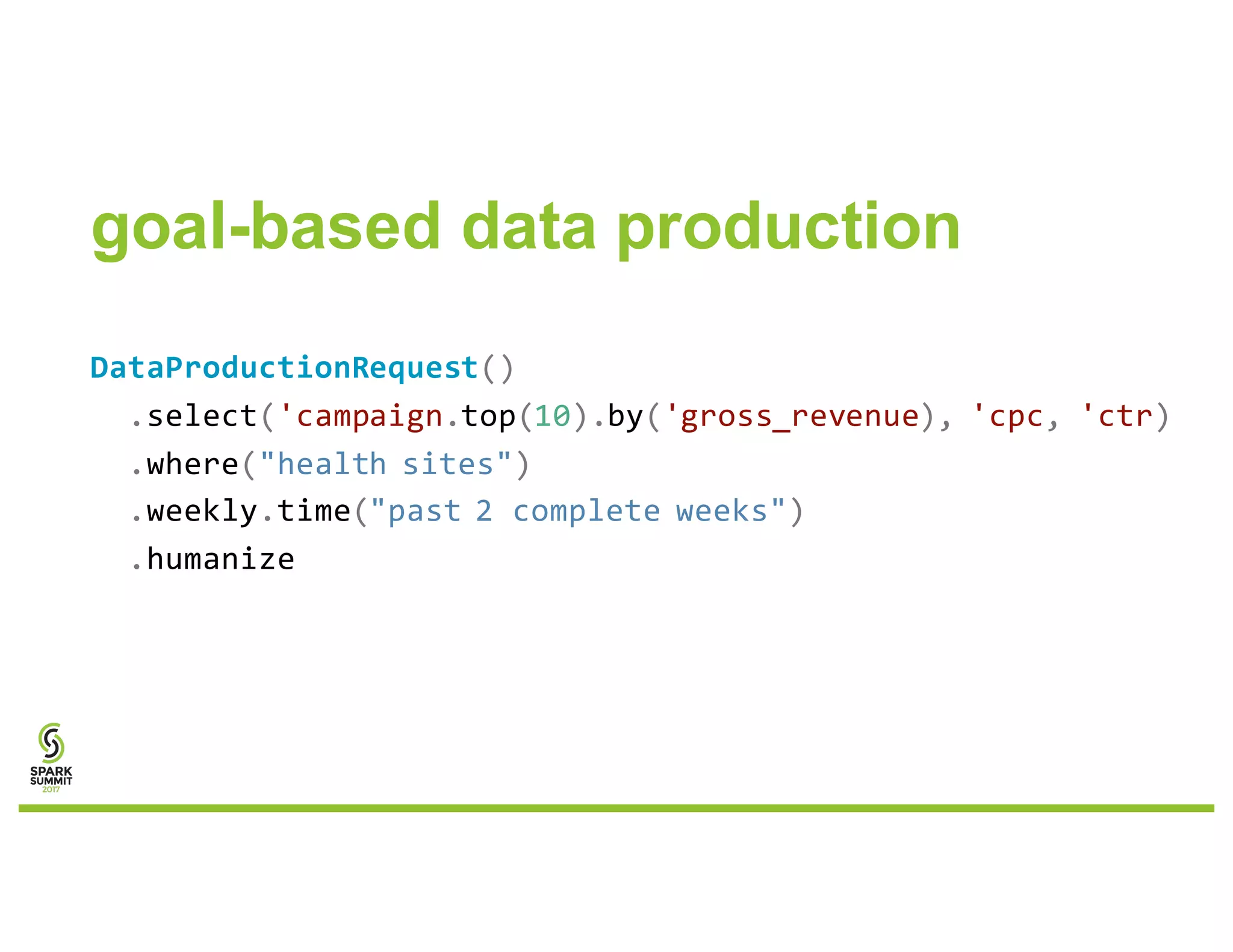

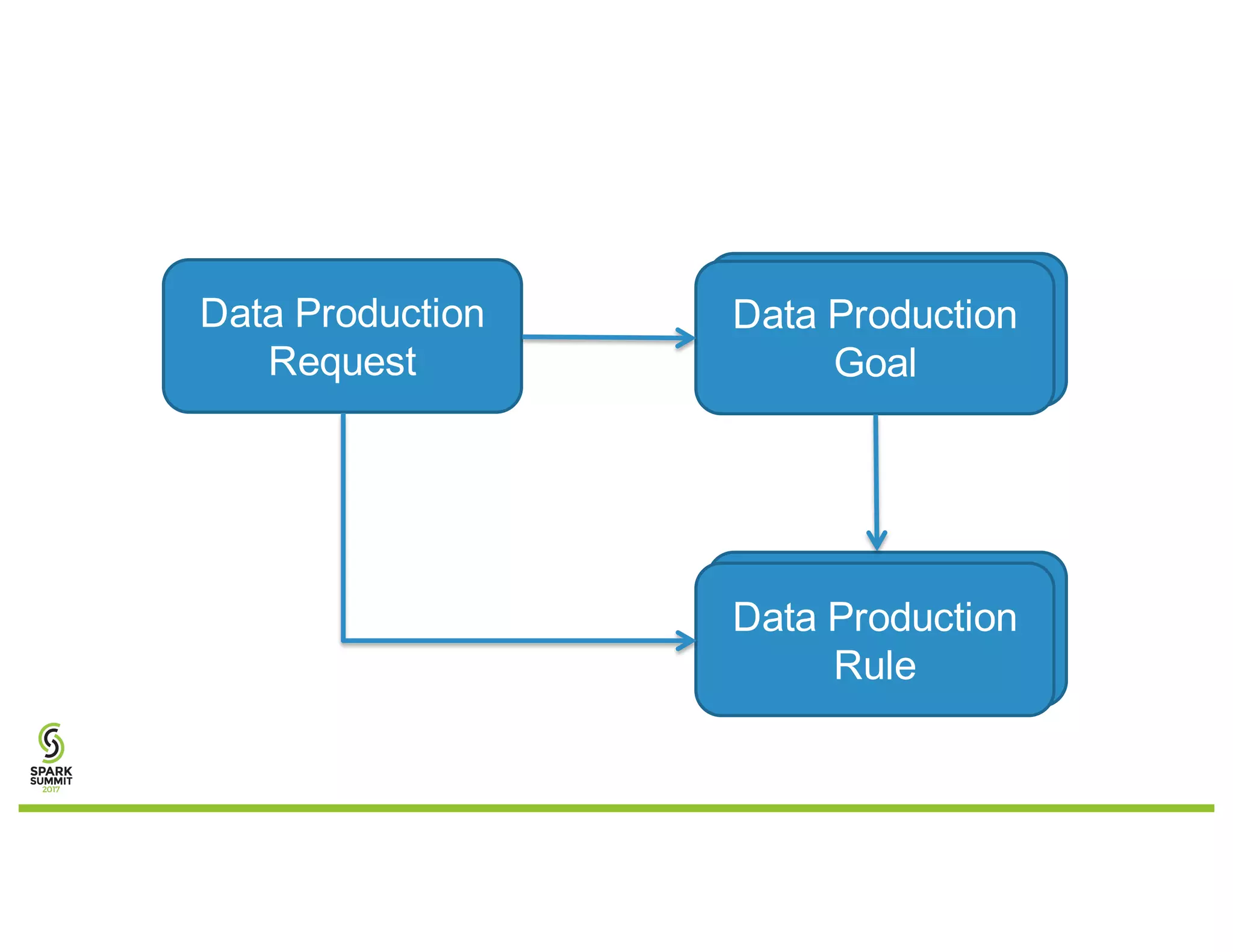

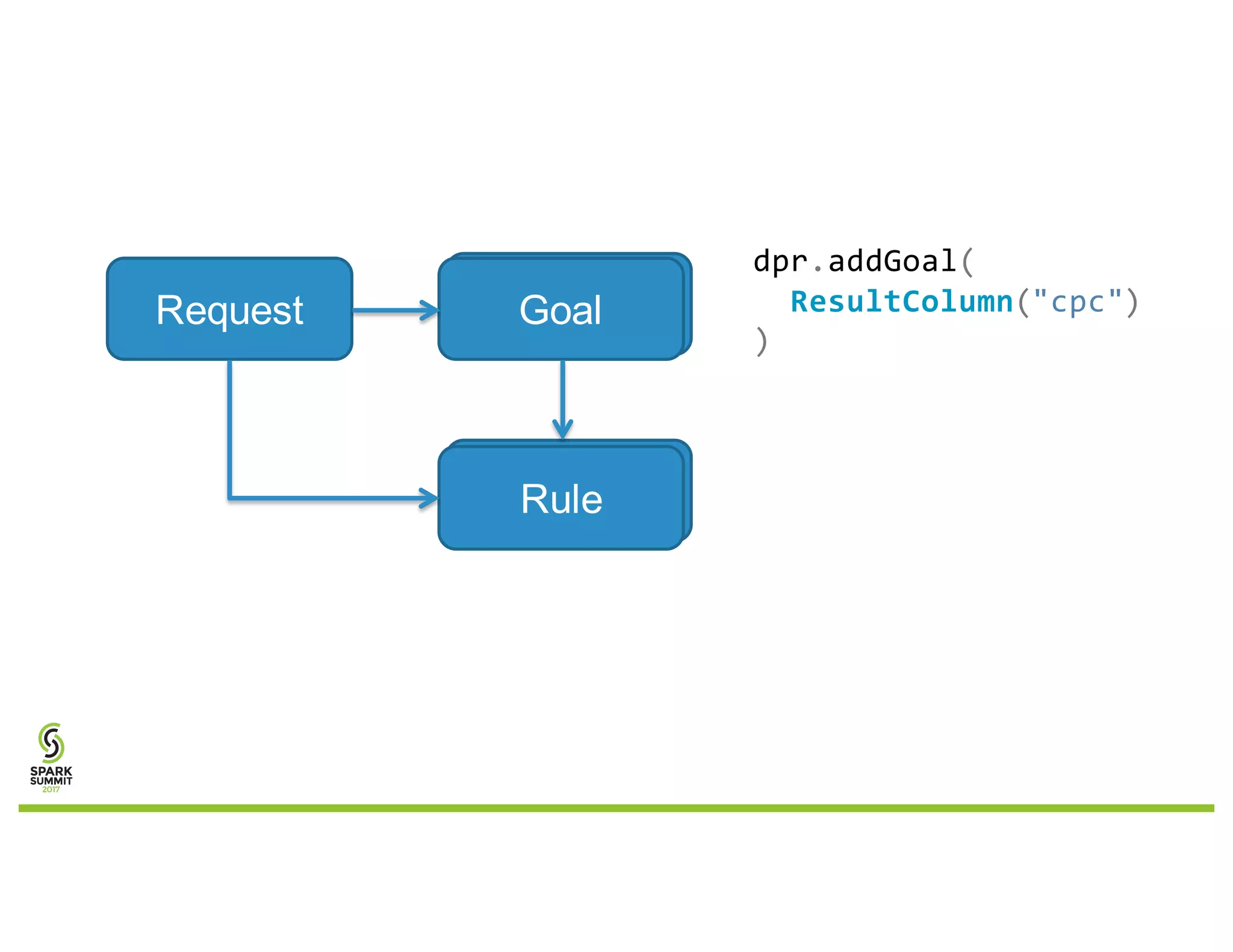

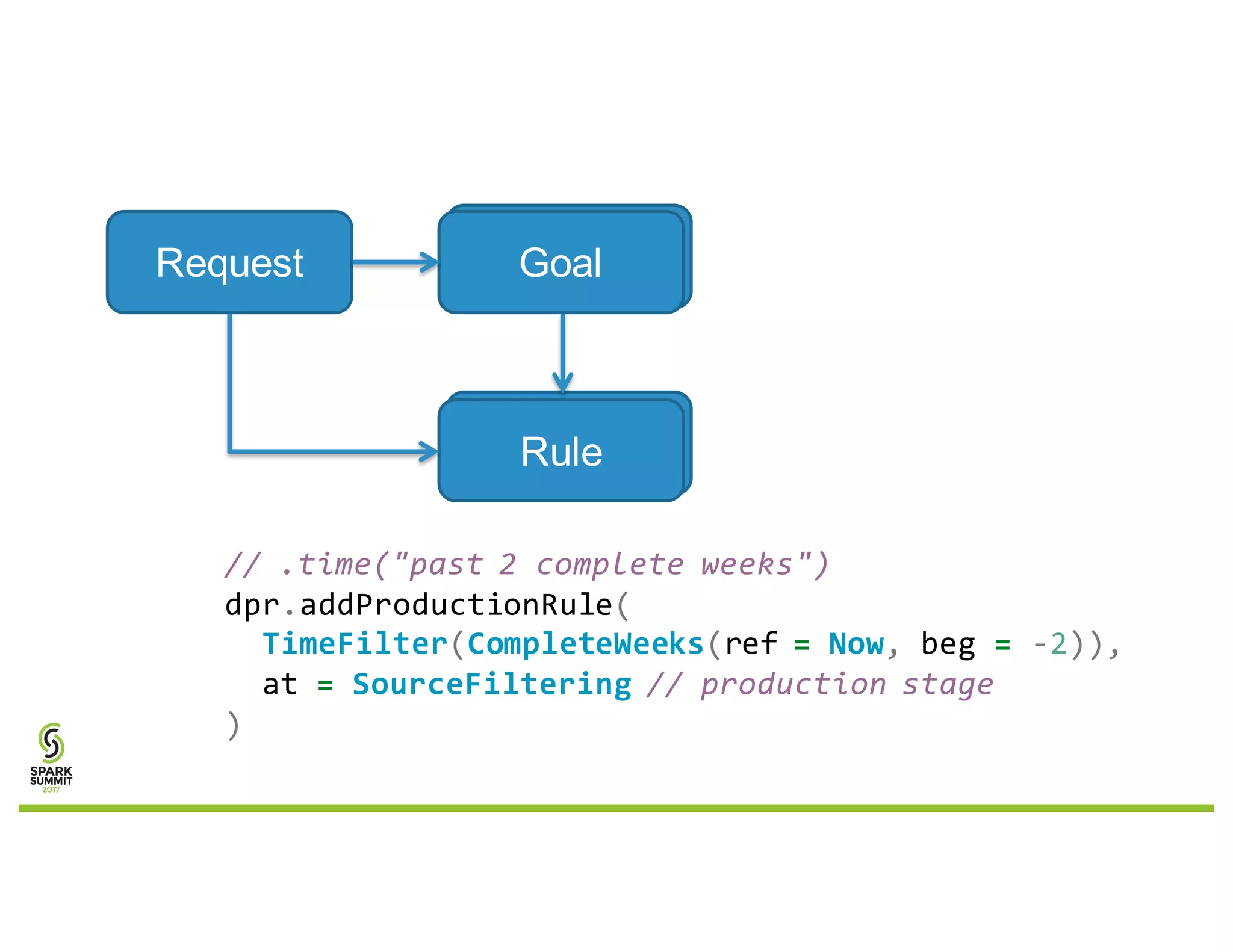

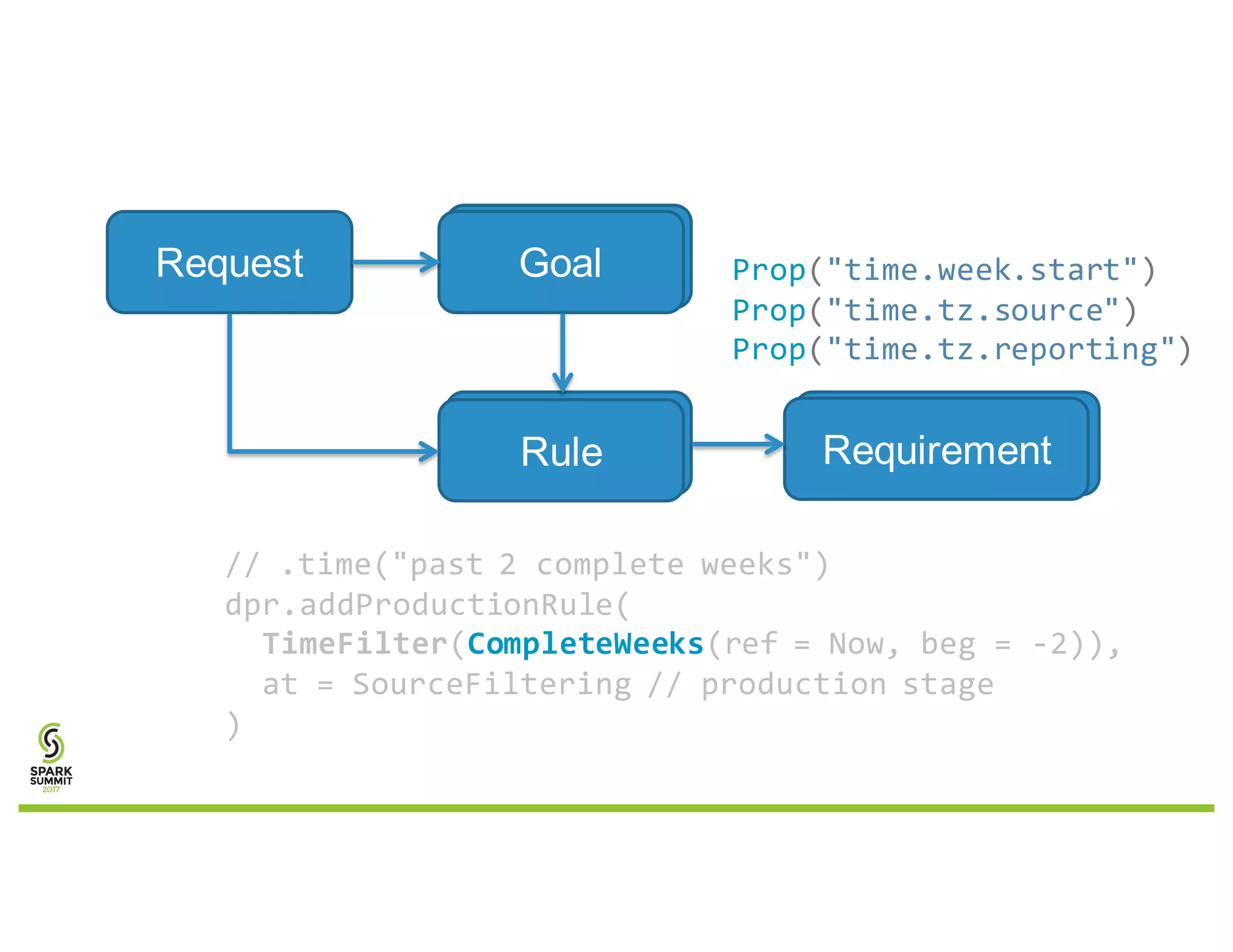

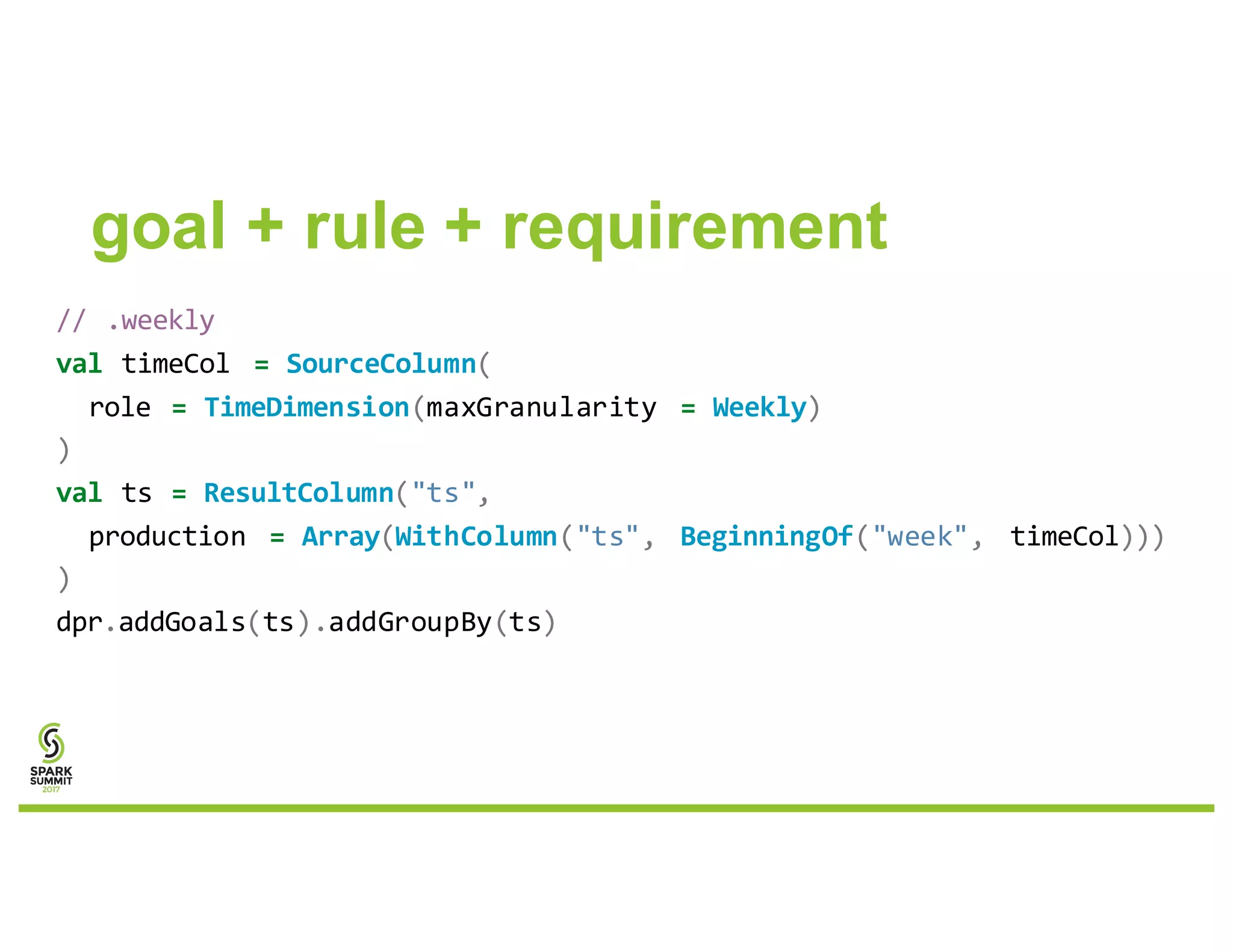

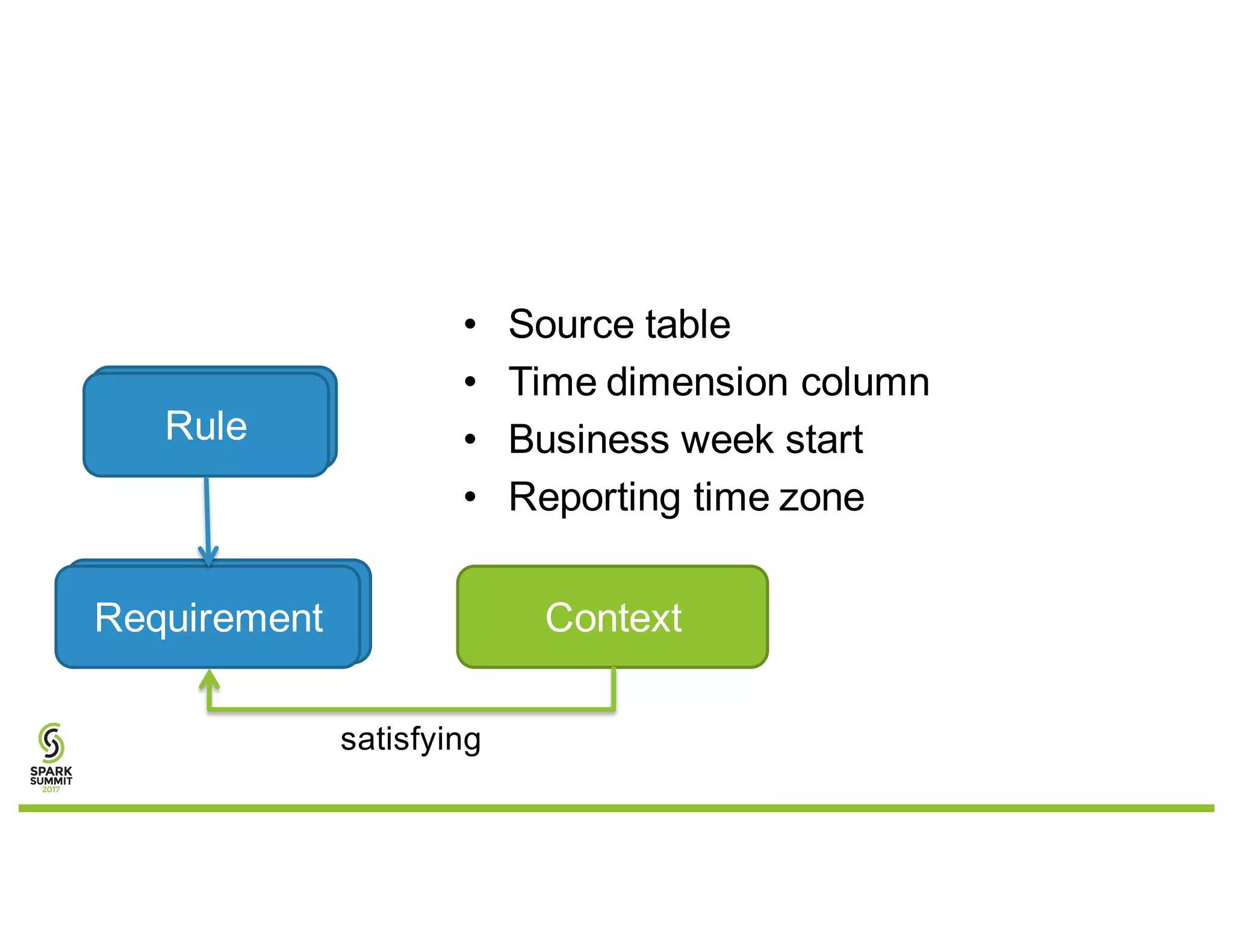

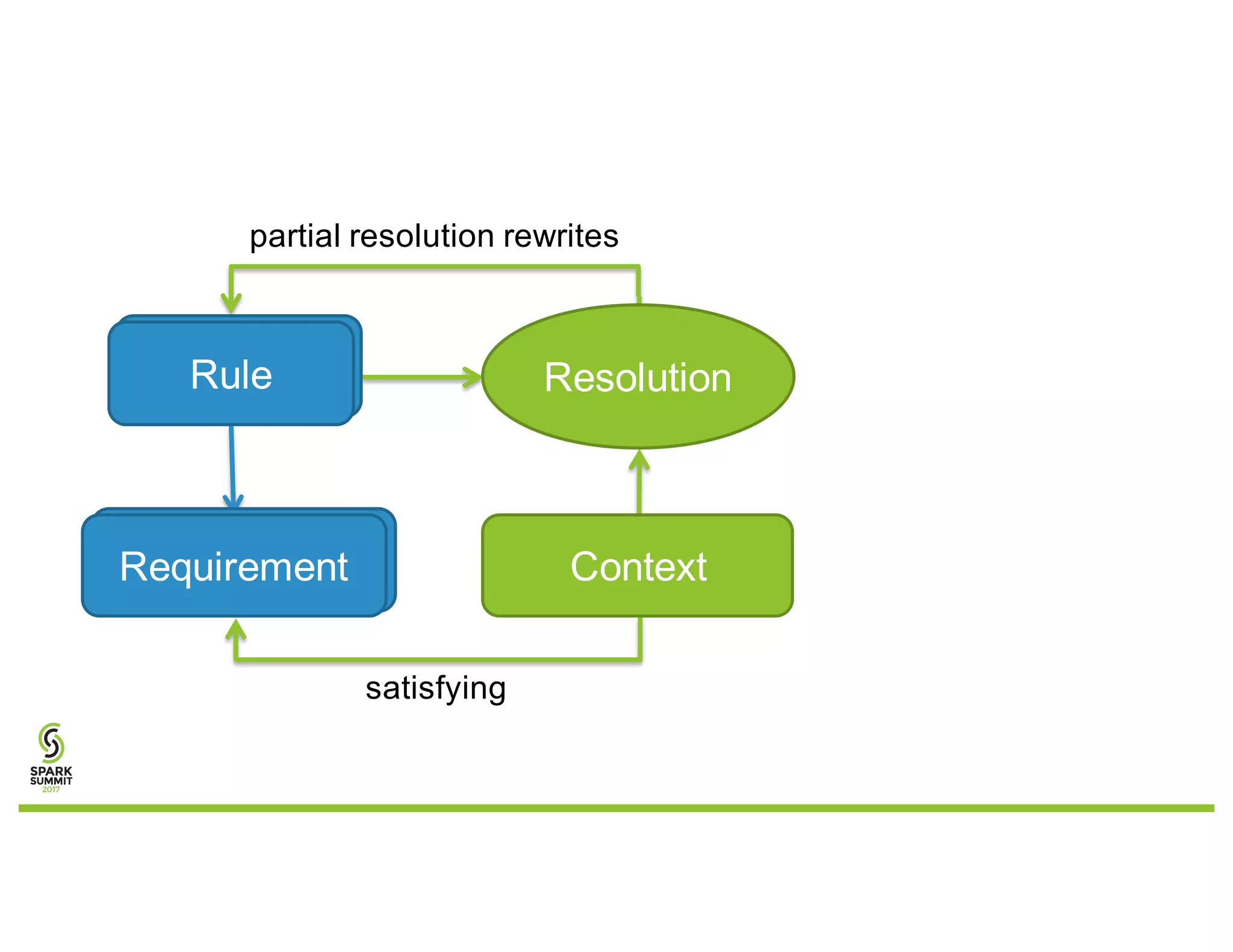

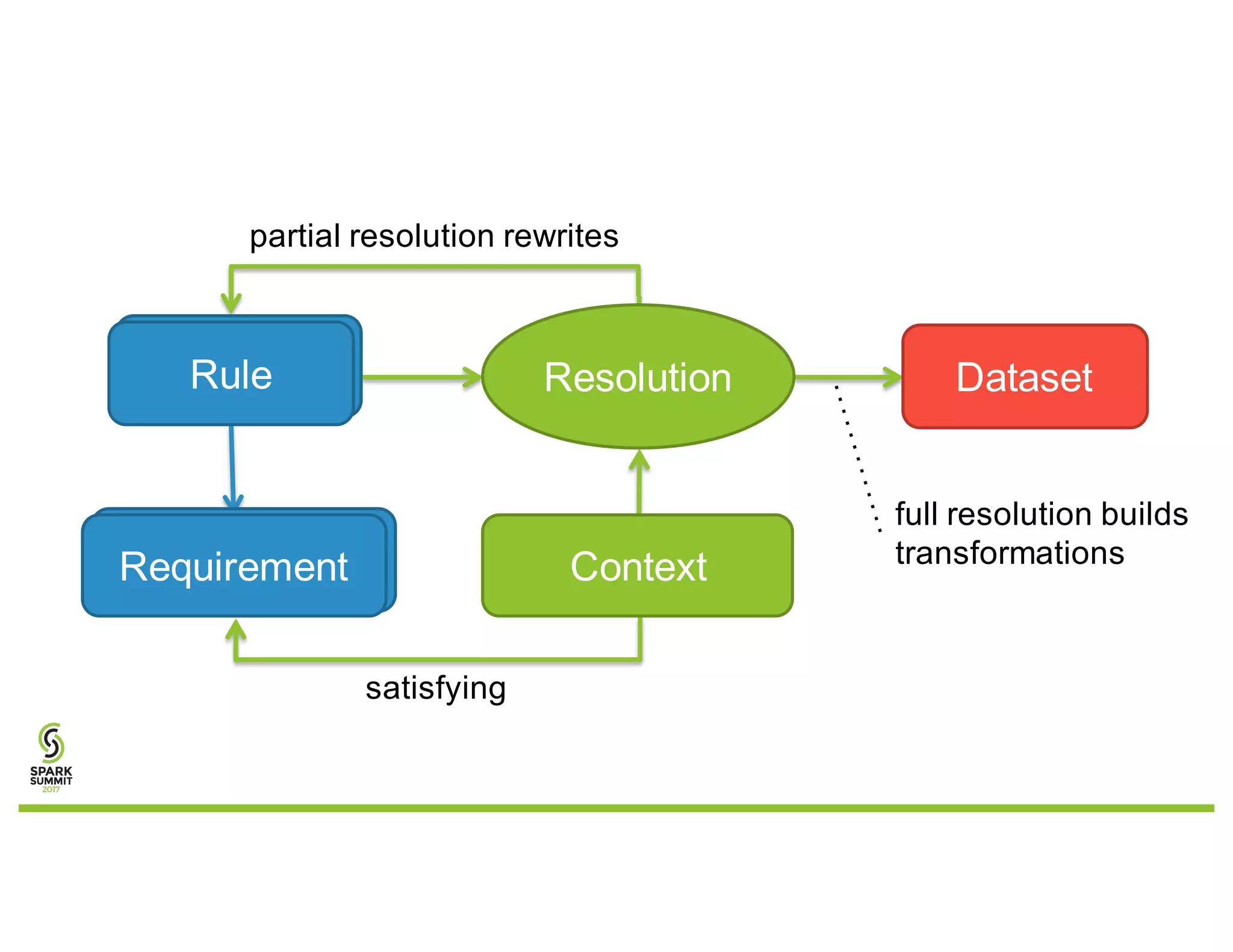

The document discusses a goal-based data production approach for a smart data warehouse, emphasizing simpler data requests and efficient querying of datasets at petabyte scale. It outlines the complexity of traditional data processing and introduces a more automated way to generate reports, letting business users work with Spark directly without deep technical knowledge. The approach aims to improve productivity, performance, and collaboration while reducing the cost and complexity of data operations.

![automatic joins by column name

{

"table": "dimension_campaigns",

"primary_key": ["campaign_id"],

"joins": [ {"fields": ["campaign_id"]} ]

}

join any table with campaign_id column](https://image.slidesharecdn.com/079simsimeonov-170614195123/75/Goal-Based-Data-Production-with-Sim-Simeonov-32-2048.jpg)
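
The slide above only shows the configuration, not how it is resolved. As a rough sketch of what "join any table with campaign_id column" could mean in practice, the Python below scans a catalog of table schemas and picks every other table that contains all the fields named in a join rule. The `catalog` contents, the `joinable_tables` helper, and the table names other than `dimension_campaigns` are hypothetical and are not taken from the talk; the config is the one from the slide, written as valid JSON.

```python
import json

# Config from the slide, written as valid JSON.
name_join_config = json.loads("""
{
  "table": "dimension_campaigns",
  "primary_key": ["campaign_id"],
  "joins": [ {"fields": ["campaign_id"]} ]
}
""")

# Hypothetical catalog (assumption): table name -> list of column names.
catalog = {
    "dimension_campaigns": ["campaign_id", "campaign_name"],
    "fact_impressions": ["impression_id", "campaign_id", "ts"],
    "fact_clicks": ["click_id", "campaign_id", "ts"],
    "dimension_publishers": ["publisher_id", "publisher_name"],
}


def joinable_tables(config, catalog):
    """Return {table: join_fields} for every other table that has
    all the columns listed in one of the config's join rules."""
    result = {}
    for rule in config["joins"]:
        fields = rule["fields"]
        for table, columns in catalog.items():
            if table == config["table"]:
                continue  # never join the table to itself
            if all(field in columns for field in fields):
                result.setdefault(table, fields)
    return result


name_matches = joinable_tables(name_join_config, catalog)
for table, fields in name_matches.items():
    print(f"{name_join_config['table']} joins {table} on {fields}")
```

In this sketch `dimension_publishers` is skipped because it has no `campaign_id` column, which is the whole point of matching by column name: no join has to be declared per table.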

![automatic semantic joins

{

"table": "dimension_campaigns",

"primary_key": ["campaign_id"],

"joins": [ {"fields": ["ref:swoop.campaign.id"]} ]

}

join any table with a Swoop campaign ID](https://image.slidesharecdn.com/079simsimeonov-170614195123/75/Goal-Based-Data-Production-with-Sim-Simeonov-33-2048.jpg)
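
The slide does not say how a `ref:` field is resolved, so the following is only an illustrative sketch. It assumes each column can carry a semantic tag such as `swoop.campaign.id`, and that a `ref:` join matches on that tag rather than on the column name. The `semantic_catalog` structure, the `resolve_semantic_joins` helper, and all table and column names other than `dimension_campaigns` and `campaign_id` are hypothetical.

```python
REF_PREFIX = "ref:"

# Config from the slide, expressed as a Python dict.
semantic_join_config = {
    "table": "dimension_campaigns",
    "primary_key": ["campaign_id"],
    "joins": [{"fields": ["ref:swoop.campaign.id"]}],
}

# Hypothetical catalog (assumption): table -> {column name: semantic tag or None}.
semantic_catalog = {
    "dimension_campaigns": {"campaign_id": "swoop.campaign.id", "campaign_name": None},
    "fact_impressions": {"impression_id": None, "cid": "swoop.campaign.id"},
    "legacy_clicks": {"click_id": None, "swoop_campaign": "swoop.campaign.id"},
    # Same column name, different meaning: must NOT be joined.
    "partner_campaigns": {"campaign_id": "partner.campaign.id"},
}


def resolve_semantic_joins(config, catalog):
    """Return {table: (left_column, right_column)} for tables holding a
    column tagged with the semantic reference named in the config."""
    own_columns = catalog[config["table"]]
    joins = {}
    for rule in config["joins"]:
        for field in rule["fields"]:
            if not field.startswith(REF_PREFIX):
                continue  # plain column names are out of scope for this sketch
            ref = field[len(REF_PREFIX):]
            # Column of the configured table that carries the same tag.
            left = next((c for c, tag in own_columns.items() if tag == ref), None)
            if left is None:
                continue
            for table, columns in catalog.items():
                if table == config["table"]:
                    continue
                for column, tag in columns.items():
                    if tag == ref:
                        joins[table] = (left, column)
    return joins


semantic_matches = resolve_semantic_joins(semantic_join_config, semantic_catalog)
for table, (left, right) in semantic_matches.items():
    print(f"dimension_campaigns.{left} = {table}.{right}")
```

Under these assumptions, tables whose campaign column is named `cid` or `swoop_campaign` are still matched, while `partner_campaigns` is excluded even though it has a column called `campaign_id`, because its tag refers to a different kind of ID.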