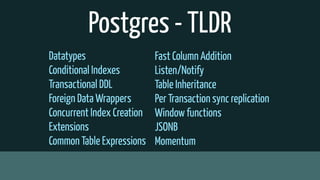

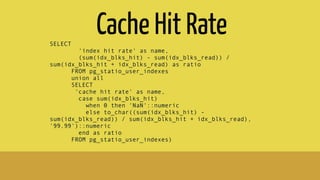

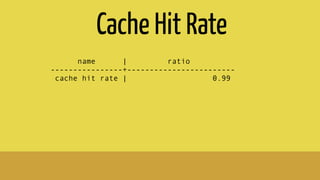

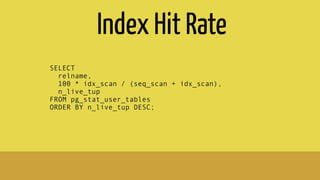

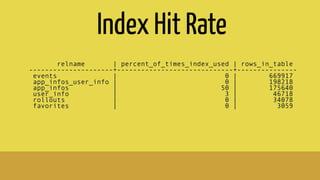

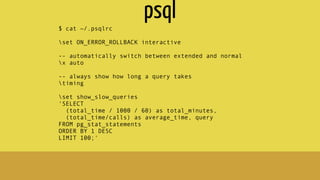

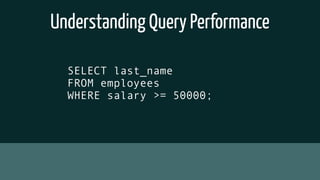

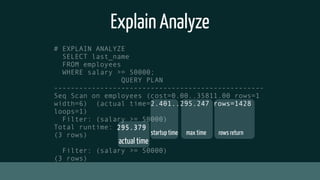

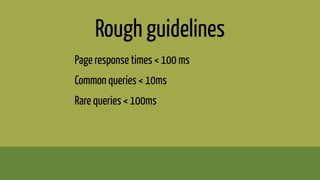

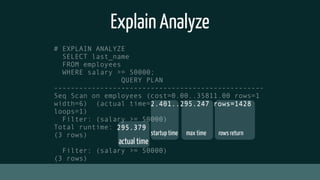

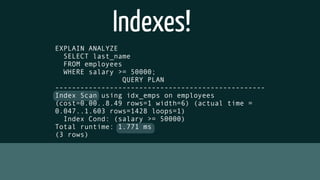

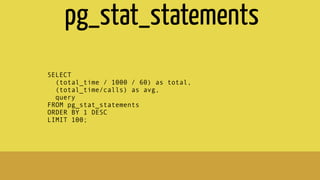

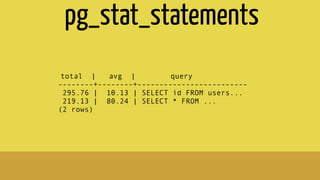

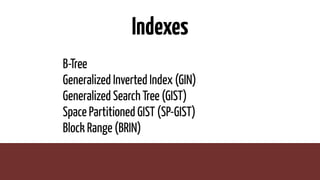

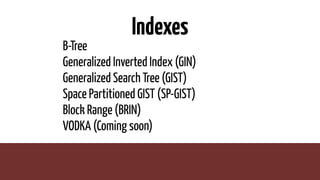

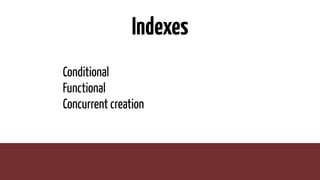

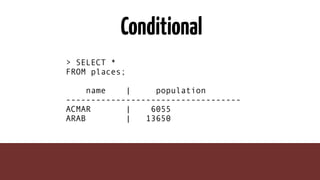

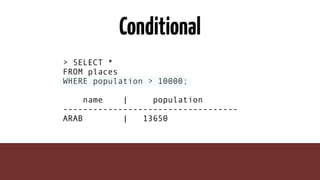

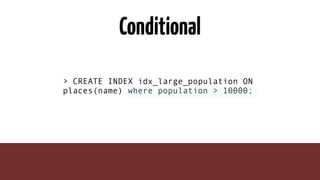

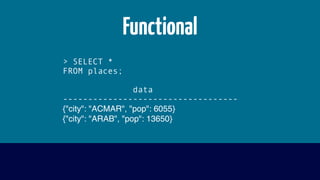

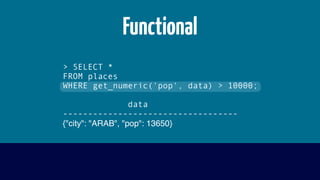

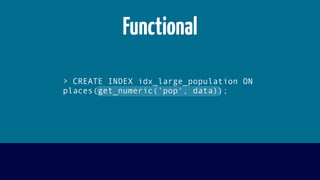

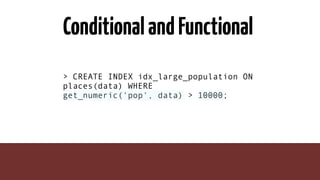

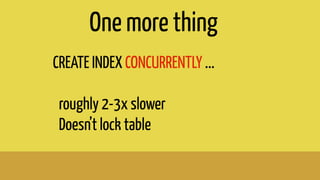

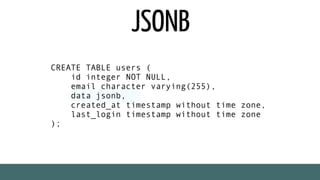

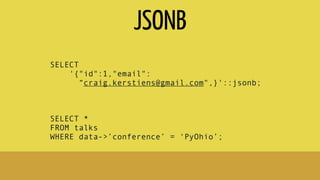

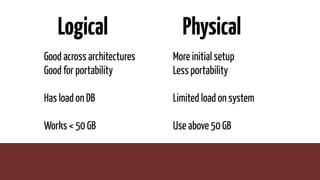

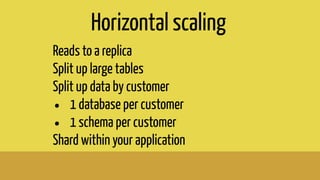

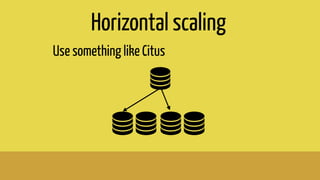

The document covers PostgreSQL performance optimization, including conditional (partial) indexes, query performance analysis, and cache utilization. It surveys the different index types and the strategies for horizontal and vertical scaling, and gives guidelines for performance metrics such as the cache hit rate and the index hit rate. It also stresses reading query execution plans and choosing an indexing strategy to improve database efficiency.
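
As a rough illustration of the hit-rate metrics mentioned above, the sketch below computes a ratio from block counters. It assumes the counters come from PostgreSQL's `pg_statio_user_tables` view (`heap_blks_hit`, `heap_blks_read`) or `pg_statio_user_indexes` view (`idx_blks_hit`, `idx_blks_read`); the document may define the index hit rate differently. The function name `hit_rate` and the sample numbers are hypothetical.

```python
from typing import Optional


def hit_rate(blocks_hit: int, blocks_read: int) -> Optional[float]:
    """Return the fraction of block requests served from the buffer cache.

    blocks_hit  -- blocks found in shared buffers (e.g. heap_blks_hit)
    blocks_read -- blocks that had to be read from outside shared buffers
                   (e.g. heap_blks_read)
    Returns None when there has been no activity, to avoid dividing by zero.
    """
    total = blocks_hit + blocks_read
    if total == 0:
        return None
    return blocks_hit / total


# Illustrative counters only, not measurements from a real system.
table_rate = hit_rate(blocks_hit=990, blocks_read=10)
index_rate = hit_rate(blocks_hit=0, blocks_read=0)
```

Returning `None` for an idle table or index keeps "no data yet" distinct from a true rate of zero, which matters when the value feeds an alert threshold.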