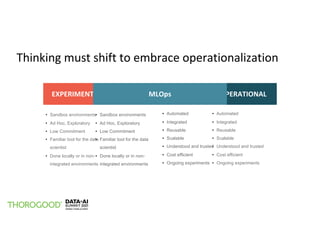

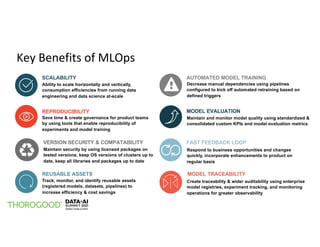

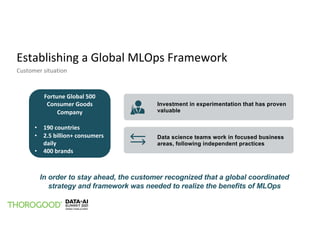

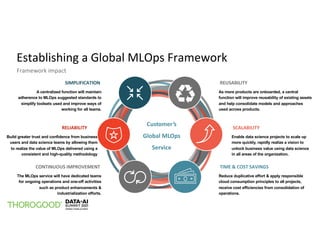

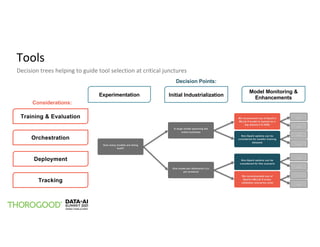

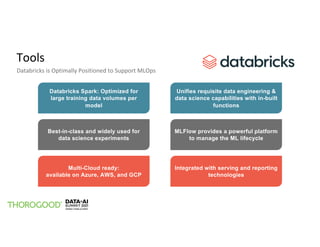

The document describes Thorogood Associates' approach to implementing MLOps and presents a case study on establishing a global MLOps framework for a Fortune Global 500 consumer goods company. It emphasizes scalability, model reusability, operational efficiency, and continuous improvement as the means of increasing the value of machine learning and data science across the organization. The key takeaways are the need for a well-defined framework, collaboration among data science experts, and integrated tooling such as Databricks and MLflow to streamline the MLOps process.