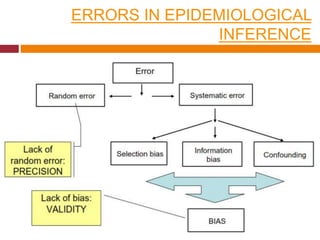

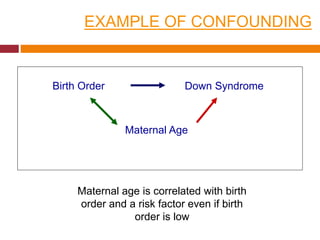

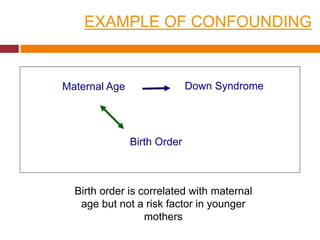

This document discusses the types of error and bias that can occur in epidemiological studies. Random error arises by chance and makes estimates imprecise, while systematic error, or bias, makes them invalid: the estimate deviates from the true value no matter how precisely it is measured. The types of bias discussed are selection bias, information bias, and confounding. Selection bias can arise from the way cases and controls are selected, while information bias occurs when exposure or disease status is misclassified. The document emphasizes that both random and systematic error must be reduced to obtain valid study results.
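
As a rough illustration of information bias, the sketch below applies imperfect exposure classification to a case-control 2x2 table and compares the resulting odds ratio with the true one. All counts, the sensitivity and specificity values, and the function names are hypothetical and chosen only for illustration; they do not come from the document. The misclassification is assumed to be the same for cases and controls, and expected counts are used instead of random draws so that the effect of the bias is isolated from random error.

```python
def odds_ratio(exposed_cases, unexposed_cases, exposed_controls, unexposed_controls):
    """Odds ratio from the four cells of a case-control 2x2 table."""
    return (exposed_cases * unexposed_controls) / (unexposed_cases * exposed_controls)


def misclassify(exposed, unexposed, sensitivity, specificity):
    """Expected observed counts when exposure is classified imperfectly.

    sensitivity: probability that a truly exposed person is recorded as exposed
    specificity: probability that a truly unexposed person is recorded as unexposed
    """
    observed_exposed = exposed * sensitivity + unexposed * (1 - specificity)
    observed_unexposed = exposed * (1 - sensitivity) + unexposed * specificity
    return observed_exposed, observed_unexposed


# Hypothetical true counts (assumed for illustration only).
true_cases = (100, 100)      # (exposed, unexposed)
true_controls = (50, 150)    # (exposed, unexposed)

# Assumed classification accuracy, identical for cases and controls.
SENSITIVITY = 0.8
SPECIFICITY = 0.9

true_or = odds_ratio(*true_cases, *true_controls)

observed_cases = misclassify(*true_cases, SENSITIVITY, SPECIFICITY)
observed_controls = misclassify(*true_controls, SENSITIVITY, SPECIFICITY)
observed_or = odds_ratio(*observed_cases, *observed_controls)

# Repeat with a study ten times larger: the bias is unchanged.
big_cases = misclassify(*(10 * n for n in true_cases), SENSITIVITY, SPECIFICITY)
big_controls = misclassify(*(10 * n for n in true_controls), SENSITIVITY, SPECIFICITY)
big_observed_or = odds_ratio(*big_cases, *big_controls)

print(f"true OR:                 {true_or:.2f}")
print(f"observed OR:             {observed_or:.2f}")
print(f"observed OR (10x study): {big_observed_or:.2f}")
```

In this example the observed odds ratio lies closer to 1 than the true odds ratio, and enlarging the study does not change it. A larger sample reduces random error, but it does not correct a systematic one.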