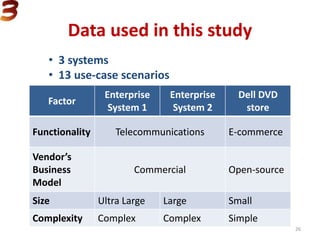

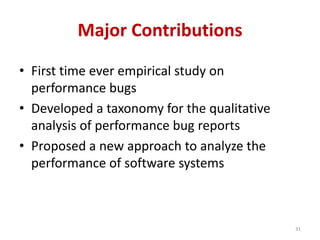

This document summarizes three empirical studies on software performance, two of which focus on performance bugs:

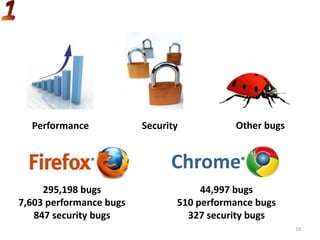

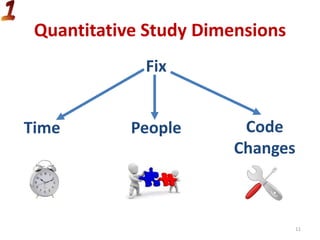

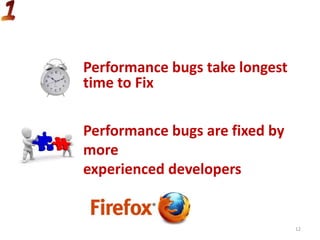

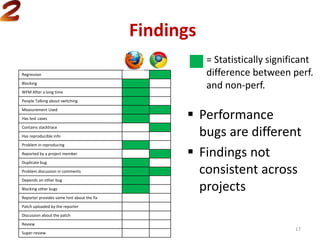

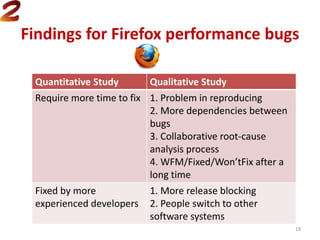

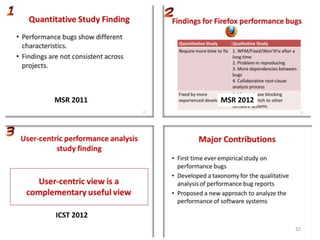

1. A quantitative study found that performance bugs differ from other bugs in several characteristics (for example, they take longer to fix), but these findings were not consistent across the projects studied.

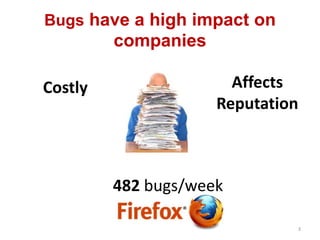

2. A qualitative study of bug reports found that performance bugs have a higher impact than other bugs, that their reports contain more context, and that fixing them requires more collaboration.

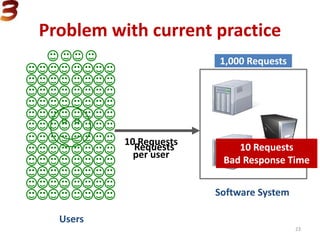

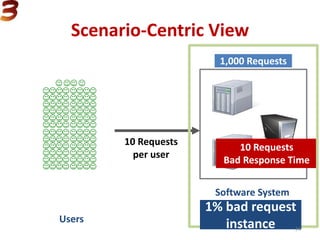

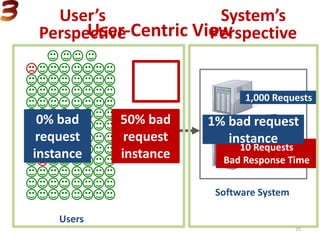

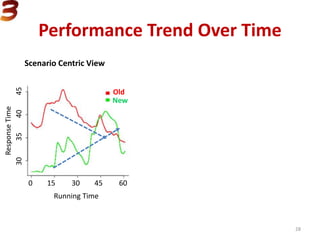

3. A study of user-centric performance analysis found that examining performance from individual users' perspectives complements traditional scenario-centric analyses. Analyzing users individually revealed performance trends and levels of consistency that differed from those seen in aggregate analyses.
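The difference between per-user and aggregate views can be illustrated with a minimal sketch. It assumes hypothetical response-time samples for three users in two periods (before and after a release). The data, the names `SAMPLES`, `aggregate_change`, `per_user_change` and `regressed_users`, and the 10 ms threshold are illustrative assumptions, not the study's method or data.

```python
from statistics import mean

# Hypothetical response times in milliseconds, keyed by user and then by
# period (e.g. before and after a release). Illustrative data only.
SAMPLES = {
    "user_a": {"before": [100, 100], "after": [80, 80]},
    "user_b": {"before": [100, 100], "after": [80, 80]},
    "user_c": {"before": [100, 100], "after": [140, 140]},
}


def aggregate_change(samples):
    """Change in mean response time when all users' samples are pooled."""
    before = [t for periods in samples.values() for t in periods["before"]]
    after = [t for periods in samples.values() for t in periods["after"]]
    return mean(after) - mean(before)


def per_user_change(samples):
    """Change in mean response time computed separately for each user."""
    return {
        user: mean(periods["after"]) - mean(periods["before"])
        for user, periods in samples.items()
    }


def regressed_users(samples, threshold_ms):
    """Users whose own mean response time grew by more than threshold_ms."""
    return sorted(
        user
        for user, delta in per_user_change(samples).items()
        if delta > threshold_ms
    )


if __name__ == "__main__":
    print("aggregate change (ms):", aggregate_change(SAMPLES))
    print("per-user change (ms):", per_user_change(SAMPLES))
    print("regressed users:", regressed_users(SAMPLES, threshold_ms=10))
```

With this data the pooled mean stays at 100 ms in both periods, so an aggregate analysis reports no change, while the per-user view shows two users improving by 20 ms and one user regressing by 40 ms.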