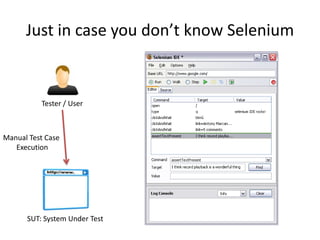

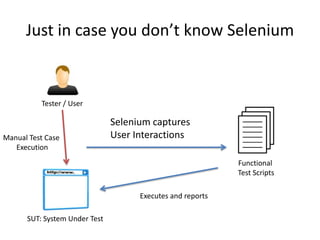

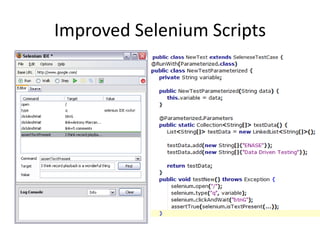

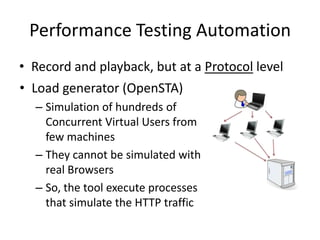

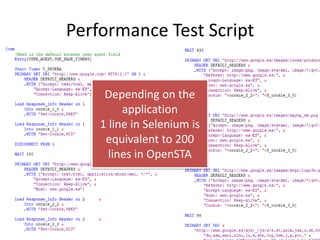

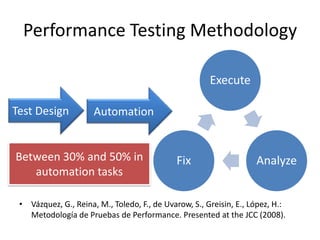

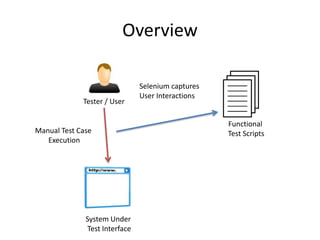

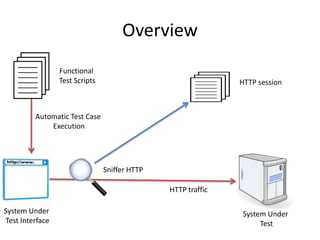

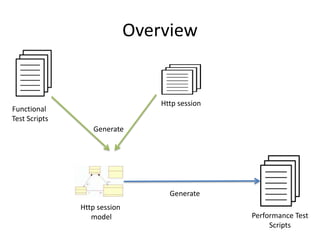

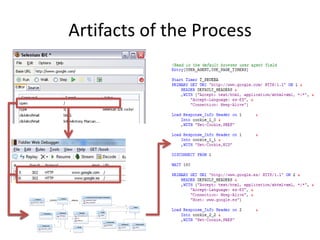

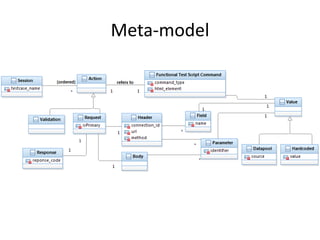

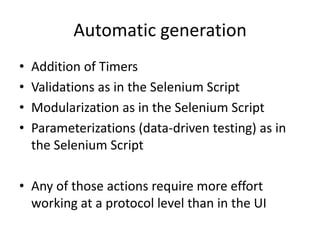

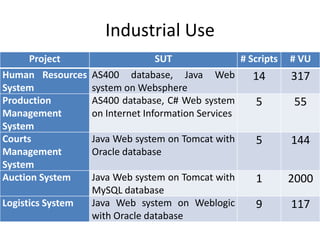

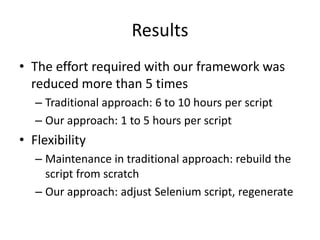

The document discusses the automation of functional and performance testing, focusing on the transition from functional test scripts to performance test scripts with tools such as Selenium and OpenSTA. It emphasizes the need to reduce the cost and improve the flexibility of performance testing, and it presents a methodology and results that demonstrate significant efficiency gains in script generation. Future work includes generating performance tests compatible with different load generators and taking various protocols into account.