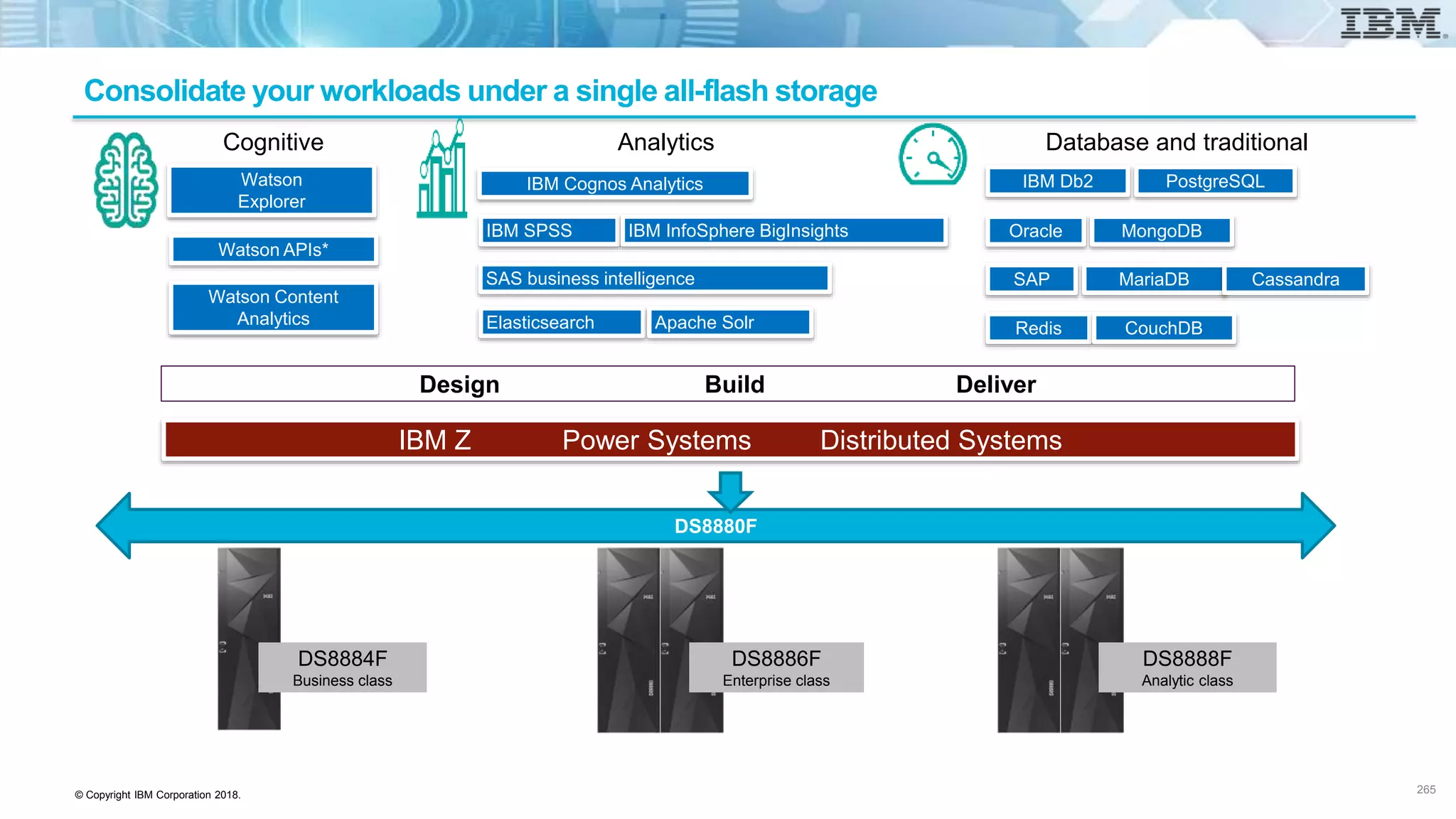

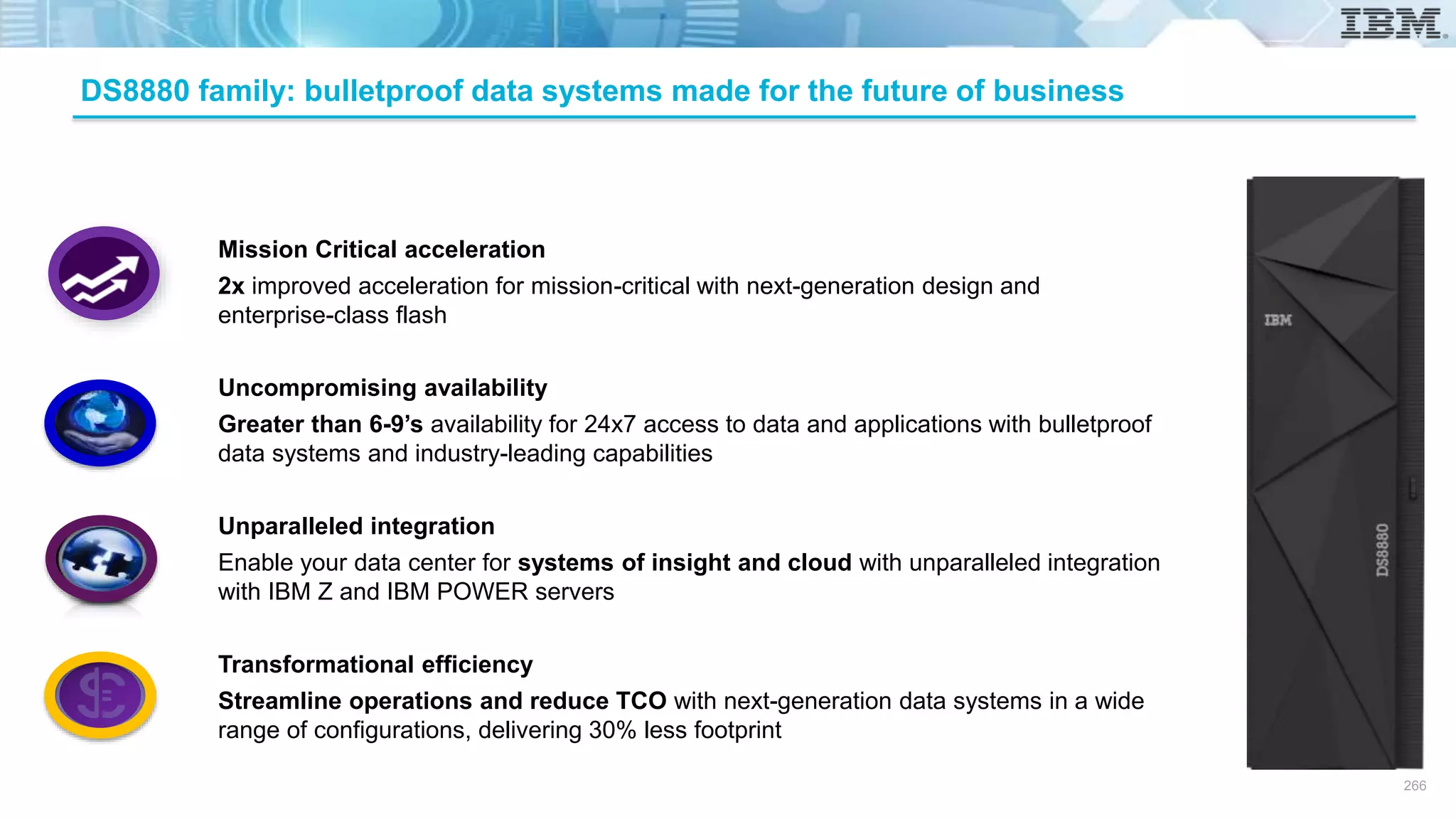

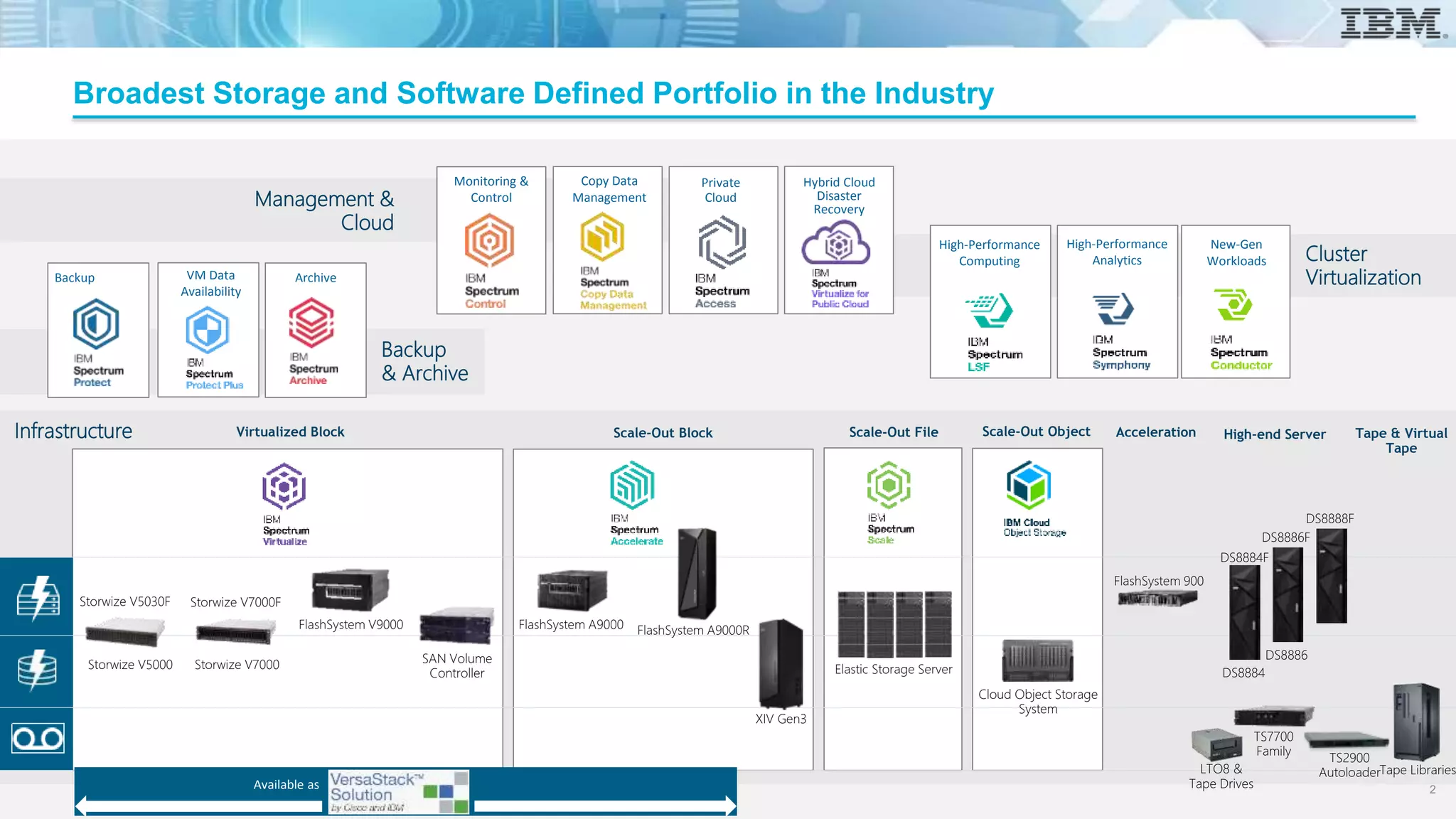

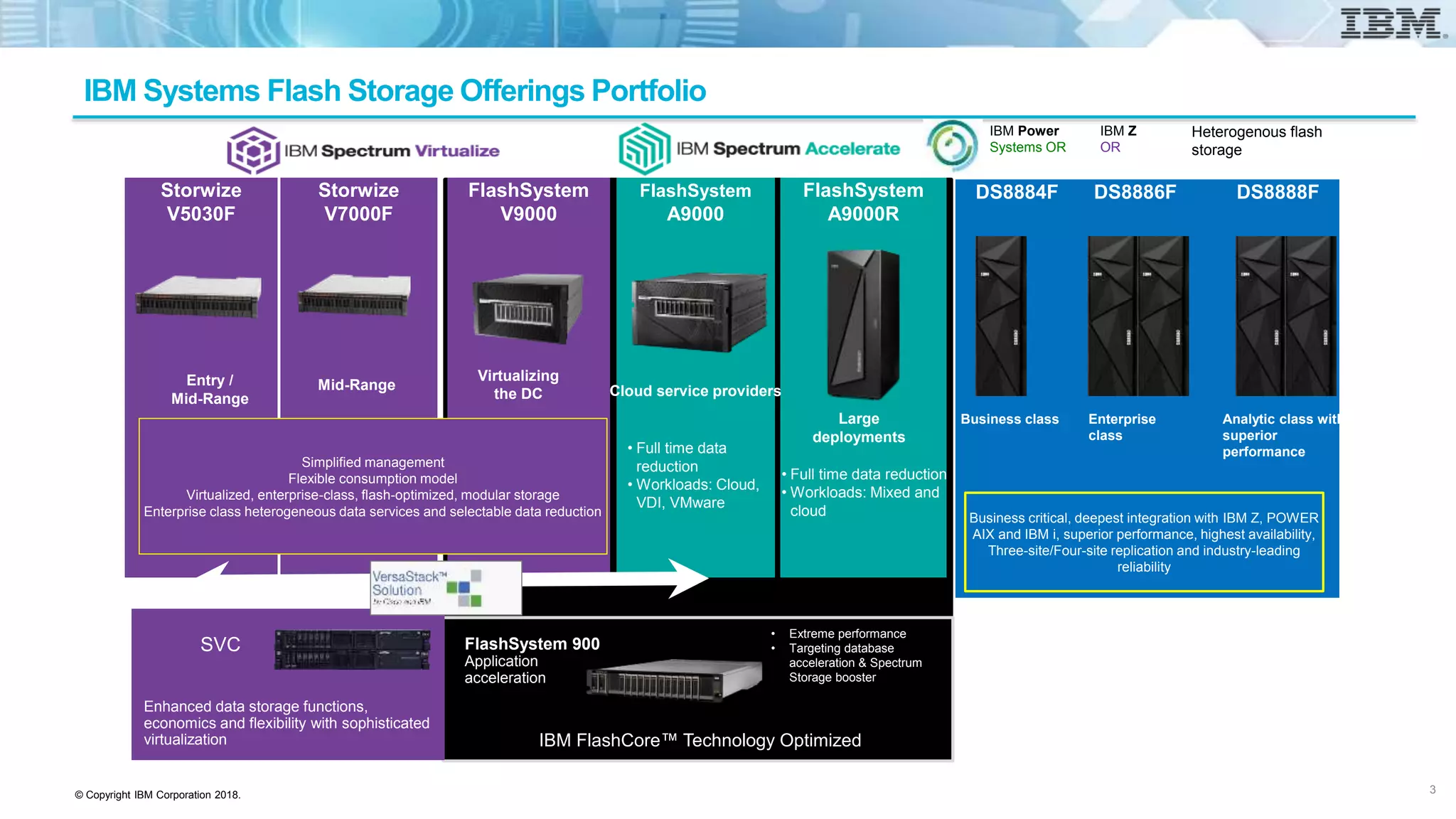

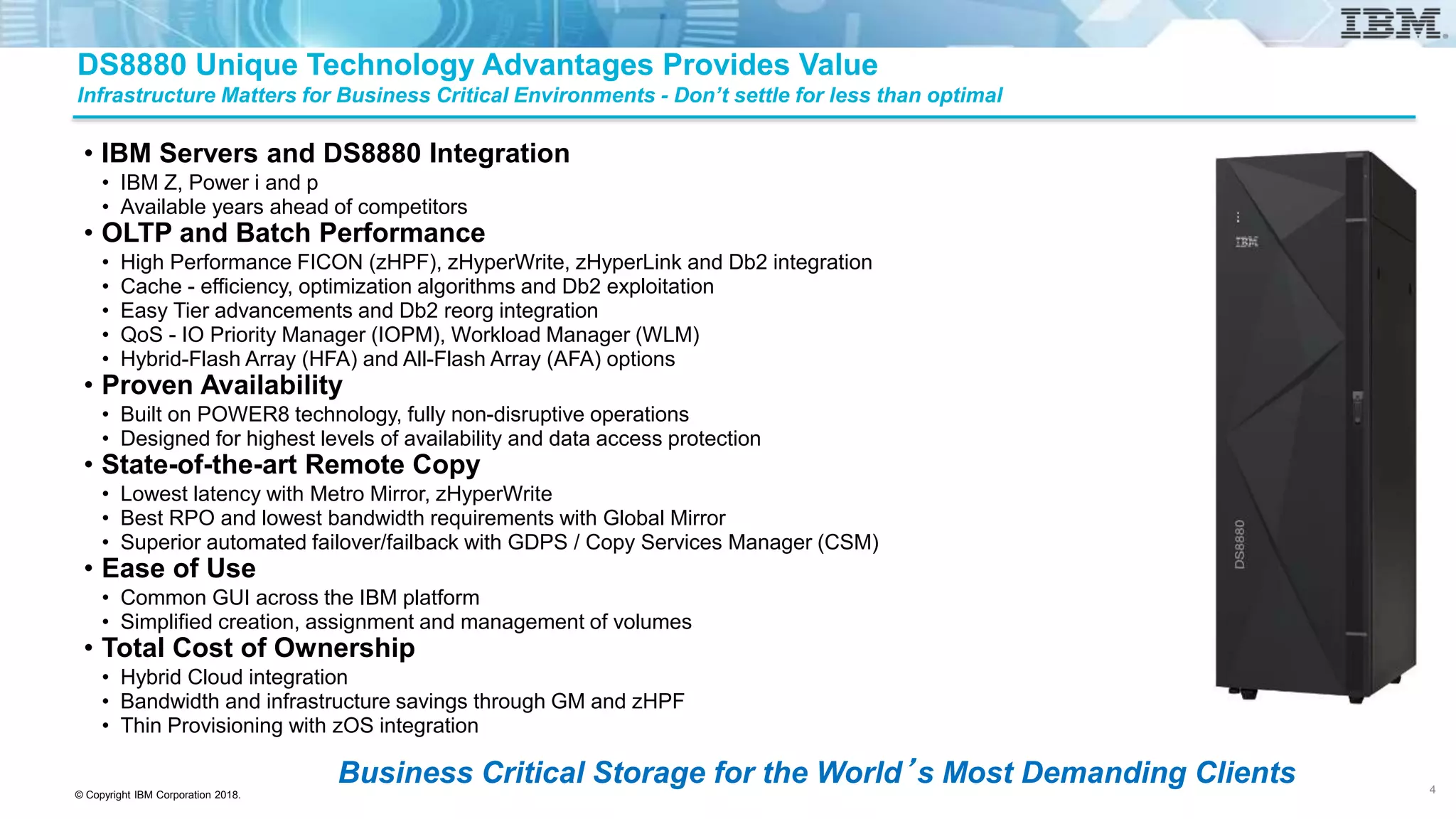

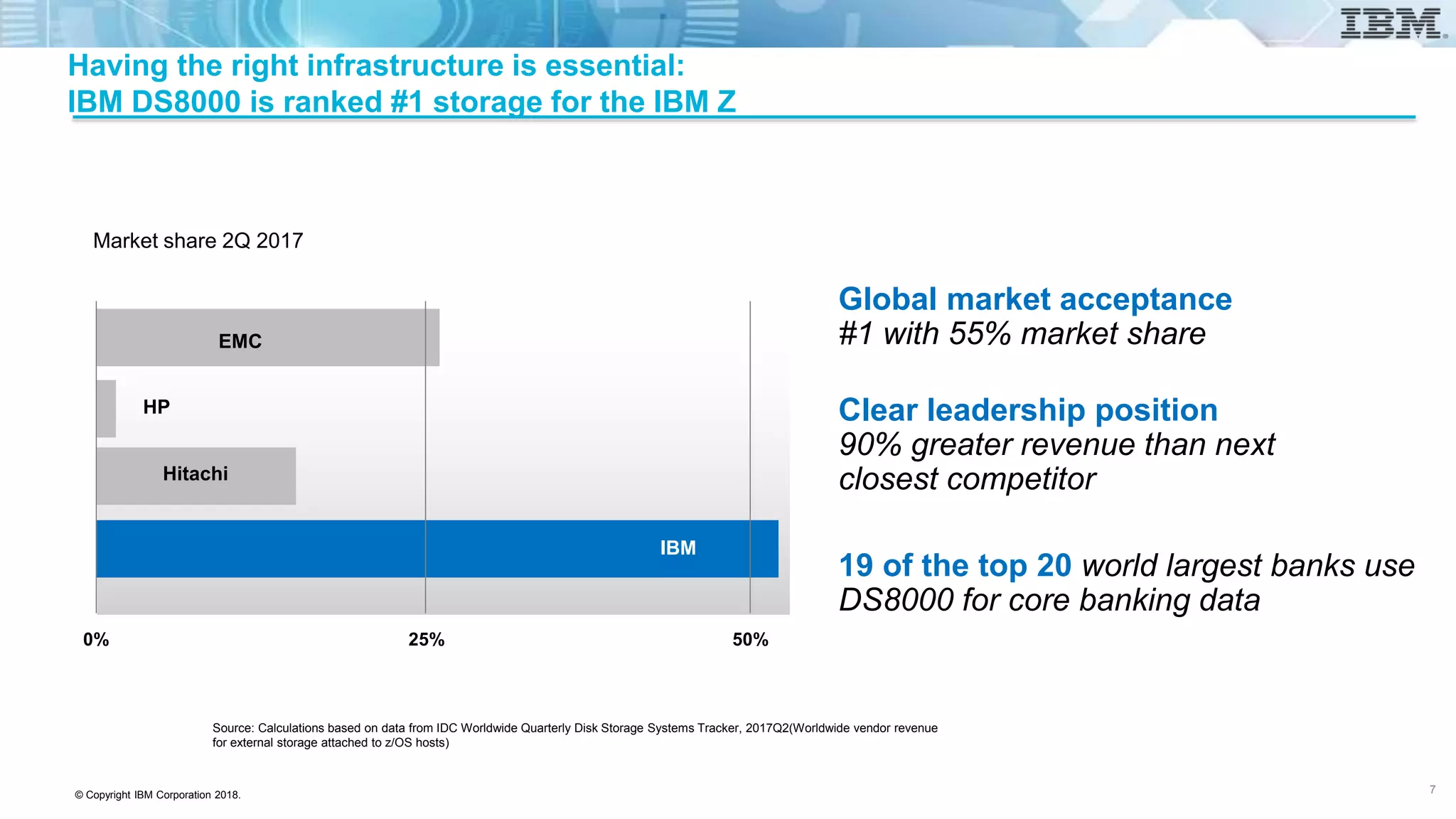

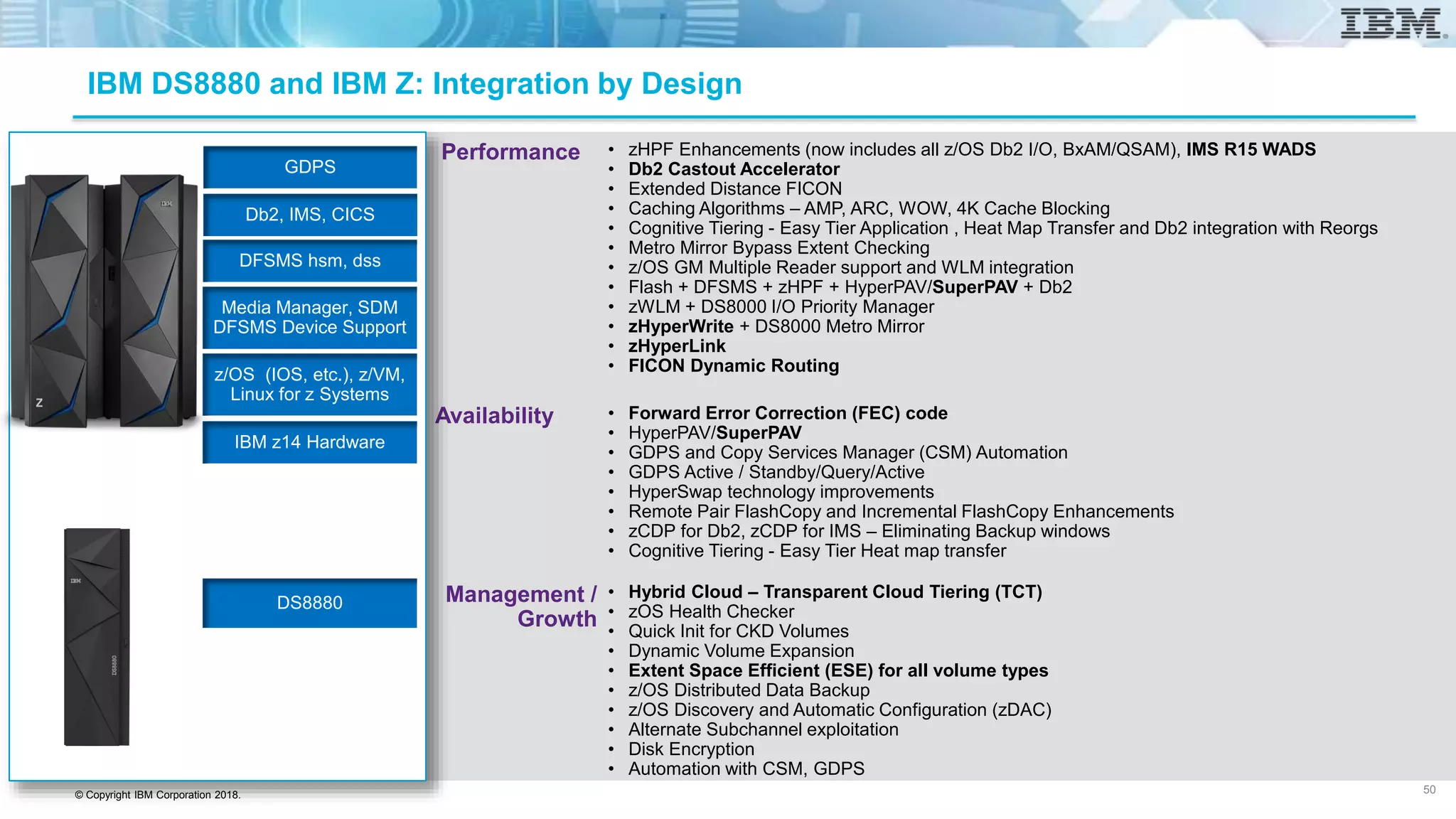

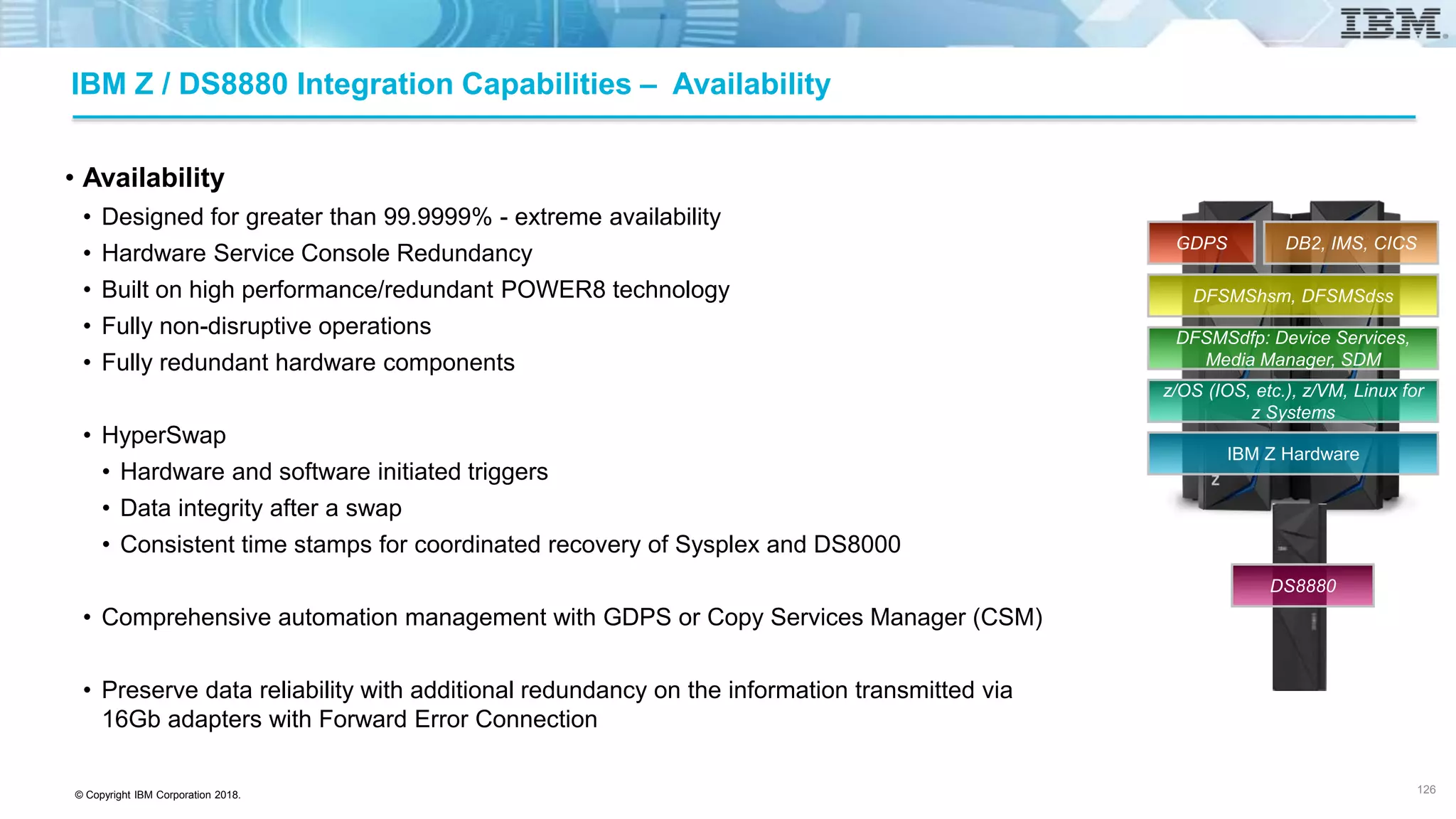

The document provides an overview of IBM's DS8880 storage solutions, highlighting their integration with IBM Z systems for business-critical environments. It details various models, their configurations, and technology advantages like high performance, availability, and data access protection. The document emphasizes IBM's market leadership in storage solutions for core banking and other enterprise applications, showcasing the DS8880's hybrid and all-flash options designed for diverse workloads.

![© Copyright IBM Corporation 2018.

DSCLI zHyperLink Commands

chzhyperlink

Description: Modify zHyperLink switch

Syntax:

chzhyperlink [-read enable | disable] [-write enable | disable] storage_image_ID |

Example:

dscli> chzhyperlink -read enable IBM.2107-75FA120

Aug 11 02:23:49 PST 2004 IBM DS CLI Version: 5.0.0.0 DS: IBM.2107-75FA120

CMUC00519I chzhyperlink: zHyperLink read is successfully modified.](https://image.slidesharecdn.com/ibmds8880andibmz-integratedbydesign-171220114227/75/IBM-DS8880-and-IBM-Z-Integrated-by-Design-80-2048.jpg)

![DSCLI zHyperLink Commands

lszhyperlink

Description:

Display the status of zHyperLink switch for a given Storage Image

Syntax:

lszhyperlink [ -s | -l ] [ storage_image_ID […] | -]

Example:

dscli> lszhyperlink

Date/Time: July 21, 2017 1:18:19 PM MST IBM DSCLI Version: 7.8.30.364 DS: -

ID Read Write

===============================

IBM.2107-75FBH11 enable disable](https://image.slidesharecdn.com/ibmds8880andibmz-integratedbydesign-171220114227/75/IBM-DS8880-and-IBM-Z-Integrated-by-Design-81-2048.jpg)

![DSCLI zHyperLink Commands

lszhyperlinkport

Description:

Display a list of zHyperLink ports for the given storage image

Syntax:

lszhyperlinkport [-s | -l] [-dev storage_image_ID] [port_ID […] | -]

Example:

dscli> lszhyperlinkport

Date/Time: July 12, 2017 9:54:02 AM CST IBM DSCLI Version: 0.0.0.0 DS: -

ID State loc Speed Width

=============================================================

HL0028 Connected U1500.1B3.RJBAY03-P1-C7-T3 GEN3 8

HL0029 Connected U1500.1B3.RJBAY03-P1-C7-T4 GEN3 8

HL0038 Disconnected U1500.1B4.RJBAY04-P1-C7-T3 GEN3 8

HL0039 Disconnected U1500.1B4.RJBAY04-P1-C7-T4 GEN3 8](https://image.slidesharecdn.com/ibmds8880andibmz-integratedbydesign-171220114227/75/IBM-DS8880-and-IBM-Z-Integrated-by-Design-82-2048.jpg)
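A listing like the one on this slide is easy to post-process in a script. The following is a minimal sketch, assuming the output has been captured as text and that data rows have five whitespace-separated fields with an ID beginning with `HL` (an assumption drawn only from the sample rows above). The function name `parse_ports` is illustrative and not part of DSCLI.

```python
# Sample text copied from the slide's example output.
SAMPLE = """\
ID State loc Speed Width
=============================================================
HL0028 Connected U1500.1B3.RJBAY03-P1-C7-T3 GEN3 8
HL0029 Connected U1500.1B3.RJBAY03-P1-C7-T4 GEN3 8
HL0038 Disconnected U1500.1B4.RJBAY04-P1-C7-T3 GEN3 8
HL0039 Disconnected U1500.1B4.RJBAY04-P1-C7-T4 GEN3 8
"""


def parse_ports(text):
    """Parse whitespace-separated lszhyperlinkport rows into dicts."""
    ports = []
    for line in text.splitlines():
        fields = line.split()
        # Assumption: data rows have exactly five fields and an ID starting
        # with "HL"; this skips the header, separator, and banner lines.
        if len(fields) == 5 and fields[0].startswith("HL"):
            port_id, state, loc, speed, width = fields
            ports.append({"id": port_id, "state": state, "loc": loc,
                          "speed": speed, "width": int(width)})
    return ports


ports = parse_ports(SAMPLE)
disconnected = [p["id"] for p in ports if p["state"] != "Connected"]
print(disconnected)  # ['HL0038', 'HL0039']
```

In this sample both disconnected ports sit in the same I/O bay (`RJBAY04`), which is the kind of pattern a per-port listing makes visible.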

![DSCLI zHyperLink Commands

showzhyperlinkport

Description:

Displays detailed properties of an individual zHyperLink port

Syntax:

showzhyperlinkport [-dev storage_image_ID] [-metrics] "port_ID" | -

Example:

dscli> showzhyperlinkport -metrics HL0068

Date/Time: July 12, 2017 9:59:05 AM CST IBM DSCLI Version: 0.0.0.0 DS: -

ID HL0068

Date Fri Jun 23 11:26:15 PDT 2017

TxLayerErr 2

DataLayerErr 3

PhyLayerErr 4

================================

Lane RxPower (dBm) TxPower (dBm)

================================

0 0.4 0.5884

1 0.1845 -0.2909

2 -0.41 -0.0682

3 0.114 -0.4272](https://image.slidesharecdn.com/ibmds8880andibmz-integratedbydesign-171220114227/75/IBM-DS8880-and-IBM-Z-Integrated-by-Design-83-2048.jpg)
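The `-metrics` output mixes error counters with a per-lane optical power table. As a rough sketch, assuming the text layout shown on the slide (counter rows ending in `Err`, lane rows of three numeric fields), the values can be pulled apart as follows. The function name `parse_metrics` is hypothetical, and no power thresholds are implied because the slide does not state any.

```python
# Sample text copied from the slide's example output.
SAMPLE = """\
ID HL0068
Date Fri Jun 23 11:26:15 PDT 2017
TxLayerErr 2
DataLayerErr 3
PhyLayerErr 4
================================
Lane RxPower (dBm) TxPower (dBm)
================================
0 0.4 0.5884
1 0.1845 -0.2909
2 -0.41 -0.0682
3 0.114 -0.4272
"""


def parse_metrics(text):
    """Return (error counters, {lane: (rx_dbm, tx_dbm)}) from the report."""
    counters, lanes = {}, {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 2 and fields[0].endswith("Err"):
            # Counter rows such as "TxLayerErr 2".
            counters[fields[0]] = int(fields[1])
        elif len(fields) == 3 and fields[0].isdigit():
            # Lane rows: lane number, receive power, transmit power.
            lanes[int(fields[0])] = (float(fields[1]), float(fields[2]))
    return counters, lanes


counters, lanes = parse_metrics(SAMPLE)
total_errors = sum(counters.values())
weakest_rx_lane = min(lanes, key=lambda lane: lanes[lane][0])
print(total_errors, weakest_rx_lane)  # 9 2
```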

![SuperPAV / DS8880 Integration

• Building on IBM's success with PAVs and HyperPAV, SuperPAVs provide cross-control-unit aliases

• Previously, aliases had to come from within the same logical control unit (LCU)

• The limit of 3390 devices + aliases ≤ 256 per LCU could be a constraint

• LCUs with many EAVs could potentially require additional aliases

• LCUs with many logical devices and few aliases required reconfiguration if they needed additional aliases

• SuperPAVs, an IBM DS8880 exclusive, extend aliases beyond the LCU barrier

• SuperPAVs can cross control unit boundaries and enable aliases to be shared among multiple LCUs, provided that:

• The 3390 devices and the aliases are assigned to the same DS8000 server (even/odd LCU)

• The devices share a common path group on the z/OS system

• Even-numbered control units with the exact same paths (CHPIDs [and destination addresses]) are considered peer control units and may share aliases

• Odd-numbered control units with the exact same paths (CHPIDs [and destination addresses]) are considered peer control units and may share aliases

• At least one base device is still required per LCU, so it is not possible to define an LCU with nothing but aliases

• SuperPAVs benefit clients especially with a large number of systems (LPARs) or many LCUs sharing a path group

z/OS](https://image.slidesharecdn.com/ibmds8880andibmz-integratedbydesign-171220114227/75/IBM-DS8880-and-IBM-Z-Integrated-by-Design-86-2048.jpg)
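The peer rule on this slide (same even/odd parity, identical path set) can be illustrated with a small grouping function. This is only a sketch of the rule as stated, not how z/OS actually determines alias management groups. The function name, the LCU numbers, and the CHPID values are all made up for illustration.

```python
from collections import defaultdict


def alias_peer_groups(lcus):
    """Group LCUs that may share aliases under the slide's two conditions.

    `lcus` maps an LCU number to the set of paths (CHPID and destination
    address) that the z/OS system uses to reach it.
    """
    groups = defaultdict(list)
    for lcu, paths in lcus.items():
        # Same parity means the same DS8000 server; an identical path set
        # makes the control units peers.
        key = (lcu % 2, frozenset(paths))
        groups[key].append(lcu)
    return sorted(sorted(group) for group in groups.values())


# Hypothetical configuration: the CHPID values are invented.
lcus = {
    0x00: {"40", "41"},
    0x02: {"40", "41"},
    0x01: {"40", "41"},  # same paths as 0x00, but odd, so a different server
    0x04: {"50", "51"},  # even, but reached over different paths
}
print(alias_peer_groups(lcus))  # [[0, 2], [1], [4]]
```

Only LCUs 0 and 2 end up as peers here: LCU 1 fails the parity condition and LCU 4 fails the path condition.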

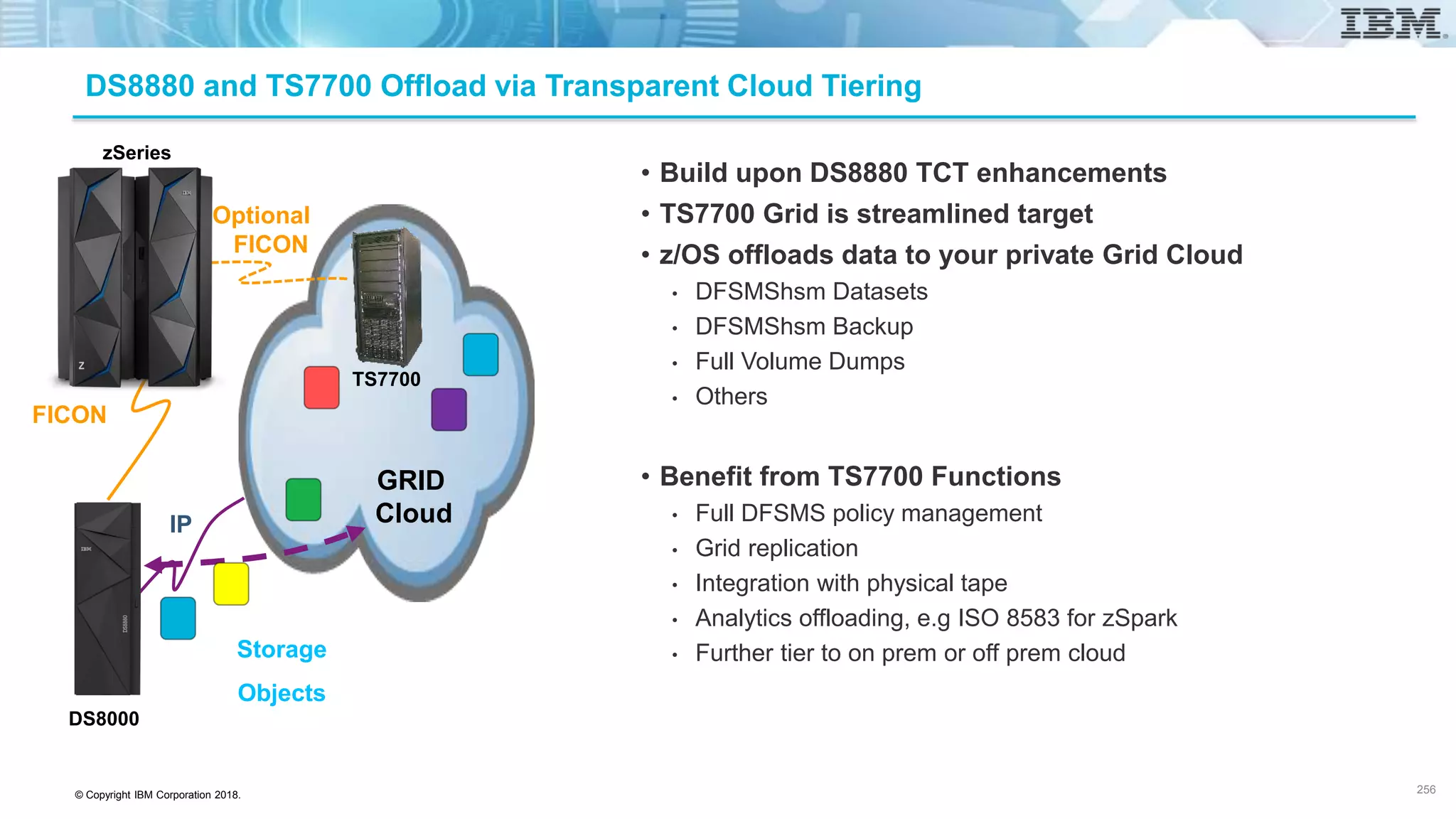

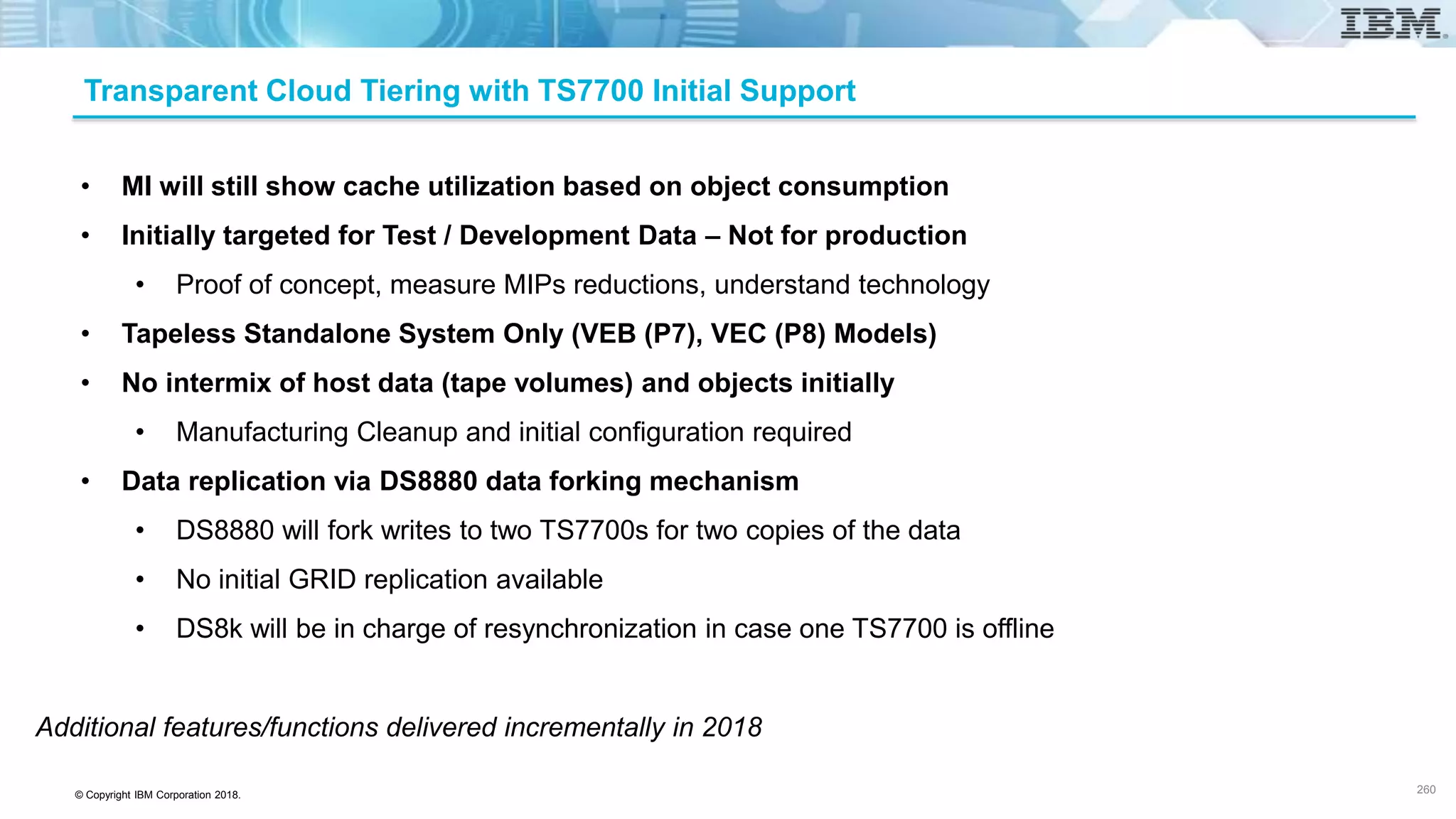

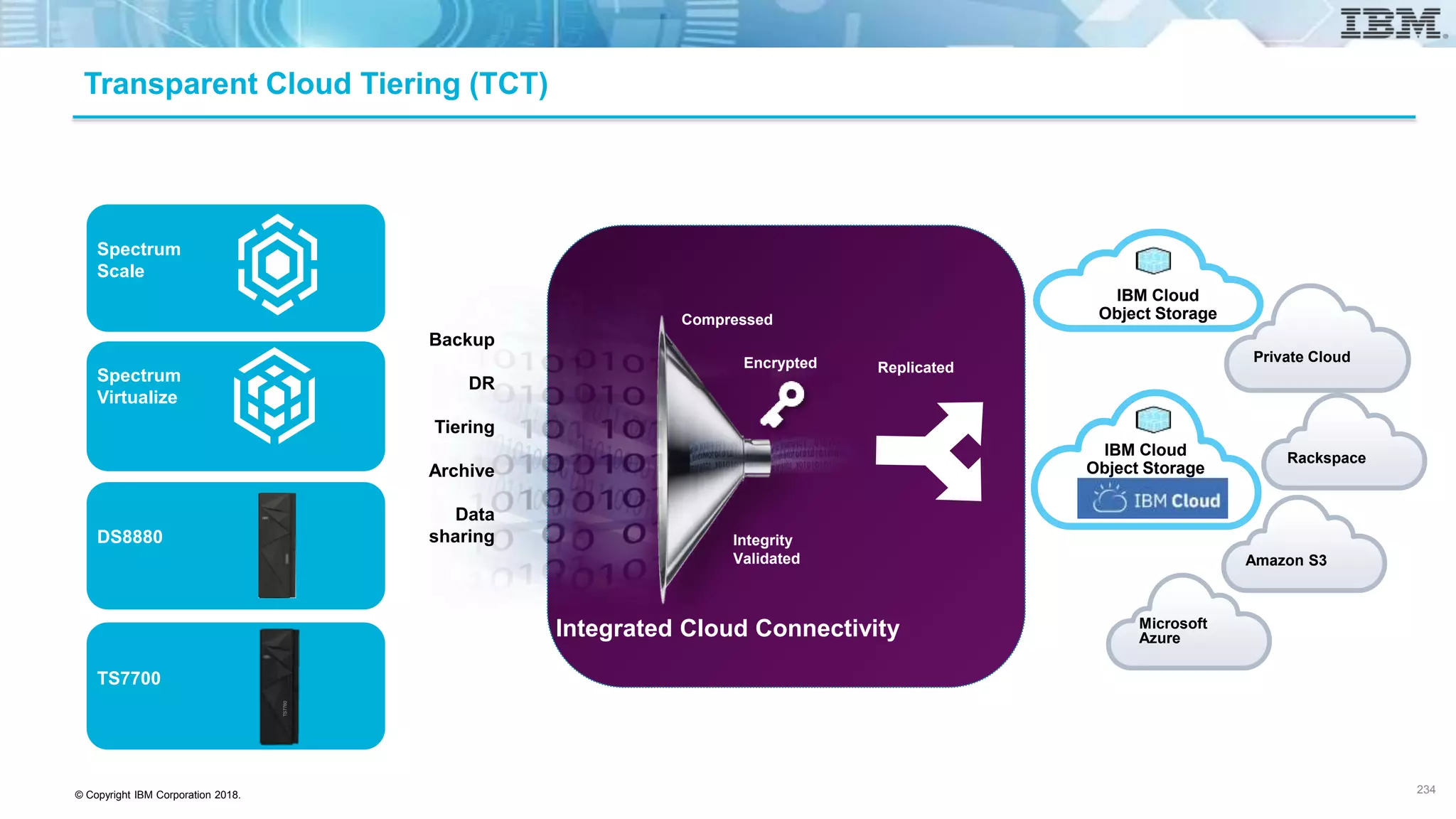

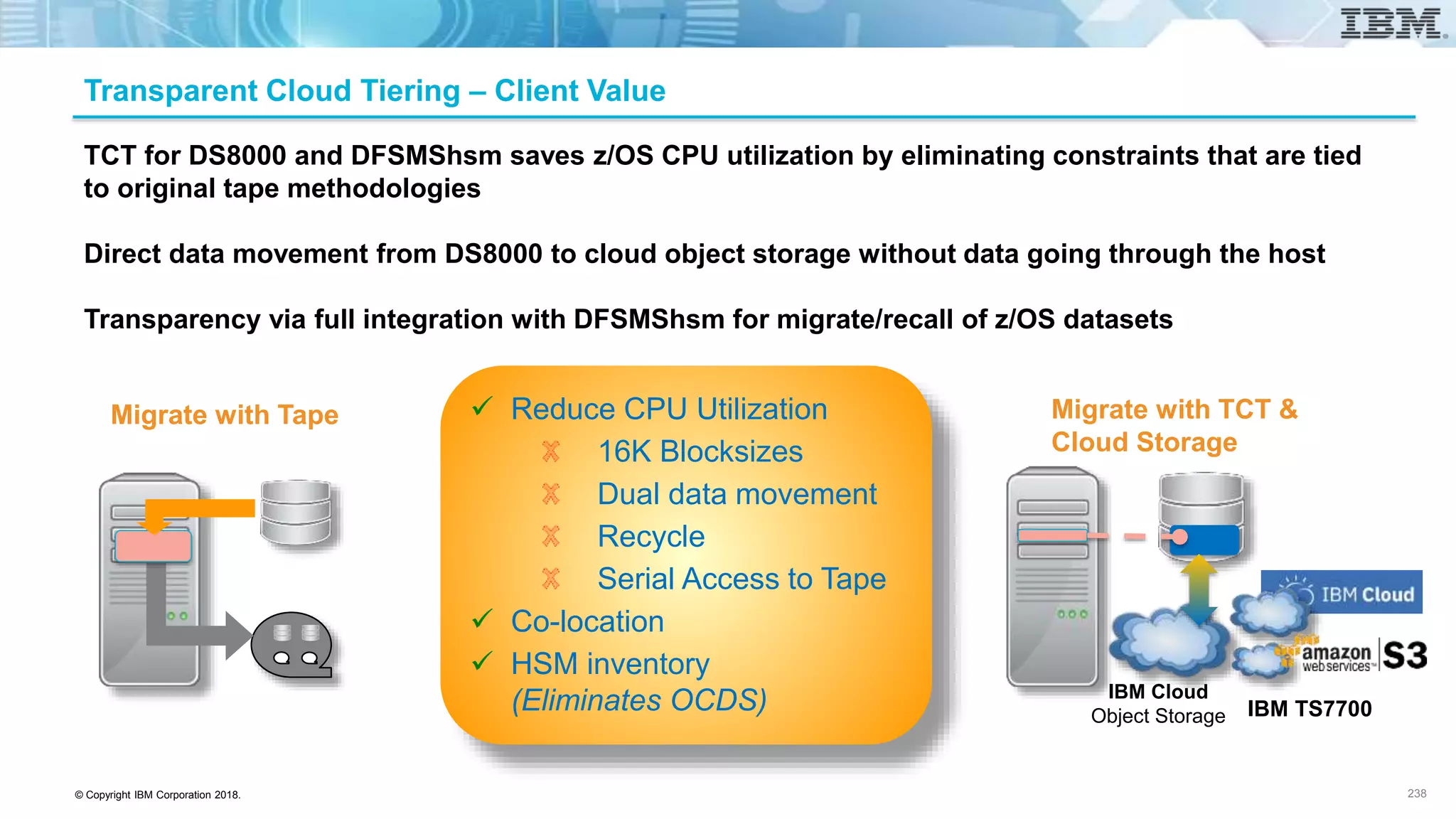

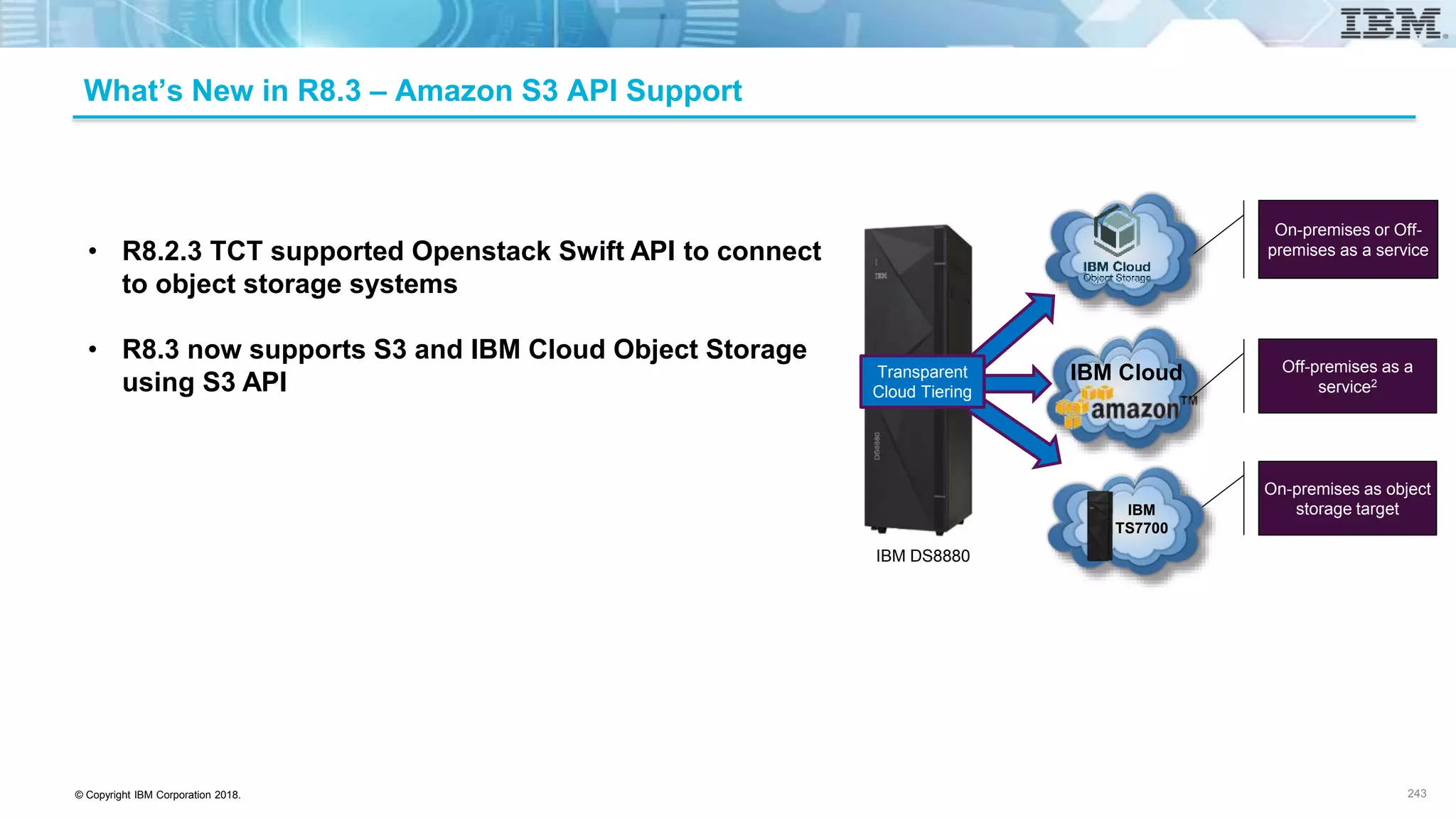

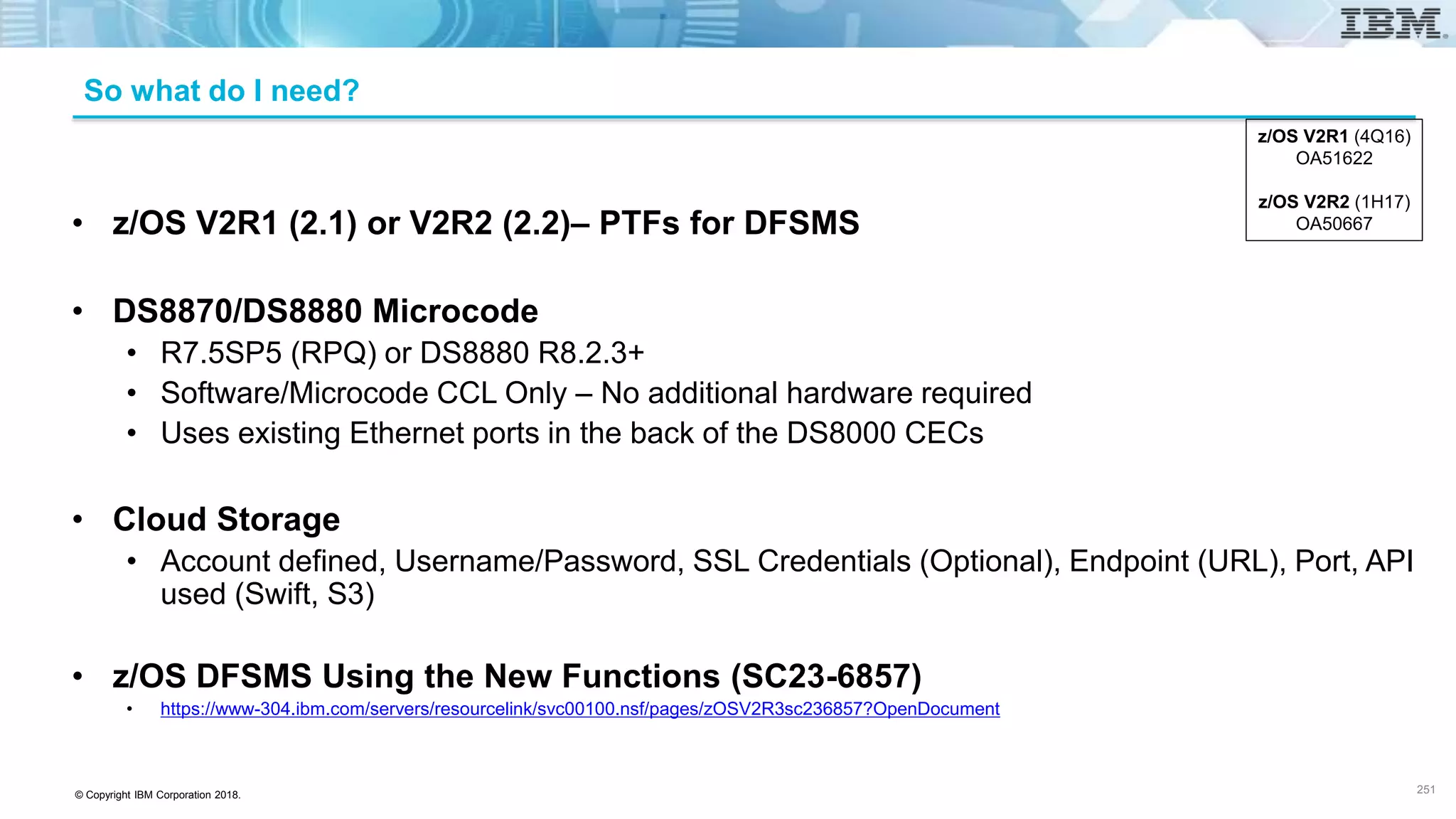

![Setup on DS8870/DS8880

• Plug Ethernet cables into both free CEC Ethernet ports

• Two empty ports per card today

• Use DSCLI to import your certificates if you plan to use TLS

• Use DSCLI to configure TCP/IP on the Ethernet cards

• setnetworkport [-ipaddr IP_address] [-subnet IP_mask] [-gateway IP_address] Port_ID

• This automatically sets up the firewall (outgoing ports only)

• Use DSCLI to configure the DS8000 connection to the cloud storage

• mkcloudserver -type cloud_type [-ssl tls_version] -account account_name -user user_name -pw user_password -endpoint location_address -port # cloud_name](https://image.slidesharecdn.com/ibmds8880andibmz-integratedbydesign-171220114227/75/IBM-DS8880-and-IBM-Z-Integrated-by-Design-221-2048.jpg)
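When these setup steps are scripted, it can help to assemble the two command lines from parameters. The sketch below uses only the flags shown in the syntax on this slide. Every value passed in (port ID, addresses, cloud type, TLS version, account, endpoint, port number) is a placeholder, and the helper names are hypothetical rather than part of DSCLI.

```python
import shlex


def setnetworkport_cmd(port_id, ipaddr=None, subnet=None, gateway=None):
    """Build a setnetworkport command line; the three flags are optional."""
    args = ["setnetworkport"]
    for flag, value in (("-ipaddr", ipaddr), ("-subnet", subnet),
                        ("-gateway", gateway)):
        if value is not None:
            args += [flag, value]
    # shlex.join quotes any argument that contains shell-special characters.
    return shlex.join(args + [port_id])


def mkcloudserver_cmd(cloud_name, cloud_type, account, user, password,
                      endpoint, port, ssl=None):
    """Build a mkcloudserver command line; -ssl is the only optional flag."""
    args = ["mkcloudserver", "-type", cloud_type]
    if ssl is not None:
        args += ["-ssl", ssl]
    args += ["-account", account, "-user", user, "-pw", password,
             "-endpoint", endpoint, "-port", str(port), cloud_name]
    return shlex.join(args)


# Placeholder values only; substitute the real ones for your installation.
print(setnetworkport_cmd("PORT_ID", ipaddr="192.0.2.10",
                         subnet="255.255.255.0", gateway="192.0.2.1"))
print(mkcloudserver_cmd("CLOUD_NAME", "CLOUD_TYPE", "ACCOUNT", "USER",
                        "PASSWORD", "cloud.example.com", 443,
                        ssl="TLS_VERSION"))
```

Building the argument list first and quoting it in one place keeps values with spaces or special characters from breaking the command.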