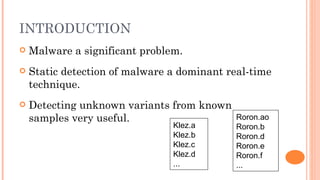

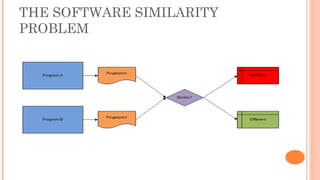

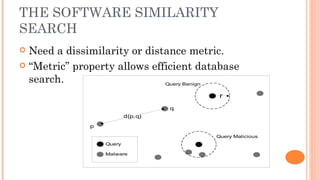

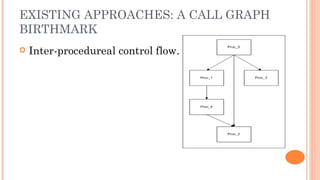

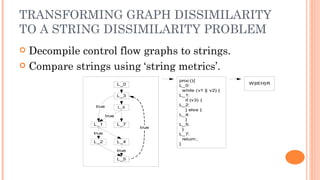

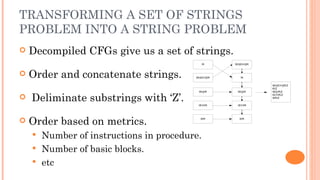

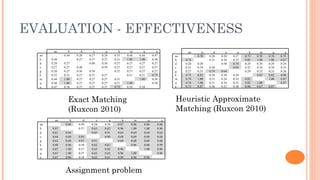

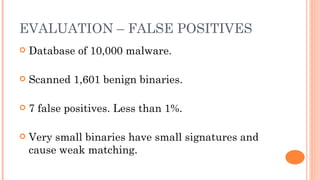

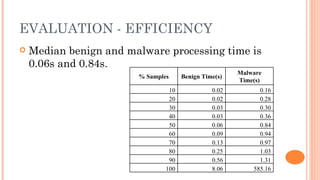

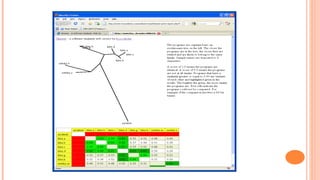

Silvio Cesare presented a flowgraph-based approach to malware variant detection. The approach transforms each control flow graph into a string, then compares the resulting sets of strings using a dissimilarity metric based on the assignment problem. In an evaluation on Roron malware variants, it detected more variants than earlier exact-matching approaches. The system was also shown to have a low false positive rate and processing times efficient enough for malware detection. The same techniques have since been applied in other software analysis tools, including tools for similarity detection and bug finding.
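The idea of comparing two sets of flowgraph strings through an assignment problem can be illustrated with a small sketch. This is not the presenter's implementation: the function names, the choice of Levenshtein edit distance as the per-string cost, the padding with empty strings, the normalization by total string length, and the toy flowgraph strings are all assumptions made for illustration. The assignment is solved by brute force over permutations, which is only practical for very small sets; a real system would use a polynomial-time solver such as the Hungarian algorithm.

```python
from itertools import permutations


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between two strings (assumed per-string cost)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            substitution = prev[j - 1] + (0 if ca == cb else 1)
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, substitution))
        prev = curr
    return prev[-1]


def set_dissimilarity(xs: list[str], ys: list[str]) -> float:
    """Dissimilarity in [0, 1] between two sets of flowgraph strings.

    Each string in one set is assigned to exactly one string in the other
    so that the summed edit distance is minimal. The smaller set is padded
    with empty strings, so an unmatched string costs its full length.
    """
    n = max(len(xs), len(ys))
    total = sum(len(s) for s in xs) + sum(len(s) for s in ys)
    if n == 0 or total == 0:
        return 0.0
    xs = list(xs) + [""] * (n - len(xs))
    ys = list(ys) + [""] * (n - len(ys))
    cost = [[edit_distance(x, y) for y in ys] for x in xs]
    # Brute-force assignment: try every one-to-one matching (small n only).
    best = min(
        sum(cost[i][perm[i]] for i in range(n))
        for perm in permutations(range(n))
    )
    return best / total


# Hypothetical strings, one per procedure, standing in for structured
# control flow graphs. The second sample differs by one extra block.
sample_a = ["BW{B}R", "BI{B}E{B}R"]
sample_b = ["BI{B}E{B}R", "BW{BB}R"]
score = set_dissimilarity(sample_a, sample_b)
```

Because the matching ignores the order of the strings, reordering procedures inside a variant does not change the score, and a small edit to one procedure raises it only slightly. A detector built on this sketch would flag a sample as a variant when the score falls below a chosen threshold, which is also an assumption here.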

![Bugwise: SGID games xonix bug in Debian Linux](https://image.slidesharecdn.com/silviocesarewed1145-effectiveflowgraph-basedmalwarevariantdetection-120516083641-phpapp02/85/Effective-flowgraph-based-malware-variant-detection-34-320.jpg)

    memset(score_rec[i].login, 0, 11);
    strncpy(score_rec[i].login, pw->pw_name, 10);
    memset(score_rec[i].full, 0, 65);
    strncpy(score_rec[i].full, fullname, 64);
    score_rec[i].tstamp = time(NULL);
    free(fullname);
    if((high = freopen(PATH_HIGHSCORE, "w",high)) == NULL) {
        fprintf(stderr, "xonix: cannot reopen high score file\n");
        free(fullname);
        gameover_pending = 0;
        return;
    }