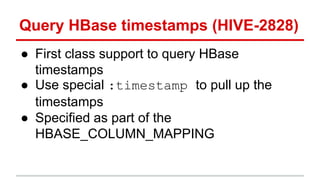

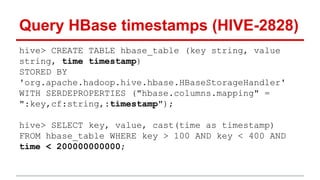

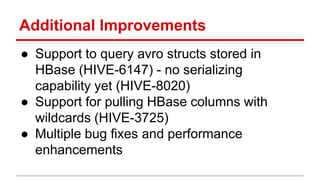

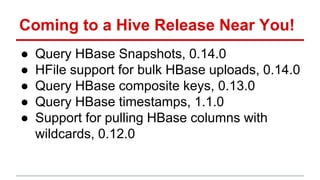

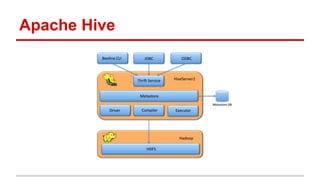

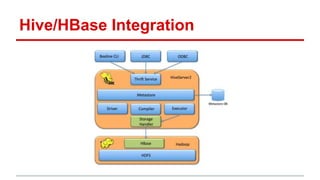

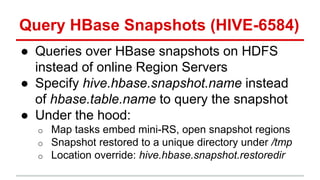

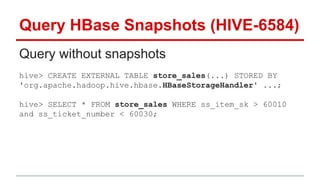

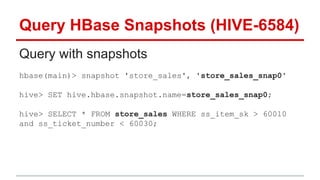

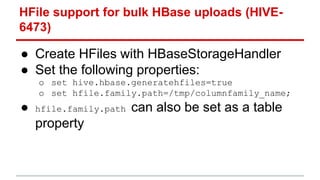

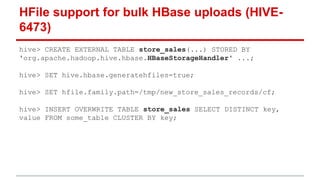

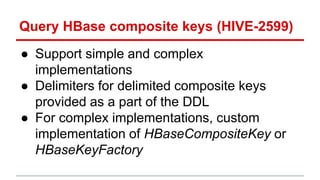

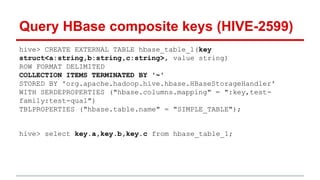

This document summarizes new features for analyzing HBase data with Apache Hive: querying HBase snapshots, generating HFiles for bulk loading into HBase, and support for composite and timestamp keys. It gives an overview of Hive and its integration with HBase, describes each new feature in detail, covers additional improvements and future work, and indicates which releases will include each feature.

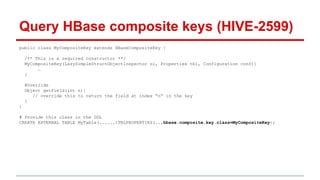

![public interface HBaseKeyFactory extends HiveStoragePredicateHandler {
/** Initialize factory with properties */
void init(HBaseSerDeParameters hbaseParam, Properties properties) throws SerDeException;
/** Create custom object inspector for hbase key */
ObjectInspector createKeyObjectInspector(TypeInfo type) throws SerDeException;
/** Create custom object for hbase key */
LazyObjectBase createKey(ObjectInspector inspector) throws SerDeException;
/** Serialize hive object in internal format of custom key */
byte[] serializeKey(Object object, StructField field) throws IOException;
}
# Provide the implementation in the DDL
CREATE EXTERNAL TABLE MyTable(......) TBLPROPERTIES(.., 'hbase.composite.key.factory'='MyCompositeKeyFactory');
Query HBase composite keys (HIVE-2599)](https://image.slidesharecdn.com/ecosystem-session3a-150605172345-lva1-app6891/85/HBaseCon-2015-Analyzing-HBase-Data-with-Apache-Hive-17-320.jpg)
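
The `serializeKey` method on the slide above turns a Hive object into the byte layout of a custom row key. As a rough illustration of that idea only, here is a minimal, Hive-independent sketch that packs several string fields into one row key and splits them back out. The class name `CompositeKeySketch`, the NUL-byte delimiter, and the sample field values are all assumptions made for this example; they are not taken from the talk or from Hive's API, and a real `HBaseKeyFactory` implementation would work through Hive's object inspectors instead of plain strings.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: delimiter-separated composite row keys (not part of Hive). */
public final class CompositeKeySketch {
    // Assumed delimiter; key parts must not contain the NUL character themselves.
    private static final byte DELIMITER = 0x00;

    /** Joins the parts into one byte array, separated by the delimiter. */
    public static byte[] serialize(String... parts) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (int i = 0; i < parts.length; i++) {
            if (i > 0) {
                out.write(DELIMITER);
            }
            byte[] bytes = parts[i].getBytes(StandardCharsets.UTF_8);
            out.write(bytes, 0, bytes.length);
        }
        return out.toByteArray();
    }

    /** Splits a composite key back into its parts at each delimiter byte. */
    public static List<String> parse(byte[] key) {
        List<String> parts = new ArrayList<>();
        int start = 0;
        for (int i = 0; i <= key.length; i++) {
            if (i == key.length || key[i] == DELIMITER) {
                parts.add(new String(key, start, i - start, StandardCharsets.UTF_8));
                start = i + 1;
            }
        }
        return parts;
    }

    public static void main(String[] args) {
        byte[] key = serialize("user42", "20150605");
        System.out.println(parse(key)); // prints [user42, 20150605]
    }
}
```

A fixed delimiter keeps the sketch short, but it only works when no key part can contain the delimiter byte; fixed-width or length-prefixed fields are common alternatives when that cannot be guaranteed.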