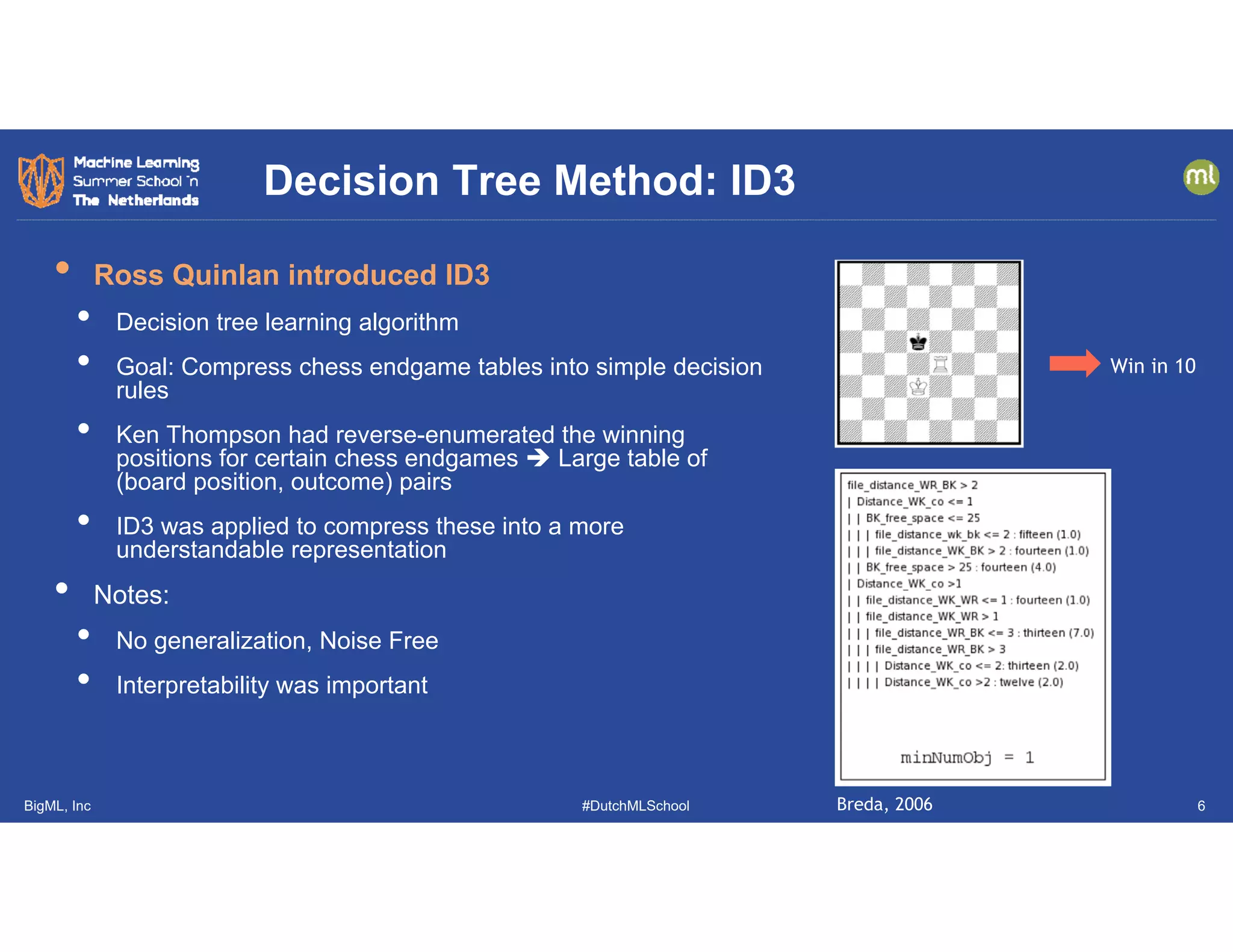

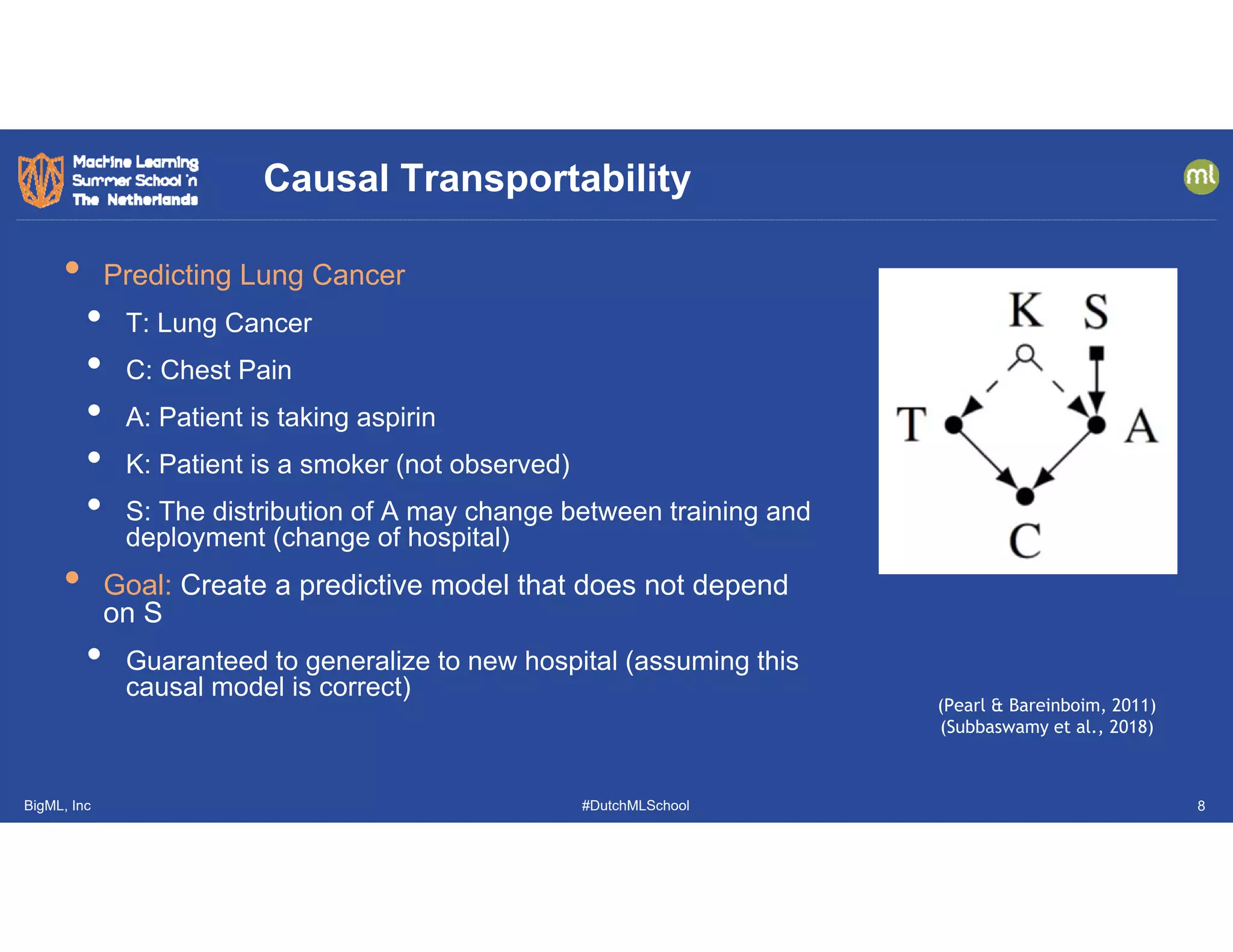

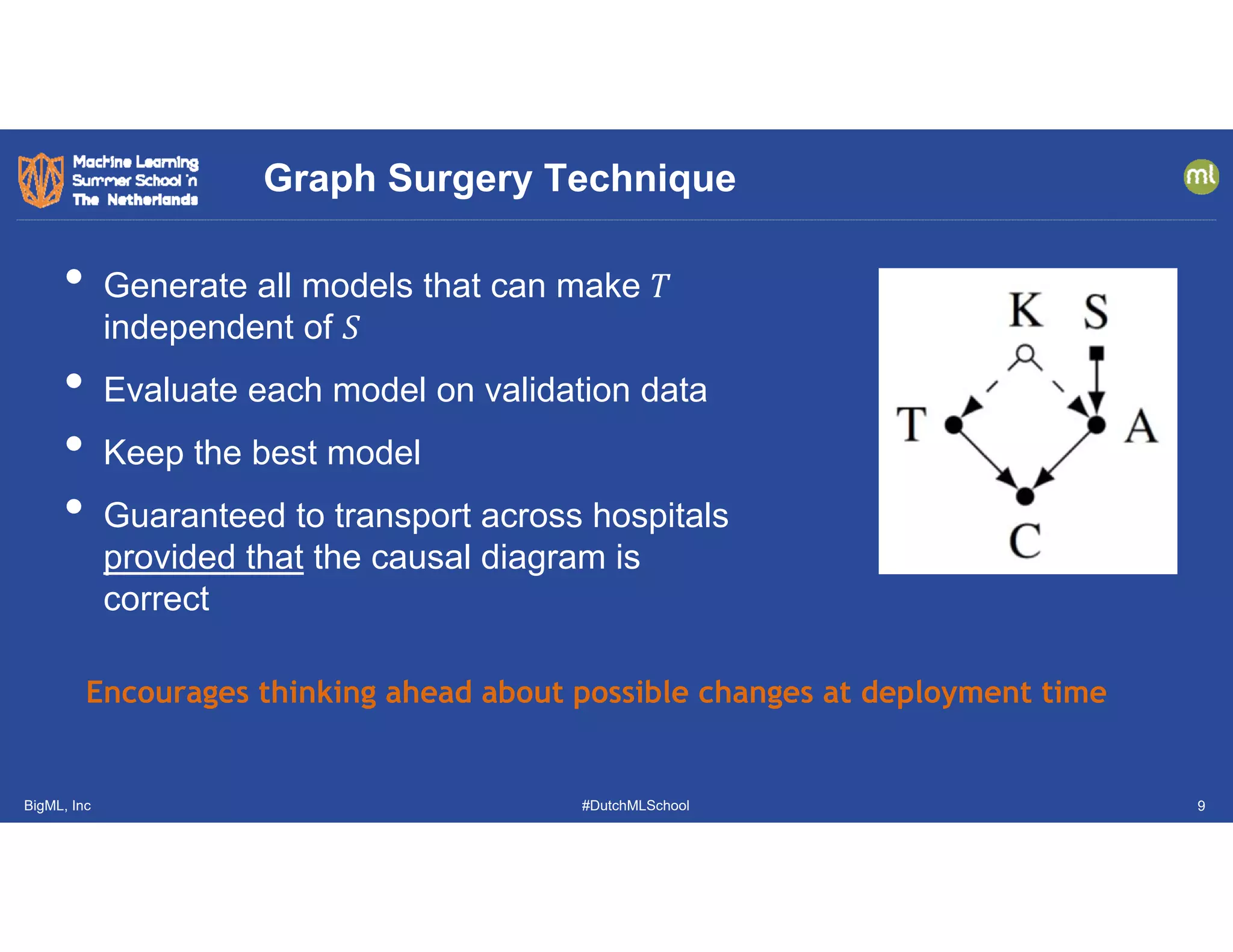

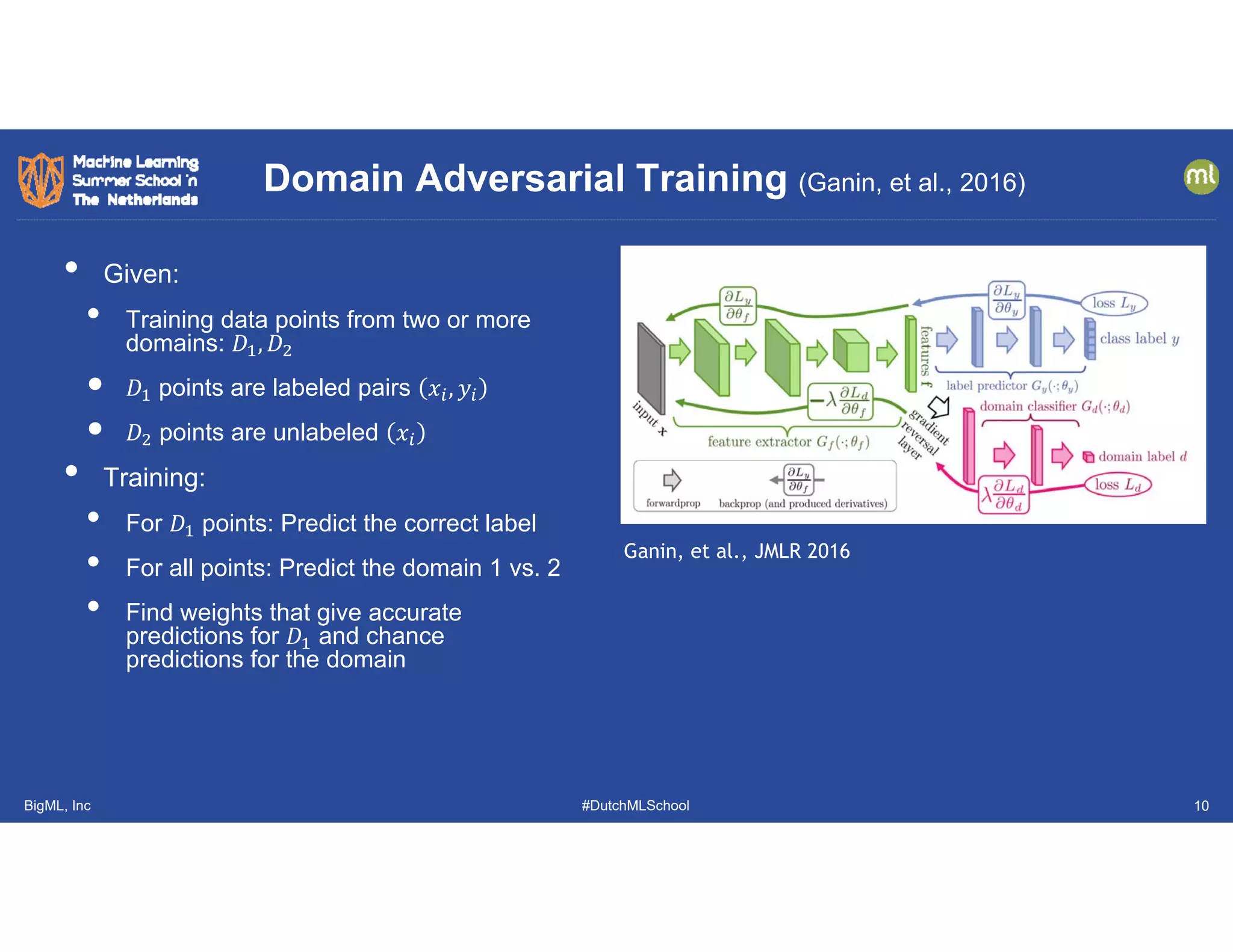

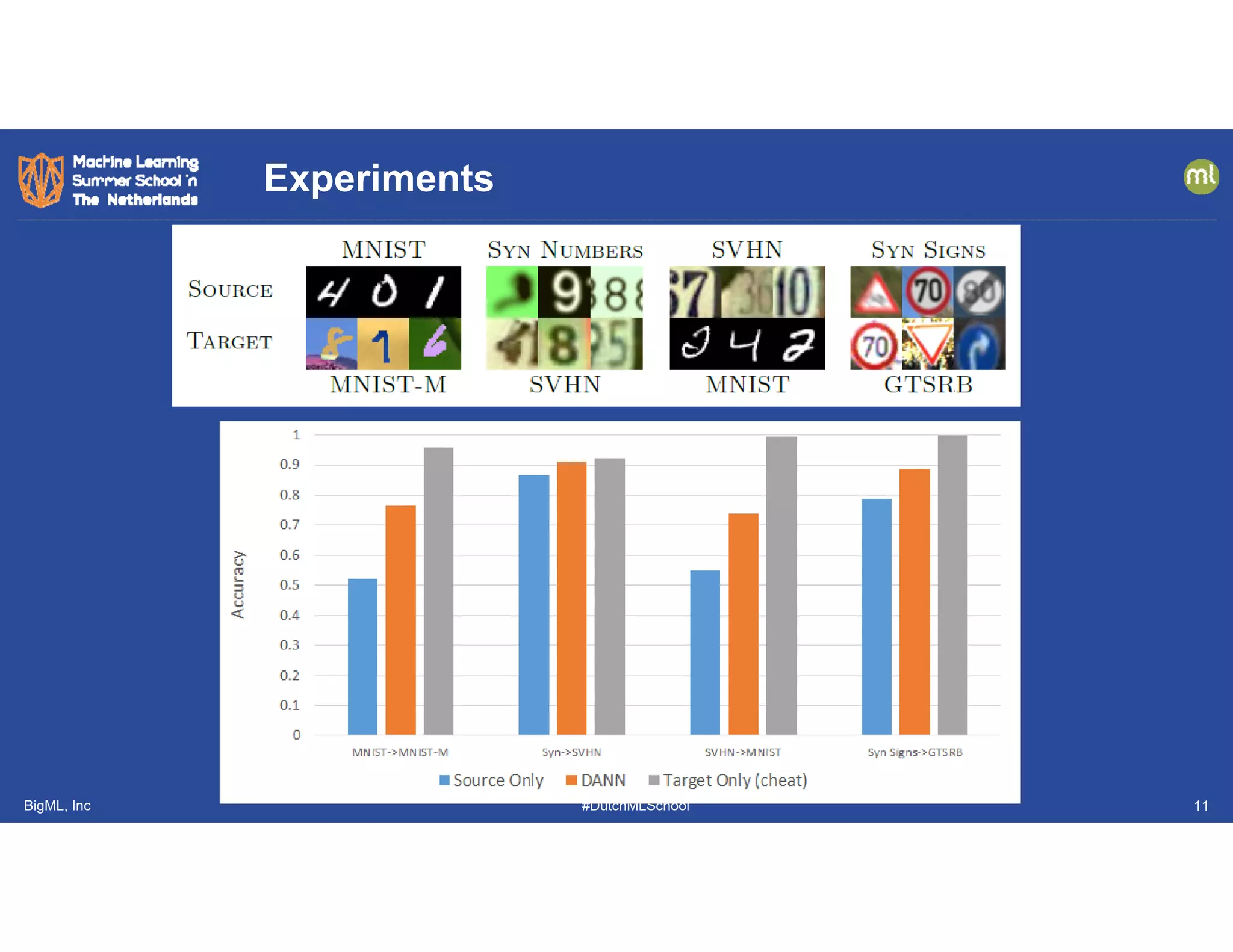

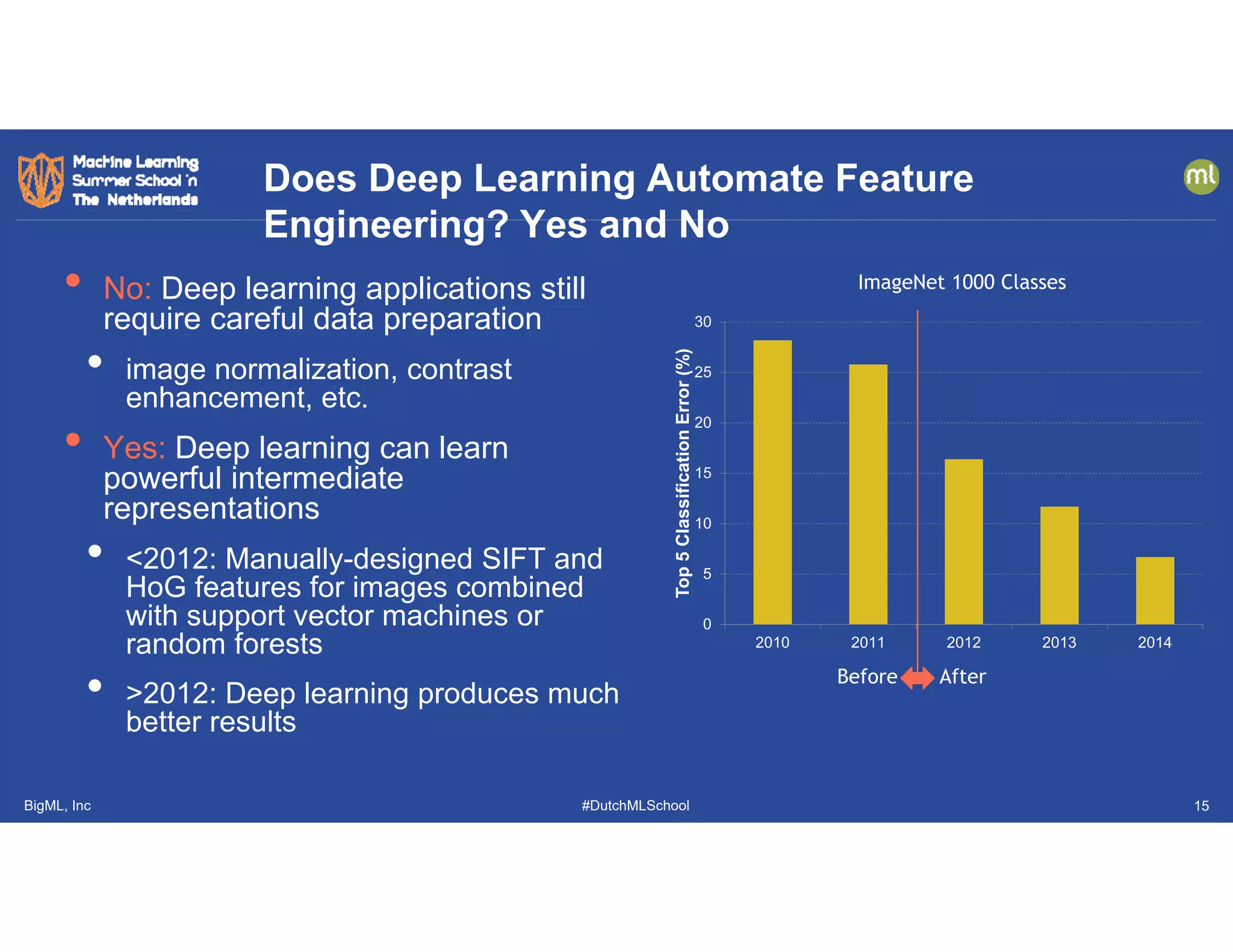

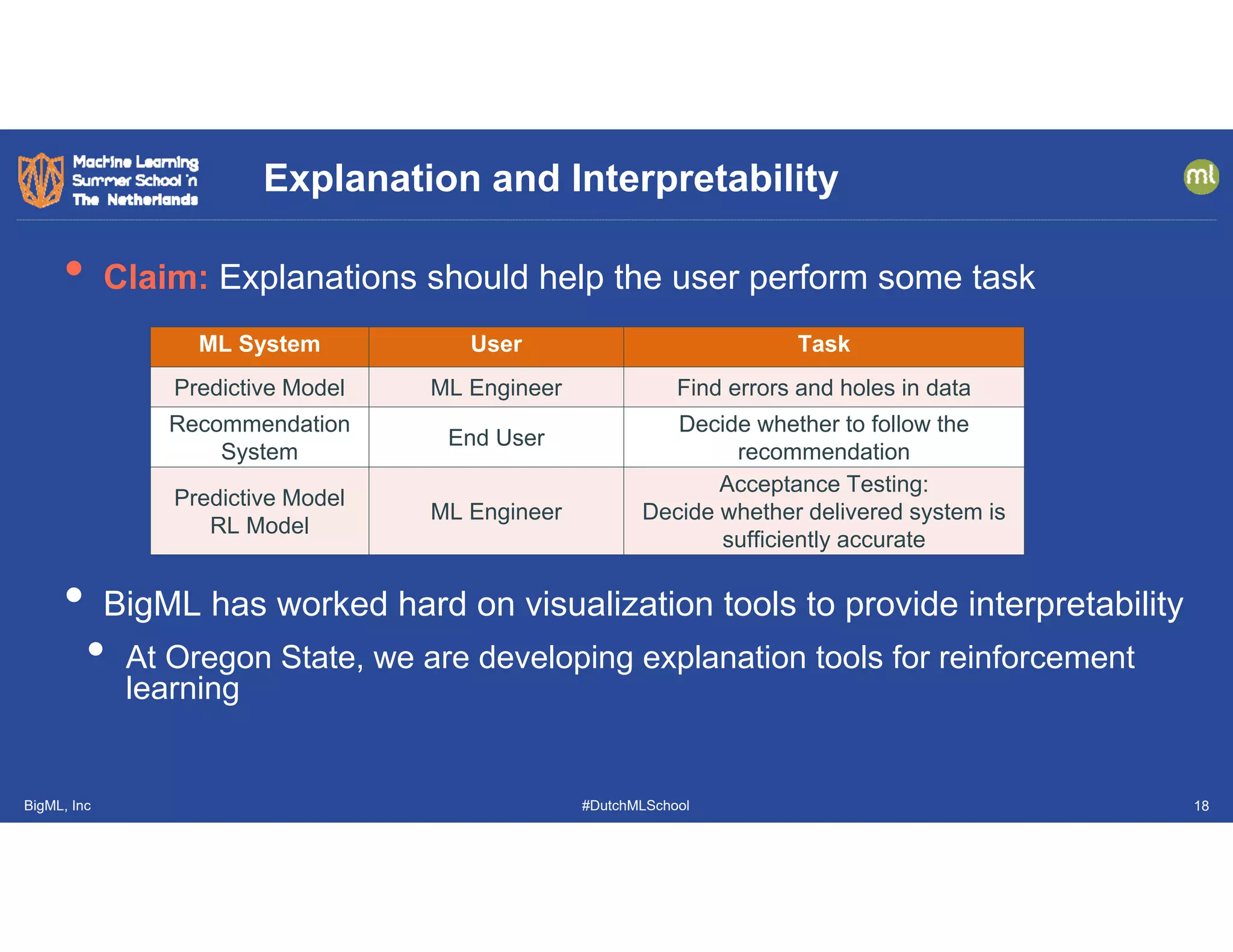

This document discusses the evolution and challenges in machine learning since its inception, covering topics such as generalization, feature engineering, explanation, uncertainty quantification, run-time monitoring, and evaluation metrics. It emphasizes the significance of causal transportability, the careful design of features, the necessity for uncertainty calibration, and the importance of application-specific evaluation metrics. The document highlights ongoing research and practical implications in addressing these challenges within the machine learning field.

Anomaly Detection Benchmarking Study

• Most anomaly detection (AD) papers evaluate on only a few datasets.

• Those datasets are often proprietary or very easy (e.g., KDD 1999).

• The ML community needs a large and growing collection of public anomaly benchmarks.

[Emmott, Das, Dietterich, Fern, Wong, 2013; KDD ODD-2013]

[Emmott, Das, Dietterich, Fern, Wong, 2016; arXiv 1503.01158v2]

![BigML, Inc #DutchMLSchool: Anomaly Detection Benchmarking Study (slide 28)](https://image.slidesharecdn.com/dutchmlschooltechnicalperspectivetdietterich190710-190710085350/75/DutchMLSchool-ML-A-Technical-Perspective-28-2048.jpg)
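The slide calls for more public benchmarks but does not say how to build them. The sketch below shows one way to grow such a collection, under our own assumptions:

1. Take a labeled classification dataset and declare some of its classes anomalous.
2. Sample a benchmark problem at a chosen anomaly frequency.
3. Score a detector on that problem by ROC AUC.

The function names `make_benchmark`, `auc` and `distance_to_mean_scores` are hypothetical illustrations, as are the toy data and the distance-to-mean detector. None of this is the protocol of Emmott et al.

```python
# Sketch under stated assumptions; NOT the Emmott et al. benchmark protocol.
import math
import random
from typing import List, Sequence, Set, Tuple

Point = Sequence[float]

def make_benchmark(X: Sequence[Point], y: Sequence[int], anomaly_classes: Set[int],
                   anomaly_frequency: float, n_total: int,
                   seed: int = 0) -> Tuple[List[Point], List[int]]:
    """Sample n_total points; those from `anomaly_classes` get label 1 (anomaly), others 0."""
    rng = random.Random(seed)
    nominal = [x for x, c in zip(X, y) if c not in anomaly_classes]
    anomalous = [x for x, c in zip(X, y) if c in anomaly_classes]
    n_anom = round(anomaly_frequency * n_total)
    n_nom = n_total - n_anom
    if n_anom > len(anomalous) or n_nom > len(nominal):
        raise ValueError("not enough points for the requested size and anomaly frequency")
    pairs = [(p, 0) for p in rng.sample(nominal, n_nom)] + \
            [(p, 1) for p in rng.sample(anomalous, n_anom)]
    rng.shuffle(pairs)  # mix anomalies in with nominal points
    return [p for p, _ in pairs], [label for _, label in pairs]

def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC: probability a random anomaly outscores a random nominal point (ties count 1/2)."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def distance_to_mean_scores(points: Sequence[Point]) -> List[float]:
    """Hypothetical unsupervised detector: anomaly score = Euclidean distance to the sample mean."""
    dim = len(points[0])
    center = [sum(p[d] for p in points) / len(points) for d in range(dim)]
    return [math.dist(p, center) for p in points]

# Toy labeled data (hypothetical): class 0 near the origin, class 1 far away.
X = [[0.1 * i, 0.0] for i in range(20)] + [[10.0 + i, 10.0] for i in range(5)]
y = [0] * 20 + [1] * 5

bench_X, bench_y = make_benchmark(X, y, anomaly_classes={1}, anomaly_frequency=0.2, n_total=20)
scores = distance_to_mean_scores(bench_X)
print(sum(bench_y), "anomalies of", len(bench_y), "points; AUC =", auc(scores, bench_y))
```

In this toy data every anomaly lies farther from the mean than every nominal point, so the script prints `4 anomalies of 20 points; AUC = 1.0`. A real collection would repeat the sampling over many source datasets, class splits and anomaly frequencies.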

![BigML, Inc #DutchMLSchool

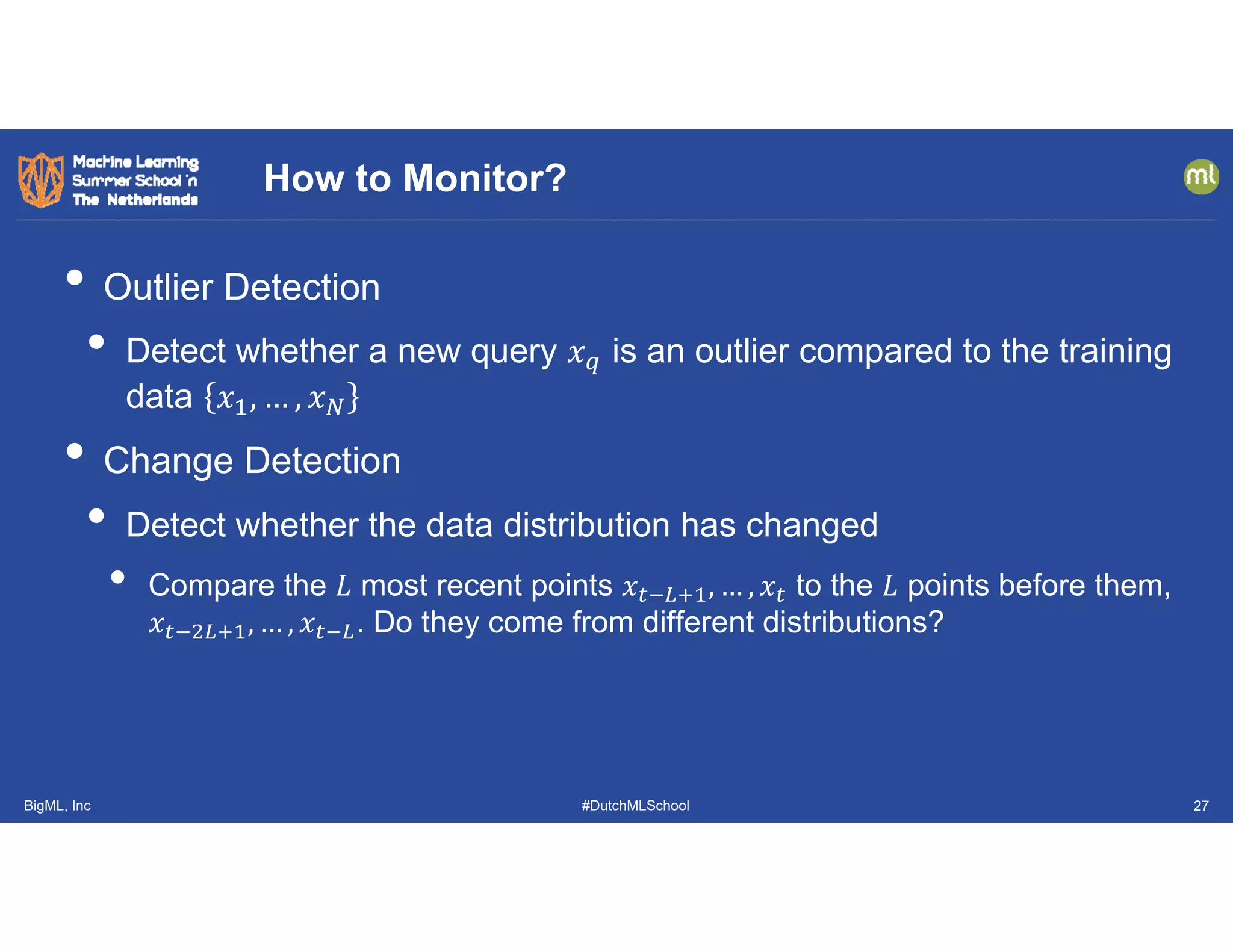

• Only make a prediction

if the query 𝑥 has a

low anomaly score

• Liu, et al. 2018

showed how to set 𝜏 to

guarantee detecting

new category queries

with high probability

Open Category Detection

𝑥

Anomaly

Detector

𝐴 𝑥 𝜏?

Classifier 𝑓

Training

Examples

𝑥 , 𝑦 no

𝑦 𝑓 𝑥

yes

reject

[Liu, Garrepalli, Fern, Dietterich, ICML 2018]

31](https://image.slidesharecdn.com/dutchmlschooltechnicalperspectivetdietterich190710-190710085350/75/DutchMLSchool-ML-A-Technical-Perspective-31-2048.jpg)
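The reject-or-predict rule in the open category diagram can be sketched in a few lines of Python. Everything here is an illustrative assumption:

- the names `OpenCategoryGate`, `quantile_threshold`, `nn_distance` and `nn_classify`;
- the toy 1-D data;
- the nearest-neighbor distance used as $A(x)$;
- the way $\tau$ is chosen.

Setting $\tau$ to an empirical quantile of anomaly scores on held-out known-category data is a simpler, swapped-in technique, not the method of Liu et al. (2018). It limits how often known-category queries are rejected, but it does not provide their guarantee of detecting new-category queries.

```python
# Illustrative sketch: names, data, and the quantile threshold rule are assumptions.
import math
from typing import Callable, List, Optional, Sequence, Tuple

def quantile_threshold(nominal_scores: Sequence[float], false_reject_rate: float) -> float:
    """Return tau such that at most floor(rate * n) held-out nominal scores exceed it.

    Empirical-quantile stand-in, NOT the Liu et al. (2018) procedure: it limits false
    rejections of known-category queries; it does not guarantee catching new categories.
    """
    s = sorted(nominal_scores)
    n_allowed = min(math.floor(false_reject_rate * len(s)), len(s) - 1)
    return s[len(s) - 1 - n_allowed]

class OpenCategoryGate:
    """Predict with `classifier` only if the anomaly score A(x) <= tau; otherwise reject."""

    def __init__(self, anomaly_score: Callable[[float], float],
                 classifier: Callable[[float], int], tau: float) -> None:
        self.anomaly_score = anomaly_score
        self.classifier = classifier
        self.tau = tau

    def predict(self, x: float) -> Optional[int]:
        if self.anomaly_score(x) > self.tau:
            return None  # reject: x may belong to a category never seen in training
        return self.classifier(x)

# Toy 1-D training examples (x_i, y_i) with two known classes (hypothetical data).
train: List[Tuple[float, int]] = [(0.0, 0), (1.0, 0), (2.0, 0), (10.0, 1), (11.0, 1), (12.0, 1)]

def nn_distance(x: float) -> float:
    """Hypothetical anomaly score A(x): distance to the nearest training input."""
    return min(abs(x - xi) for xi, _ in train)

def nn_classify(x: float) -> int:
    """Hypothetical classifier f: label of the nearest training input."""
    return min(train, key=lambda pair: abs(x - pair[0]))[1]

held_out_nominal = [0.5, 1.25, 2.5, 10.75, 12.0]
tau = quantile_threshold([nn_distance(x) for x in held_out_nominal], false_reject_rate=0.2)
gate = OpenCategoryGate(nn_distance, nn_classify, tau)

for query in (1.5, 11.25, 6.0):
    print(query, gate.predict(query))  # None means the query was rejected
```

Running it prints `1.5 0`, `11.25 1` and `6.0 None`. The query at 6.0 lies far from both training clusters, so the gate rejects it instead of forcing one of the known labels.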