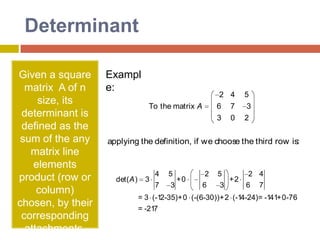

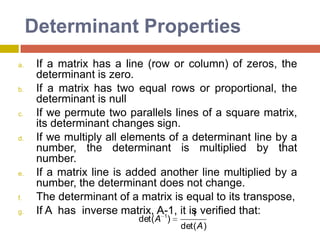

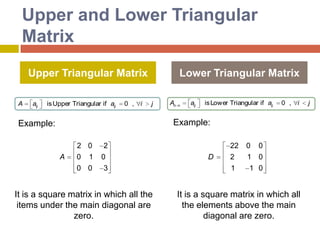

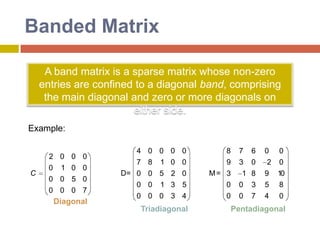

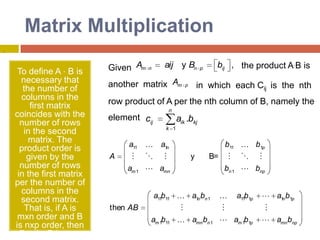

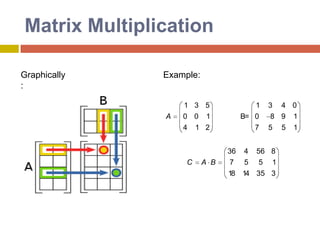

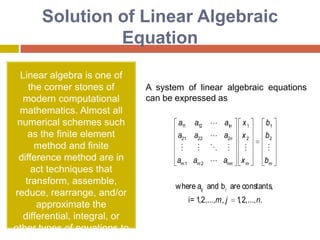

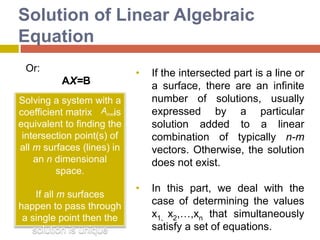

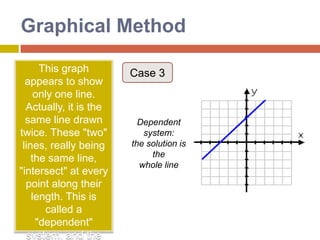

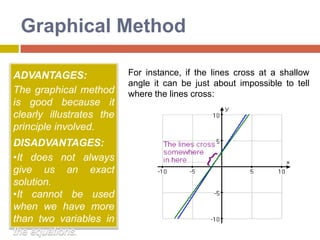

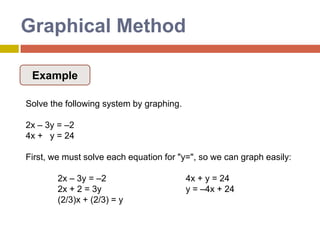

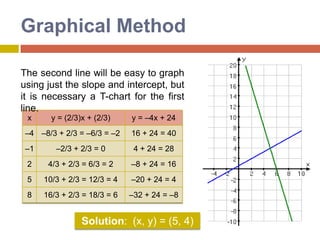

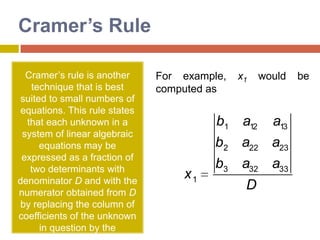

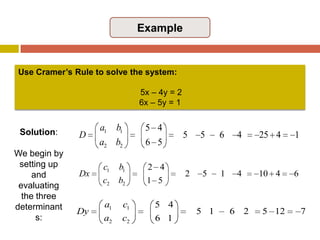

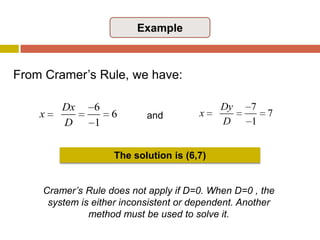

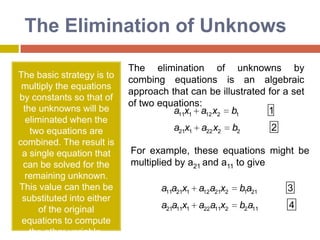

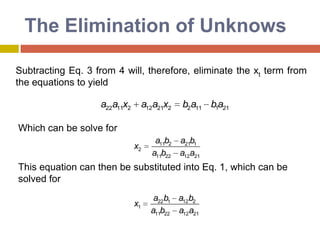

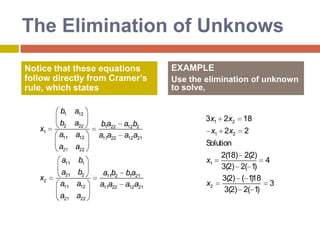

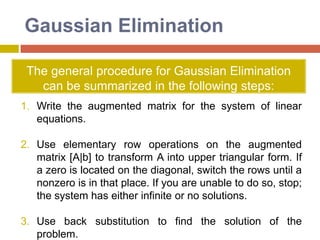

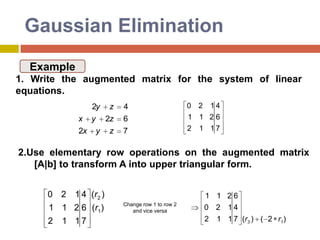

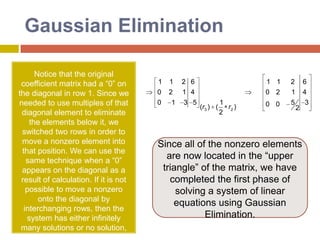

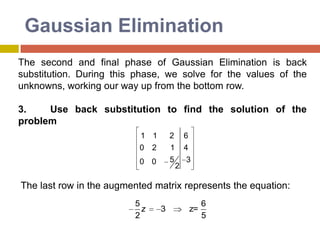

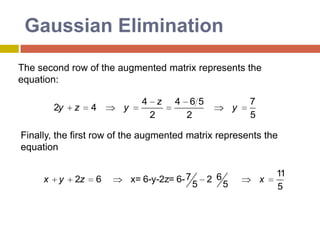

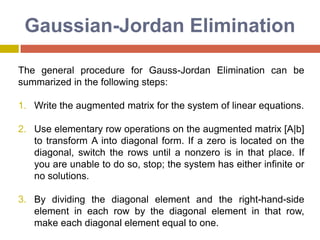

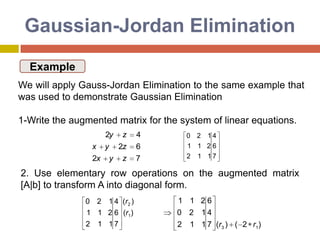

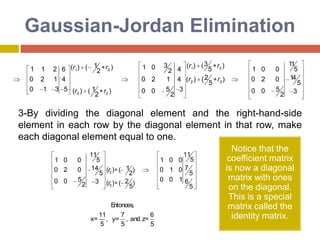

This document discusses direct methods for solving systems of linear equations: graphical methods, Cramer's rule, elimination of unknowns, Gaussian elimination, Gauss-Jordan elimination, and LU decomposition. It gives an explanation and examples for each method. Graphical methods solve a system of two equations visually by plotting both lines and reading off their intersection. Cramer's rule expresses each unknown as a ratio of determinants. Elimination of unknowns combines equations to remove variables one at a time. Gaussian elimination reduces the coefficient matrix to upper triangular form and then applies back substitution. Gauss-Jordan elimination reduces the coefficient matrix all the way to the identity matrix, so the solution can be read directly from the augmented column. LU decomposition factors the matrix into a lower triangular matrix and an upper triangular matrix.
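As an illustration of the determinant-based approach mentioned above, here is a minimal sketch of Cramer's rule for a system of two equations. The function name `cramer_2x2` and the example system are assumptions chosen for illustration; they do not come from the slides.

```python
def cramer_2x2(a11, a12, a21, a22, b1, b2):
    """Solve  a11*x1 + a12*x2 = b1
              a21*x1 + a22*x2 = b2   with Cramer's rule."""
    # Determinant of the coefficient matrix.
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("determinant is zero: no unique solution")
    # Replace one column at a time with the right-hand side and
    # divide the resulting determinant by det.
    x1 = (b1 * a22 - a12 * b2) / det
    x2 = (a11 * b2 - b1 * a21) / det
    return x1, x2


# Hypothetical example system:
#    3*x1 + 2*x2 = 18
#   -1*x1 + 2*x2 = 2
x1, x2 = cramer_2x2(3, 2, -1, 2, 18, 2)
print(x1, x2)
```

Cramer's rule is convenient for very small systems, but the number of determinant evaluations grows quickly with the number of unknowns, which is one reason elimination methods are usually preferred for larger systems.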

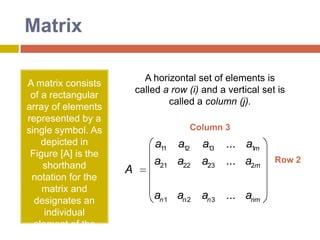

![Matrix. A matrix consists of a rectangular array of elements represented by a single symbol. A horizontal set of elements is called a row (i) and a vertical set is called a column (j). As depicted in the figure, [A] is the shorthand notation for the matrix and a_ij designates an individual element of the matrix. Figure labels: Column 3, Row 2.](https://image.slidesharecdn.com/directmethods-100727100216-phpapp01/85/Direct-Methods-to-Solve-Lineal-Equations-10-320.jpg)
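The row and column notation from the slide above can be sketched in code as follows. The matrix values and the helper name `element` are assumptions for illustration only; the point is that the row index i comes first and the column index j second, and that Python lists count from 0 while the mathematical notation counts from 1.

```python
# A hypothetical 3 x 4 matrix stored as a list of rows.
A = [
    [1, 2, 3, 4],     # row 1
    [5, 6, 7, 8],     # row 2
    [9, 10, 11, 12],  # row 3
]


def element(matrix, i, j):
    """Return the element in row i, column j using 1-based indices."""
    return matrix[i - 1][j - 1]


# The element in row 2, column 3.
print(element(A, 2, 3))
```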

![Gaussian Elimination. The general procedure for Gaussian elimination can be summarized in the following steps: 1. Write the augmented matrix for the system of linear equations. 2. Use elementary row operations on the augmented matrix [A|b] to transform A into upper triangular form. If a zero is located on the diagonal, switch rows until a nonzero value is in that place. If you are unable to do so, stop; the system has either infinitely many solutions or no solution. 3. Use back substitution to find the solution of the problem.](https://image.slidesharecdn.com/directmethods-100727100216-phpapp01/85/Direct-Methods-to-Solve-Lineal-Equations-39-320.jpg)
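The steps on the slide above can be sketched as follows. This is a minimal illustration, not the slide author's implementation: the function name `gaussian_elimination` and the example system are assumptions, and the row swap simply takes the first row below the diagonal with a nonzero entry, as the slide describes, rather than the largest one.

```python
def gaussian_elimination(A, b):
    """Solve A x = b by forward elimination and back substitution."""
    n = len(A)
    # Step 1: build the augmented matrix [A|b] as a list of float rows.
    M = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(A, b)]

    # Step 2: reduce A to upper triangular form.
    for k in range(n):
        if M[k][k] == 0:
            # Zero on the diagonal: swap with a lower row that has a
            # nonzero entry in this column.
            for r in range(k + 1, n):
                if M[r][k] != 0:
                    M[k], M[r] = M[r], M[k]
                    break
            else:
                # No usable row found: infinitely many or no solutions.
                raise ValueError("system has no unique solution")
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= factor * M[k][j]

    # Step 3: back substitution, starting from the last row.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        known = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - known) / M[i][i]
    return x


# Hypothetical example with a zero in the first diagonal position,
# so rows 1 and 2 are swapped before elimination starts:
#          2*y + z = 7
#      x +   y + z = 6
#    2*x +   y - z = 1
solution = gaussian_elimination([[0, 2, 1], [1, 1, 1], [2, 1, -1]], [7, 6, 1])
print(solution)
```

Comparing a pivot with exactly zero follows the slide's wording. In floating-point practice, a common refinement is partial pivoting, which swaps in the row with the largest absolute value in the pivot column to reduce round-off error.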

![Gaussian Elimination Example. 1. Write the augmented matrix for the system of linear equations. 2. Use elementary row operations on the augmented matrix [A|b] to transform A into upper triangular form. Swap row 1 and row 2.](https://image.slidesharecdn.com/directmethods-100727100216-phpapp01/85/Direct-Methods-to-Solve-Lineal-Equations-40-320.jpg)