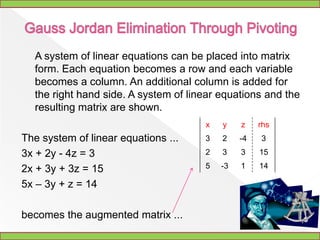

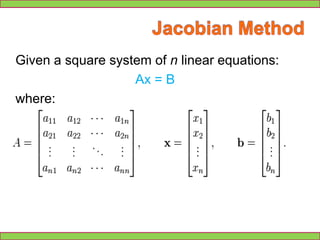

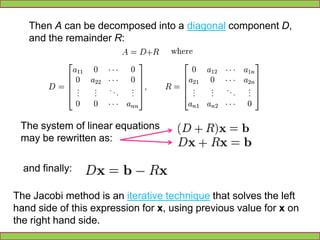

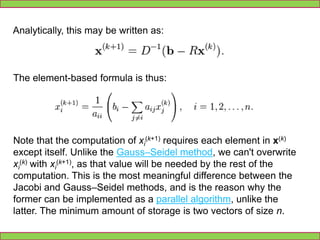

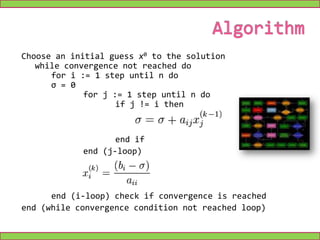

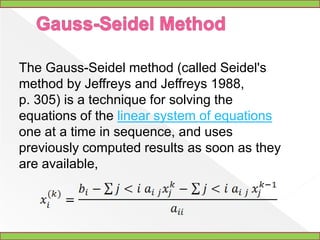

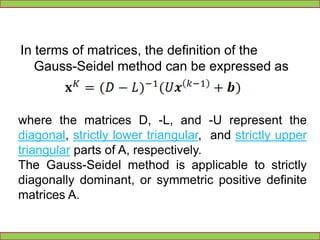

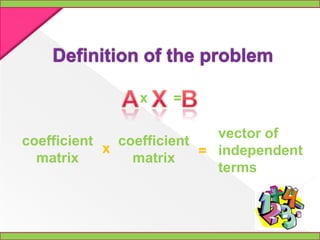

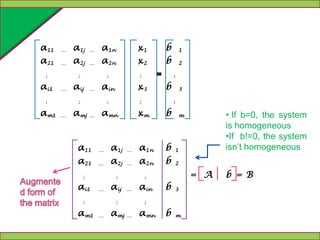

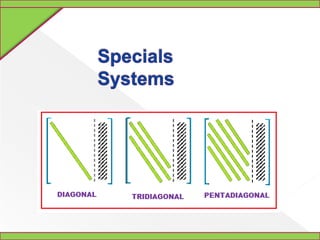

The document explains direct methods for solving systems of linear equations, focusing on Gaussian elimination and its variants. It covers forward elimination, back substitution, pivoting, and the Gauss-Jordan method. It also touches on the Jacobi and Gauss-Seidel methods, which are iterative rather than direct, including their characteristics and applications.
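
To make the forward elimination and back substitution steps concrete, here is a minimal pure-Python sketch. It is not taken from the slides: the function name `gaussian_solve`, the tolerance `tol`, and the choice of partial pivoting are assumptions made for illustration.

```python
def gaussian_solve(a, b, tol=1e-12):
    """Solve a x = b by forward elimination with partial pivoting,
    followed by back substitution. Illustrative sketch only."""
    n = len(a)
    # Work on an augmented copy [a | b] so the inputs are not modified.
    m = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(a, b)]

    # Forward elimination: zero out the entries below each pivot.
    for col in range(n):
        # Partial pivoting: pick the largest-magnitude entry in this column.
        pivot_row = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot_row][col]) < tol:
            raise ValueError("matrix is singular to working precision")
        m[col], m[pivot_row] = m[pivot_row], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]

    # Back substitution: solve the upper-triangular system from the last row up.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        known = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - known) / m[i][i]
    return x
```

For example, `gaussian_solve([[2, 1, -1], [-3, -1, 2], [-2, 1, 2]], [8, -11, -3])` should return values close to `[2, 3, -1]`, up to floating-point rounding.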

![Other Applications: Finding the inverse of a matrix. Suppose A is a square matrix and you need to calculate its inverse. The identity matrix I is augmented to the right of A, forming the block matrix B = [A, I]. Through application of elementary row operations and the Gaussian elimination algorithm, the left block of B can be reduced to the identity matrix I, which leaves A⁻¹ in the right block of B. If the algorithm cannot reduce the left block to I, then A is not invertible.](https://image.slidesharecdn.com/directmethodsforthesolutionofsystemsof-100719234925-phpapp01/85/Direct-Methods-For-The-Solution-Of-Systems-Of-12-320.jpg)
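
The matrix-inverse procedure described on this slide can be sketched in a few lines of pure Python. This is a hedged illustration, not the slides' own code: the function name `invert`, the tolerance `tol`, and the use of partial pivoting are assumptions added here.

```python
def invert(a, tol=1e-12):
    """Invert a square matrix by Gauss-Jordan elimination on B = [A, I].
    Illustrative sketch only; raises ValueError if A is not invertible."""
    n = len(a)
    # Build the block matrix B = [A, I].
    b = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(a)]

    for col in range(n):
        # Partial pivoting: pick the largest-magnitude entry in this column.
        pivot_row = max(range(col, n), key=lambda r: abs(b[r][col]))
        if abs(b[pivot_row][col]) < tol:
            # No usable pivot: the left block cannot be reduced to I.
            raise ValueError("matrix is not invertible")
        b[col], b[pivot_row] = b[pivot_row], b[col]

        # Scale the pivot row so the pivot becomes 1.
        pivot = b[col][col]
        b[col] = [v / pivot for v in b[col]]

        # Eliminate this column from every other row (above and below).
        for r in range(n):
            if r != col:
                factor = b[r][col]
                b[r] = [v - factor * p for v, p in zip(b[r], b[col])]

    # The left block is now I, so the right block holds the inverse.
    return [row[n:] for row in b]
```

For instance, `invert([[4, 7], [2, 6]])` should give values close to `[[0.6, -0.7], [-0.2, 0.4]]`, while a singular input such as `[[1, 2], [2, 4]]` raises `ValueError`.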