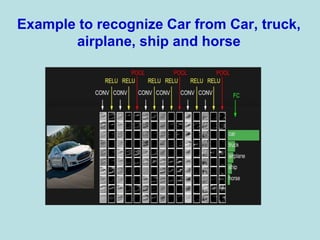

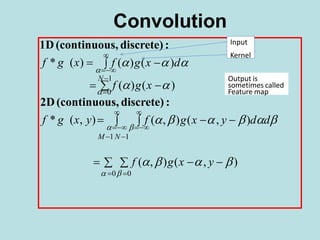

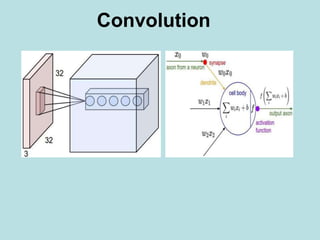

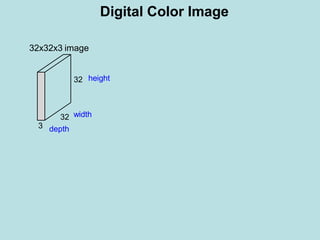

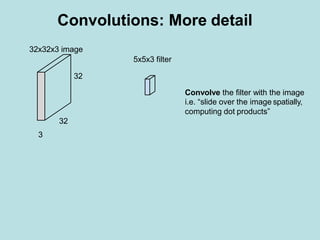

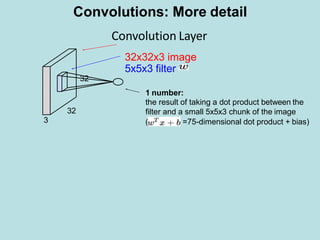

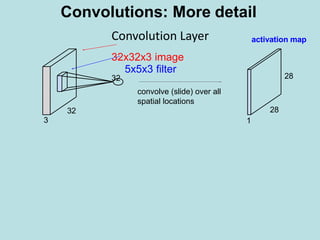

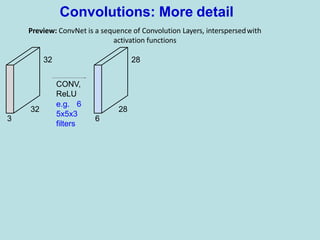

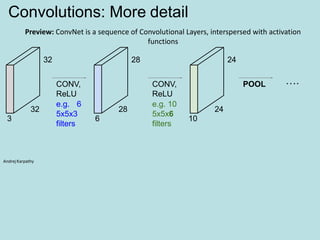

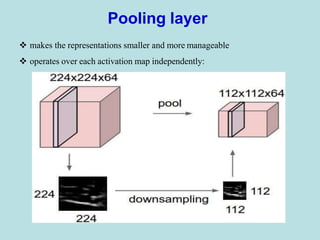

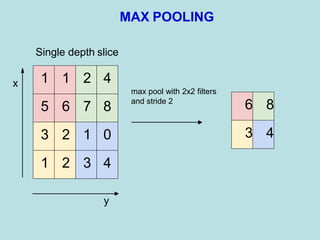

The document discusses deep learning and convolutional neural networks. It provides details on concepts like convolution, activation maps, pooling, and the general architecture of CNNs. CNNs are made up of repeating sequences of convolutional layers and pooling layers, followed by fully connected layers at the end. Convolutional layers apply filters to input images or feature maps from previous layers to extract features. Pooling layers reduce the spatial size to make representations more manageable.
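To make the pooling step concrete, here is a minimal sketch of max pooling with a 2×2 window and stride 2. The paragraph above does not name a specific pooling operation, so max pooling is an assumption here. The sketch also assumes a single-channel feature map stored as nested Python lists. `max_pool2d` is a hypothetical helper name, not a library API.

```python
def max_pool2d(x, size=2, stride=2):
    """Max pooling over a single-channel 2D feature map given as a list of lists."""
    h, w = len(x), len(x[0])
    out_h = (h - size) // stride + 1
    out_w = (w - size) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Maximum over the size x size window whose top-left corner is (i*stride, j*stride)
            window = [x[i * stride + di][j * stride + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        out.append(row)
    return out


fmap = [
    [1, 1, 2, 4],
    [5, 6, 7, 8],
    [3, 2, 1, 0],
    [1, 2, 3, 4],
]
pooled = max_pool2d(fmap)
print(pooled)  # [[6, 8], [3, 4]]
```

A 2×2 window with stride 2 halves width and height, so this 4×4 map shrinks to 2×2. For a multi-channel volume, pooling would be applied to each channel independently, leaving the depth unchanged.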

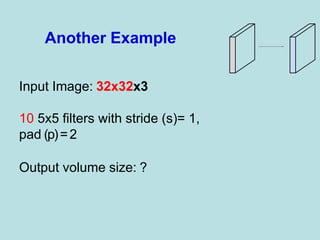

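![Input volume 32x32x3 convolved with ten 5x5 filters, stride 1, pad 2](https://image.slidesharecdn.com/deeplearning-200425115412/85/Deep-learning-12-320.jpg)

Input volume: 32×32×3. Apply 10 filters of size 5×5 with stride 1 and pad 2. The output volume size is

$$\left(\frac{32 + 2\cdot 2 - 5}{1} + 1\right) \times \left(\frac{32 + 2\cdot 2 - 5}{1} + 1\right) = 32 \times 32$$

spatially, so the output volume is 32×32×10.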
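The arithmetic above follows the general rule: output size = (W − F + 2P)/S + 1. Here W is the input width, F the filter size, S the stride and P the padding. The output depth equals the number of filters. Below is a minimal sketch of that rule. The function names are hypothetical, and the code uses integer division, so it assumes the configuration divides evenly.

```python
def conv_output_size(w, f, s=1, p=0):
    """Spatial output size along one axis: (W - F + 2P) / S + 1.

    Uses floor division, so it assumes (W - F + 2P) is divisible by S.
    """
    return (w - f + 2 * p) // s + 1


def conv_output_volume(in_shape, num_filters, f, s=1, p=0):
    """Output volume (height, width, depth); depth equals the number of filters."""
    h, w, _depth = in_shape
    return (conv_output_size(h, f, s, p), conv_output_size(w, f, s, p), num_filters)


print(conv_output_volume((32, 32, 3), num_filters=10, f=5, s=1, p=2))  # (32, 32, 10)
```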

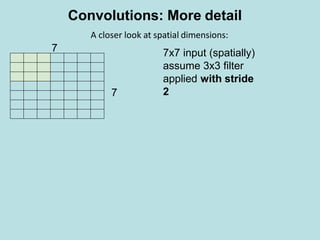

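![7x7 input with a 3x3 filter, stride 1](https://image.slidesharecdn.com/deeplearning-200425115412/85/Deep-learning-17-320.jpg)

Convolutions, more detail: a closer look at spatial dimensions. Take a 7×7 input (spatially) and assume a 3×3 filter applied with stride 1:

$$\left(\frac{7 + 2\cdot 0 - 3}{1} + 1\right) \times \left(\frac{7 + 2\cdot 0 - 3}{1} + 1\right) = 5 \times 5 \text{ output}$$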
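The 5×5 result can be checked by sliding the filter directly. This is a naive sketch that assumes a single-channel square input and filter stored as nested lists, with no padding. `conv2d` is a hypothetical helper name. As CNN layers typically do, it computes cross-correlation: the filter is not flipped.

```python
def conv2d(x, k, stride=1):
    """Naive 'valid' 2D convolution (cross-correlation) on a square input, no padding."""
    n, f = len(x), len(k)
    out_size = (n - f) // stride + 1
    out = [[0] * out_size for _ in range(out_size)]
    for i in range(out_size):
        for j in range(out_size):
            r, c = i * stride, j * stride  # top-left corner of the current window
            out[i][j] = sum(x[r + a][c + b] * k[a][b]
                            for a in range(f) for b in range(f))
    return out


x = [[1] * 7 for _ in range(7)]  # 7x7 input of ones
k = [[1] * 3 for _ in range(3)]  # 3x3 filter of ones
y = conv2d(x, k)
print(len(y), len(y[0]))  # 5 5
print(y[0][0])  # 9
```

Every output value is 9 here because each 3×3 window of ones is summed against a 3×3 filter of ones.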

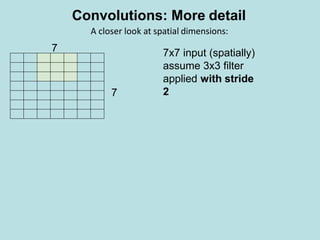

![7x7 input with a 3x3 filter, stride 2](https://image.slidesharecdn.com/deeplearning-200425115412/85/Deep-learning-20-320.jpg)

A 7×7 input (spatially) with a 3×3 filter applied with stride 2 gives a 3×3 output:

$$\left(\frac{7 + 2\cdot 0 - 3}{2} + 1\right) \times \left(\frac{7 + 2\cdot 0 - 3}{2} + 1\right) = 3 \times 3 \text{ output}$$
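One way to see where the 3 comes from is to list the positions where the filter lands along a single axis. The sketch below is a hypothetical helper that assumes no padding.

```python
def window_starts(n, f, stride):
    """Starting indices along one axis where an f-wide filter fits inside an n-wide input."""
    # The last valid start is n - f; range() stops before its end argument, hence n - f + 1.
    return list(range(0, n - f + 1, stride))


print(window_starts(7, 3, 1))  # [0, 1, 2, 3, 4] -> 5 positions per axis
print(window_starts(7, 3, 2))  # [0, 2, 4] -> 3 positions per axis, so a 3x3 output
```

With stride 3, `window_starts(7, 3, 3)` returns `[0, 3]`. The second window covers indices 3 to 5, and index 6 is never reached. That is why stride 3 does not fit a 7×7 input without padding.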

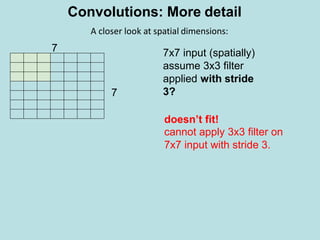

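![7x7 input with a 3x3 filter, stride 3](https://image.slidesharecdn.com/deeplearning-200425115412/85/Deep-learning-21-320.jpg)

What if the 3×3 filter is applied to the 7×7 input with stride 3?

$$\left(\frac{7 + 2\cdot 0 - 3}{3} + 1\right) \times \left(\frac{7 + 2\cdot 0 - 3}{3} + 1\right) = \left(\frac{4}{3} + 1\right) \times \left(\frac{4}{3} + 1\right) \approx 2.33 \times 2.33$$

The result is not an integer, so the filter does not fit: a 3×3 filter cannot tile a 7×7 input evenly with stride 3.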
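A layer configuration can be validated before it is used. The sketch below is a hypothetical helper that raises an error when (W − F + 2P) is not divisible by S, instead of silently rounding. Many frameworks (for example PyTorch's `Conv2d`) round down in this situation and ignore the leftover border.

```python
def conv_output_size_checked(w, f, s=1, p=0):
    """Return (W - F + 2P) / S + 1, raising ValueError if the filter does not tile the input."""
    span = w - f + 2 * p
    if span < 0 or span % s != 0:
        raise ValueError(f"filter {f} with stride {s}, pad {p} does not fit input of size {w}")
    return span // s + 1


print(conv_output_size_checked(7, 3, s=2))  # 3
try:
    conv_output_size_checked(7, 3, s=3)
except ValueError as err:
    print(err)  # filter 3 with stride 3, pad 0 does not fit input of size 7
```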

![7x7 input zero-padded with a 1-pixel border, 3x3 filter, stride 1](https://image.slidesharecdn.com/deeplearning-200425115412/85/Deep-learning-23-320.jpg)

In practice, it is common to zero-pad the border. For example, take a 7×7 input and a 3×3 filter applied with stride 1, and pad with a 1-pixel border of zeros. What is the output?

$$\left(\frac{7 + 2\cdot 1 - 3}{1} + 1\right) \times \left(\frac{7 + 2\cdot 1 - 3}{1} + 1\right) = 7 \times 7 \text{ output}$$
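Padding with a 1-pixel border turns the 7×7 input into a 9×9 one. A 3×3 filter at stride 1 then yields 9 − 3 + 1 = 7, which preserves the input size. The sketch below shows explicit zero padding, plus the usual rule for choosing a size-preserving pad at stride 1 with an odd filter size: P = (F − 1)/2. Both function names are hypothetical.

```python
def zero_pad(x, p):
    """Surround a 2D list of lists with a border of p zeros on every side."""
    width = len(x[0]) + 2 * p
    padded = [[0] * width for _ in range(p)]           # top border rows
    for row in x:
        padded.append([0] * p + list(row) + [0] * p)   # left and right borders
    padded.extend([0] * width for _ in range(p))       # bottom border rows
    return padded


def same_padding(f):
    """Padding that preserves spatial size at stride 1 for an odd filter size F: (F - 1) / 2."""
    if f % 2 == 0:
        raise ValueError("(F - 1) / 2 only gives size-preserving padding for odd F")
    return (f - 1) // 2


x = [[1] * 7 for _ in range(7)]
xp = zero_pad(x, same_padding(3))
print(len(xp), len(xp[0]))  # 9 9
print(same_padding(5))  # 2
```

The same rule explains the earlier 32×32×3 example: a 5×5 filter with pad 2 keeps the 32×32 spatial size.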

![7x7 input zero-padded with a 1-pixel border, 3x3 filter, stride 2](https://image.slidesharecdn.com/deeplearning-200425115412/85/Deep-learning-24-320.jpg)

With the same zero padding, take a 7×7 input and a 3×3 filter applied with stride 2, padded with a 1-pixel border. What is the output?

$$\left(\frac{7 + 2\cdot 1 - 3}{2} + 1\right) \times \left(\frac{7 + 2\cdot 1 - 3}{2} + 1\right) = 4 \times 4 \text{ output}$$
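The 4×4 result can be reproduced end to end. The sketch below pads implicitly: any coordinate outside the original input reads as zero, so no padded copy is built. It assumes a single-channel square input and filter, and `conv2d_padded` is a hypothetical name.

```python
def conv2d_padded(x, k, stride=1, pad=0):
    """Naive 2D cross-correlation on a square input with implicit zero padding."""
    n, f = len(x), len(k)
    out_size = (n + 2 * pad - f) // stride + 1

    def at(r, c):
        # (r, c) are coordinates in padded space; positions outside the input are zero.
        r, c = r - pad, c - pad
        return x[r][c] if 0 <= r < n and 0 <= c < n else 0

    return [[sum(at(i * stride + a, j * stride + b) * k[a][b]
                 for a in range(f) for b in range(f))
             for j in range(out_size)]
            for i in range(out_size)]


x = [[1] * 7 for _ in range(7)]
k = [[1] * 3 for _ in range(3)]
for row in conv2d_padded(x, k, stride=2, pad=1):
    print(row)
# [4, 6, 6, 4]
# [6, 9, 9, 6]
# [6, 9, 9, 6]
# [4, 6, 6, 4]
```

The smaller values along the edges come from windows that overlap the zero border. Corner windows see only a 2×2 patch of real input, and edge windows see a 2×3 patch.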

![7x7 input zero-padded with a 1-pixel border, 3x3 filter, stride 3](https://image.slidesharecdn.com/deeplearning-200425115412/85/Deep-learning-25-320.jpg)

With the same zero padding, take a 7×7 input and a 3×3 filter applied with stride 3, padded with a 1-pixel border. What is the output?

$$\left(\frac{7 + 2\cdot 1 - 3}{3} + 1\right) \times \left(\frac{7 + 2\cdot 1 - 3}{3} + 1\right) = 3 \times 3 \text{ output}$$
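As a recap, the sketch below tabulates every 7×7 case walked through above. It uses Python's standard `fractions.Fraction` so that a non-integer output size shows up exactly instead of being rounded. The helper name is hypothetical.

```python
from fractions import Fraction


def conv_output_size_exact(w, f, s, p):
    """(W - F + 2P) / S + 1 as an exact fraction, so non-integer results stay visible."""
    return Fraction(w - f + 2 * p, s) + 1


# (input W, filter F, stride S, pad P) for the 7x7 cases covered in these slides
cases = [(7, 3, 1, 0), (7, 3, 2, 0), (7, 3, 3, 0),
         (7, 3, 1, 1), (7, 3, 2, 1), (7, 3, 3, 1)]
for w, f, s, p in cases:
    size = conv_output_size_exact(w, f, s, p)
    status = f"{size}x{size}" if size.denominator == 1 else f"{float(size):.2f} (does not fit)"
    print(f"W={w} F={f} S={s} P={p} -> {status}")
```

The output lists 5x5, 3x3 and 2.33 (does not fit) for the unpadded cases, and 7x7, 4x4 and 3x3 for the padded ones. A 1-pixel pad is exactly what lets stride 3 fit.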

![General architecture of CNNs](https://image.slidesharecdn.com/deeplearning-200425115412/85/Deep-learning-31-320.jpg)

General architecture of CNNs: `[(CONV-RELU)*N-POOL]*M-FC`, where N is up to 5 and M is large.

FC: contains neurons that connect to the entire input volume, as in ordinary neural networks.
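The pattern `[(CONV-RELU)*N-POOL]*M-FC` can be expanded mechanically into a concrete layer sequence. This is a minimal sketch: `cnn_layer_pattern` is a hypothetical helper, and the `num_fc` parameter is an assumption that allows more than one fully connected layer at the end (the pattern itself shows a single FC).

```python
def cnn_layer_pattern(n, m, num_fc=1):
    """Expand [(CONV-RELU)*N-POOL]*M-FC into a flat list of layer names."""
    layers = []
    for _ in range(m):
        layers += ["CONV", "RELU"] * n  # N convolution + ReLU pairs
        layers.append("POOL")           # then one pooling layer
    layers += ["FC"] * num_fc           # fully connected layer(s) at the end
    return layers


print("-".join(cnn_layer_pattern(n=2, m=2)))
# CONV-RELU-CONV-RELU-POOL-CONV-RELU-CONV-RELU-POOL-FC
```

Each POOL layer typically reduces width and height, so after M blocks the volume is small enough for the fully connected layer(s) to connect to all of it.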