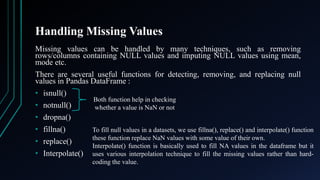

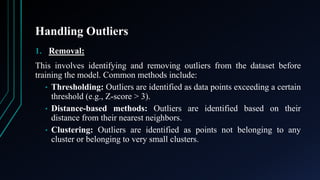

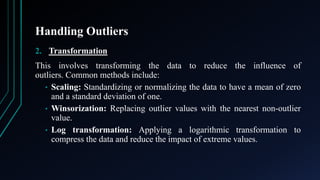

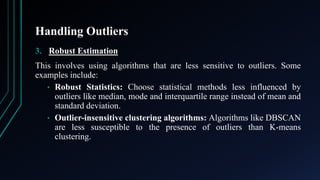

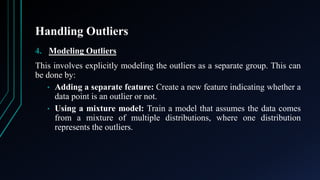

Data preprocessing is a vital step in data analysis: raw data is cleaned, transformed, and integrated so that downstream results are accurate and consistent. The core data cleaning tasks are handling missing values, addressing outliers, and removing duplicates, all of which improve data quality for machine learning. Common methods include imputation for missing values, robust statistics for outliers, and visualization tools for spotting irregularities in the first place.
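
As a rough illustration of the three cleaning tasks, the following minimal sketch uses only the Python standard library. The records, the `age` field, the helper names (`drop_duplicates`, `impute_median`, `iqr_outliers`), and the 1.5 × IQR threshold are all assumptions made for this example, not part of any particular dataset or library.

```python
from statistics import median, quantiles

# Hypothetical raw records: one missing age, one exact duplicate,
# and one implausible value.
records = [
    {"id": 1, "age": 34},
    {"id": 2, "age": None},
    {"id": 3, "age": 29},
    {"id": 3, "age": 29},
    {"id": 4, "age": 41},
    {"id": 5, "age": 38},
    {"id": 6, "age": 240},
]


def drop_duplicates(rows):
    """Remove exact duplicate records, keeping the first occurrence."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the record
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def impute_median(rows, field):
    """Fill missing (None) values of `field` with the median of observed values."""
    observed = [r[field] for r in rows if r[field] is not None]
    fill = median(observed)
    return [{**r, field: fill if r[field] is None else r[field]} for r in rows]


def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the first or third quartile."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


deduped = drop_duplicates(records)
cleaned = impute_median(deduped, "age")
outliers = iqr_outliers([r["age"] for r in cleaned])

print(len(records), "->", len(deduped), "records after removing duplicates")
print("flagged as outliers:", outliers)
```

The median is used for imputation here because, unlike the mean, it is barely affected by the extreme value still present in the data. Whether a flagged outlier should be dropped, capped, or kept depends on the domain; the sketch only identifies candidates.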