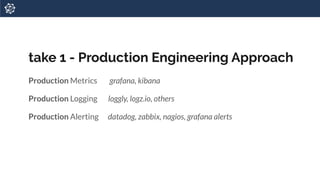

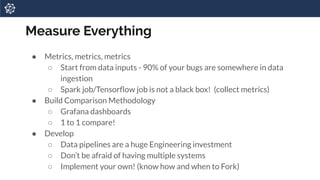

This document discusses the need for observability in data pipelines. It notes that real-world data pipelines often fail, or take a long time to rerun, without giving any insight into what went wrong. It attributes these failures to frequent changes in code, data, dependencies, and infrastructure. The document recommends taking a production engineering approach to observability, using metrics, logging, and alerting tools. It also suggests adopting experiment management and encapsulating reporting in notebooks. Most importantly, it stresses measuring everything: emitting metrics at every stage of data ingestion and processing makes it easier to see where issues occur.
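As an illustration of measuring every stage, here is a minimal Python sketch that uses only the standard library. The stage names (`ingest`, `clean`, `aggregate`), the `measured_stage` helper, and the in-memory `METRICS` store are hypothetical and do not come from the document. A real pipeline would likely export these values to a dedicated metrics and alerting system rather than keep them in a dictionary.

```python
import logging
import time
from collections import defaultdict
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")

# Hypothetical in-memory metric store, keyed by stage name.
METRICS = defaultdict(list)


@contextmanager
def measured_stage(name):
    """Record duration, row counts, and success/failure for one stage."""
    record = {"stage": name, "rows_in": 0, "rows_out": 0, "status": "ok"}
    start = time.perf_counter()
    try:
        yield record
    except Exception:
        # Mark the stage as failed and log the traceback before re-raising.
        record["status"] = "failed"
        log.exception("stage %s failed", name)
        raise
    finally:
        # Runs on success and on failure, so every stage leaves a record.
        record["seconds"] = time.perf_counter() - start
        METRICS[name].append(record)
        log.info("stage metrics: %s", record)


def run_pipeline(raw_rows):
    """Toy three-stage pipeline; each stage reports its own metrics."""
    with measured_stage("ingest") as m:
        rows = list(raw_rows)
        m["rows_in"] = m["rows_out"] = len(rows)

    with measured_stage("clean") as m:
        m["rows_in"] = len(rows)
        # Drop rows with a missing value; the row-count delta shows the loss.
        rows = [r for r in rows if r.get("value") is not None]
        m["rows_out"] = len(rows)

    with measured_stage("aggregate") as m:
        m["rows_in"] = len(rows)
        total = sum(r["value"] for r in rows)
        m["rows_out"] = 1

    return total


result = run_pipeline([{"value": 1}, {"value": None}, {"value": 3}])
```

Comparing `rows_in` with `rows_out` for each stage shows where data is being dropped, and the `status` and `seconds` fields show which stage failed or slowed down, without rerunning the whole pipeline.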