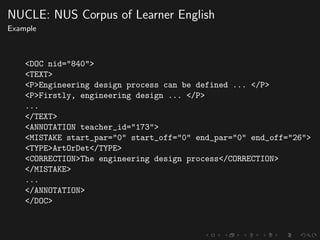

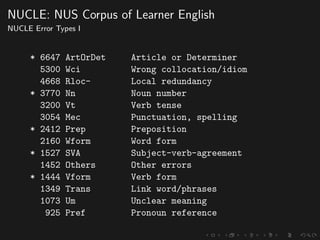

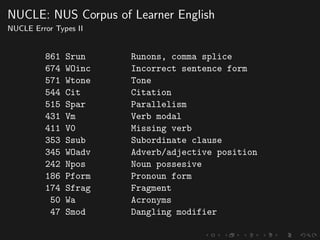

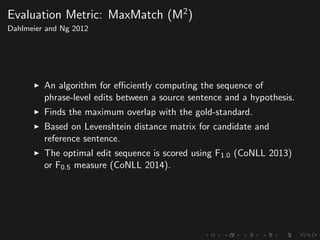

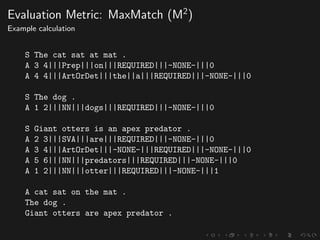

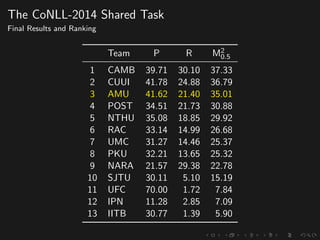

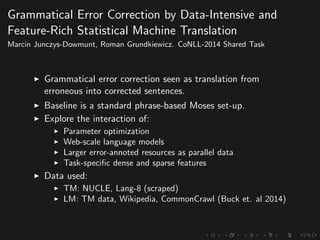

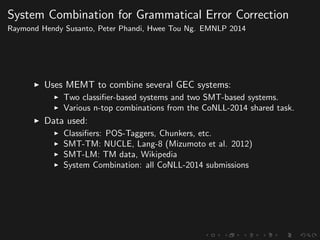

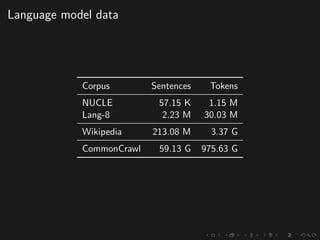

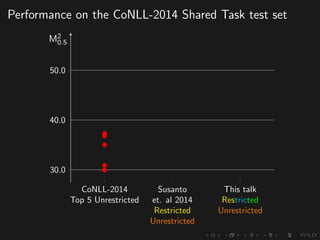

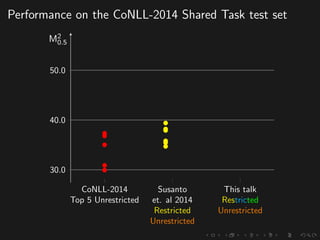

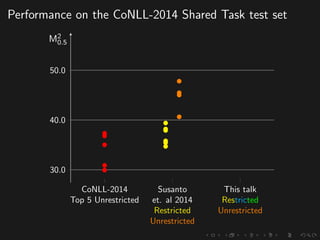

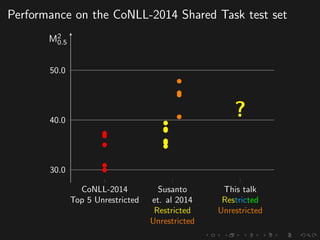

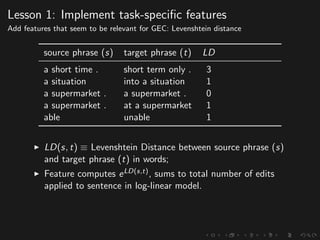

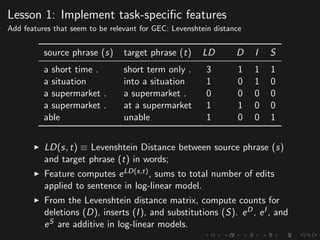

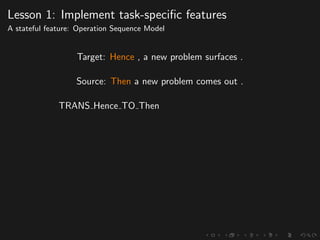

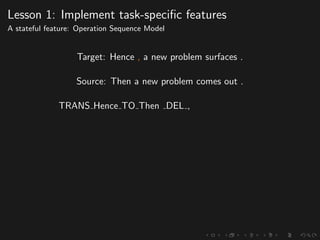

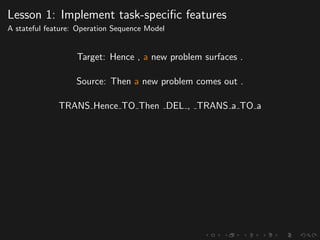

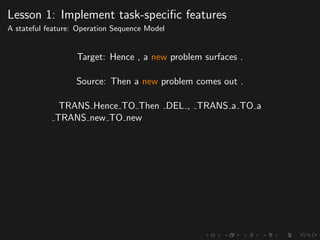

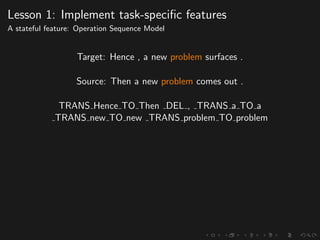

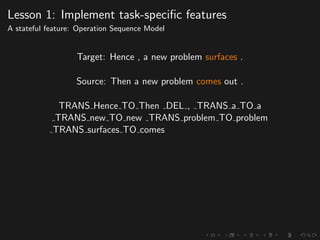

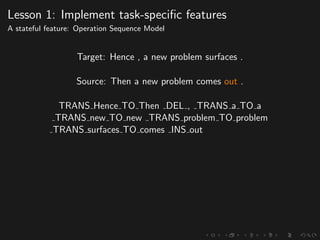

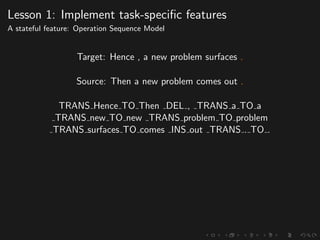

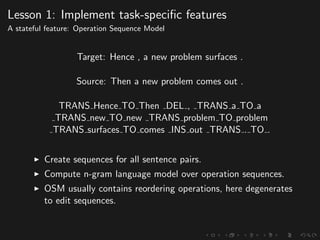

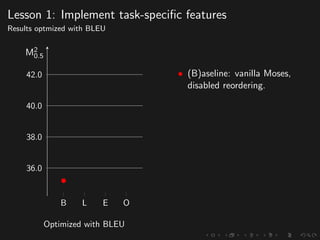

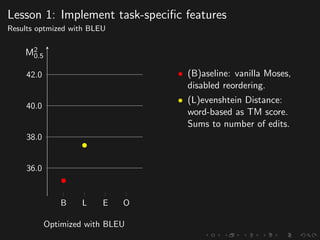

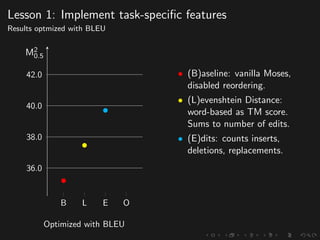

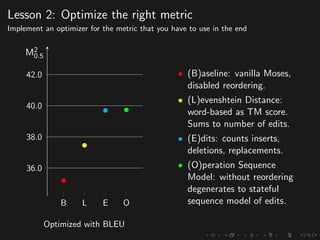

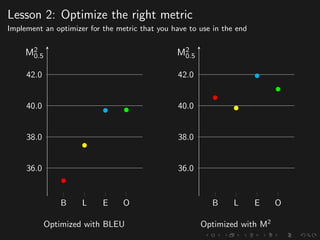

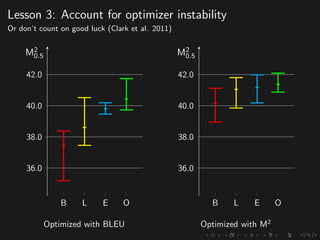

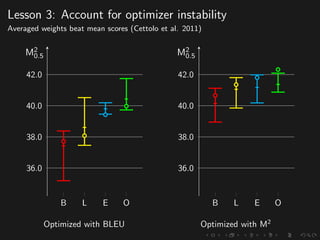

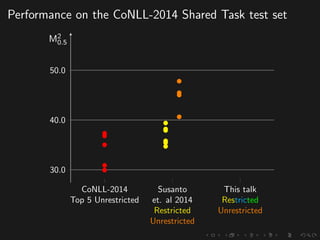

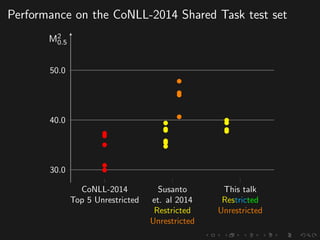

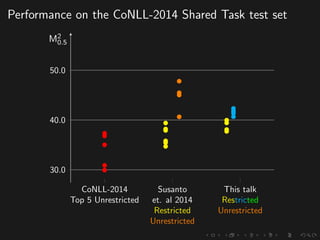

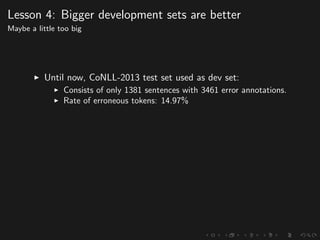

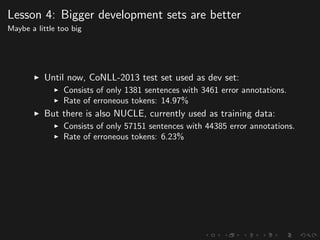

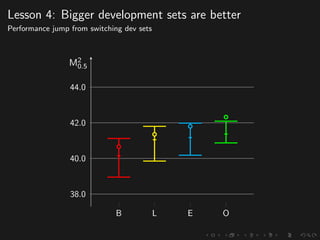

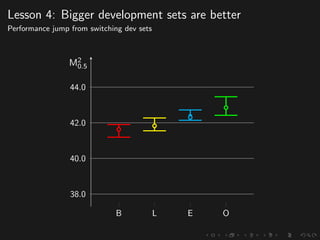

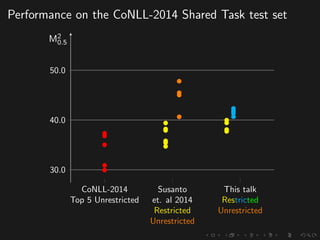

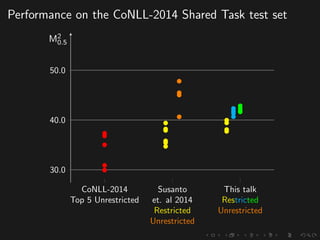

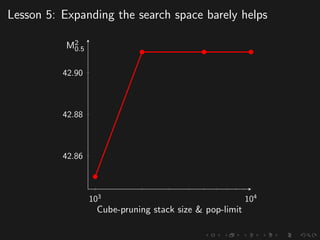

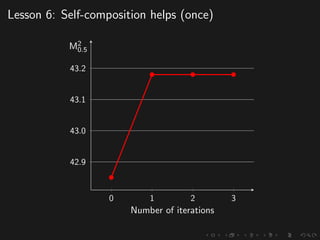

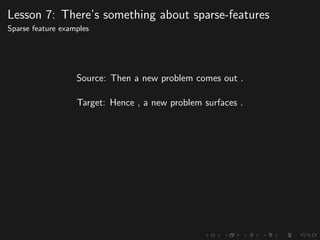

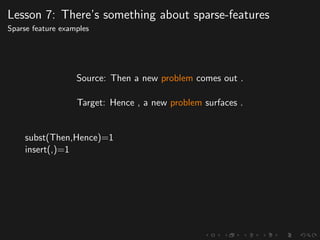

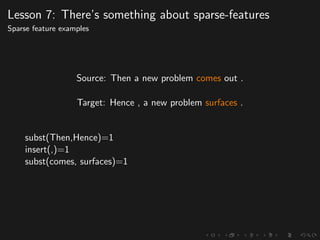

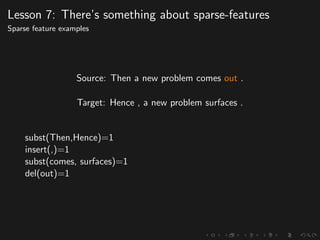

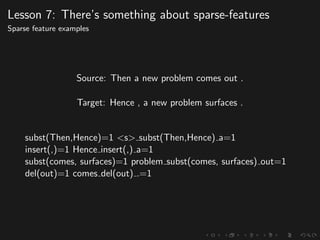

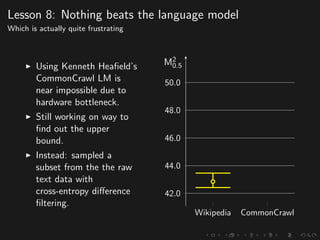

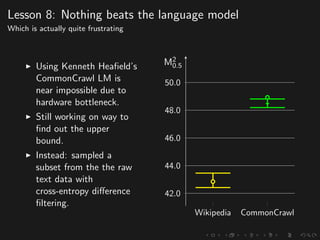

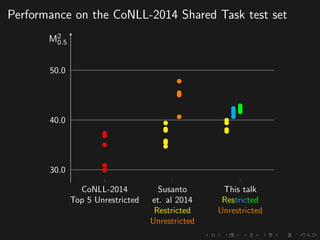

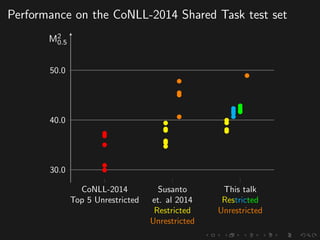

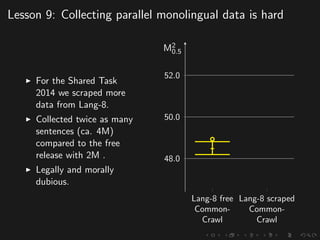

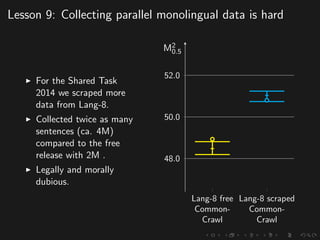

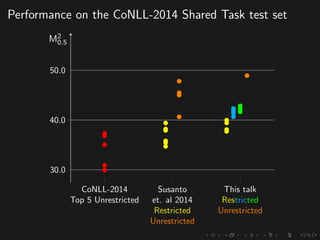

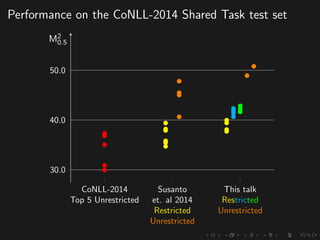

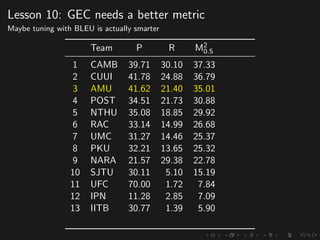

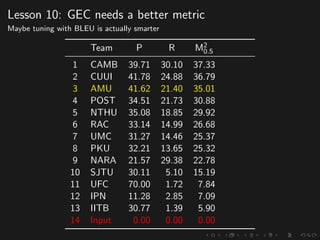

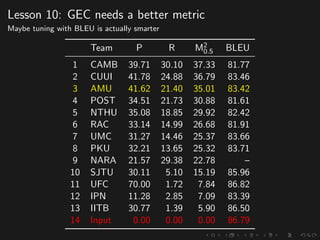

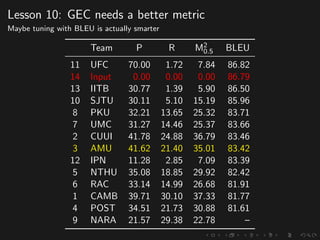

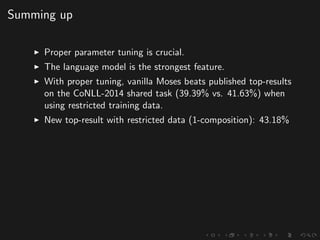

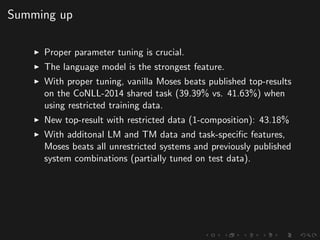

The document discusses advances in grammatical error correction (GEC) for learners of English as a second language (ESL), tracing the evolution of the CoNLL shared tasks and the teams that took part in them. It presents the NUCLE corpus, evaluation with the MaxMatch metric, system combination, and lessons learned from the 2014 shared task, which point to the importance of feature engineering and large training datasets. The key takeaways are that GEC systems should be optimized for the evaluation metric and that task-specific features improve correction strategies.
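
To make the MaxMatch evaluation mentioned above more concrete, the following is a minimal sketch of how precision, recall, and an F-score can be computed once system edits and gold edits are available as sets. It is an illustration only, not the official scorer: the real MaxMatch scorer also searches for the system edit sequence that best overlaps the gold annotation, a step skipped here. The `Edit` tuple layout, the function name `f_beta`, the default `beta=0.5`, and the handling of empty edit sets are all assumptions made for this example.

```python
from typing import Set, Tuple

# Hypothetical edit representation: (start token index, end token index, replacement text).
Edit = Tuple[int, int, str]


def f_beta(system: Set[Edit], gold: Set[Edit], beta: float = 0.5) -> Tuple[float, float, float]:
    """Return (precision, recall, F_beta) for already-extracted edit sets.

    Assumption: an empty system set gives precision 1.0 and an empty gold
    set gives recall 1.0, a convention chosen for this sketch.
    """
    matched = len(system & gold)  # edits proposed by the system that are also in gold
    precision = matched / len(system) if system else 1.0
    recall = matched / len(gold) if gold else 1.0
    if precision + recall == 0:
        return precision, recall, 0.0
    b2 = beta * beta
    f = (1 + b2) * precision * recall / (b2 * precision + recall)
    return precision, recall, f


# Toy data, invented for illustration only.
gold_edits = {(1, 2, "went"), (4, 5, "the"), (7, 8, "")}
system_edits = {(1, 2, "went"), (4, 5, "a")}

p, r, f = f_beta(system_edits, gold_edits)
print(f"P={p:.3f} R={r:.3f} F0.5={f:.3f}")
```

With `beta` below 1, precision is weighted more heavily than recall, so a system that proposes fewer but more reliable corrections scores higher than one that proposes many uncertain ones.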