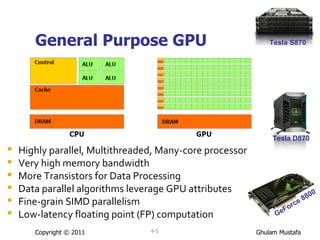

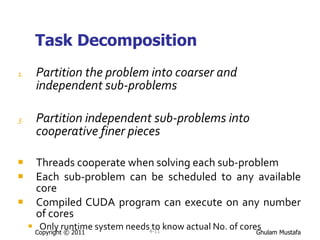

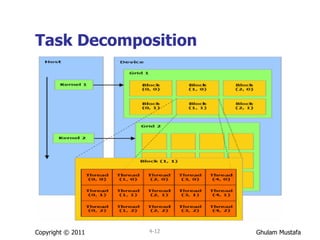

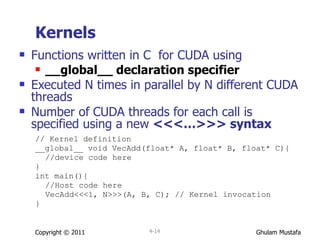

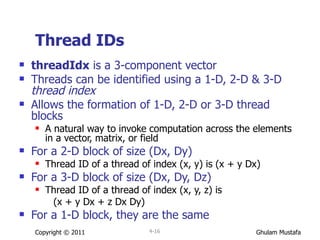

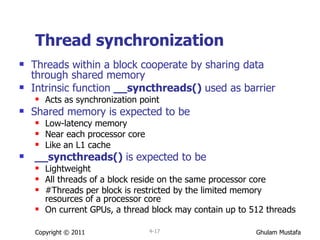

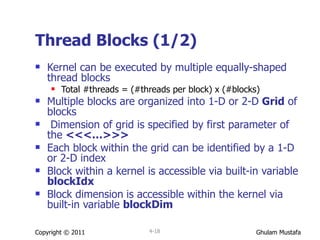

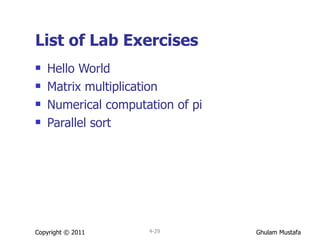

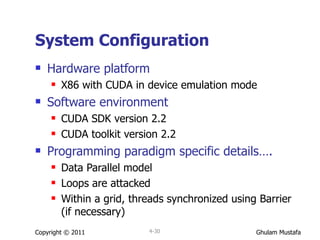

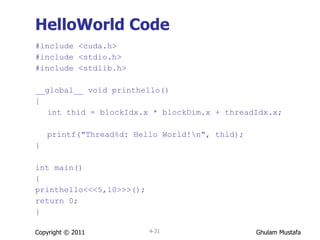

The document summarizes a lecture on parallel computing with CUDA (Compute Unified Device Architecture). It introduces CUDA as a parallel programming model for GPUs, covering key concepts like memory architecture, host-GPU workload partitioning, programming paradigm, and programming examples. It then outlines the agenda, benefits of GPU computing, and provides details on CUDA programming interfaces, kernels, threads, blocks, and memory hierarchies. Finally, it lists some lab exercises on CUDA programming including HelloWorld, matrix multiplication, and parallel sorting algorithms.

![Threads within kernel Each thread of kernel is given a unique thread ID Accessible within the kernel via built-in variable threadIdx // Kernel definition __global__ void VecAdd(float* A, float* B, float* C){ int i = threadIdx.x; //Each threads performs one pair-wise addition C[i] = A[i] + B[i]; } int main(){ // Kernel invocation VecAdd<<<1, N>>>(A, B, C); } Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-15-320.jpg)

![Example // Kernel definition __global__ void MatAdd(float A[N][N], float B[N][N], float C[N][N]){ int i = blockIdx.x * blockDim.x + threadIdx.x; // x + y Dx int j = blockIdx.y * blockDim.y + threadIdx.y; // y + x Dy if (i < N && j < N) C[i][j] = A[i][j] + B[i][j]; } int main(){ // Kernel invocation dim3 dimBlock(16, 16); //16x16=256 threads dim3 dimGrid((N + dimBlock.x – 1) / dimBlock.x, (N + dimBlock.y – 1) / dimBlock.y); MatAdd<<<dimGrid, dimBlock>>>(A, B, C); } Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-20-320.jpg)

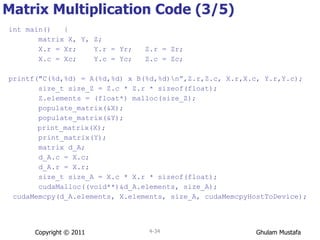

![Matrix Multiplication Code (1/5) /* Matrices are stored in row-major order: * M(row, col) = M.ents[row * M.w + col] */ #define BLOCK_SZ 2 #define Xc (2 * BLOCK_SZ) #define Xr (3 * BLOCK_SZ) #define Yc (2 * BLOCK_SZ) #define Yr Xc #define Zc Yc #define Zr Xr typedef struct Matrix{ int r,c; float* elements; } matrix; void populate_matrix(matrix*); void print_matrix(matrix); Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-32-320.jpg)

![Matrix Multiplication Code (2/5) __global__ void matrix_mul_krnl(matrix A, matrix B, matrix C) { float C_entry = 0; int row = blockIdx.y * blockDim.y + threadIdx.y; int col = blockIdx.x * blockDim.x + threadIdx.x; int i; for (i = 0; i < A.c; i++) C_entry += A.elements[row * A.c + i] * B.elements[i * B.c + col]; C.elements[row * C.c + col] = C_entry; } Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-33-320.jpg)

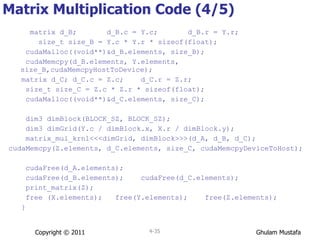

![Matrix Multiplication Code (5/5) void populate_matrix(matrix* mat){ int dim = mat -> c * mat -> r; size_t sz = dim * sizeof(float); mat -> elements = (float*) malloc(sz); int i; for (i = 0; i < dim; i++) mat->elements[i] = (float)(rand()%1000); } void print_matrix(matrix mat){ int i, n = 0, dim; dim = mat.c * mat.r; for (i = 0; i < dim; i++) { if (i == mat.c * n){ printf("\n"); n++; } printf("%0.2f\t", mat.elements[i]); } } Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-36-320.jpg)

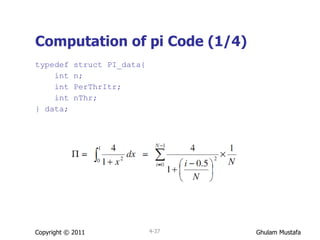

![Computation of pi Code (2/4) __global__ void calculate_PI(data d, float* s){ float sum, x, w; int itr,i,j; itr = d.PerThrItr; i = blockIdx.x * blockDim.x + threadIdx.x; int N = d.n-i; w = 1.0/(float)N; sum = 0.0; if (i < d.nThr) { for (j = i * itr; j < (i * itr+itr); j++) { x = w * (j-0.5); sum+= (4.0)/(1.0 + x*x); } s[i] = sum * w; } } Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-38-320.jpg)

![Computation of pi Code (3/4) int main(int argc, char** argv){ if(argc < 2){ printf("Usage: ./<progname> #itrations #Threads\n"); exit(1); } data pi_data; float PI=0.0; pi_data.n = atoi(argv[1]); pi_data.nThr = atoi(argv[2]); pi_data.PerThrItr = pi_data.n/pi_data.nThr; float *d_sum; float *h_sum; size_t size = pi_data.nThr * sizeof(float); cudaMalloc((void**)&d_sum, size); h_sum = (float*) malloc(size); Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-39-320.jpg)

![Computation of pi Code (4/4) int threads_per_block = 4; int blocks_per_grid; blocks_per_grid = (pi_data.nThr + threads_per_block - 1)/threads_per_block; calculate_PI<<<blocks_per_grid,threads_per_block>>>(pi_data, d_sum); cudaMemcpy(h_sum, d_sum, size, cudaMemcpyDeviceToHost); int i; for (i = 0; i < pi_data.nThr; i++) PI+= h_sum[i]; printf("Using %d itrations, Value of PI is %f \n", pi_data.n, PI); cudaFree(d_sum); } Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-40-320.jpg)

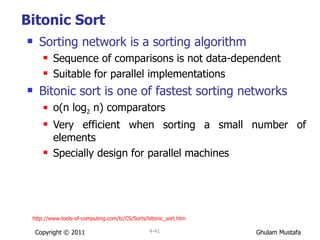

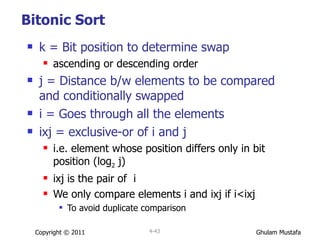

![Bitonic Sort All elements Sorted single element subsequences Pairs of elements Sorted in ascending or descending subsequences Elements differing in bit 0 (lowest) are compared and exchanged conditionally If (bit 1 is zero) elements are sorted in ascending order If(bit 1 is 1) Elements are sorted in descending order Copyright © 2011 4- Index 0 1 2 3 4 5 6 7 Binary Form 0000 0001 0010 0011 0100 0101 0110 0111 [0000 , 0001] ascending [0010 , 0011] descending [0100 , 0101] ascending [0110 , 0111] descending](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-42-320.jpg)

![Parallel Sort Code (1/3) #define NUM 32 __device__ inline void swap(int & a, int & b){ int tmp = a; a = b; b = tmp; } __global__ static void bitonicSort(int * values){ extern __shared__ int shared[]; const unsigned int tid = threadIdx.x; shared[tid] = values[tid]; __syncthreads(); Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-45-320.jpg)

![// Parallel bitonic sort for (unsigned int k = 2; k <= NUM; k *= 2) { // Bitonic merge: for (unsigned int j = k / 2; j>0; j /= 2) { unsigned int ixj = tid ^ j; if (ixj > tid){ if ((tid & k) == 0) { if (shared[tid] > shared[ixj]) swap(shared[tid], shared[ixj]); } else{ if (shared[tid] < shared[ixj]) swap(shared[tid], shared[ixj]); } } __syncthreads(); } } // Write result. values[tid] = shared[tid]; } Parallel Sort Code (2/3) Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-46-320.jpg)

![Parallel Sort Code (3/3) int main(int argc, char** argv){ int values[NUM]; for(int i = 0; i < NUM; i++) values[i] = rand()%1000; int * dvalues; cudaMalloc((void**)&dvalues, sizeof(int) * NUM); cudaMemcpy(dvalues, values, sizeof(int) * NUM,cudaMemcpyHostToDevice); bitonicSort<<<1, NUM, sizeof(int) * NUM>>>(dvalues); cudaMemcpy(values, dvalues, sizeof(int) * NUM,cudaMemcpyDeviceToHost); cudaFree(dvalues); bool passed = true; int i; for( i = 1; i < NUM; i++) { if (values[i-1] > values[i]) passed = false; } printf( "Test %s\n", passed ? "PASSED" : "FAILED"); } Copyright © 2011 4-](https://image.slidesharecdn.com/cuda2011-110726012136-phpapp01/85/Cuda-2011-47-320.jpg)