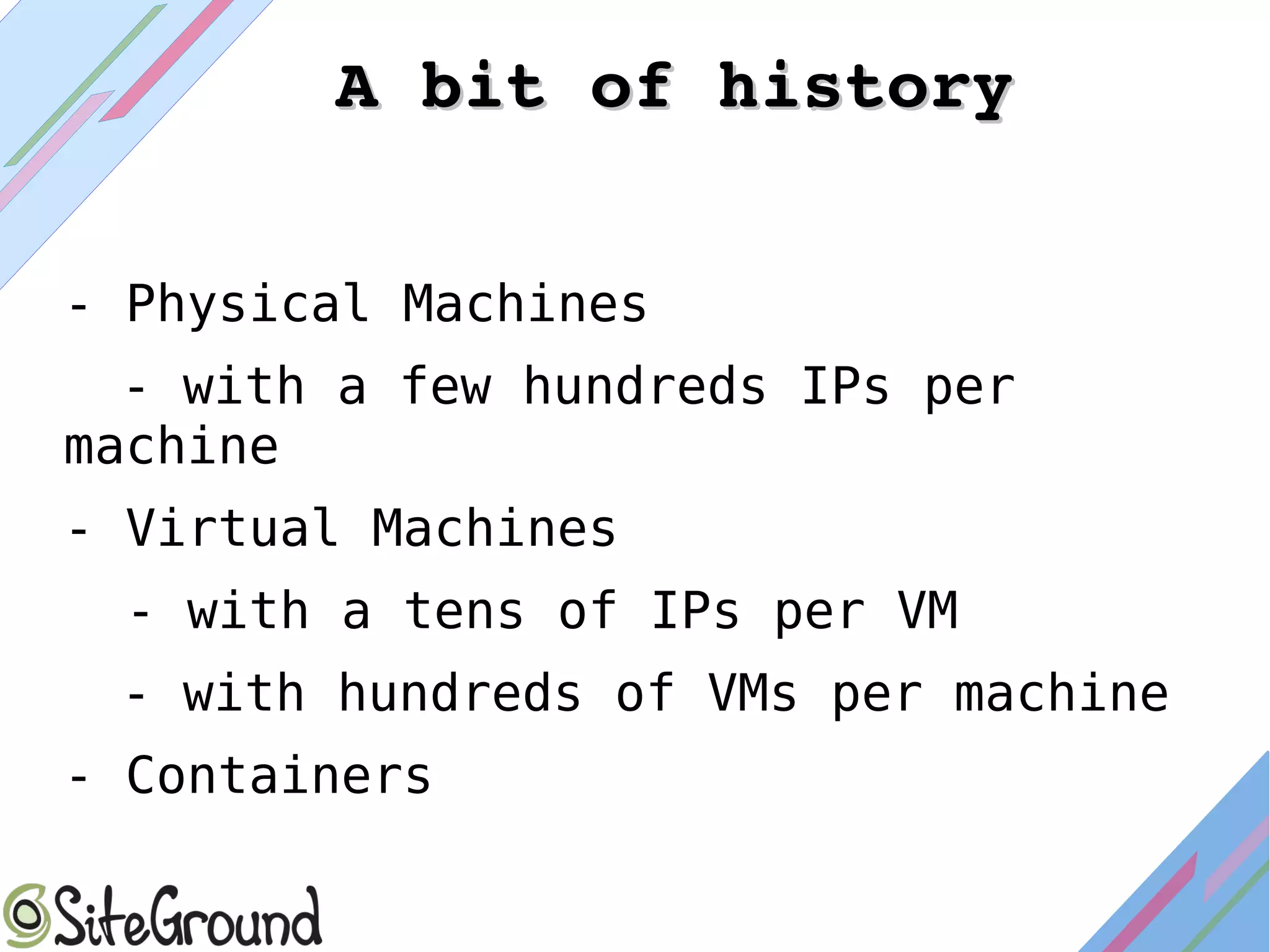

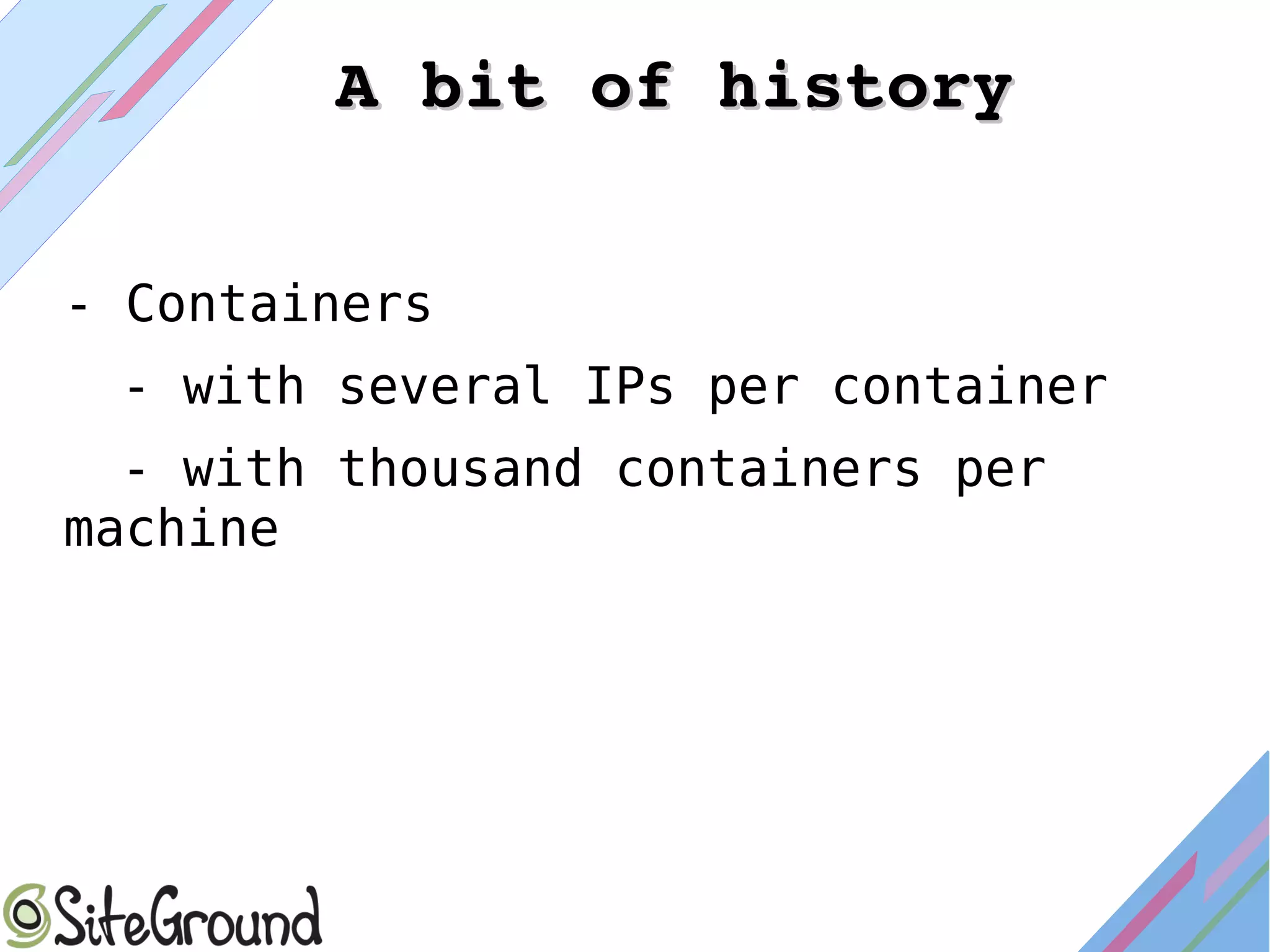

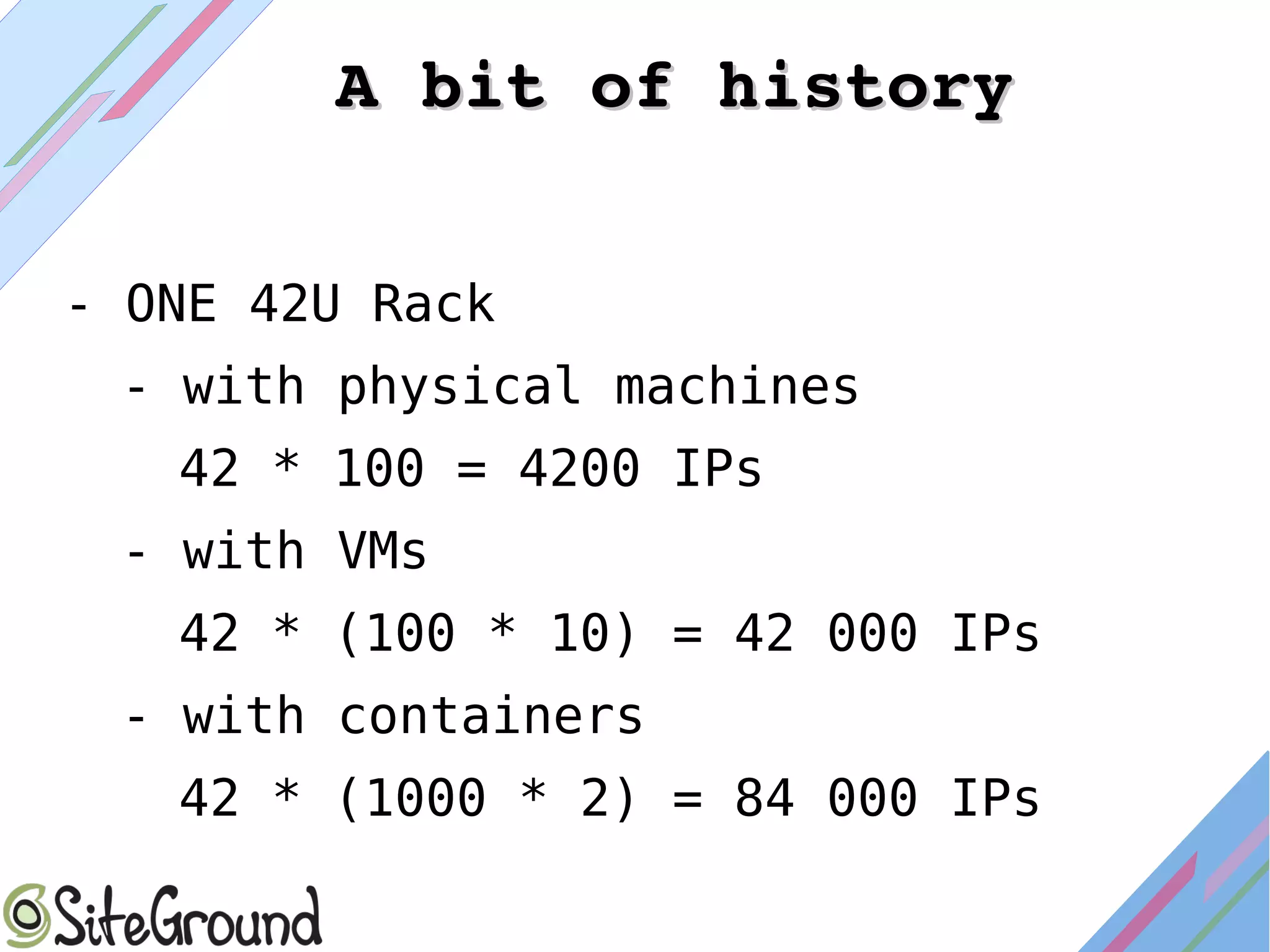

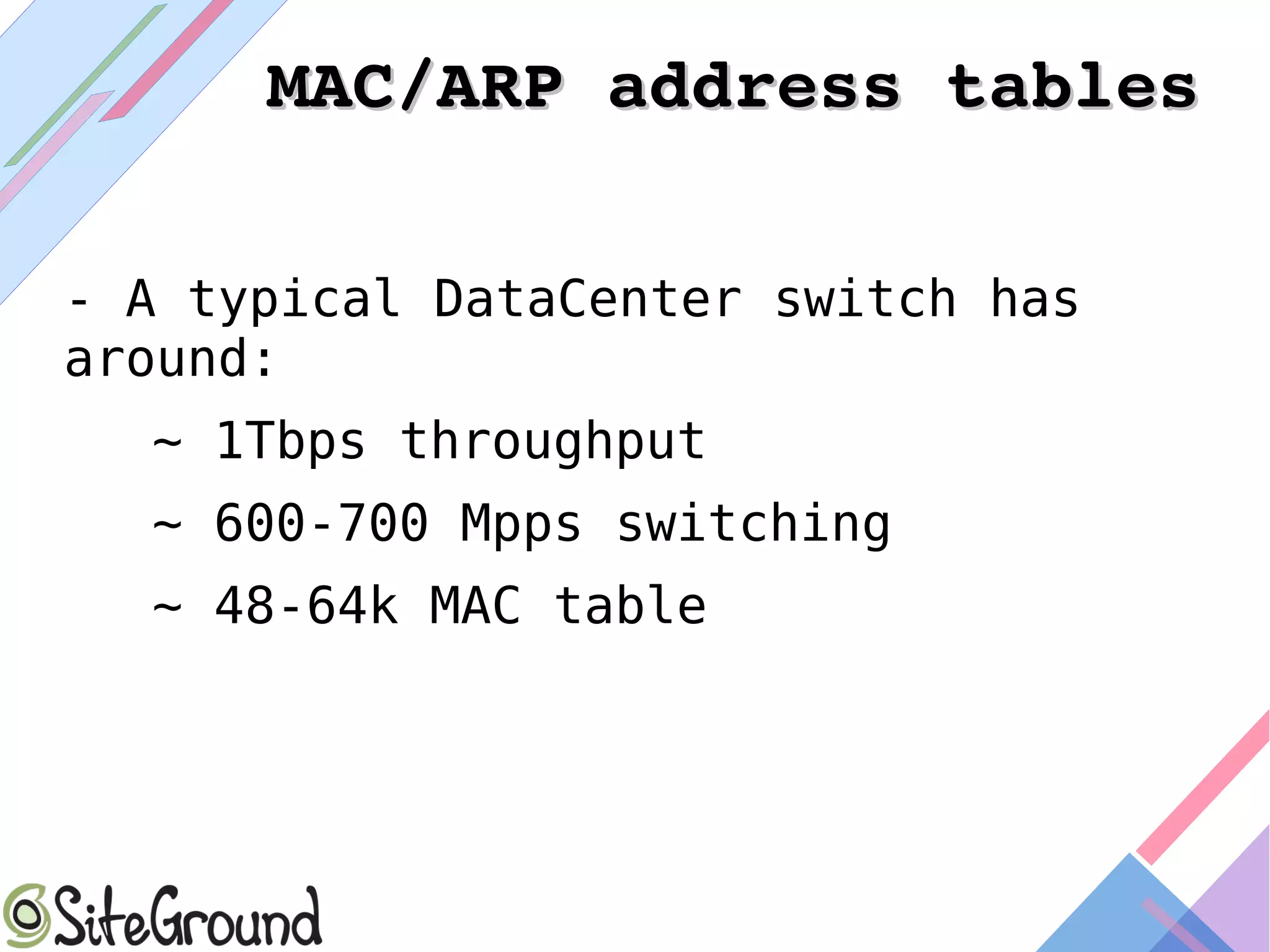

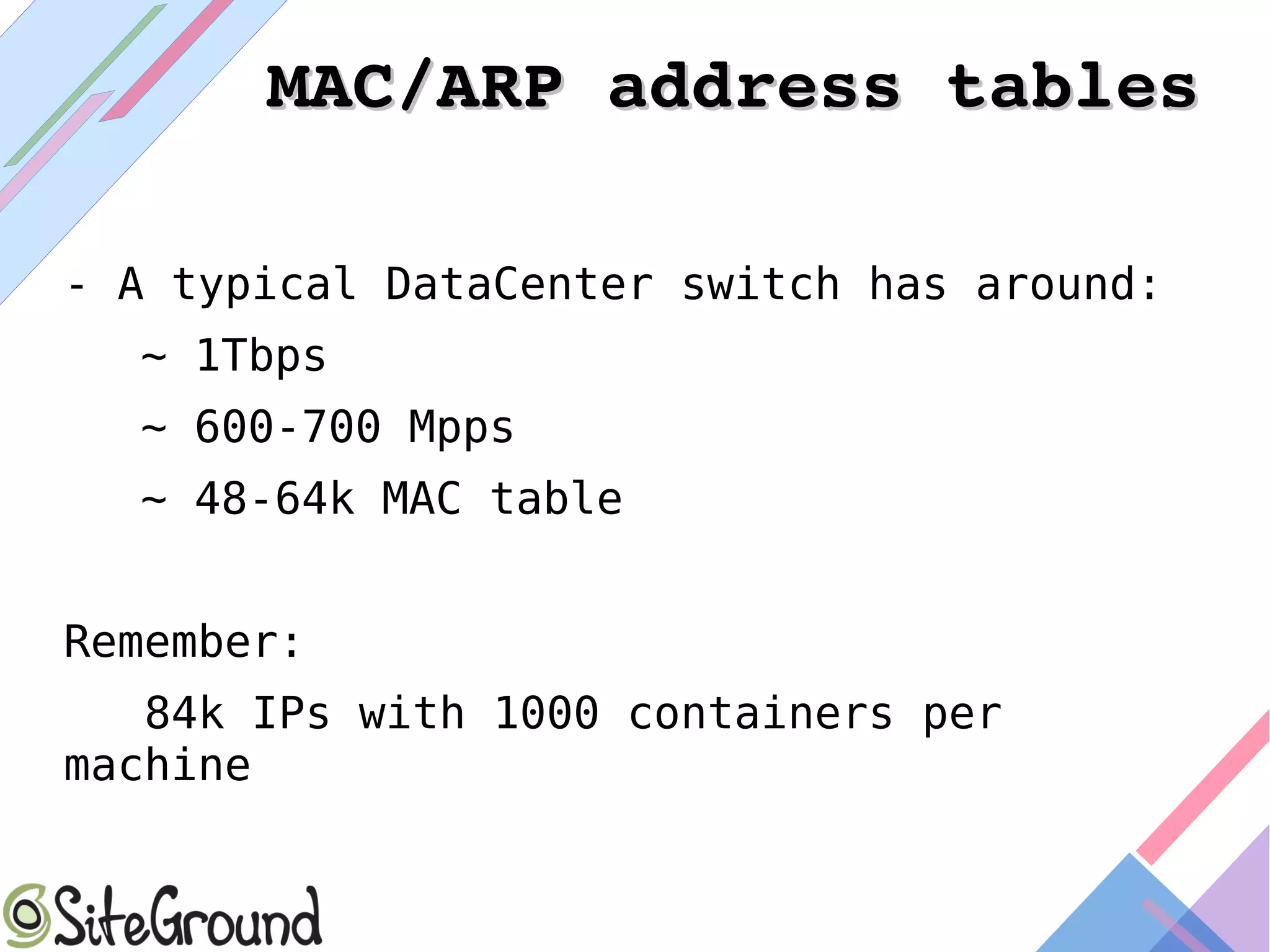

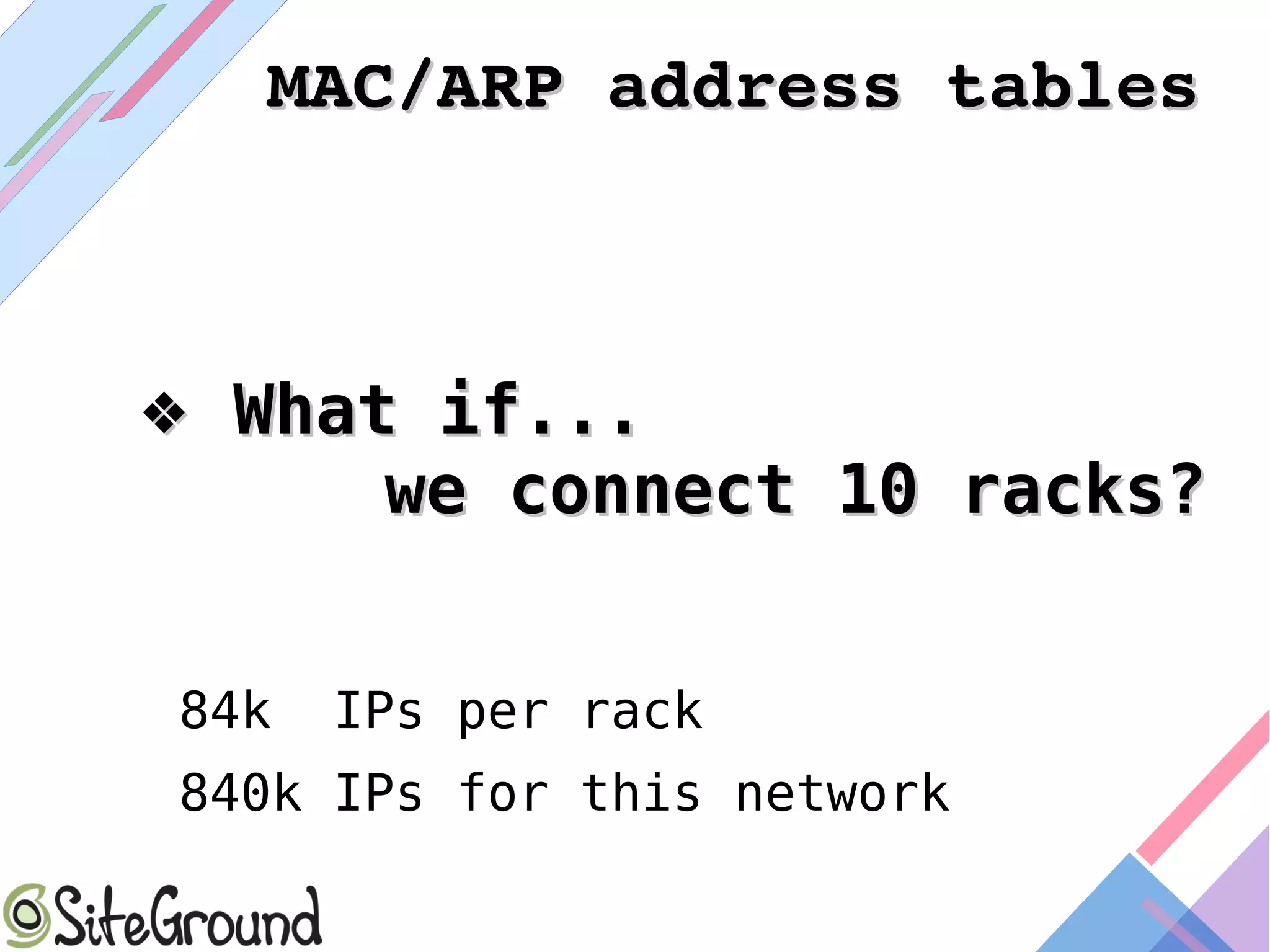

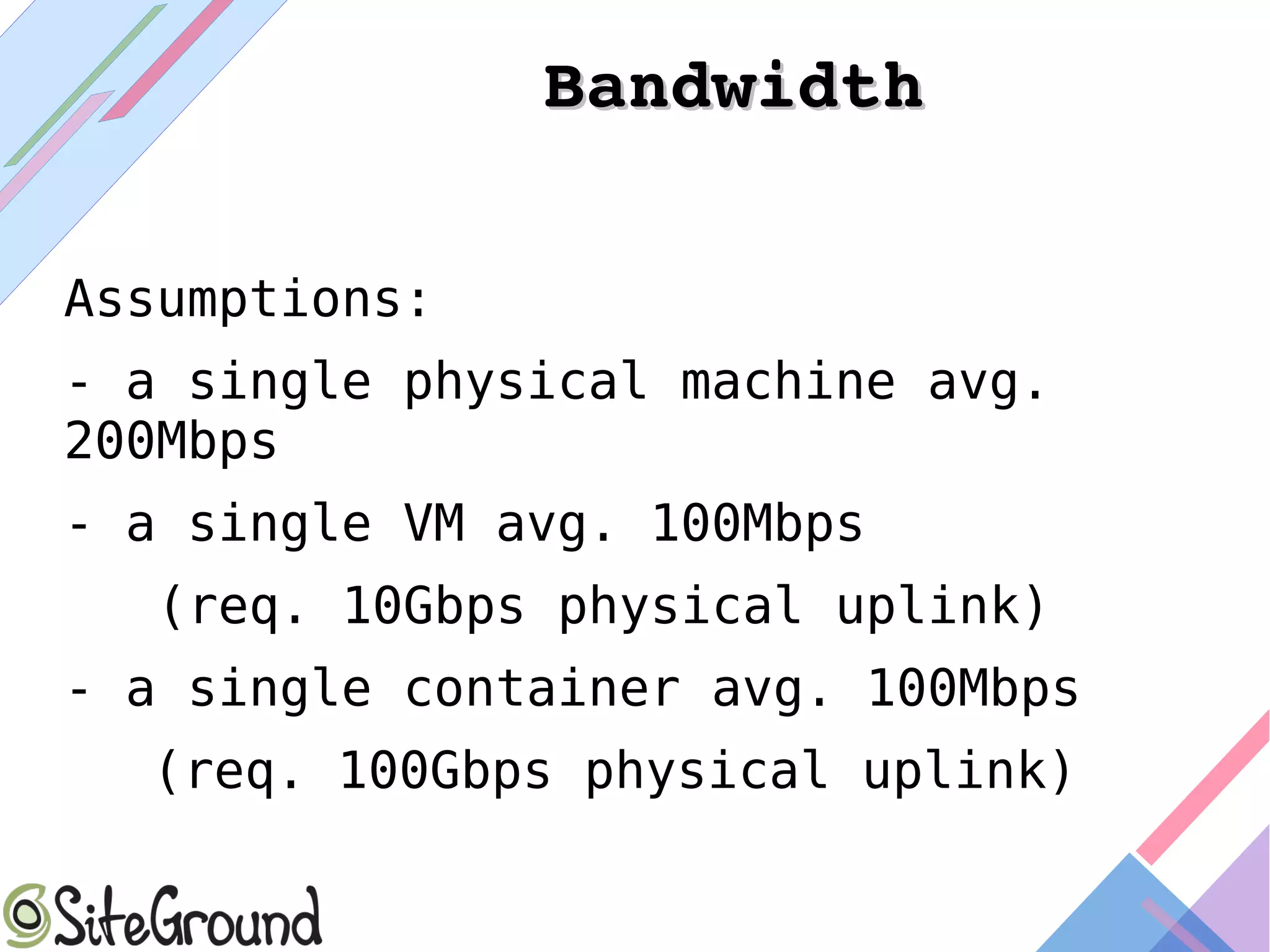

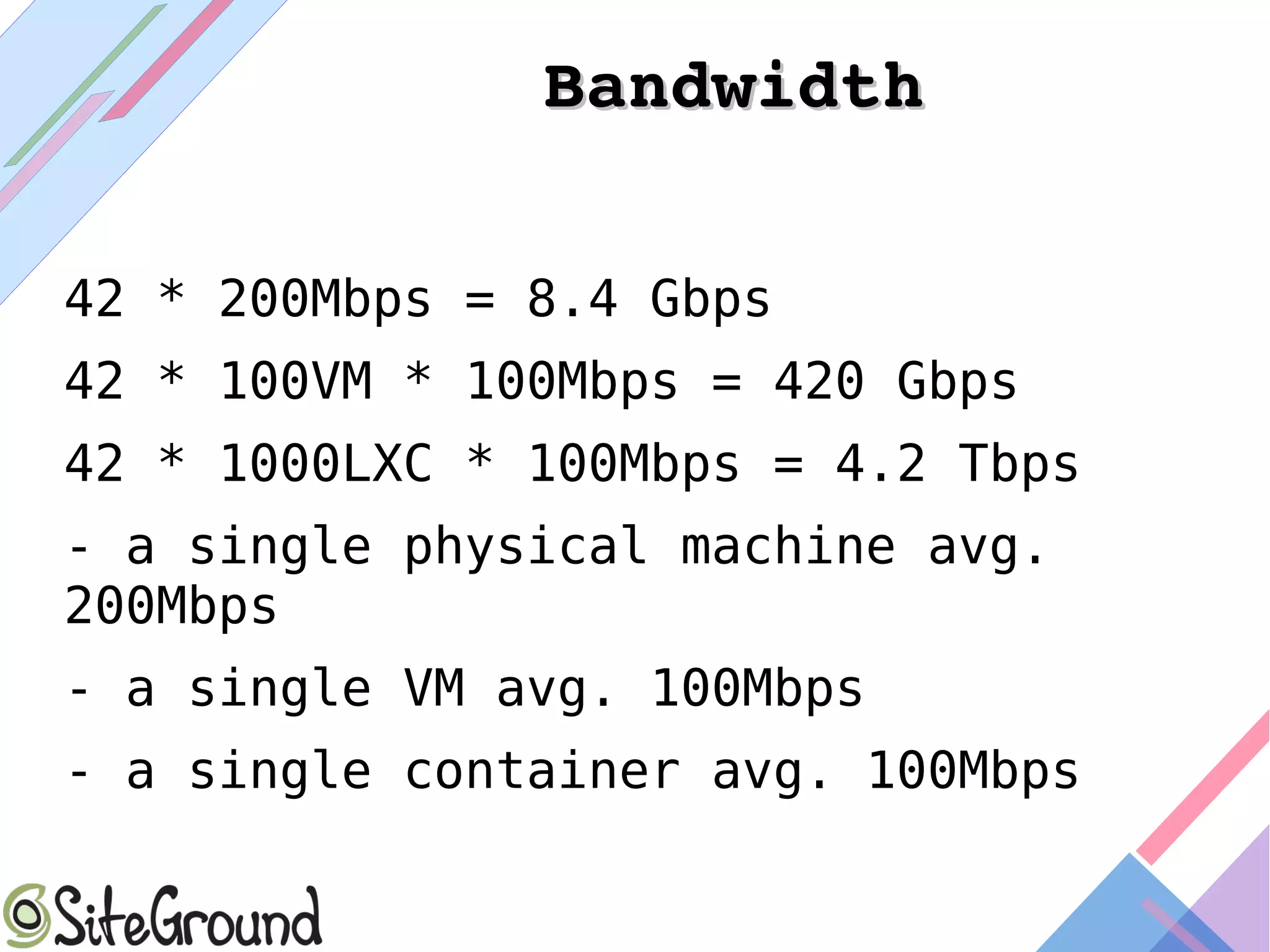

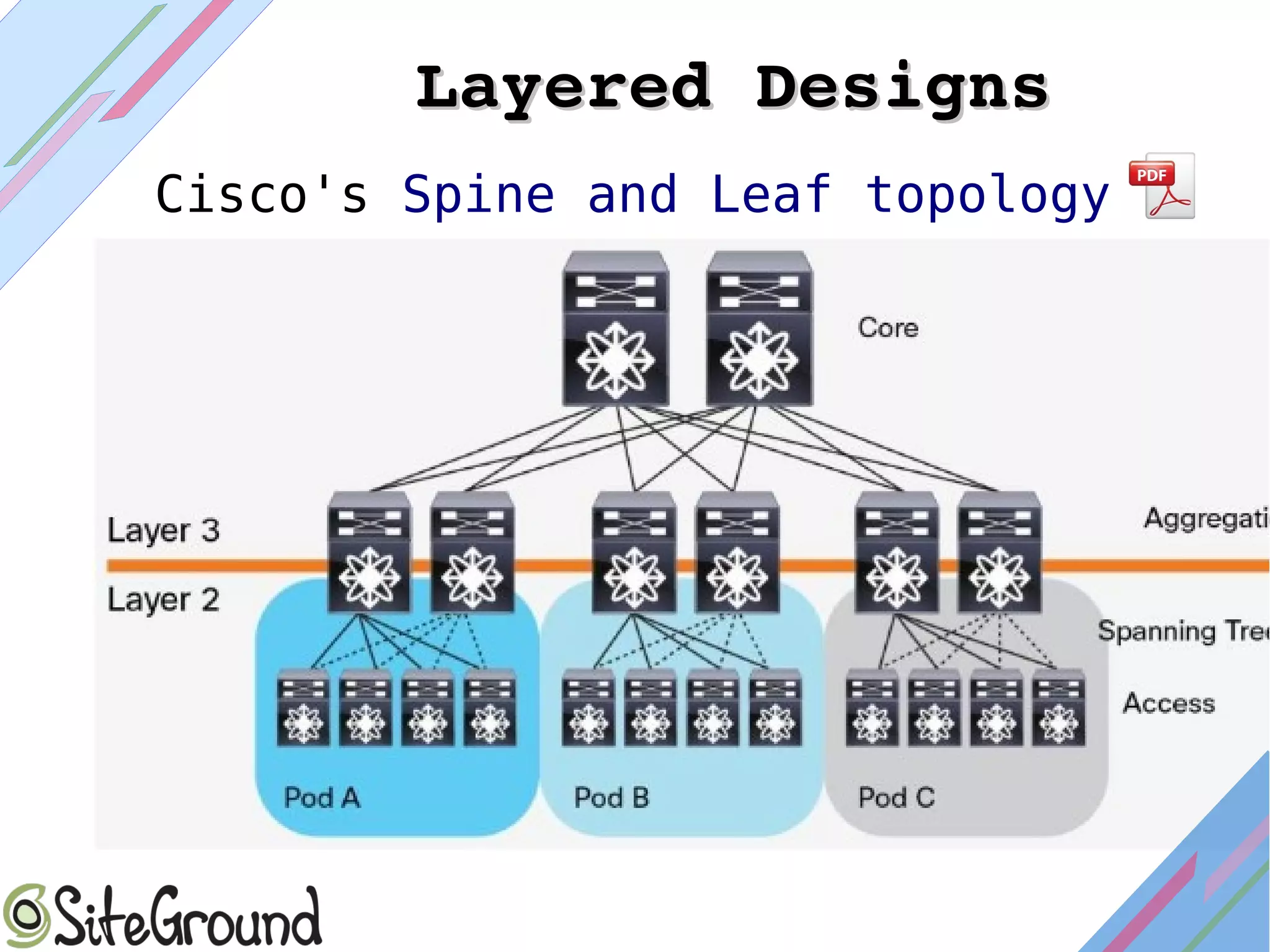

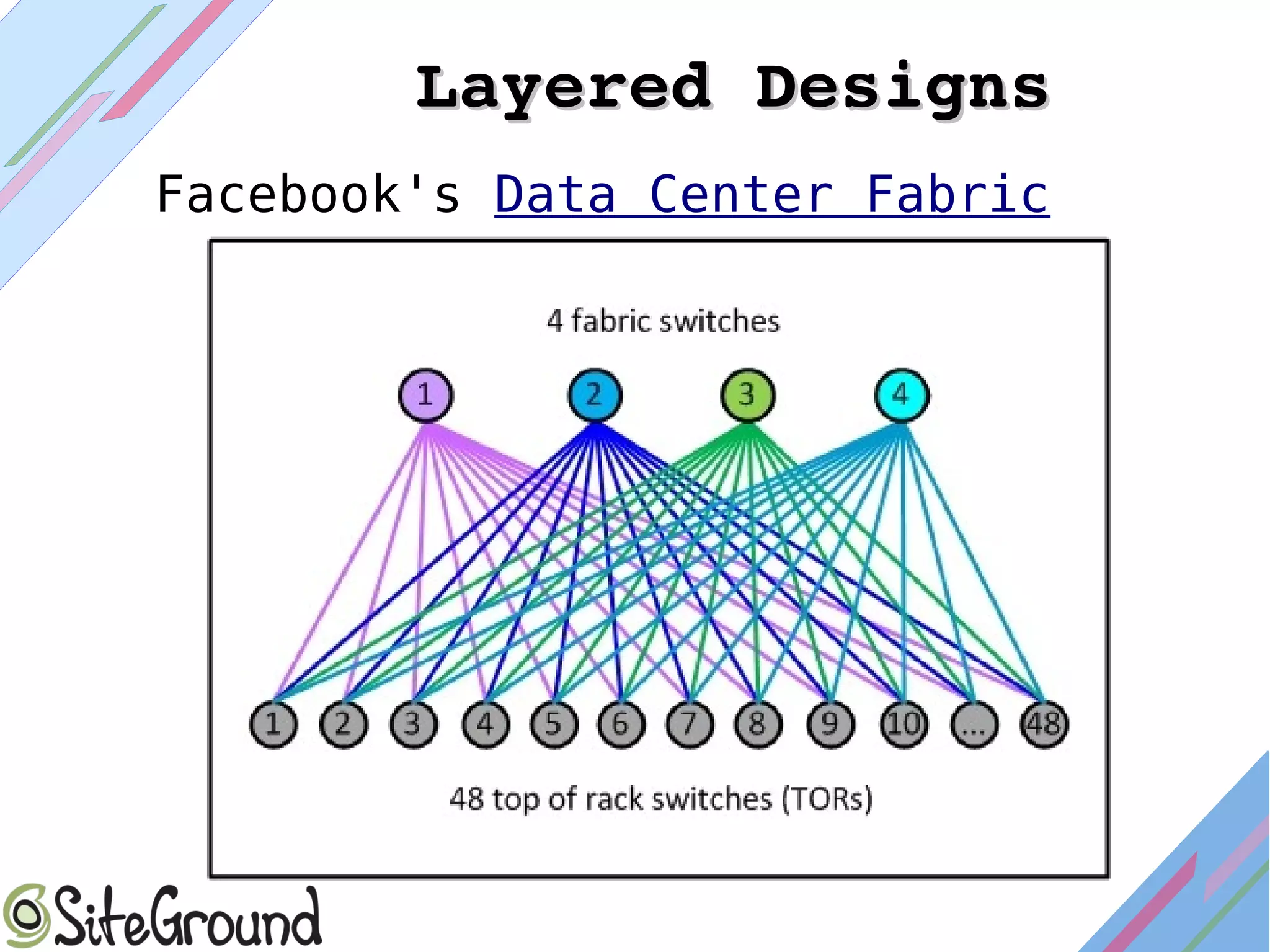

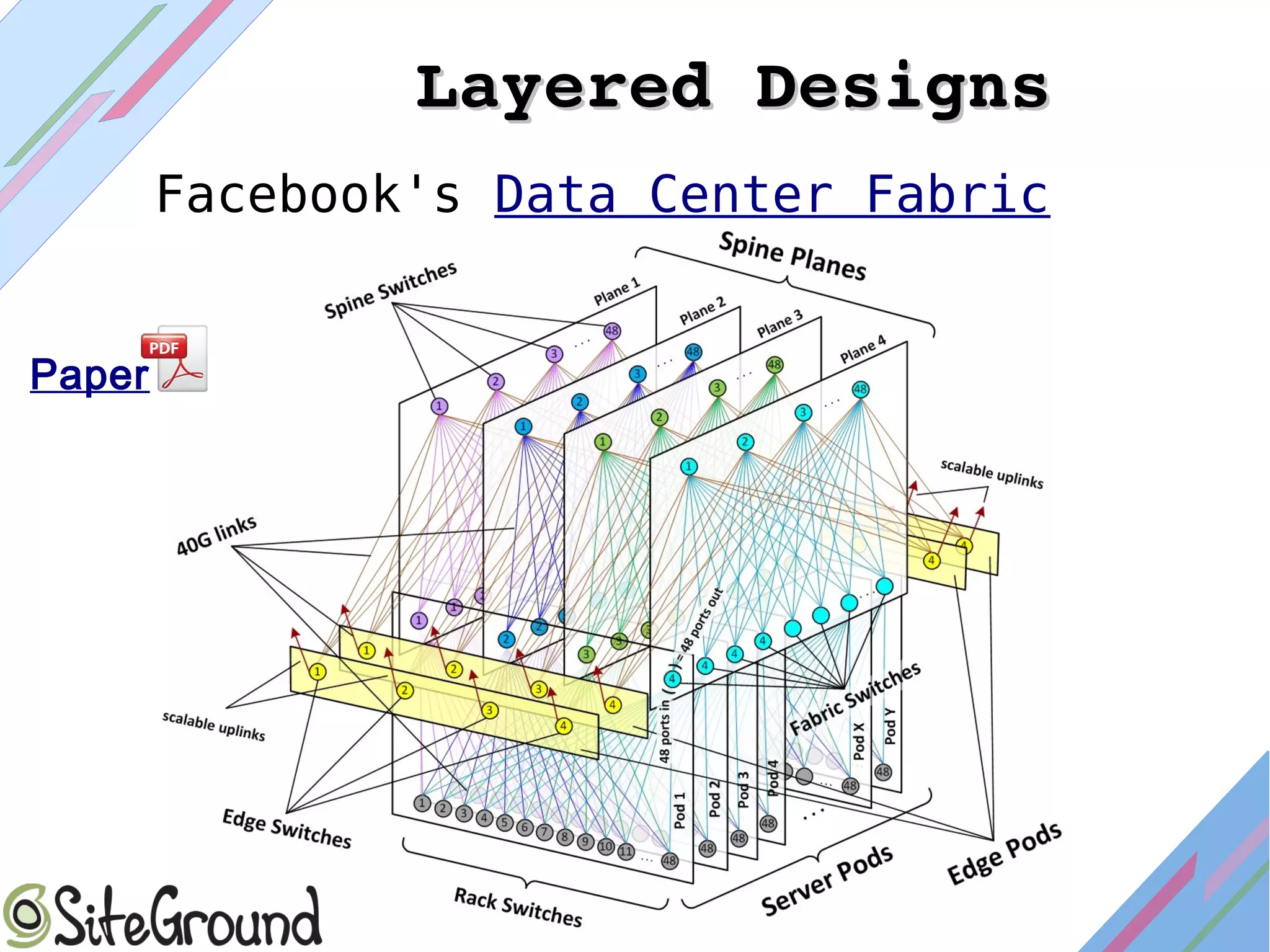

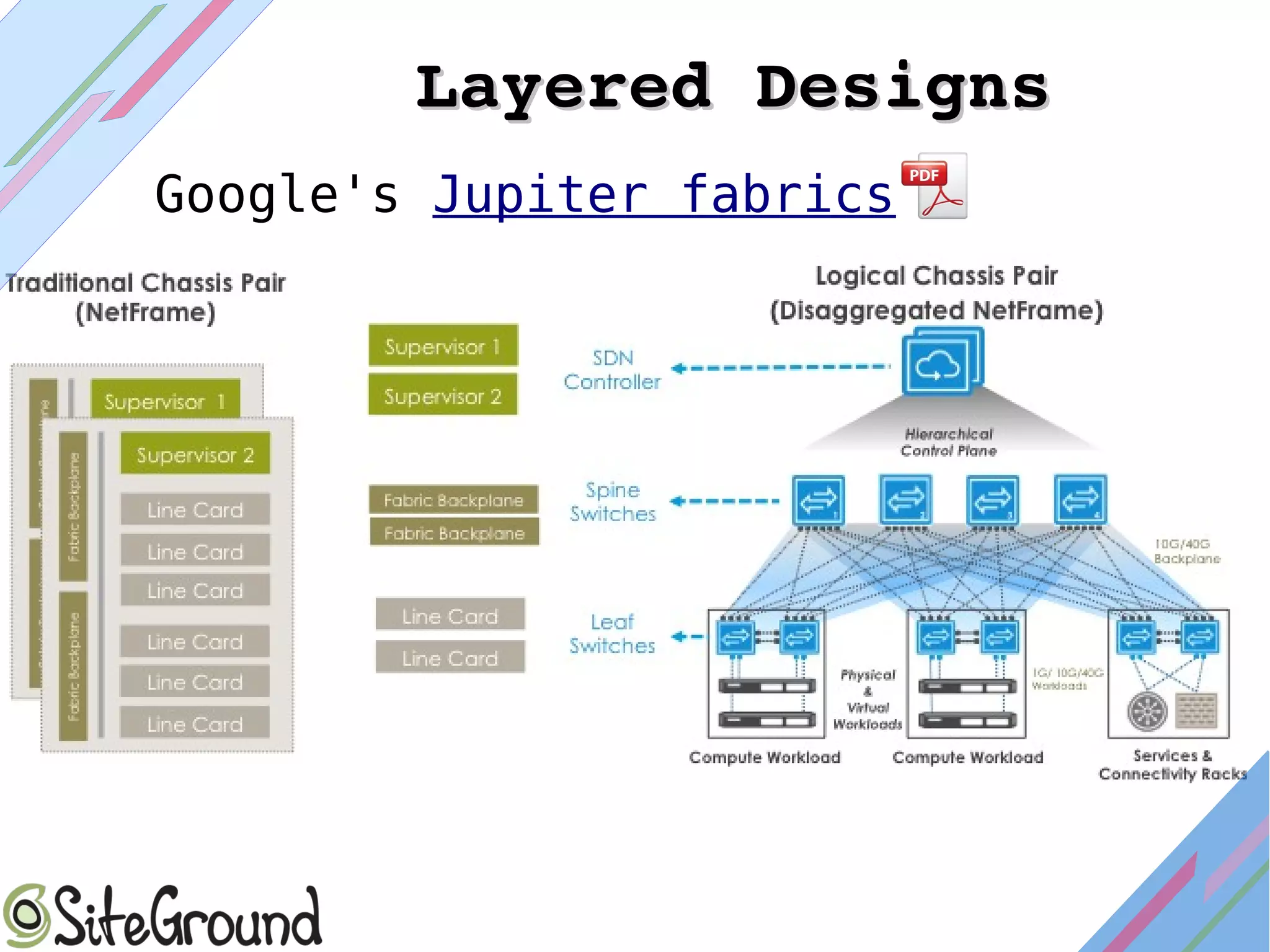

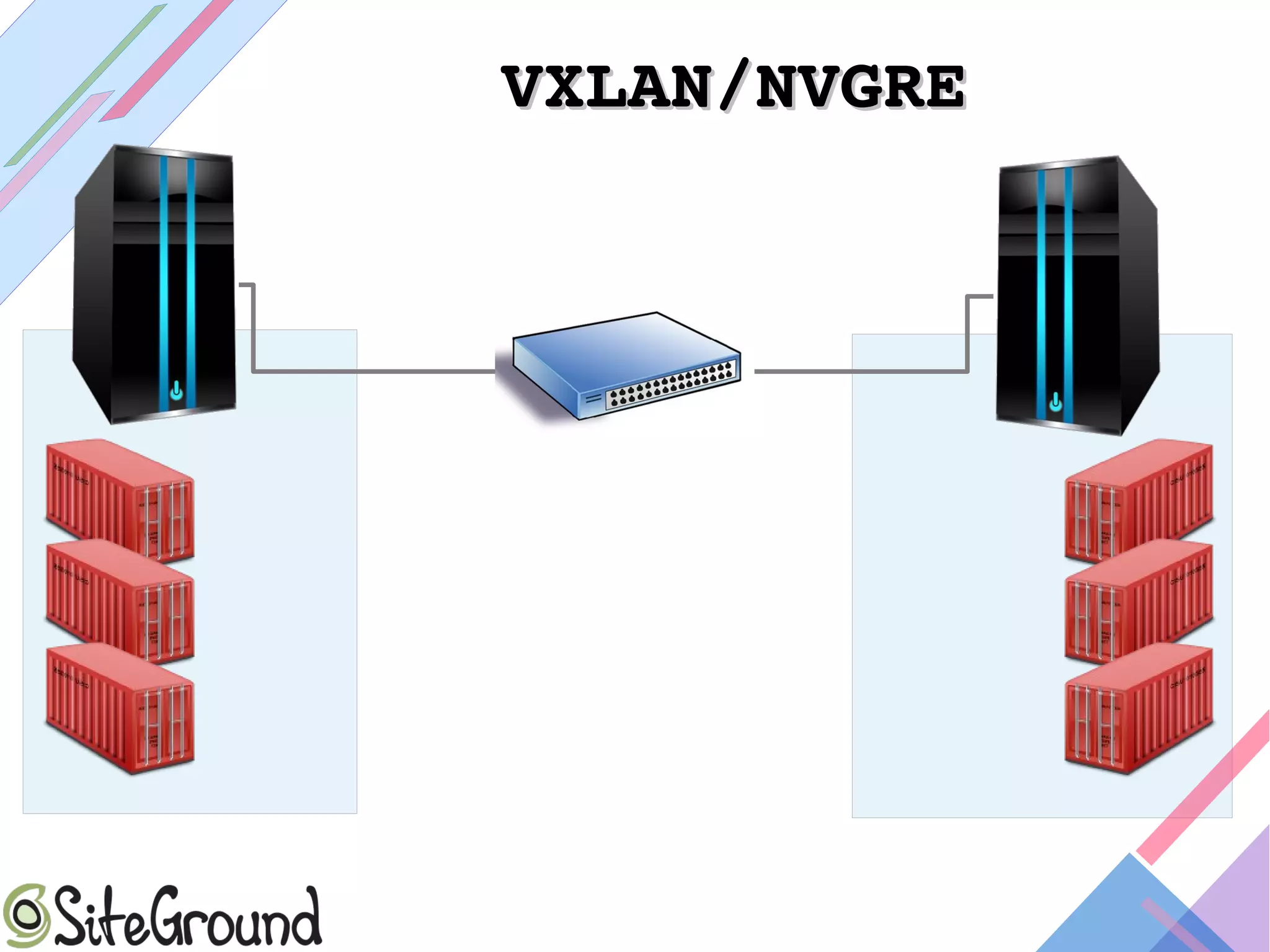

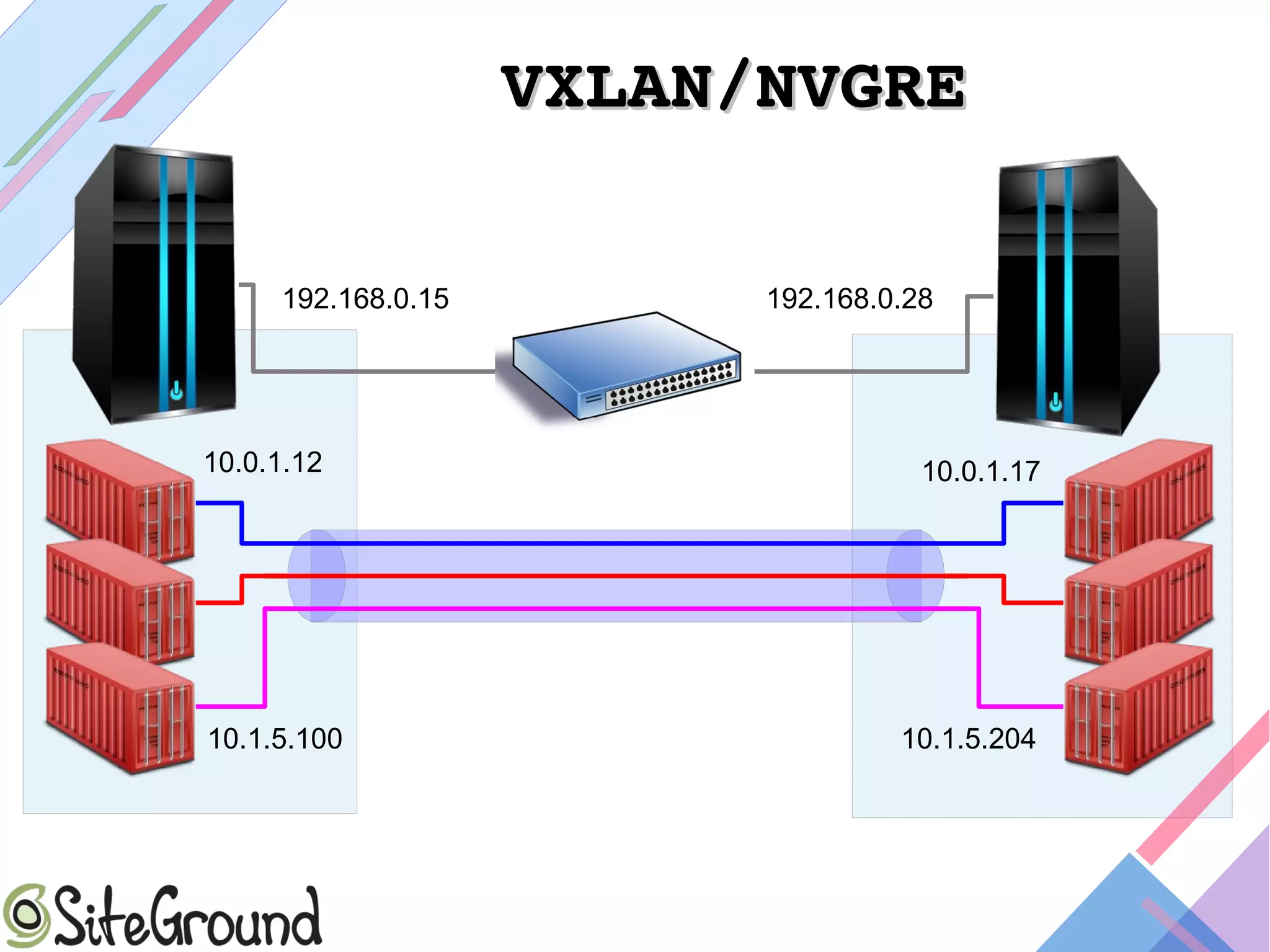

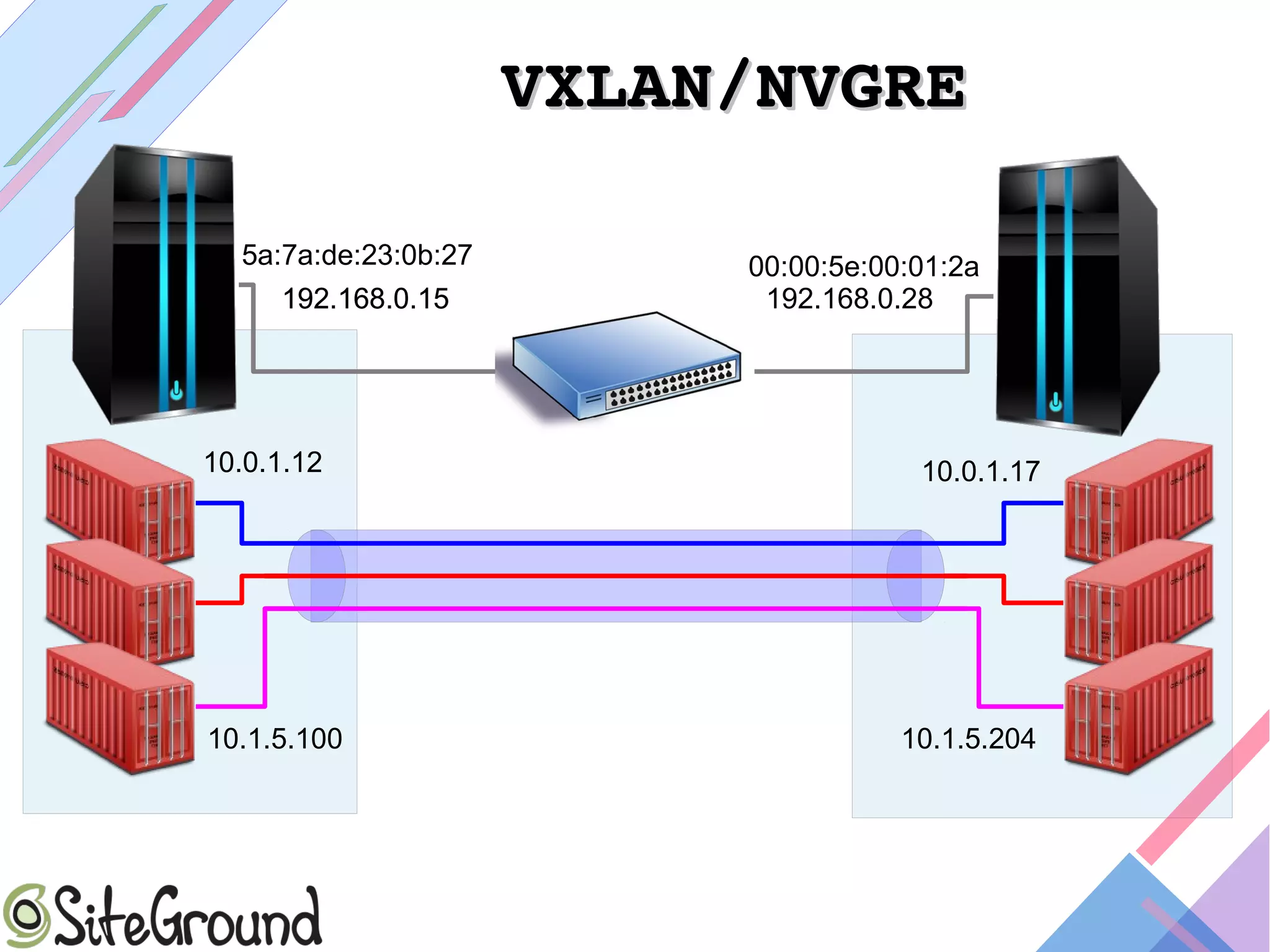

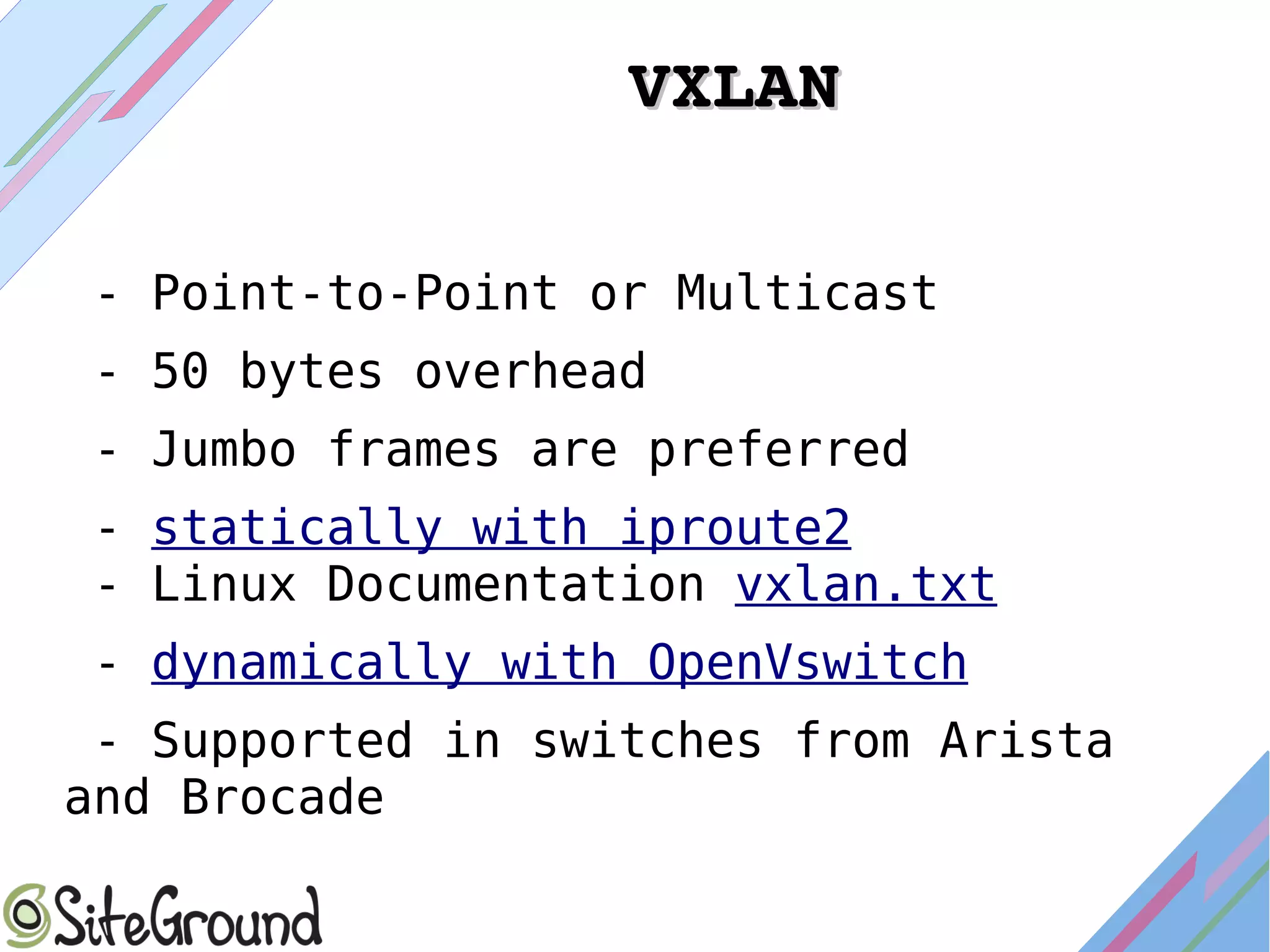

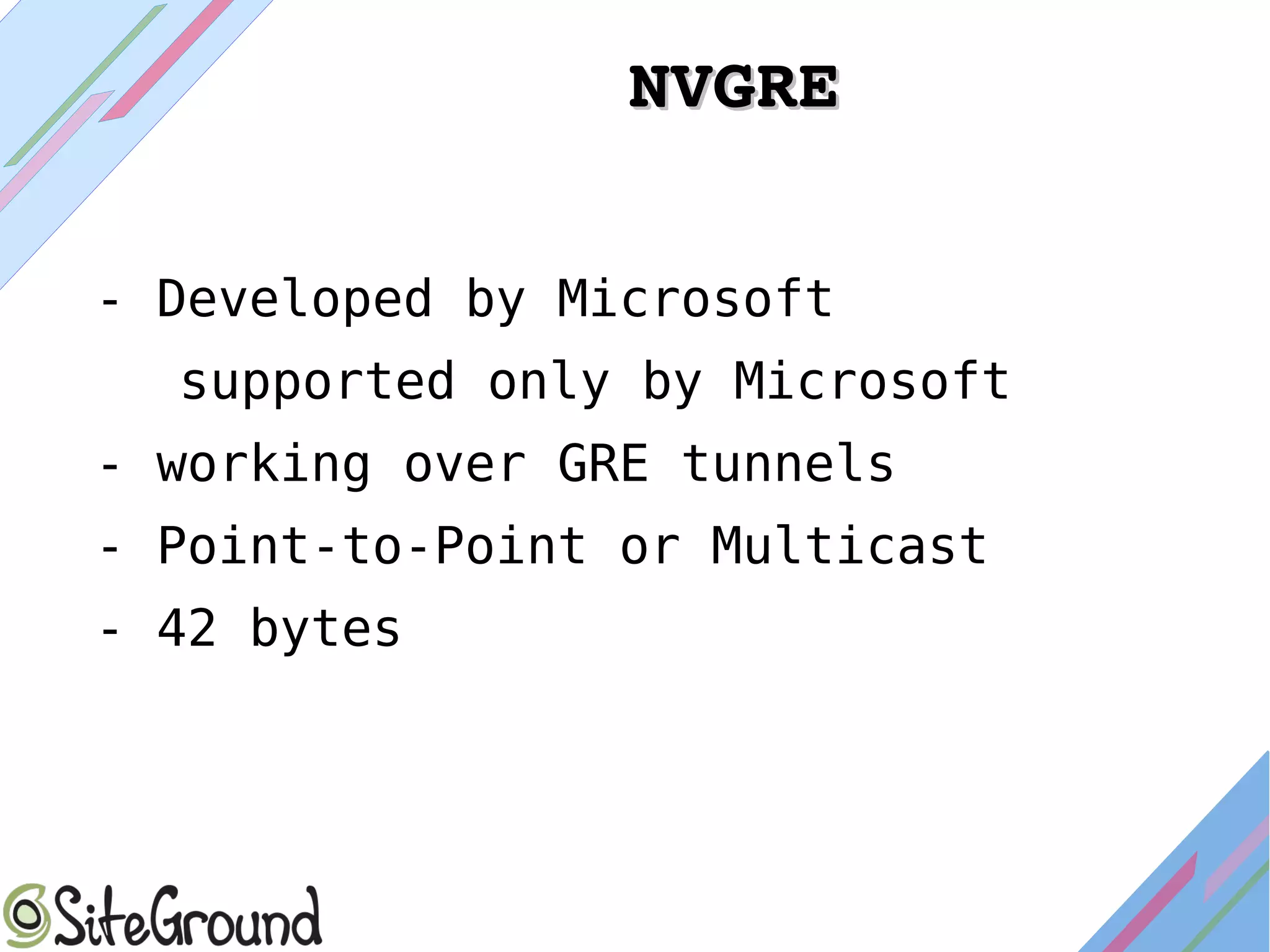

Marian Marinov is the chief system architect and head of the DevOps department at SiteGround.com. He discussed the challenges of high-density networks: large broadcast domains, switches with limited MAC and ARP table capacity, and bandwidth constraints. The proposed solutions were VLANs, layered network designs, and overlay technologies such as VXLAN and NVGRE. Each of these divides the network into smaller segments so that it can scale further.
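Overlays scale further than VLANs largely because of the size of the segment identifier. An 802.1Q VLAN ID is 12 bits wide, which leaves 4094 usable segments. A VXLAN Network Identifier (VNI) is 24 bits wide, which allows roughly 16 million segments.

The sketch below is not taken from the talk. It packs and parses only the 8-byte VXLAN header using the layout described in RFC 7348, and it leaves out the outer UDP/IP encapsulation. The function names are hypothetical helpers chosen for illustration.

```python
import struct

VLAN_ID_BITS = 12      # 802.1Q VLAN identifier width
VXLAN_VNI_BITS = 24    # VXLAN Network Identifier width (RFC 7348)
VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN destination port (not used below)

def usable_vlan_ids() -> int:
    # VLAN IDs 0 and 4095 are reserved, so two values are unusable.
    return 2 ** VLAN_ID_BITS - 2

def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a given VNI (hypothetical helper)."""
    if not 0 <= vni < 2 ** VXLAN_VNI_BITS:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # "I" flag: the VNI field is valid
    # First 32-bit word: flags byte followed by 24 reserved bits.
    # Second 32-bit word: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", flags << 24, vni << 8)

def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header (hypothetical helper)."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & 0x08:
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8

print(usable_vlan_ids(), 2 ** VXLAN_VNI_BITS)
print(vxlan_header(5000).hex())
```

Running this prints `4094 16777216` followed by `0800000000138800`. The VNI 5000 (`0x1388`) sits in bytes 4 to 6 of the header. The header carries no VLAN field, so tenant segments are no longer bounded by the 12-bit VLAN space.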