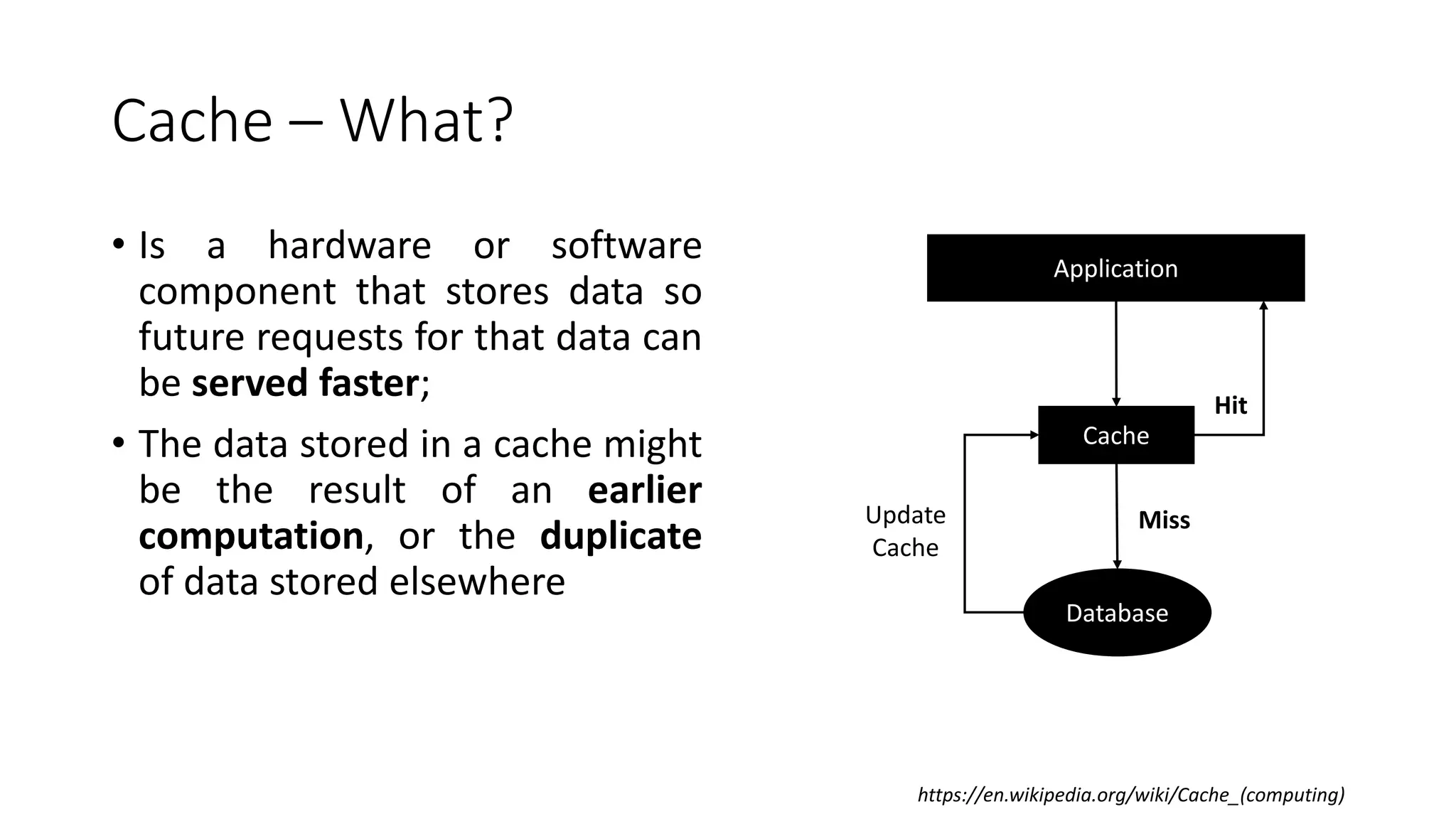

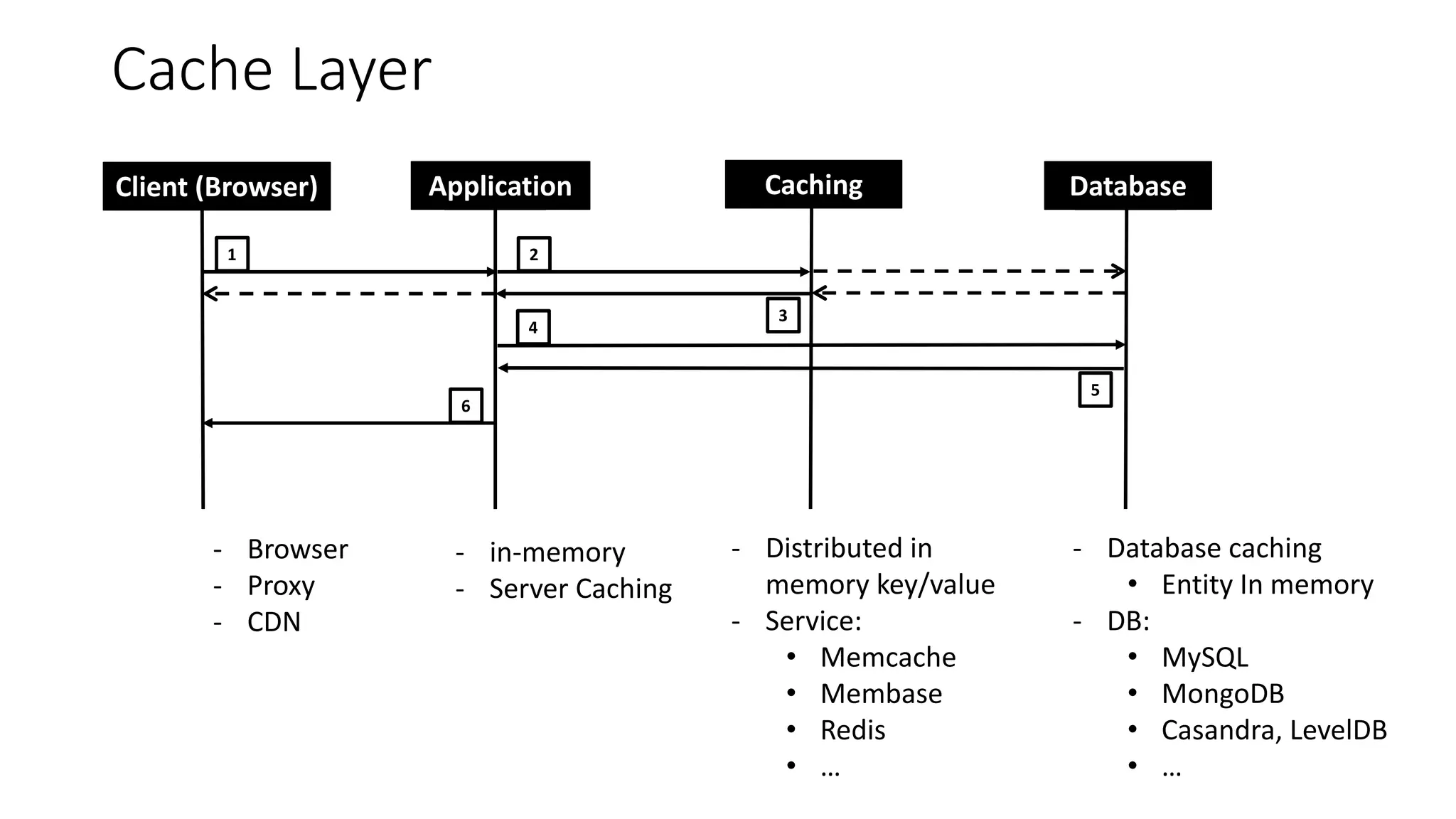

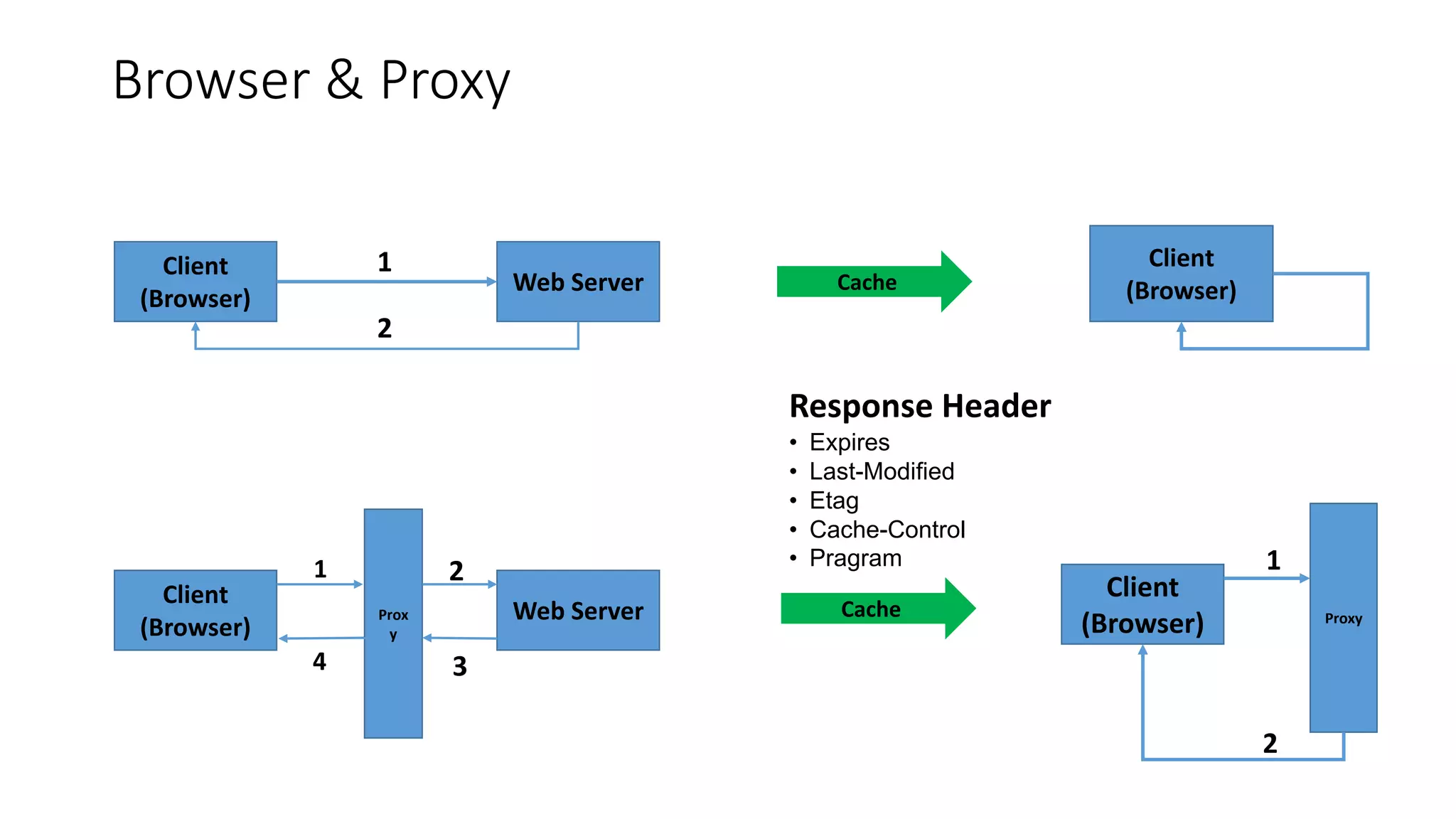

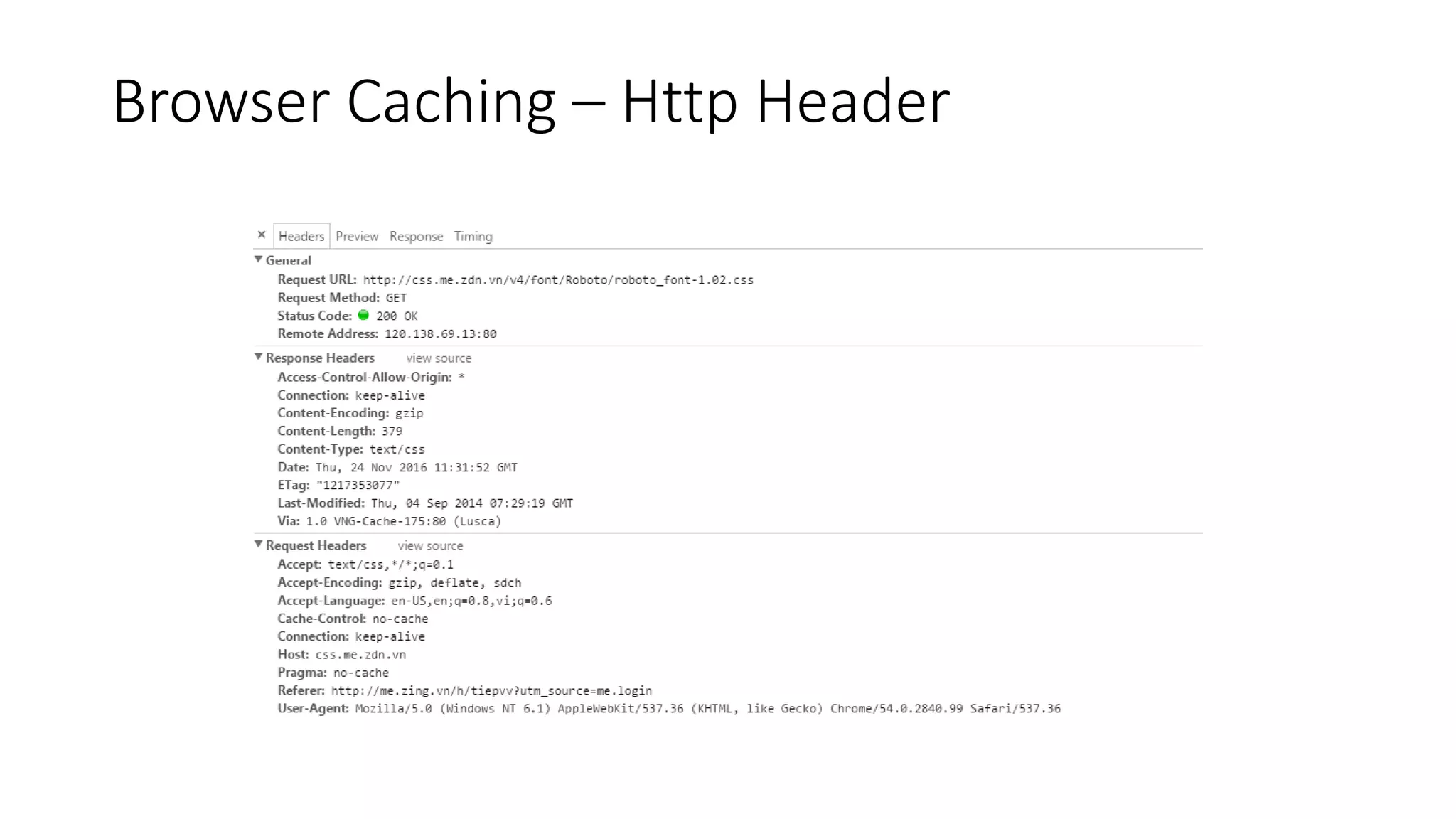

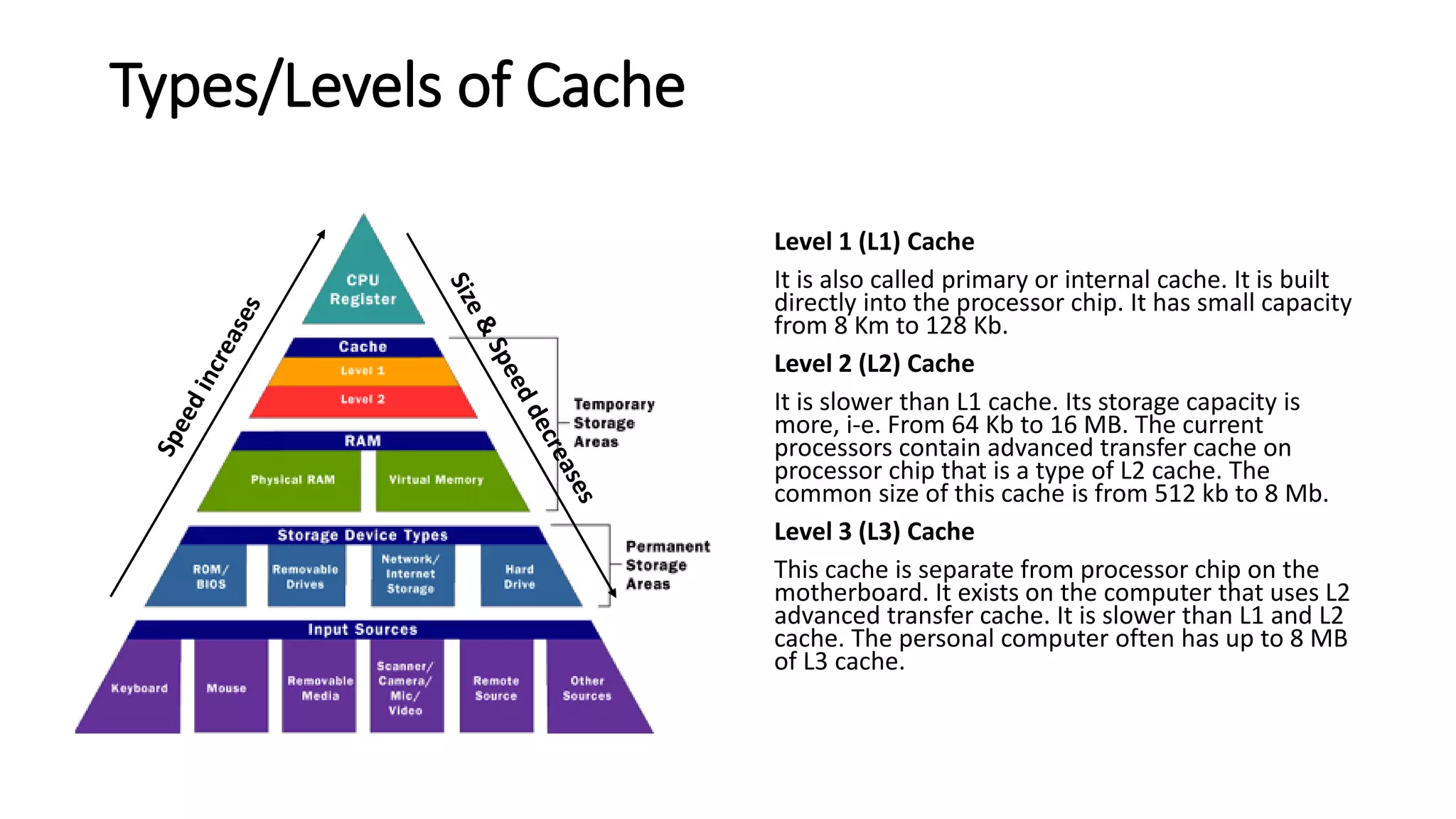

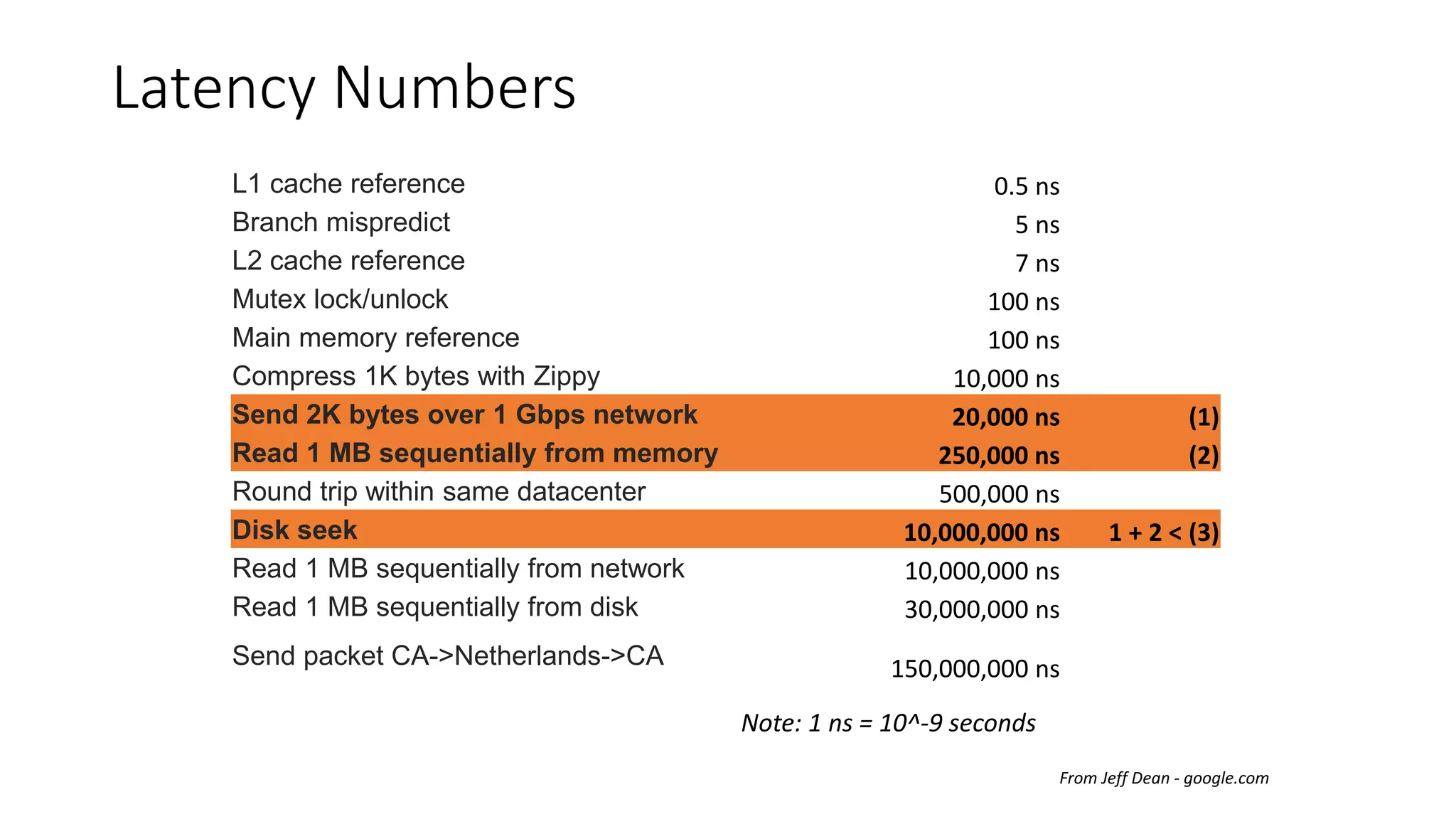

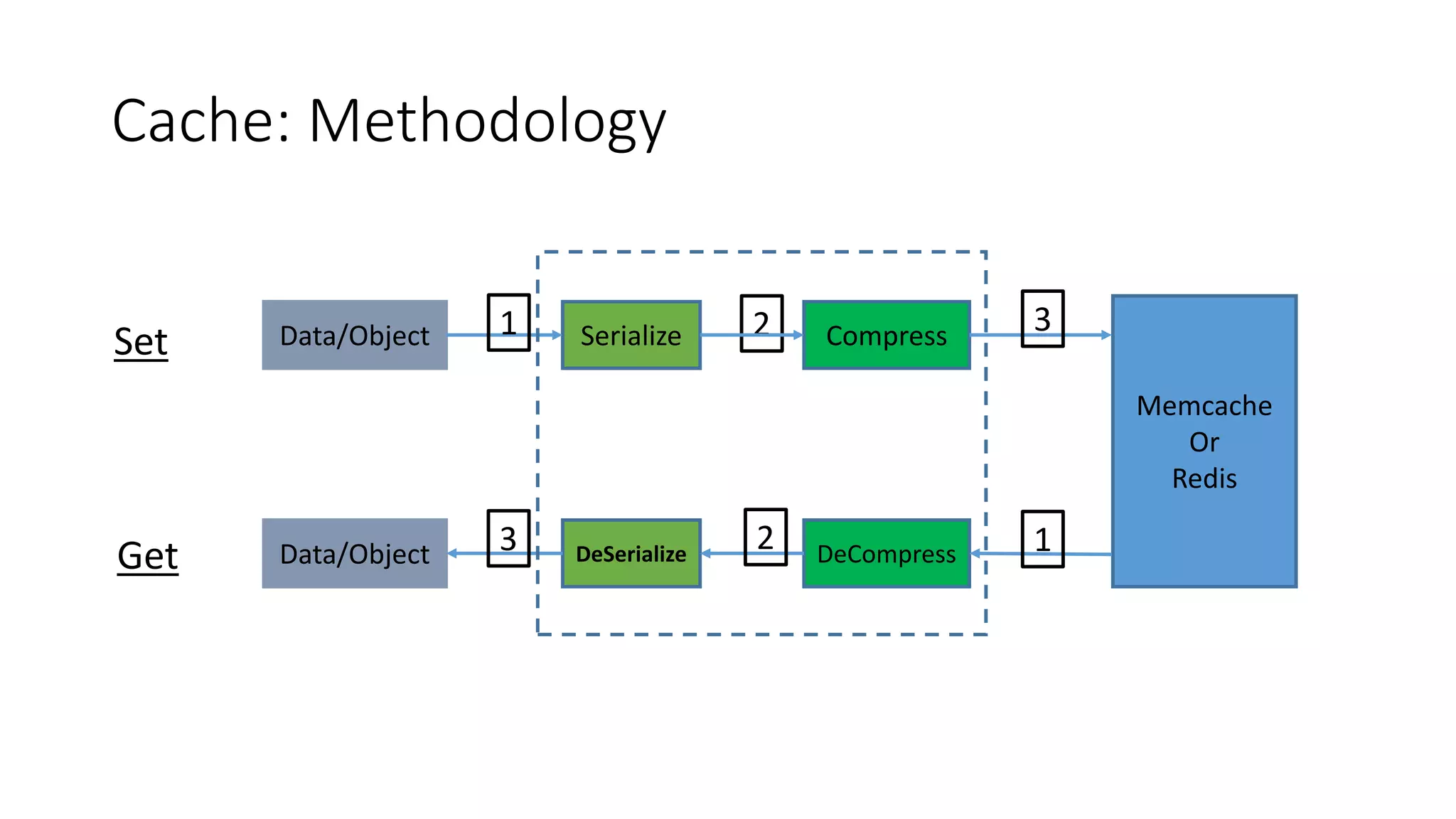

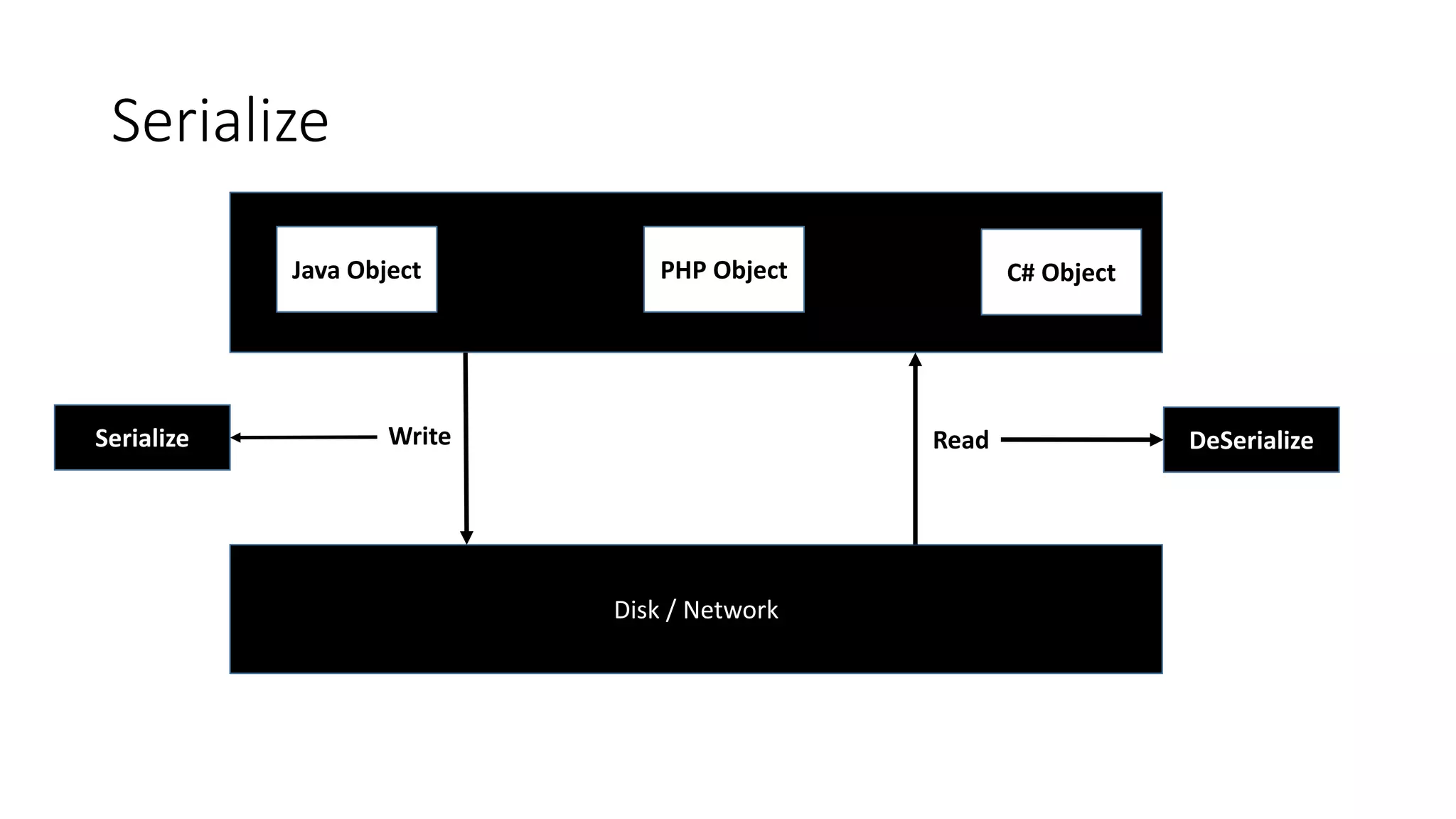

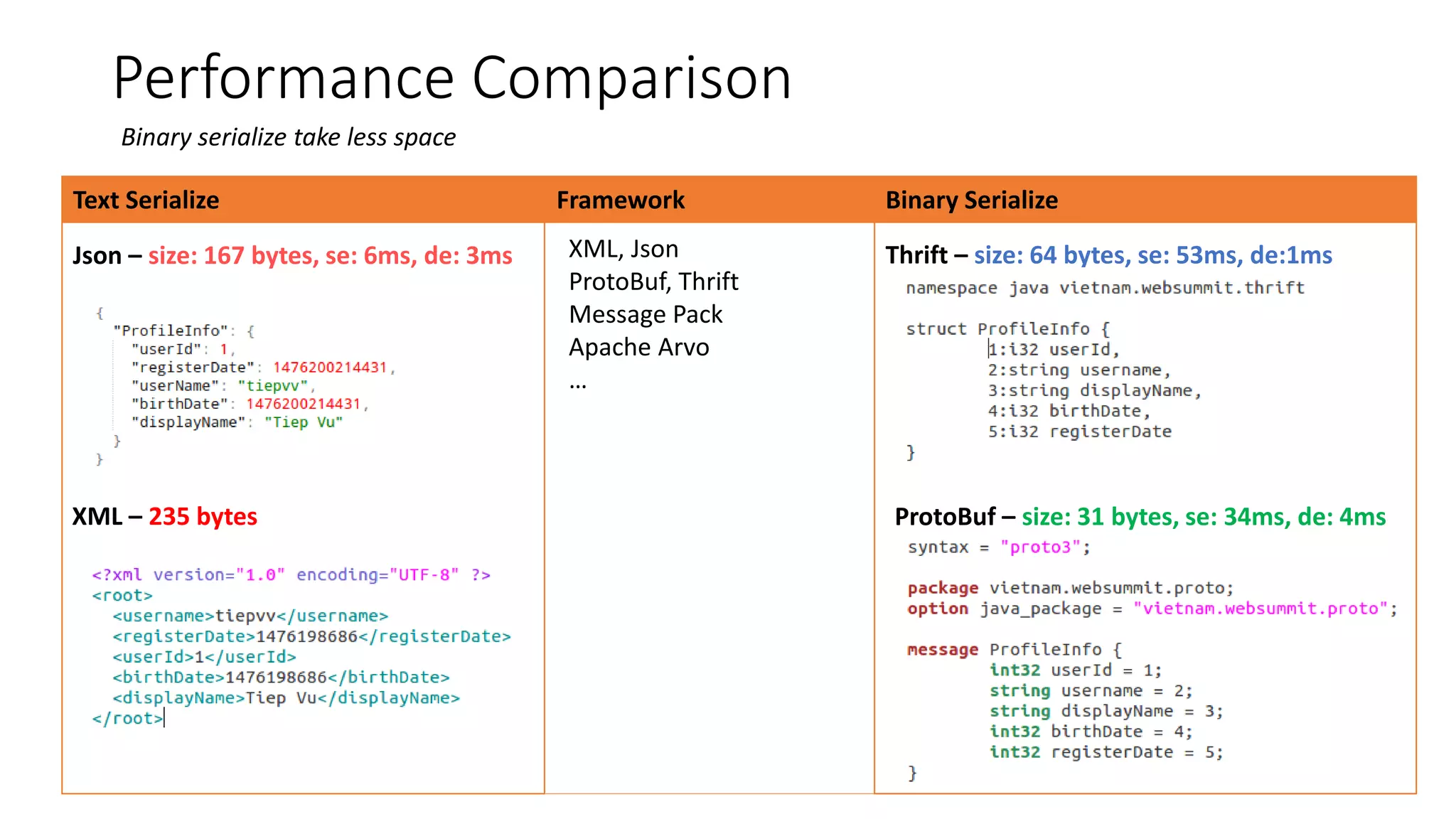

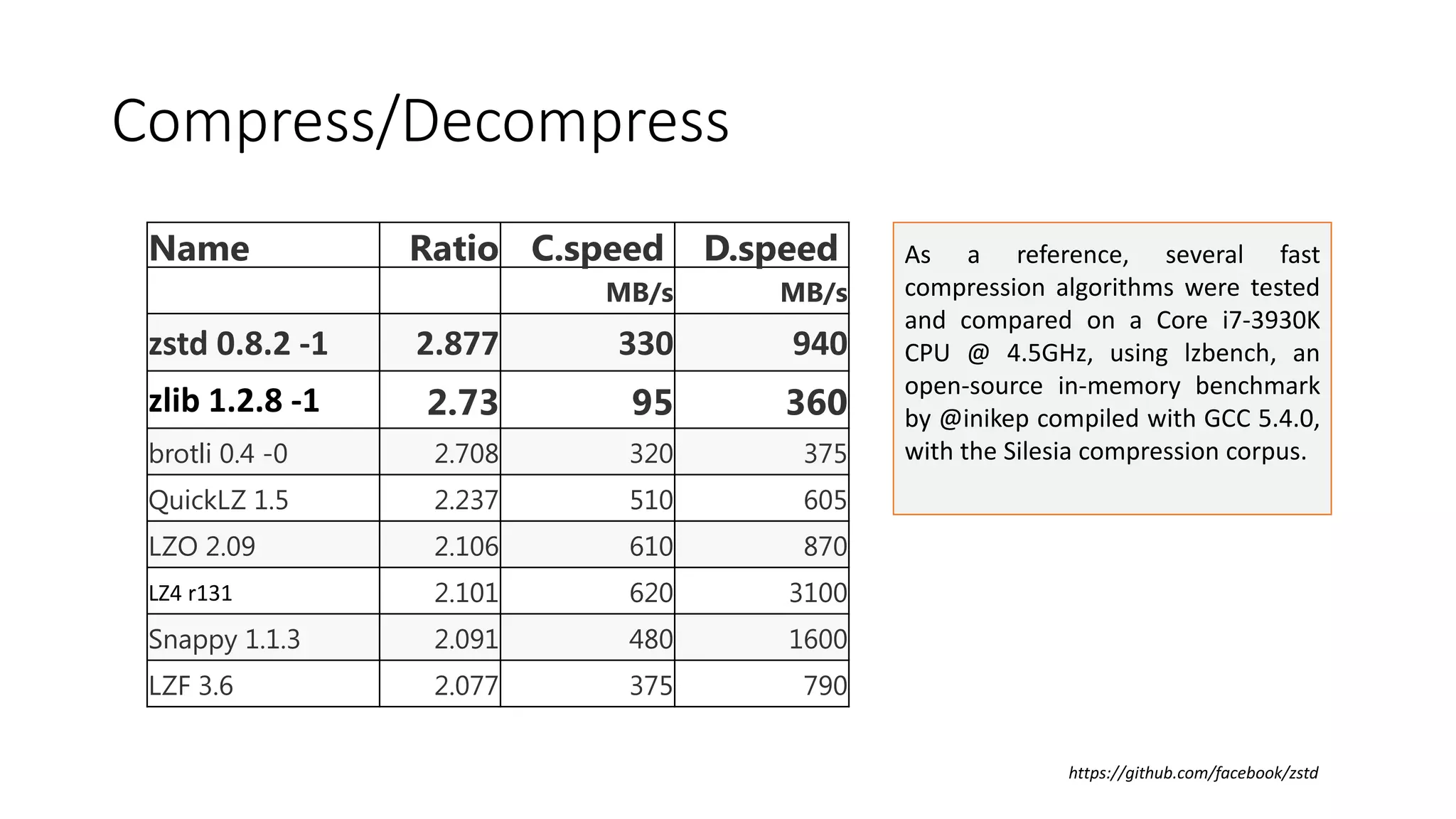

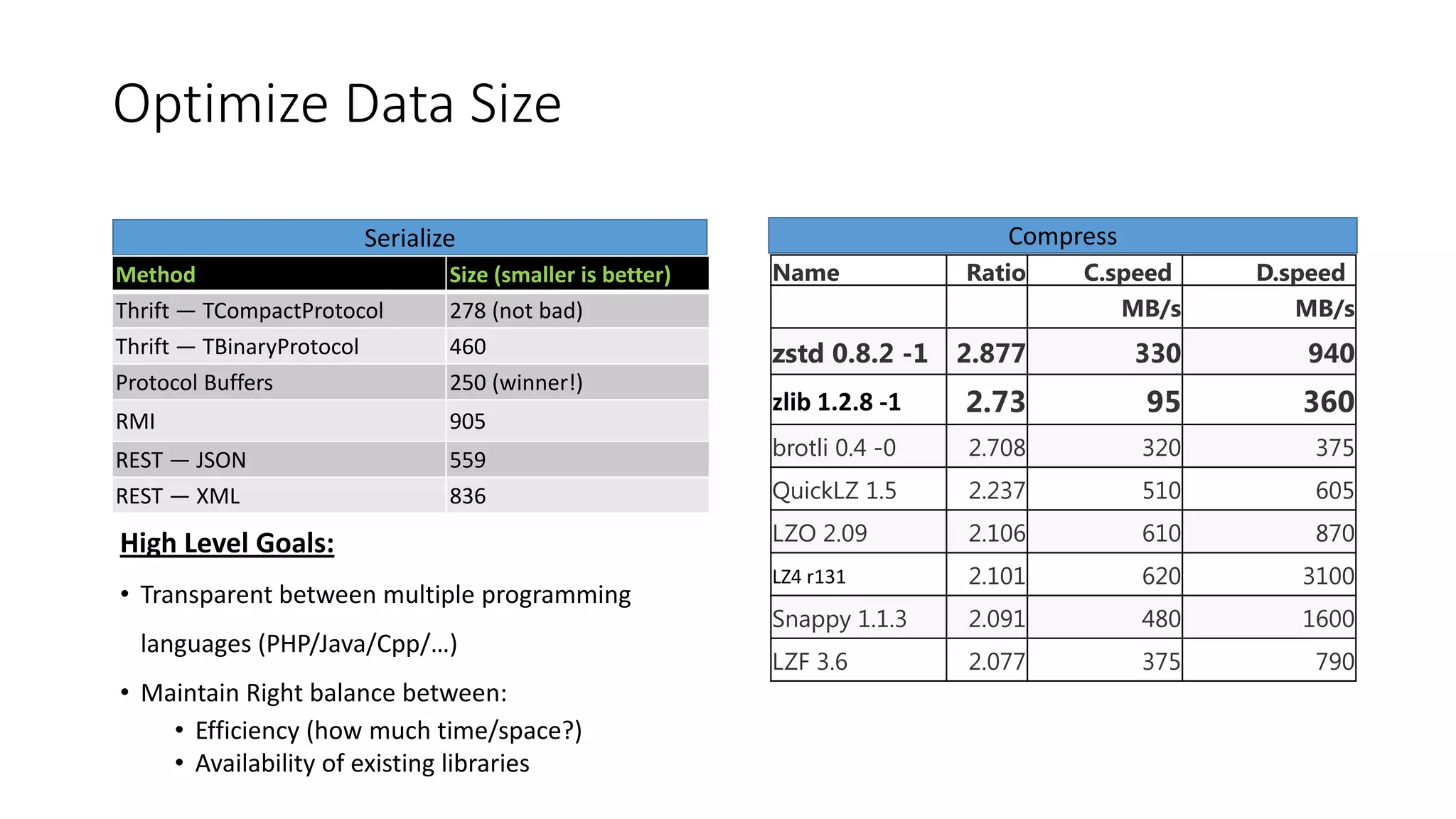

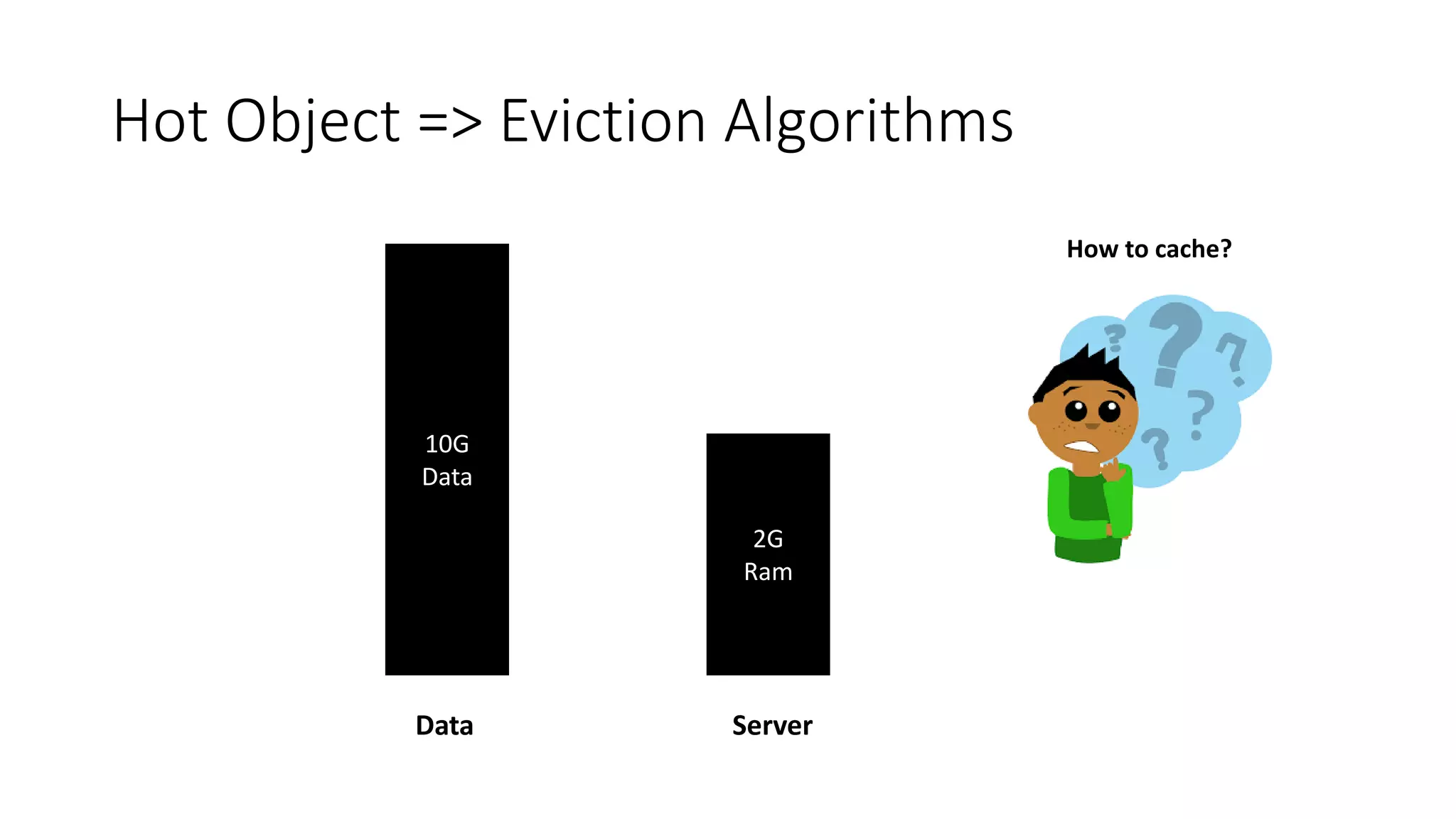

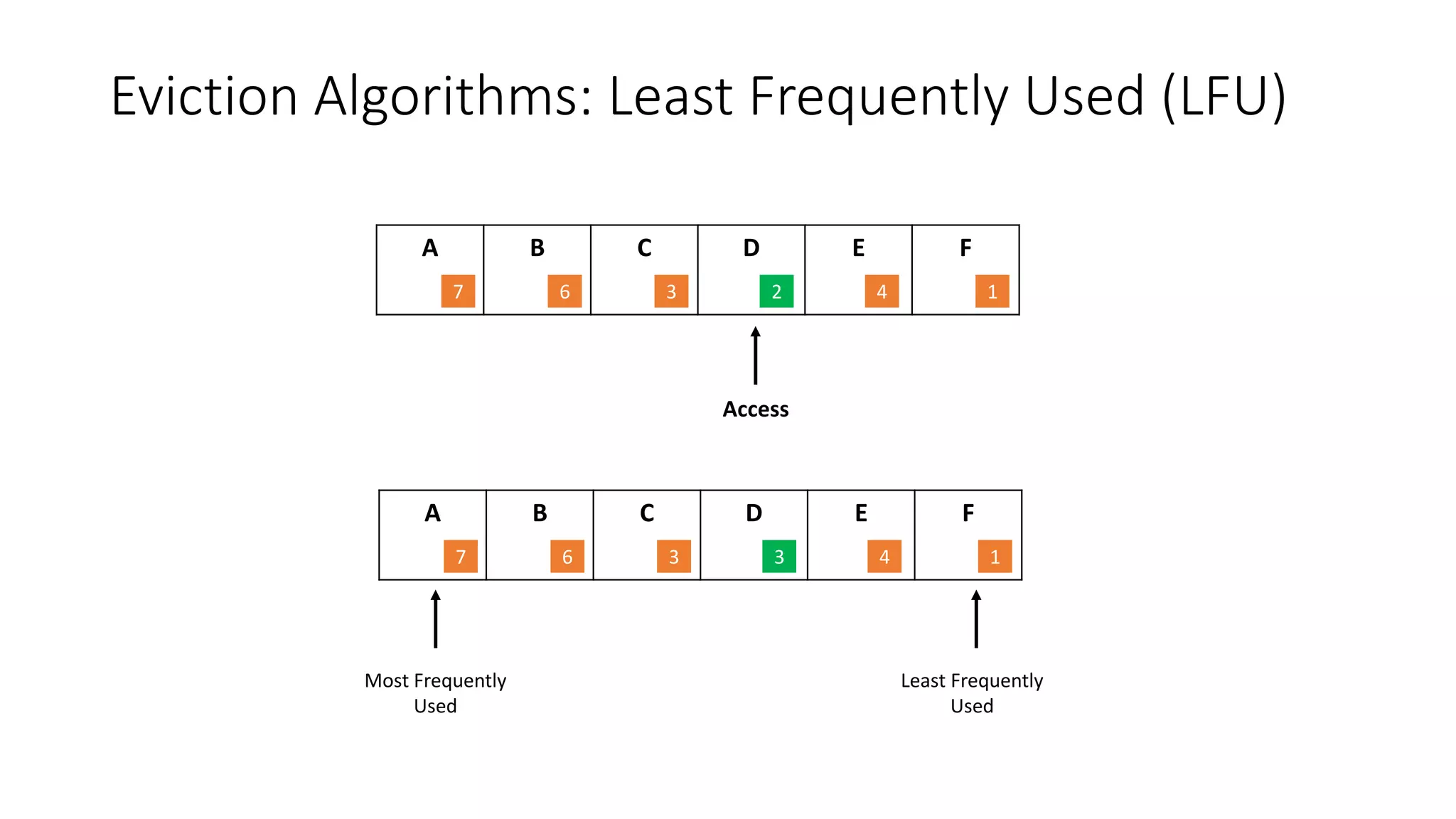

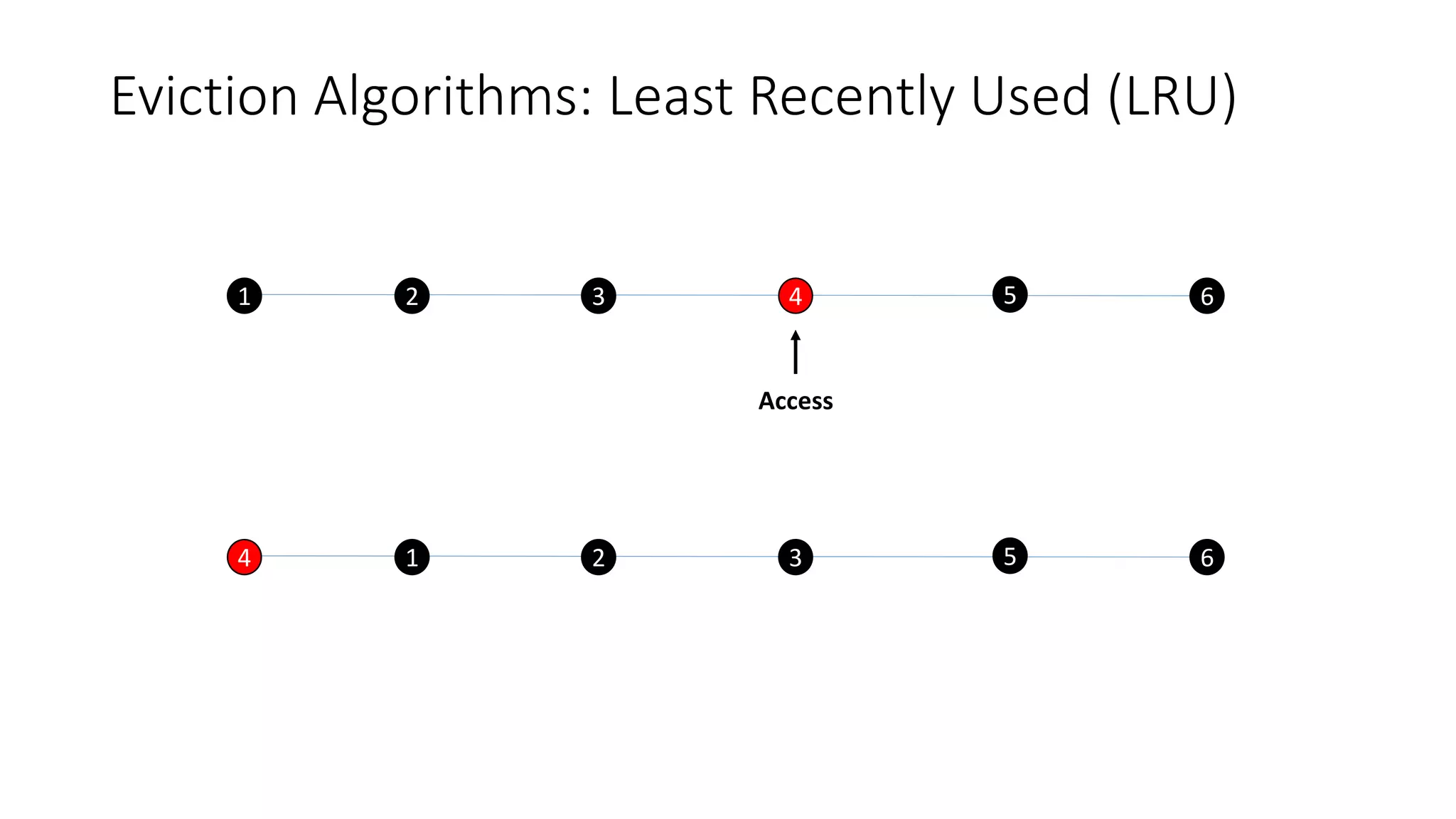

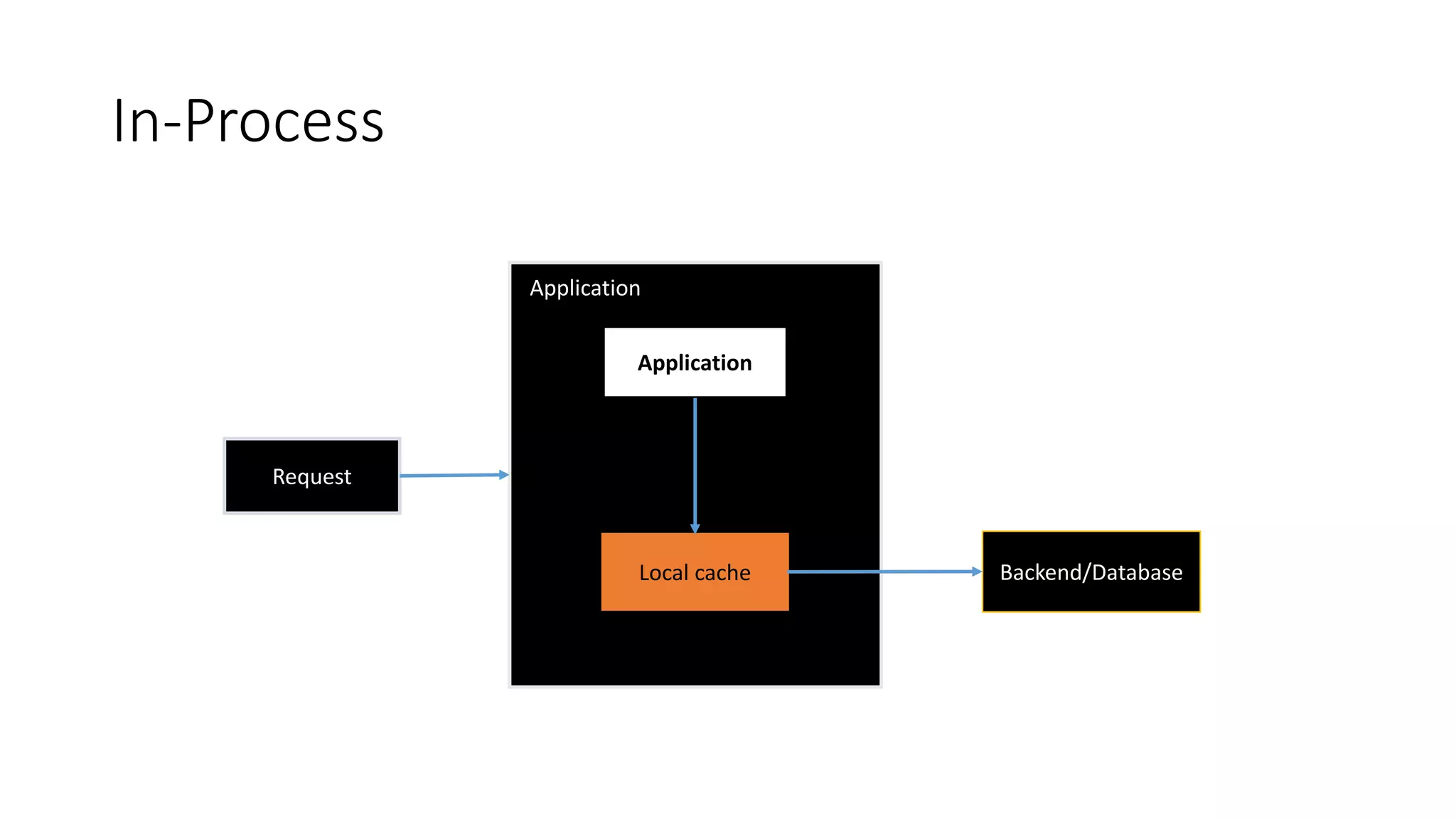

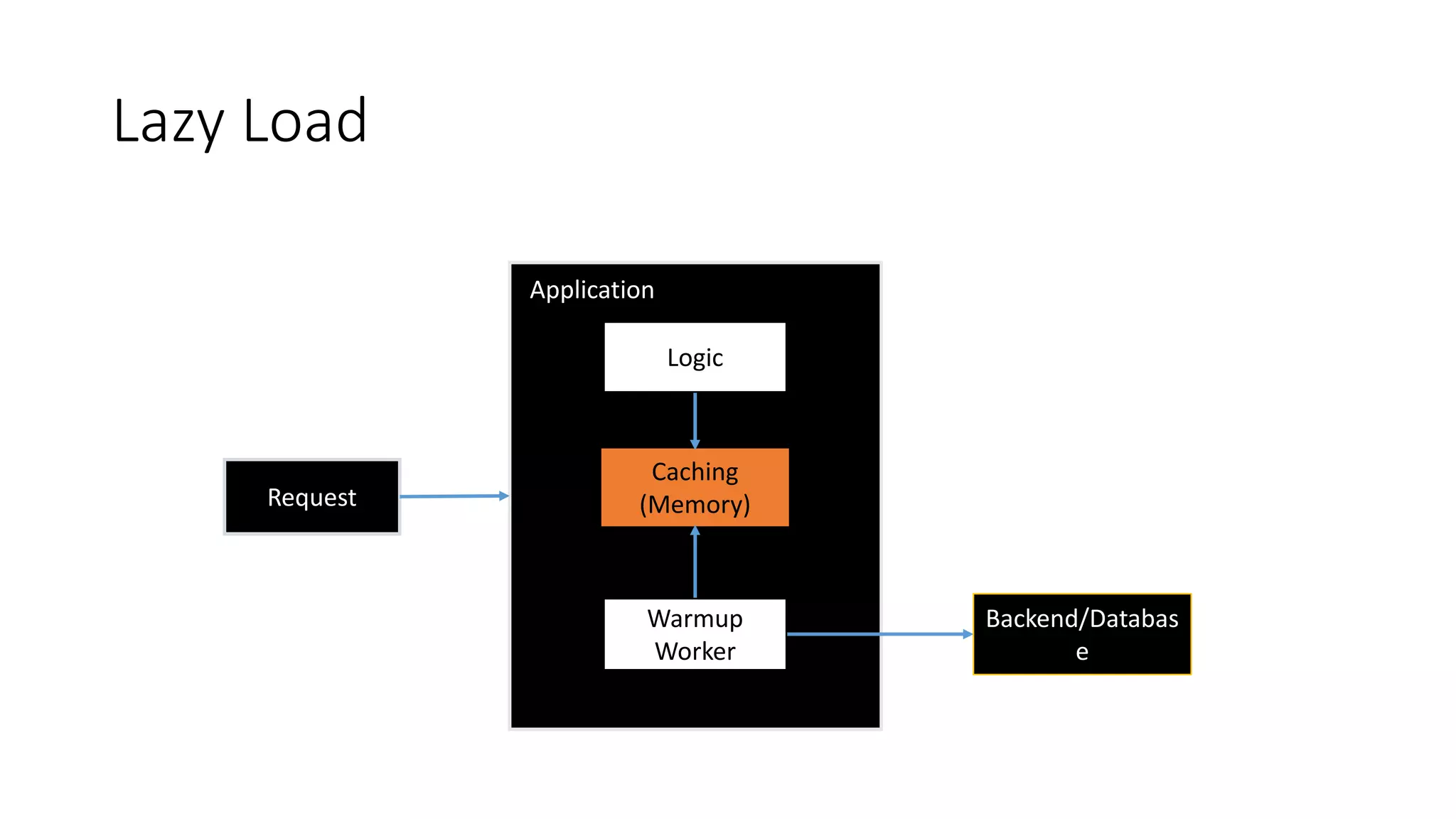

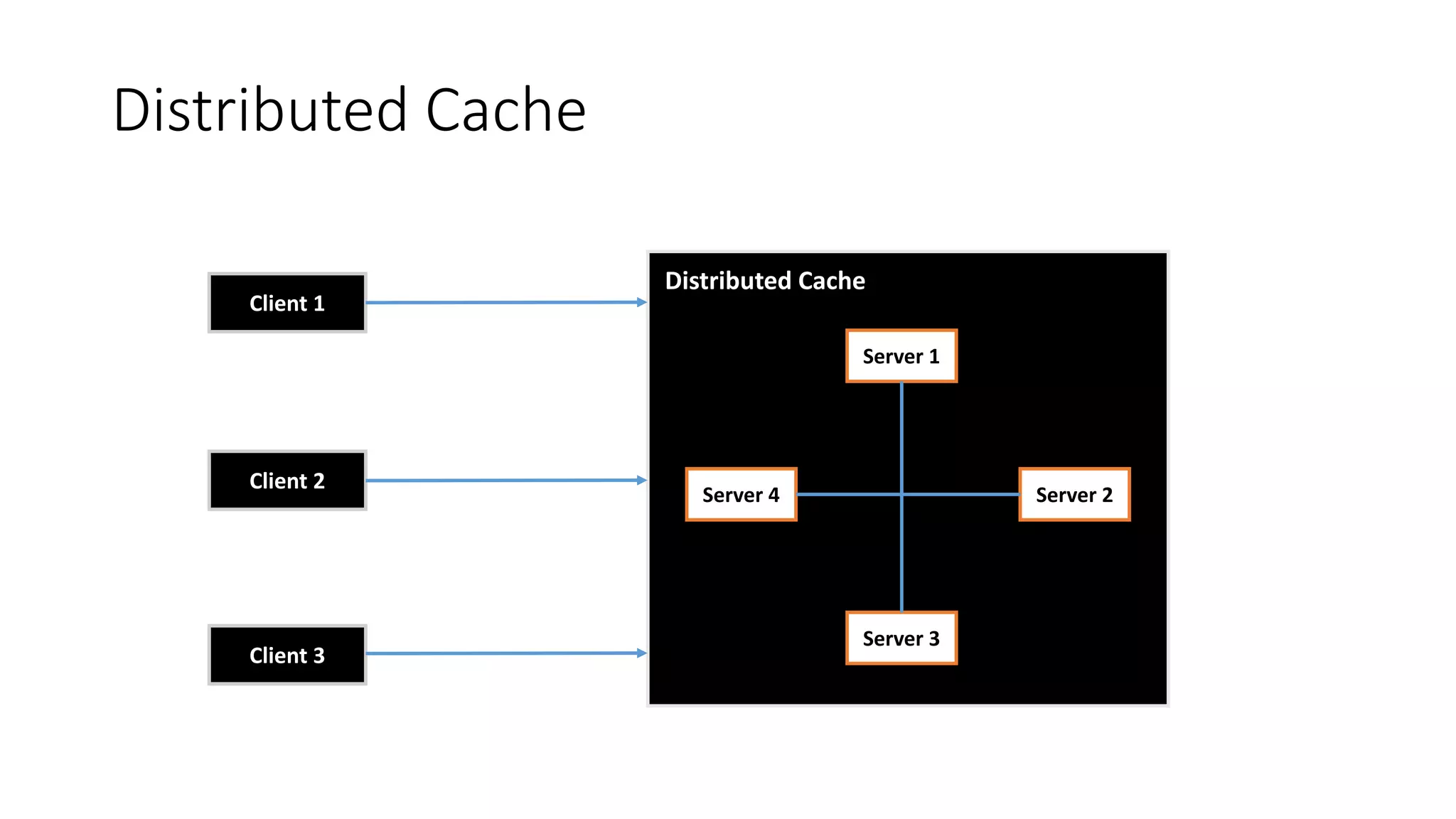

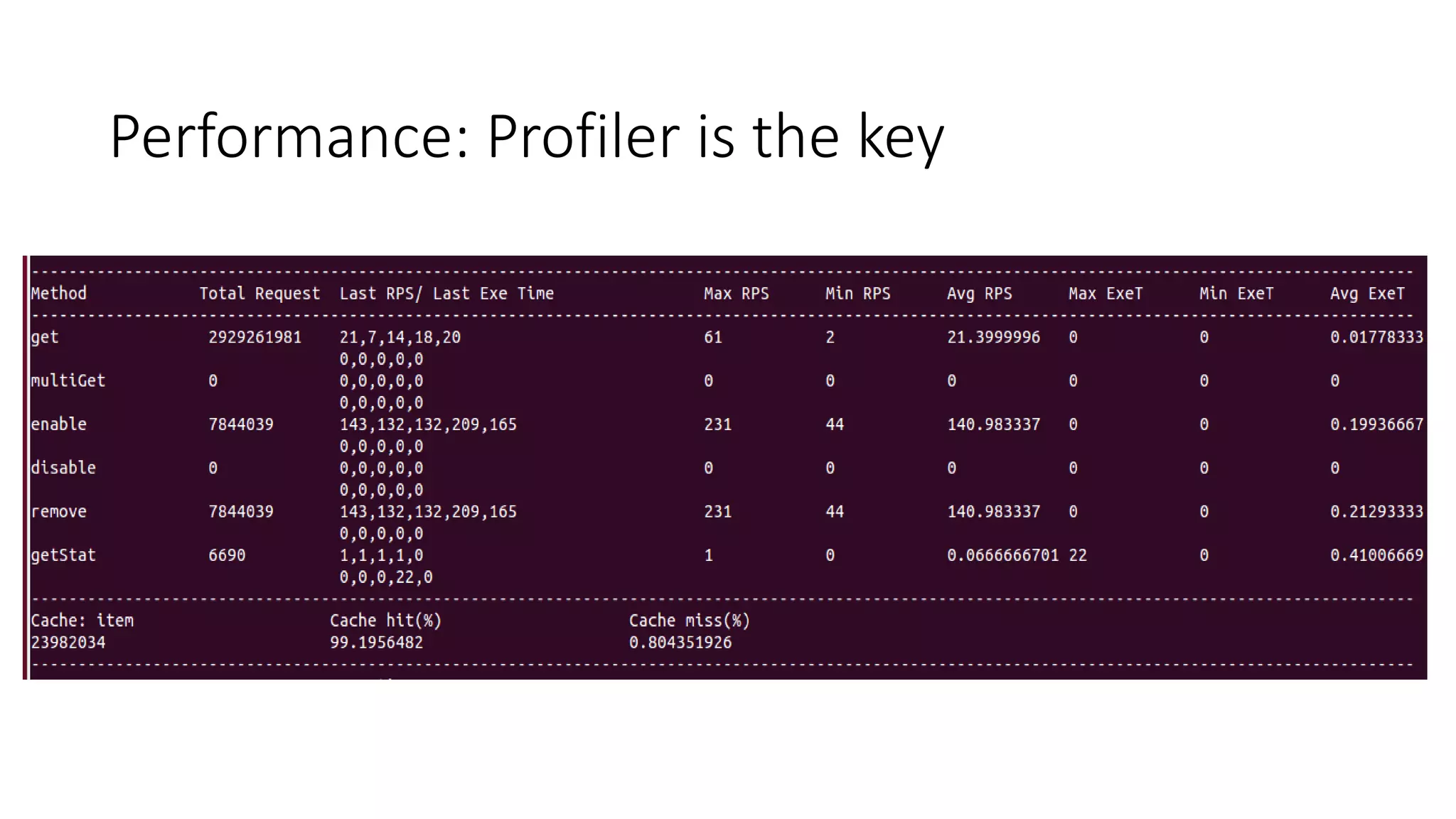

The document discusses caching strategies, focusing on the role of cache layers and on application caching techniques for improving performance. It covers different types of caches, eviction algorithms, and compression strategies, describing how each works and where it is used in computing systems. It also compares the performance of serialization methods and compression algorithms, and provides contact information for further inquiries.