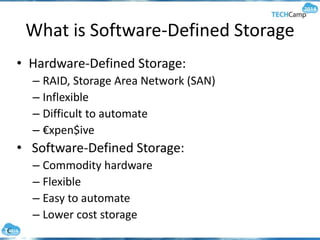

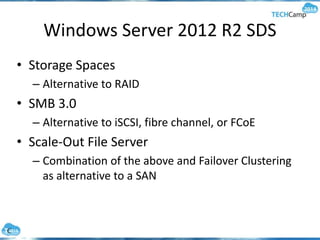

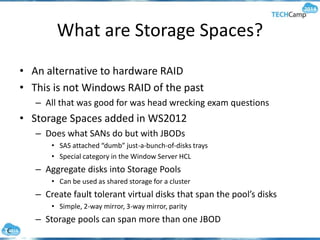

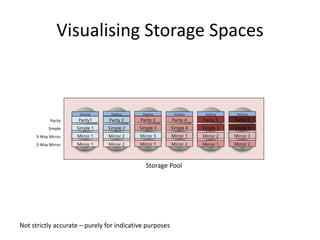

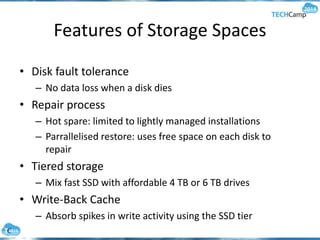

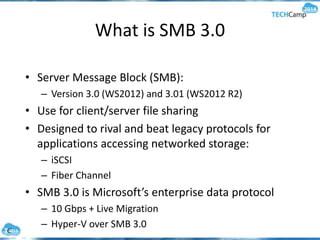

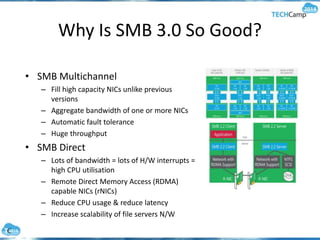

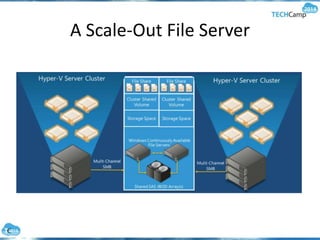

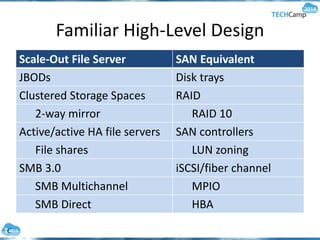

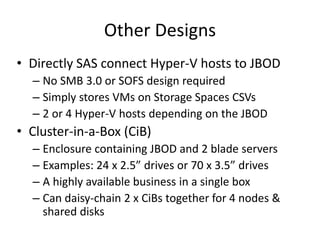

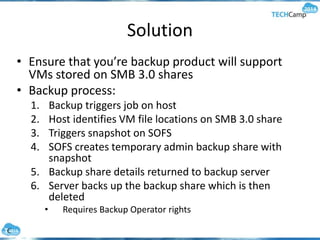

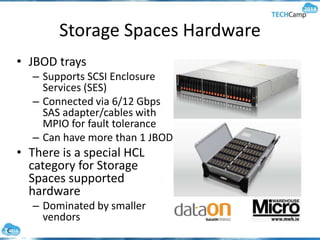

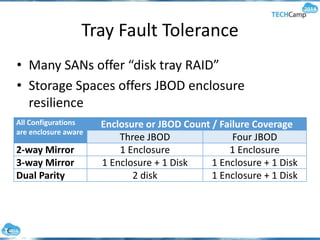

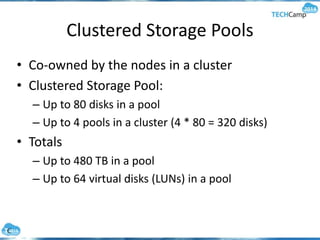

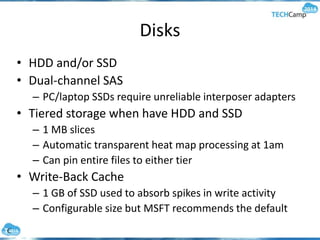

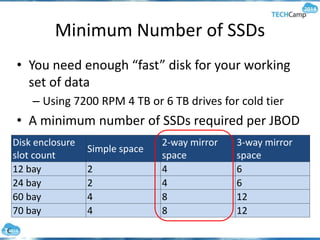

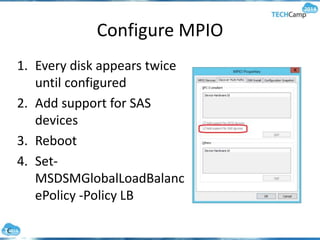

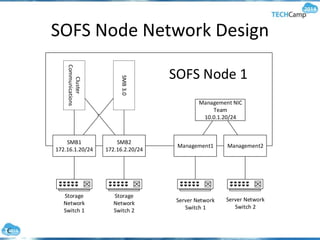

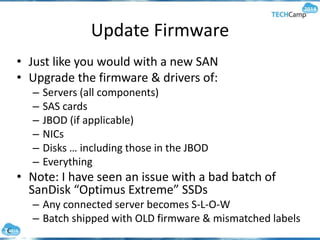

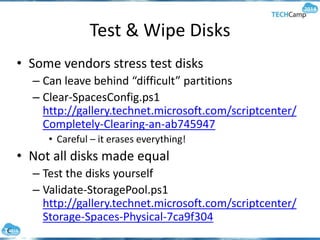

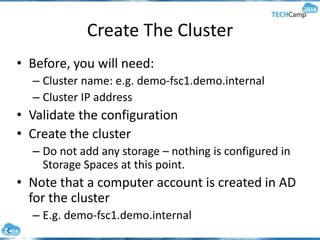

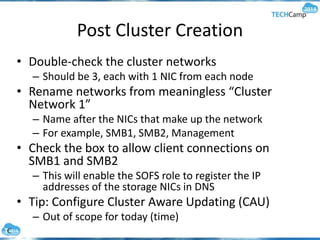

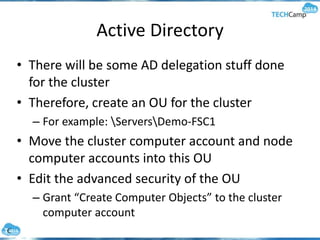

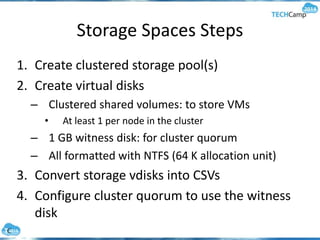

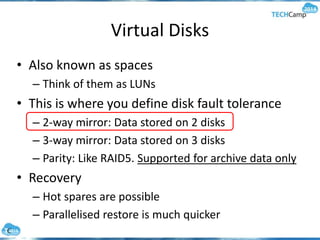

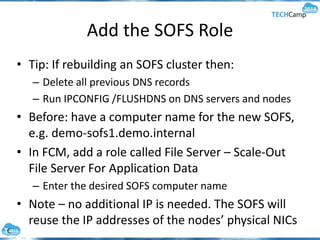

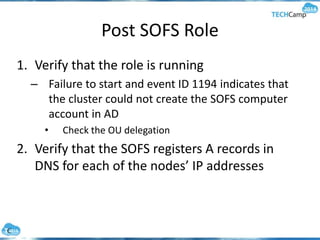

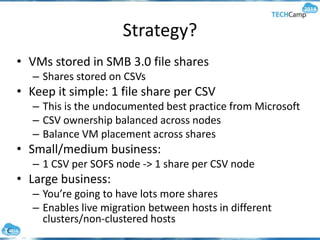

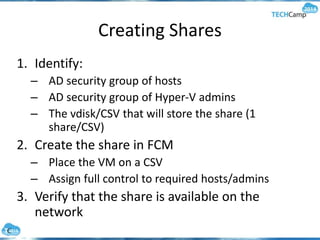

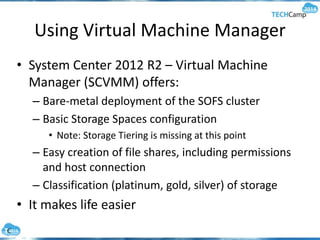

The document discusses software-defined storage (SDS) and its advantages over traditional hardware-defined storage, highlighting technologies such as Storage Spaces and SMB 3.0. It covers the architecture of a Scale-Out File Server (SOFS), including configurations, fault tolerance, and backup solutions. Additionally, it provides detailed steps for implementing and managing SDS using Windows Server 2012 R2, including setting up storage pools, virtual disks, and shares.