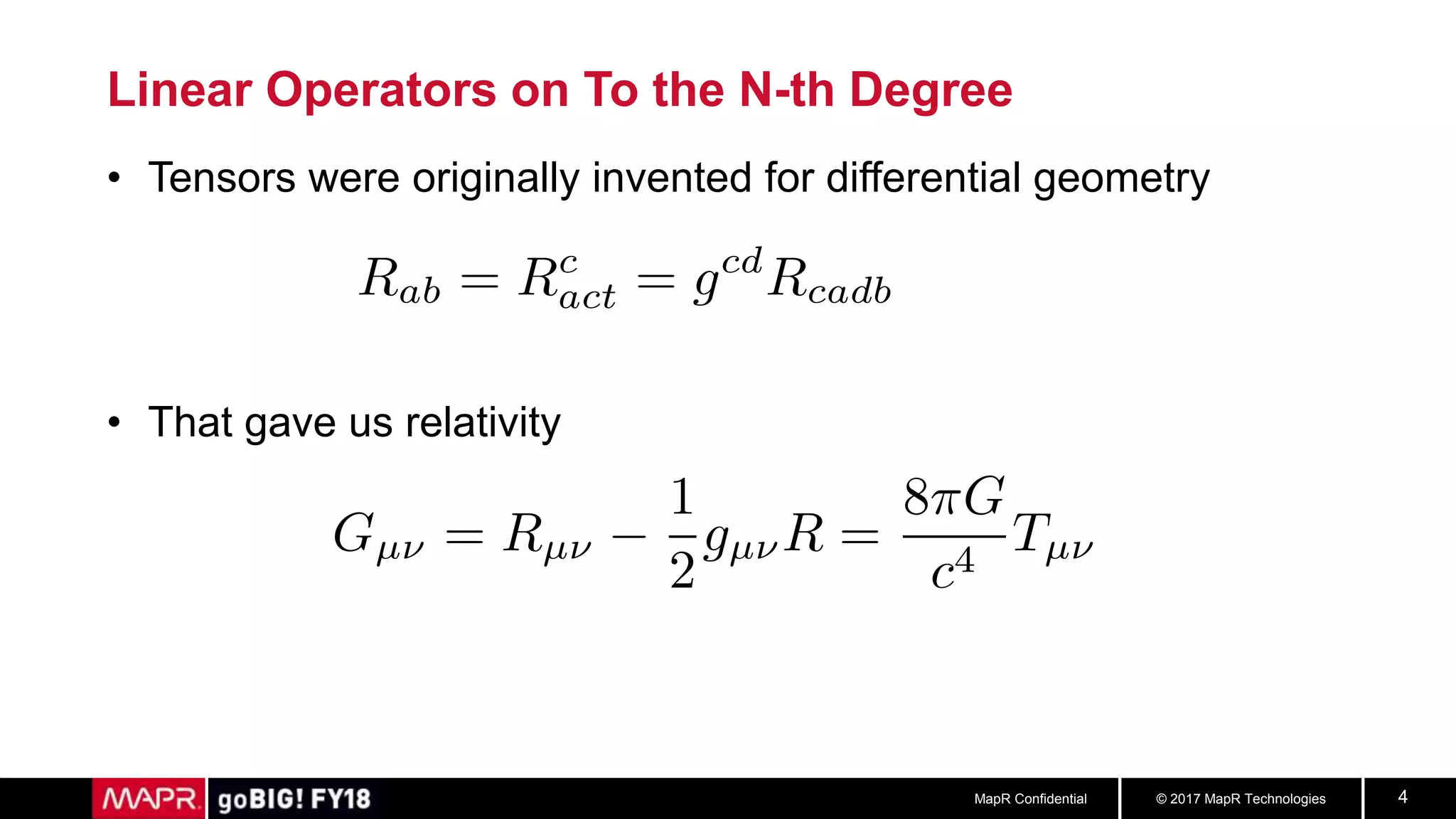

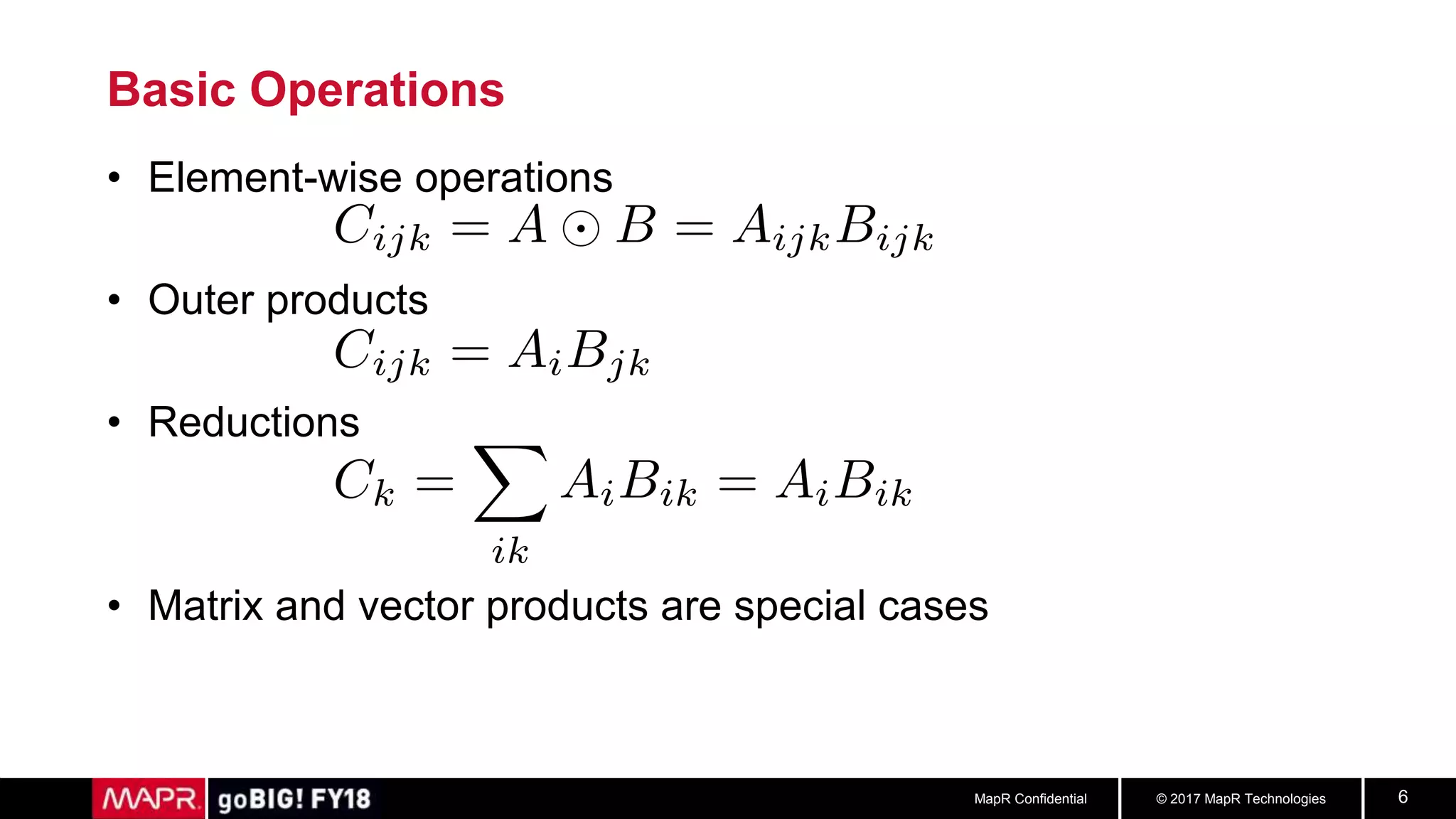

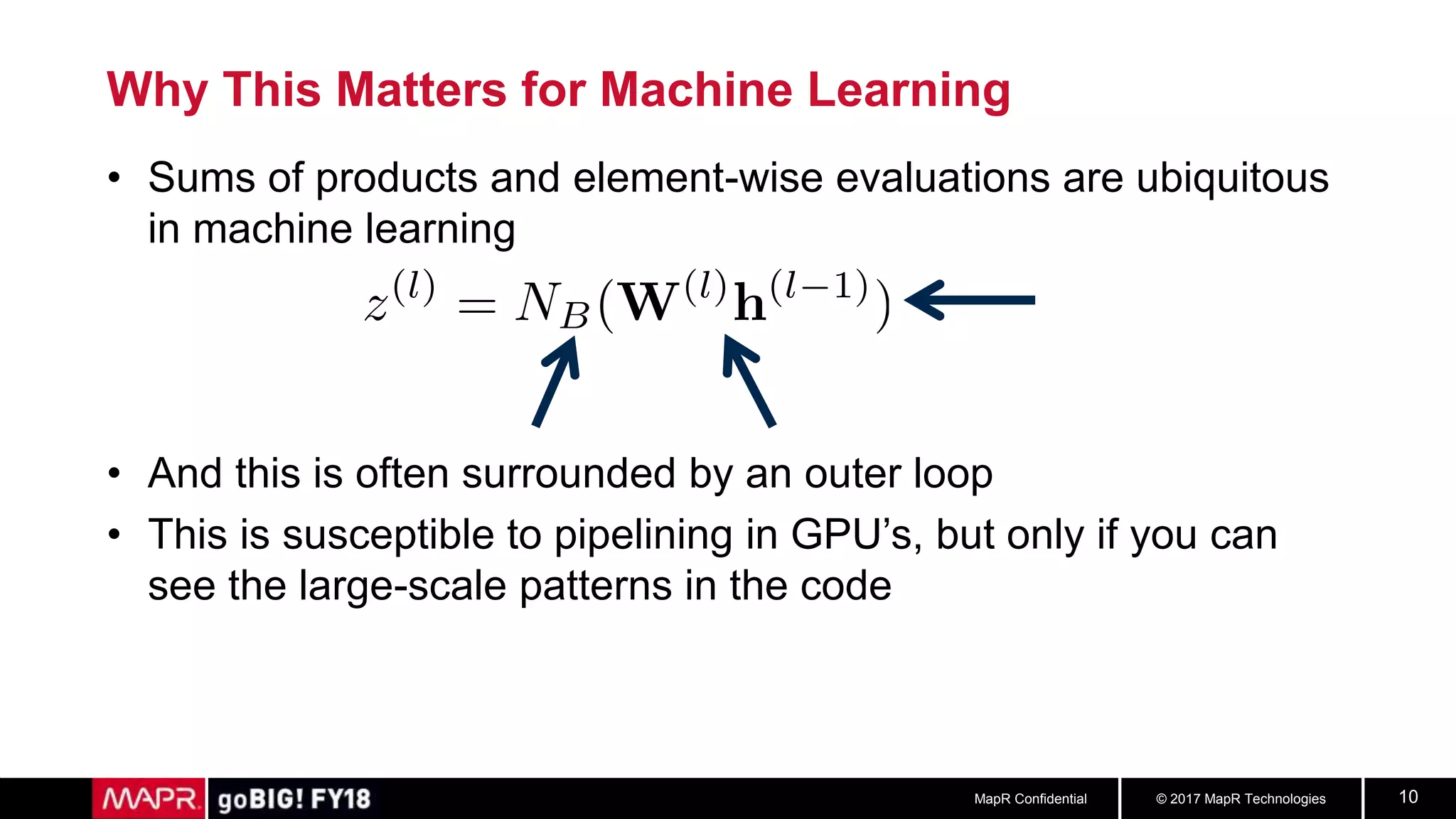

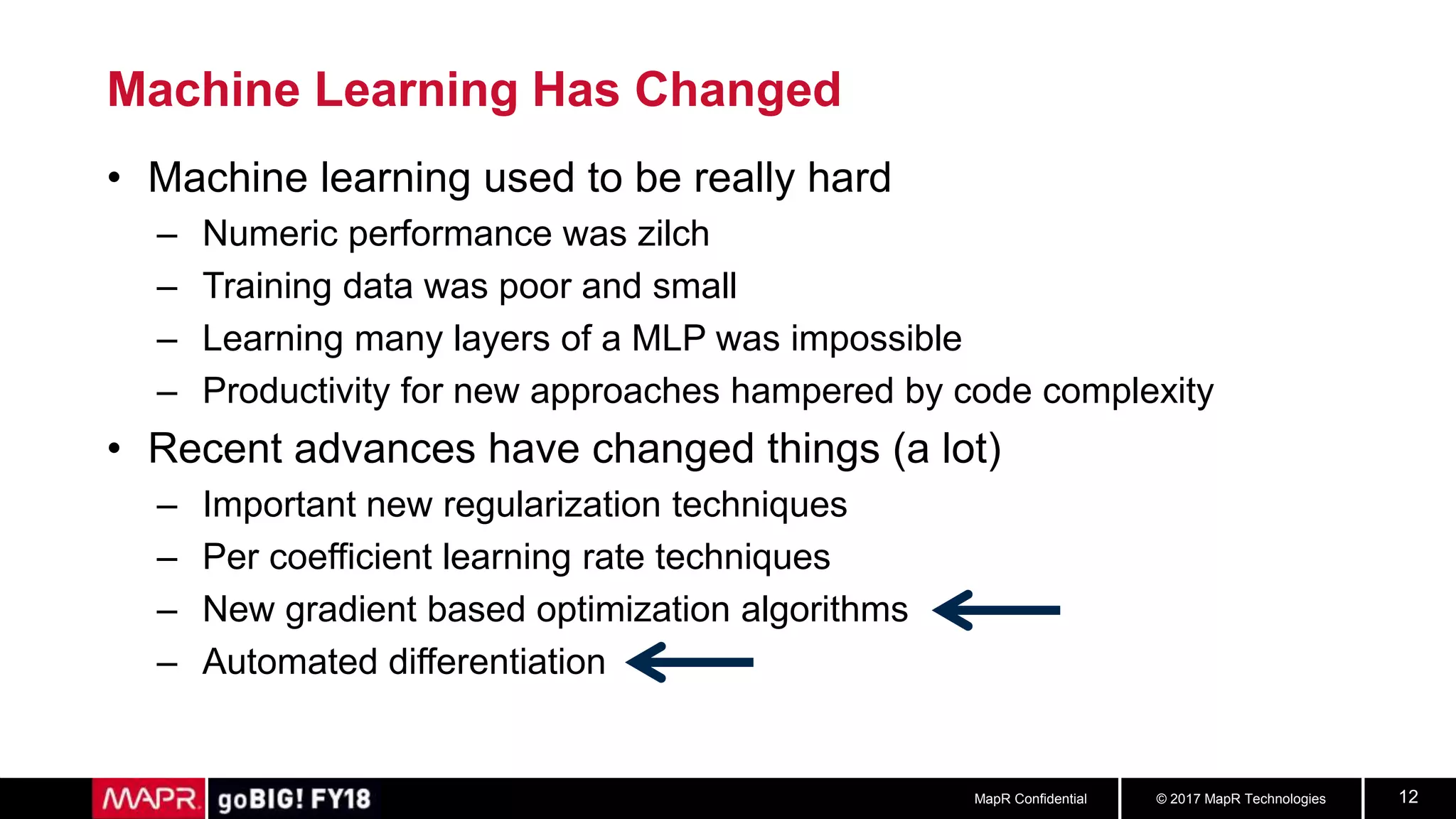

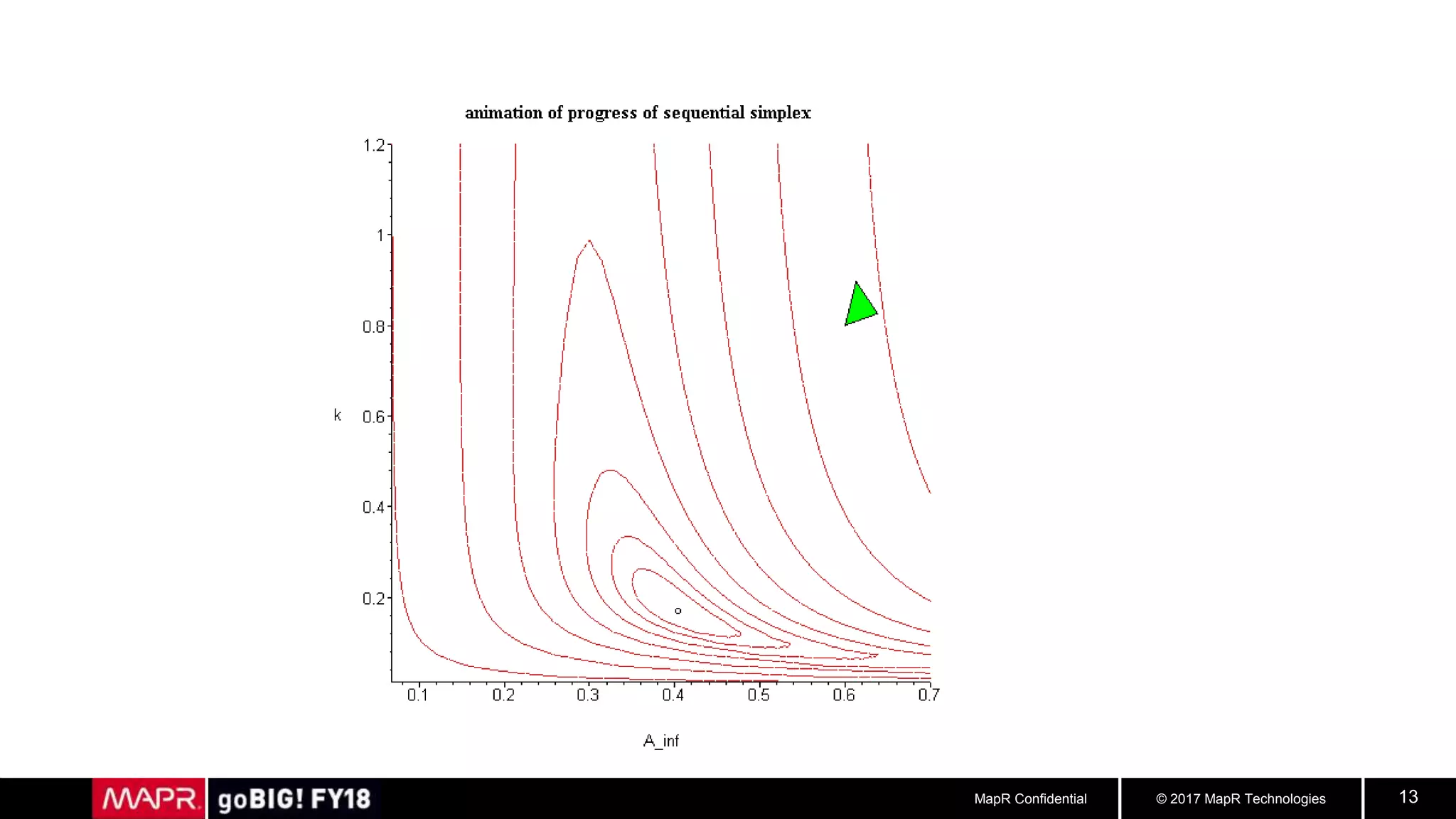

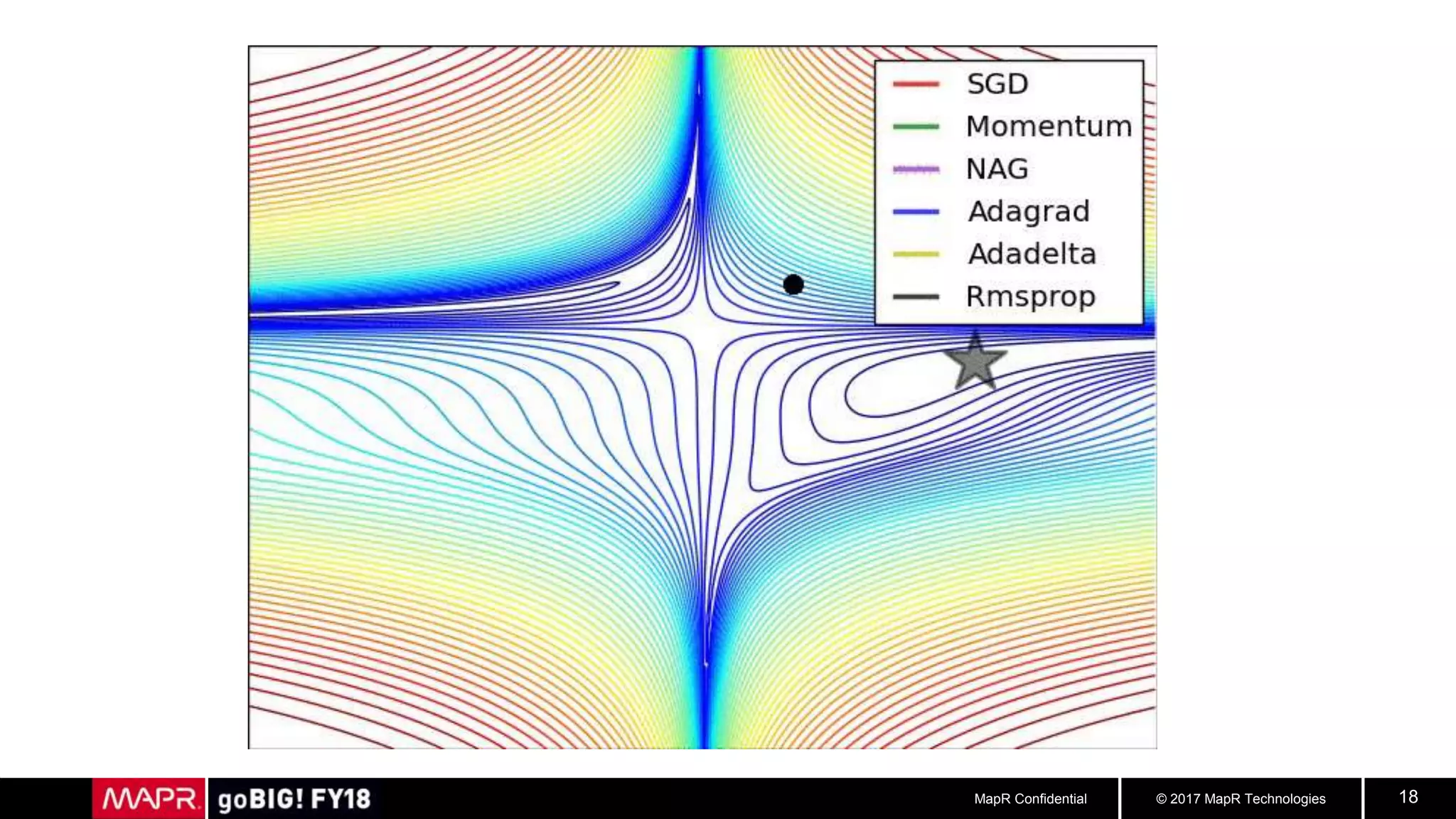

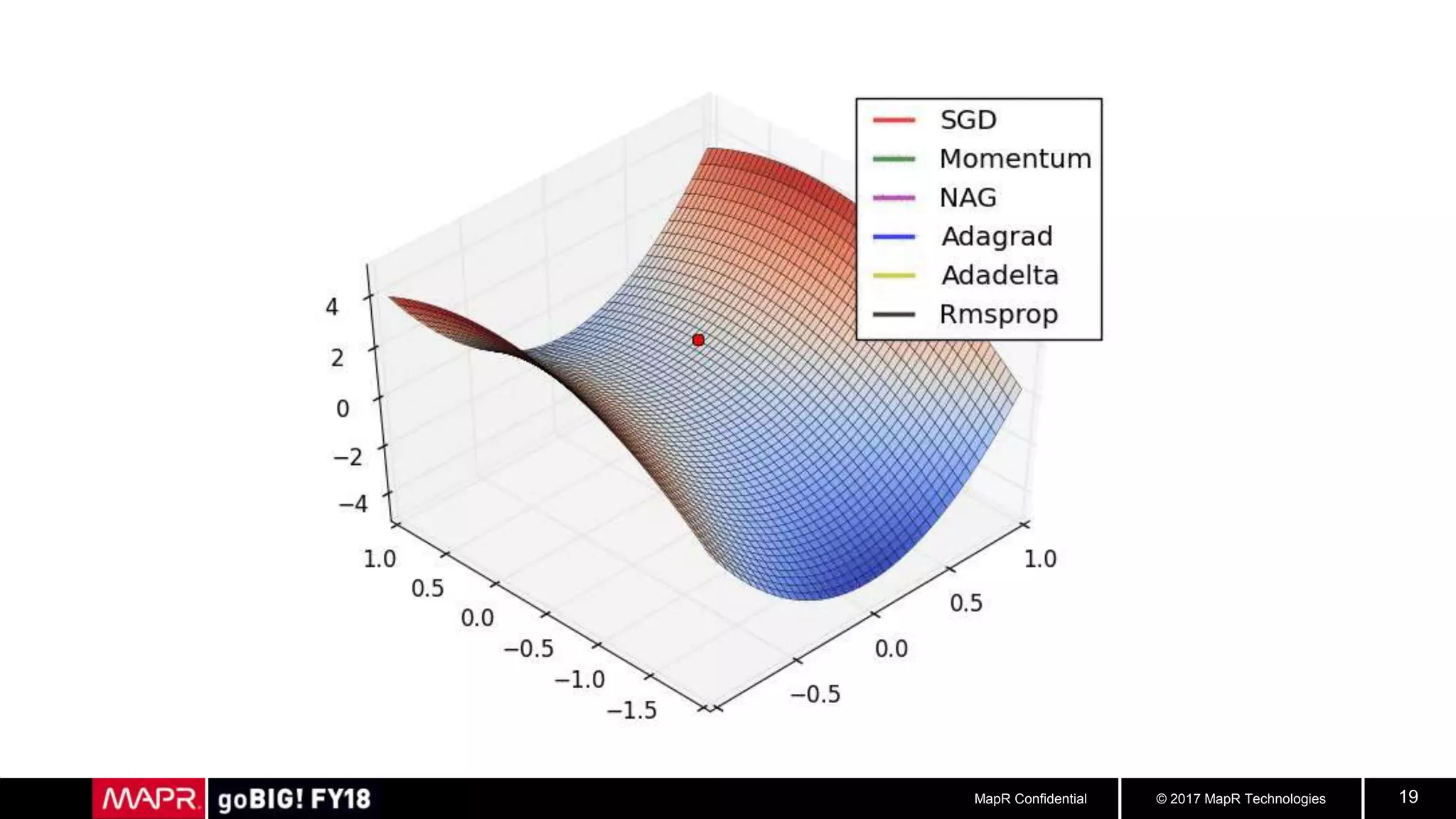

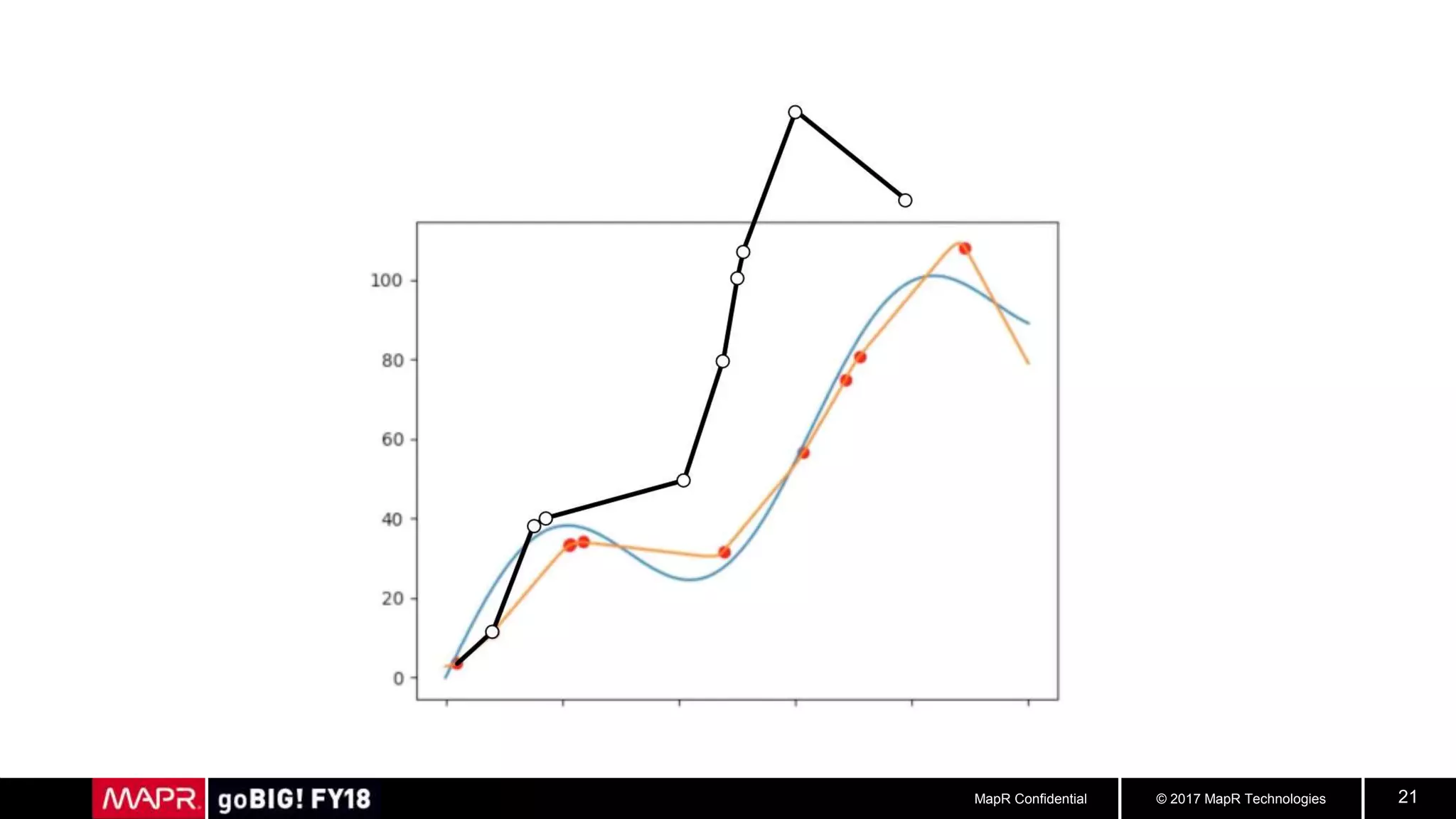

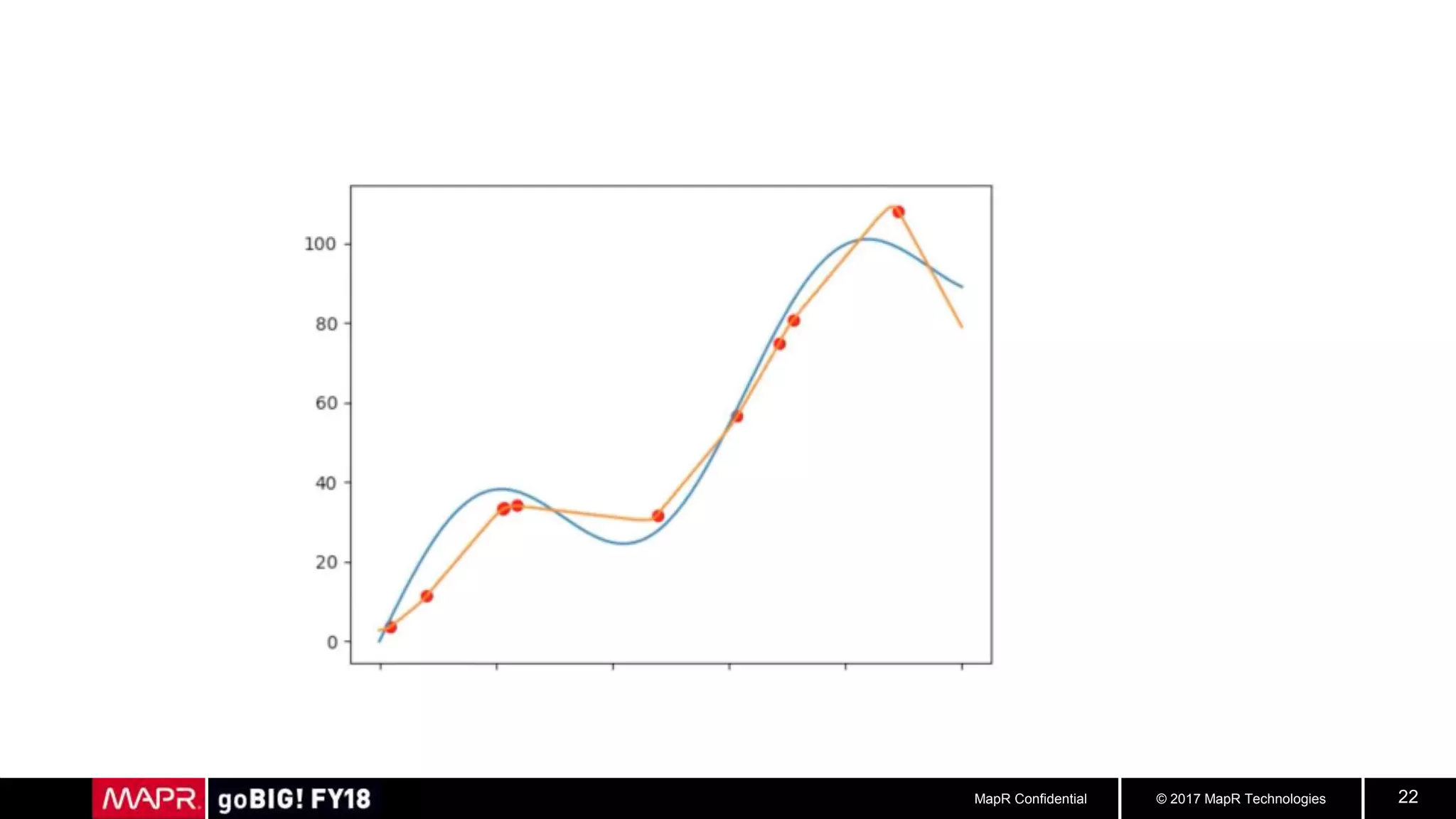

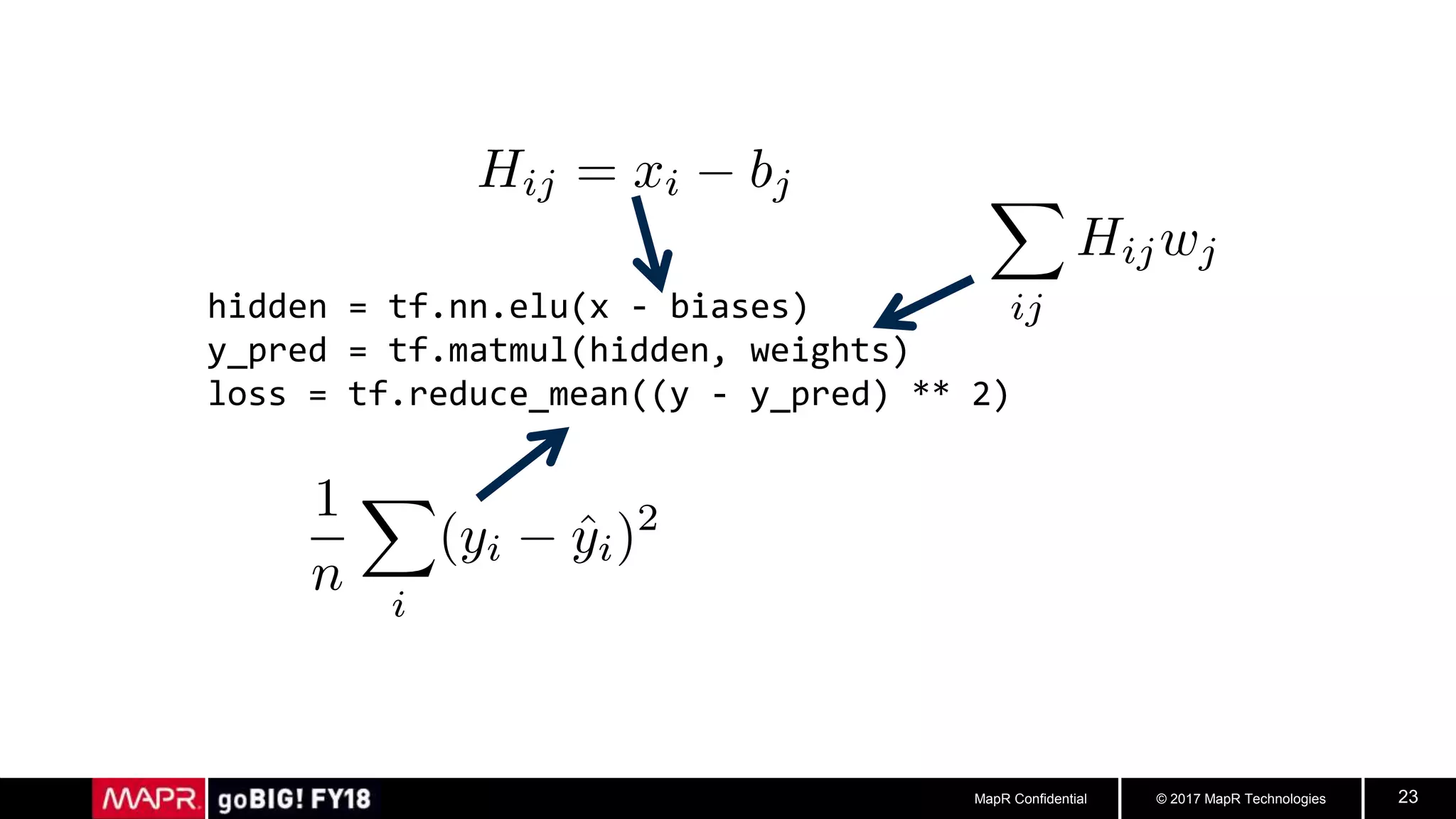

This document discusses tensors and their use in machine learning. Tensors were originally developed for physics, but they are now widely used in computing because they capture common patterns of computation: element-wise operations, outer products, reductions, and matrix/vector products. Expressing numerical algorithms in these terms makes them easier to code. Tensor frameworks also provide automatic differentiation, so gradients are computed automatically rather than derived by hand. This has advanced machine learning by enabling new optimization algorithms and the training of complex neural networks. Tensor systems also let the same code run on CPUs, GPUs, and clusters, which improves productivity.
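The four computation patterns named above can be illustrated with a short sketch. The summary does not name a library, so the use of NumPy here is an assumption, and the arrays `x`, `y`, and `A` are hypothetical values chosen only for illustration. The `einsum` index notation is used for three of the patterns because it makes the structure of each one explicit.

```python
import numpy as np

# Hypothetical example data (not from the original document).
x = np.array([1.0, 2.0, 3.0])
y = np.array([4.0, 5.0, 6.0])
A = np.array([[1.0, 0.0, 2.0],
              [0.0, 1.0, 3.0]])

# Element-wise computation: combine matching entries, result has shape (3,).
elementwise = x * y

# Outer product: every entry of x times every entry of y, shape (3, 3).
outer = np.einsum("i,j->ij", x, y)

# Reduction: sum over the only index, leaving a scalar.
total = np.einsum("i->", x)

# Matrix/vector product: sum over the shared index j, shape (2,).
matvec = np.einsum("ij,j->i", A, x)

print(elementwise)
print(outer.shape)
print(total)
print(matvec)
```

In this notation, an index that appears in the inputs but not in the output is summed over, so a reduction and a matrix/vector product differ only in which indices survive.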