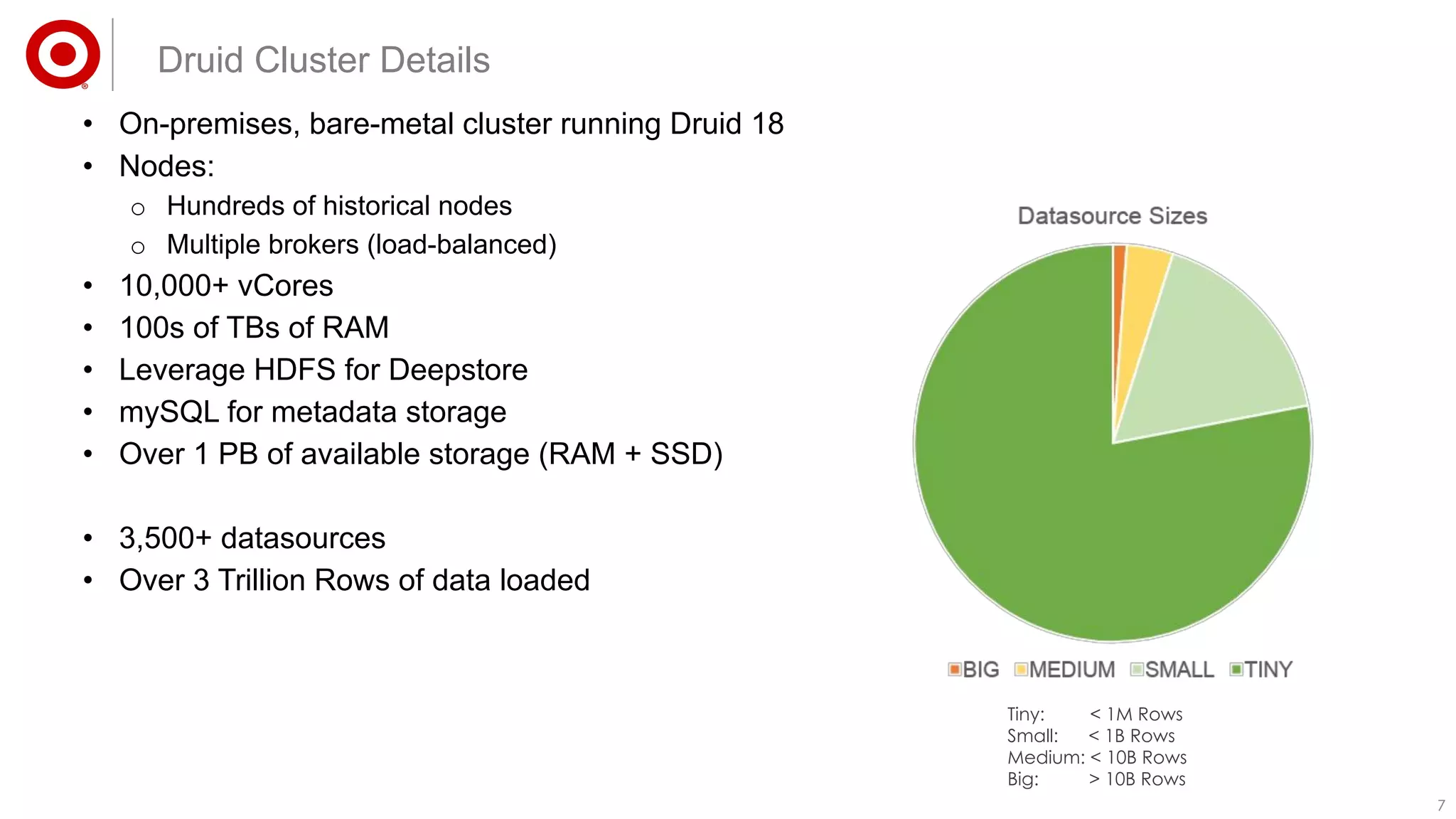

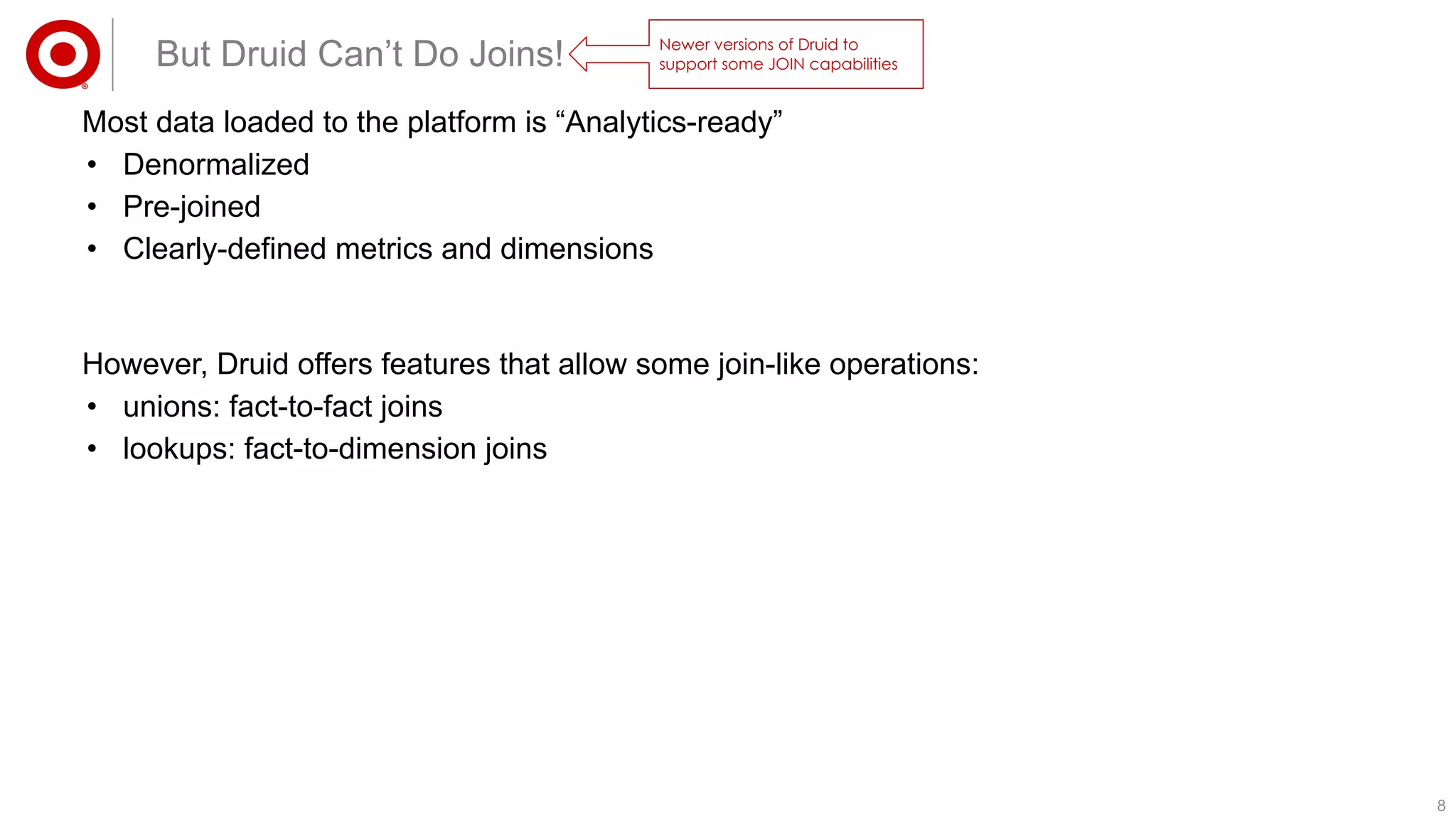

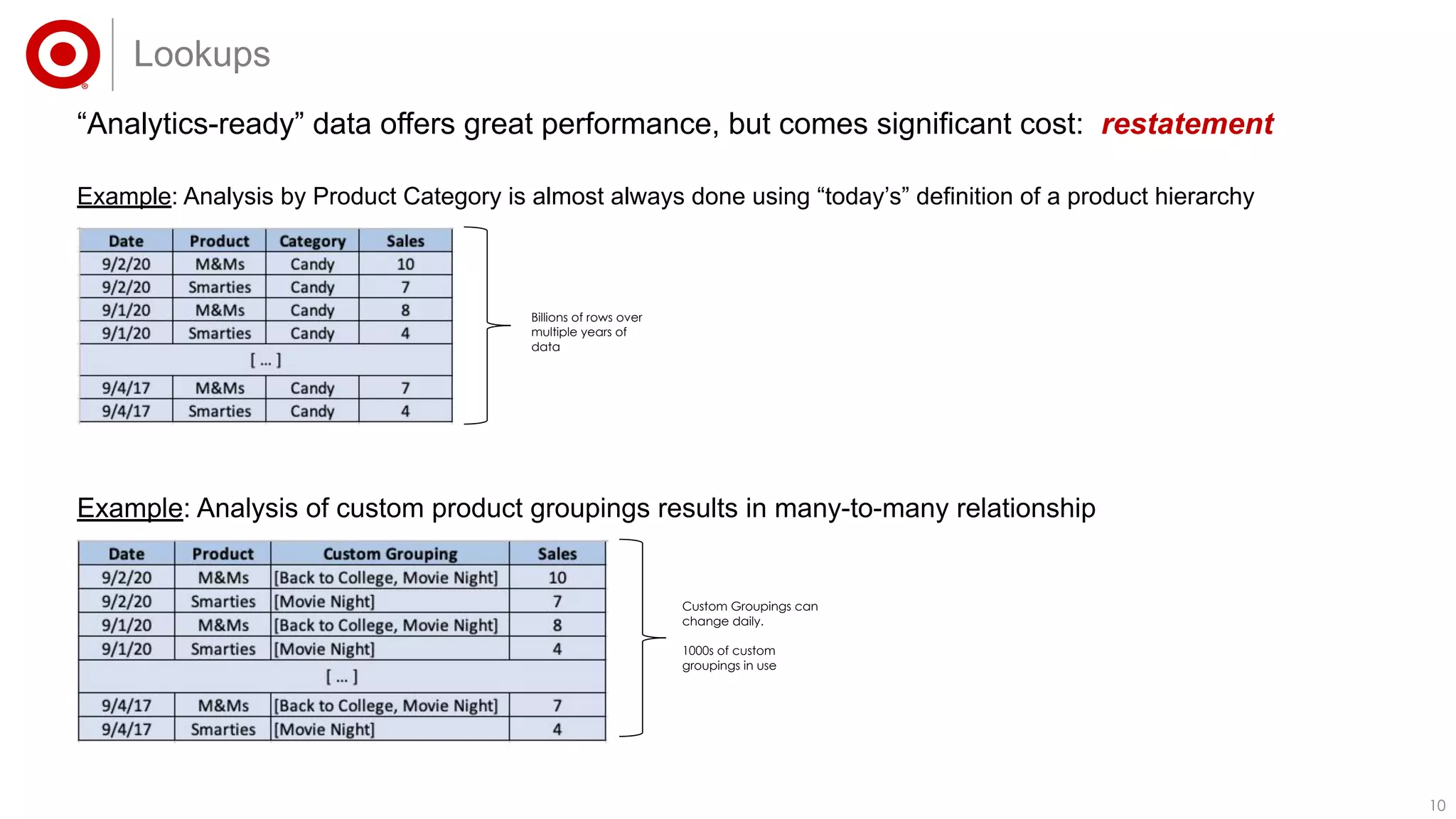

The document details the implementation of an enterprise analytics platform at Target, utilizing Apache Druid for its speed, scalability, and capabilities to support complex queries. It emphasizes the necessity for a data-accessible solution across multiple business units and highlights the performance of the platform, processing over 4 million queries daily. Key features discussed include data ingestion options, real-time analytics, and user collaboration, alongside the importance of a robust data architecture.

![99

SELECT Sales_Date, Location, sum(Sales) as Sales, sum(Forecast) as Forecast

FROM sales_table

JOIN sales_forecast_table ON (

sales.Sales_date = sales_forecast_table.Sales_date AND

sales_table.Location = sales_forecast_table.Location AND

sales.Item = sales_forecast.Item

)

GROUP BY sales_table.Sales_Date, sales_forecast_table.Location

Unions

Date Location Item [Metrics]

Date Location Item [Metrics]Sales

Sales Forecast

Date Location Item [Metrics]Inventory

Date Location Item [Metrics]Orders

Date Location [Metrics]Weather

Date Location [Metrics]Demographics

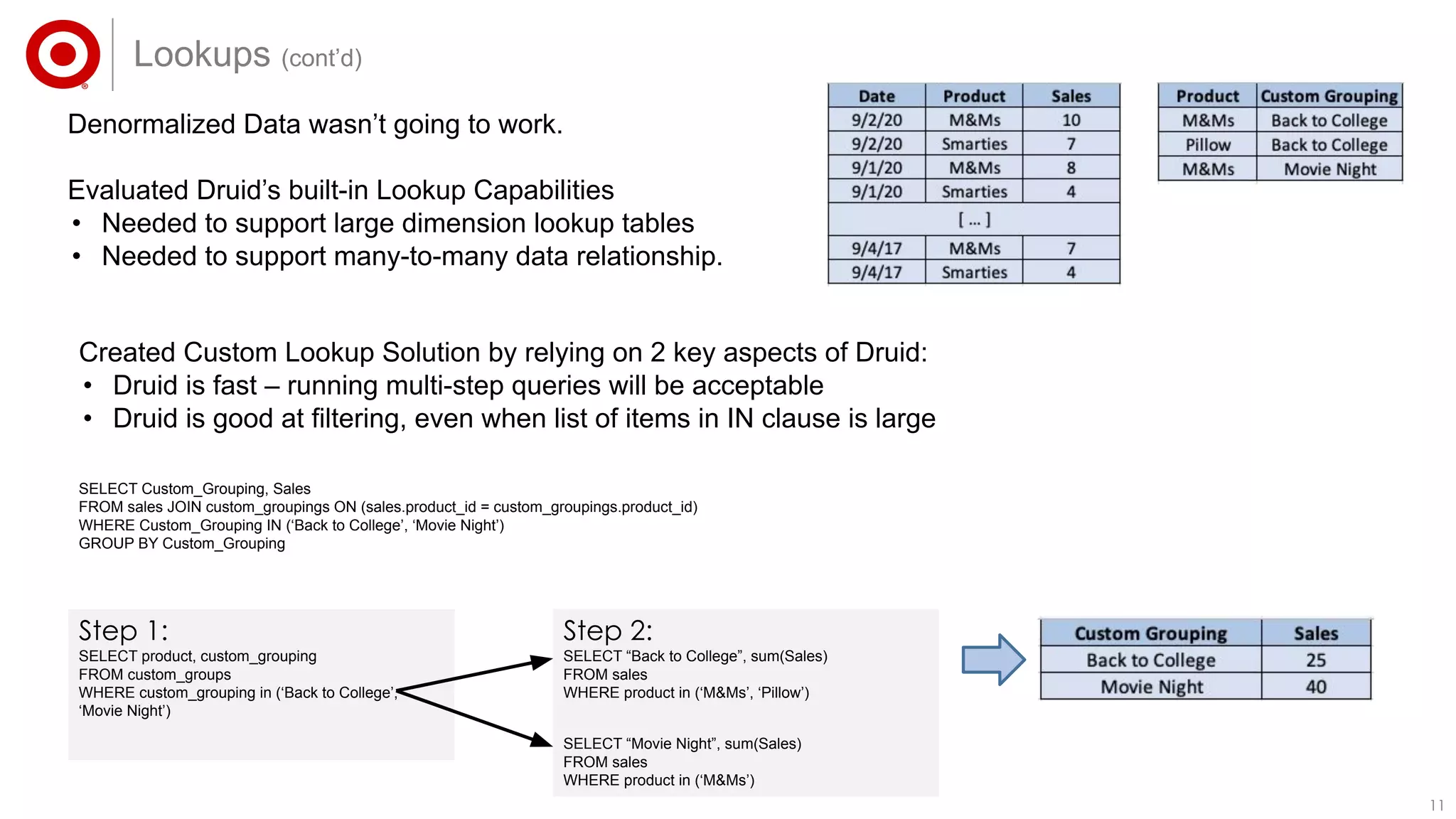

Unions can be used to enable fact-to-fact joins

Keys to Successfully Using Unions:

• Data Architecture Discipline

• columns must match

• dimension data values must match

• Ensure query only includes the datasets required for the

query

Date Item [Metrics]Shipping](https://image.slidesharecdn.com/target-druidsummit2020-200902235726/75/Building-an-Enterprise-Scale-Dashboarding-Analytics-Platform-Powered-by-the-Capabilities-of-Druid-9-2048.jpg)

![1212

Multiple Data Ingestion Options

• Initially used Hadoop Indexer to ingest all datasets

• Evolved to optionally leverage Native Indexer for smaller datasets

• Using Kafka Indexer to address growing demand for real-time ingestion

Cardinality Aggregators

• Provides ”introspection” capabilities for the query engine to make execution decisions

“Time” as a Central Concept

• Arbitrary granularity extension supports Target’s fiscal calendar

• Ability to provide array of intervals provides powerful period-over-period analytical capabilities.

Other Key Druid Features

"intervals": [

"2020-02-02T00:00:00+00:00/2020-08-30T00:00:00.00000+00:00"

],

"granularity": {

"intervals": [

"2020-02-02T00:00:00+00:00/2020-03-01T00:00:00+00:00",

"2020-03-01T00:00:00+00:00/2020-04-05T00:00:00+00:00",

"2020-04-05T00:00:00+00:00/2020-05-03T00:00:00+00:00",

"2020-05-03T00:00:00+00:00/2020-05-31T00:00:00+00:00",

"2020-05-31T00:00:00+00:00/2020-07-05T00:00:00+00:00",

"2020-07-05T00:00:00+00:00/2020-08-02T00:00:00+00:00",

"2020-08-02T00:00:00+00:00/2020-08-30T00:00:00+00:00"

],

"type": "arbitrary"

},](https://image.slidesharecdn.com/target-druidsummit2020-200902235726/75/Building-an-Enterprise-Scale-Dashboarding-Analytics-Platform-Powered-by-the-Capabilities-of-Druid-12-2048.jpg)