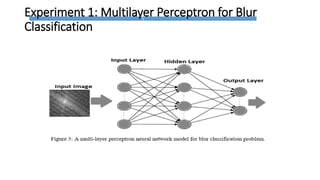

This document presents a blur classification approach using a convolutional neural network (CNN). It covers types of image degradation including blur, different blur models, and prior work on blur classification using handcrafted features and neural networks. The proposed method uses a CNN to classify images into four blur categories (motion, defocus, box, and Gaussian blur) based on the images' frequency spectra. The method is evaluated on 2880 synthetically blurred images, derived from the 720 images of the Triesch gesture database (24 people, 10 hand gestures, 3 backgrounds). The CNN achieves an average accuracy of 97% for blur classification, outperforming alternatives using multilayer perceptrons or handcrafted features.
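The frequency-spectrum input described above can be sketched as follows. This is a minimal illustration, assuming the feature is the centred log-magnitude of the 2-D Fourier transform scaled to [0, 1]; the slides do not specify the exact preprocessing, and the function name `log_spectrum` and the random stand-in image are hypothetical.

```python
import numpy as np


def log_spectrum(image: np.ndarray) -> np.ndarray:
    """Return the centred log-magnitude Fourier spectrum, scaled to [0, 1].

    Assumption: this is one plausible way to build a frequency-domain
    input for a classifier; it is not taken from the slides.
    """
    # 2-D FFT, then move the zero-frequency (DC) term to the array centre.
    spectrum = np.fft.fftshift(np.fft.fft2(image.astype(np.float64)))
    # log1p compresses the large dynamic range of the magnitudes.
    log_mag = np.log1p(np.abs(spectrum))
    peak = log_mag.max()
    return log_mag / peak if peak > 0 else log_mag


# Stand-in for a grayscale gesture image (hypothetical data).
rng = np.random.default_rng(0)
image = rng.random((64, 64))
features = log_spectrum(image)
```

Different blur kernels attenuate different parts of this spectrum, which is why a spectrum image is a natural input for a blur-type classifier.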

![Literature Review

Bolan et al. [16] proposed a technique for blurred-image classification and blurred-region recognition. This work considers only two classes of blur.

Tiwari et al. [18] performed blur classification using statistical texture features and a neural network, classifying images into motion, defocus, and combined blur.

Tiwari et al. [19] performed blur classification using wavelet features and a neural network, classifying images into motion, defocus, and combined blur.

Tiwari et al. [20] performed blur classification using Ridgelet texture features and a neural network, classifying images into motion, defocus, and combined blur.

Tiwari et al. [21] performed blur classification using Curvelet features and a neural network, classifying images into motion, defocus, and combined blur.](https://image.slidesharecdn.com/blurclassification-200803052127/85/Blurclassification-13-320.jpg)

![Image dataset

The proposed method is evaluated using the Triesch gesture database [33]. This database consists of 720 images of 10 hand gestures performed by 24 different persons against 3 different backgrounds (24 × 10 × 3 = 720). All the images are synthetically blurred to create the blurred database. Each image is blurred separately with each class of blur, namely motion, defocus, box, and Gaussian blur. The blurred image database therefore contains 720 × 4 = 2880 images.](https://image.slidesharecdn.com/blurclassification-200803052127/85/Blurclassification-15-320.jpg)
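The construction of the blurred database can be sketched as below. This is a hedged illustration only: the slides do not give the blur parameters, so the kernel sizes, the Gaussian sigma, the defocus radius, the horizontal motion direction, and every function name here are assumptions, and small random arrays stand in for the gesture images.

```python
import numpy as np


def box_kernel(size: int) -> np.ndarray:
    """Uniform averaging kernel."""
    return np.full((size, size), 1.0 / size**2)


def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Separable Gaussian kernel, normalised to sum to 1."""
    ax = np.arange(size) - (size - 1) / 2
    g = np.exp(-(ax**2) / (2 * sigma**2))
    k = np.outer(g, g)
    return k / k.sum()


def defocus_kernel(radius: int) -> np.ndarray:
    """Uniform disk (out-of-focus) kernel."""
    ax = np.arange(-radius, radius + 1)
    xx, yy = np.meshgrid(ax, ax)
    k = (xx**2 + yy**2 <= radius**2).astype(float)
    return k / k.sum()


def motion_kernel(length: int) -> np.ndarray:
    """Linear motion kernel; horizontal direction assumed for simplicity."""
    k = np.zeros((length, length))
    k[length // 2, :] = 1.0 / length
    return k


def blur(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Circular convolution of image and kernel via the FFT."""
    padded = np.zeros_like(image, dtype=float)
    kh, kw = kernel.shape
    padded[:kh, :kw] = kernel
    # Move the kernel centre to the origin so the output is not shifted.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))


# Assumed parameters; the slides do not state the values used.
KERNELS = {
    "motion": motion_kernel(9),
    "defocus": defocus_kernel(4),
    "box": box_kernel(9),
    "gaussian": gaussian_kernel(9, sigma=2.0),
}

# Three random stand-in images; with the 720 source images the same
# loop would yield 720 * 4 = 2880 labelled samples.
rng = np.random.default_rng(0)
images = [rng.random((32, 32)) for _ in range(3)]
dataset = [(blur(img, k), label) for img in images for label, k in KERNELS.items()]
```

Each kernel is normalised to sum to 1, so blurring preserves the mean brightness of the image and the four classes differ only in how they redistribute detail.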

![References

[1] Mitra, S., & Acharya, T. (2007). Gesture recognition: A survey. IEEE Transactions on Systems, Man, and Cybernetics, Part C (Applications and Reviews), 37(3), 311-324.

[2] Rautaray, S. S., & Agrawal, A. (2015). Vision based hand gesture recognition for human computer interaction: a survey. Artificial Intelligence Review, 43(1), 1-54.

[3] Nicholas, C. G., Marti, L. M., van der Merwe, R., & Kassebaum, J. (2017). U.S. Patent No. 9,679,414. Washington, DC: U.S. Patent and Trademark Office.

[4] Eisaku, O., Hiroshi, H., Lim, A. H. (2004). Barcode readers using the camera device in mobile phones. In Proc. of Int. Conf. on Cyberworlds, pp. 260–265.

[5] Thielemann, J. T., Schumann-Olsen, H., Schulerud, H., and Kirkhus, T. (2004). Handheld PC with camera used for reading information dense barcodes. In Proc. IEEE Int. Conf. on Computer Vision and Pattern Recognition, Demonstration Program, Washington, DC, pp. 102-112.

[6] Joseph, E., and Pavlidis, T. (1994). Bar code waveform recognition using peak locations. Pattern Analysis and Machine Intelligence, IEEE Transactions on, vol. 16(6), pp. 630-640.

[7] Selim, E. (2004). Blind deconvolution of barcode signals. Inverse Prob., vol. 20(1), pp. 121–135.

[8] Tong, H., Li, M., Zhang, H., and Zhang, C. (2004). Blur detection for digital images using wavelet transform. In Proceedings of IEEE International Conference on Multimedia and Expo, vol. 1, pp. 17-20.](https://image.slidesharecdn.com/blurclassification-200803052127/85/Blurclassification-21-320.jpg)

![[9] Yang, Q., Yi, X., and Yang, X. (2013). No-reference image blur assessment based on gradient profile sharpness. IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), pp. 1-4.

[10] Rugna, J. D., and Konik, H. (2006). Blur identification in image processing. International Joint Conference on Neural Networks (IJCNN '06), pp. 2536-2541.

[11] Crete, F., Dolmiere, T., Ladret, P., and Nicolas, M. (2007). The blur effect: Perception and estimation with a new no-reference perceptual blur metric. In SPIE Human Vision & Electronic Imaging, vol. 6492, pp. 1-11.

[12] Chi, Z. (2008). An unsupervised approach to determination of main subject regions in images with low depth of field. IEEE 10th Workshop on Multimedia Signal Processing, pp. 650-653.

[13] Chong, R. M., and Tanaka, T. (2008). Image extrema analysis and blur detection with identification. IEEE International Conference on Signal Image Technology and Internet Based Systems (SITIS '08), pp. 320-326.

[14] Liu, R., Li, Z., and Jia, J. (2008). Image partial blur detection and classification. In Proc. CVPR, pp. 23–28.

[15] Aizenberg, I., Paliy, D. V., Zurada, J. M., and Astola, J. T. (2008). Blur identification by multilayer neural network based on multivalued neurons. IEEE Transactions on Neural Networks, vol. 19(5), pp. 883-898.

[16] Bolan S., Lu, S., and Tan, C. (2011). Blurred image region detection and classification. In Proc. ACM Multimedia, pp. 1397–1400.](https://image.slidesharecdn.com/blurclassification-200803052127/85/Blurclassification-22-320.jpg)

![[17] Yan, R., and Shao, L. (2013). Image Blur classification and parameter identification using two-stage deep belief networks. British Machine Vision Conference (BMVC), Bristol, UK, pp. 1-11.

[18] Tiwari, S., Shukla, V. P., Biradar, S., & Singh, A. (2013). Texture features based blur classification in barcode images. International Journal of Information Engineering and Electronic Business, 5(5), 34.

[19] Tiwari, S., Shukla, V. P., Biradar, S. R., & Singh, A. K. (2014). Blur Classification Using Wavelet Transform and Feed Forward Neural Network. International Journal of Modern Education and Computer Science, 6(4), 16.

[20] Tiwari, S. (2017). A Pattern Classification Based approach for Blur Classification. Indonesian Journal of Electrical Engineering and Informatics (IJEEI), 5(2).

[21] Tiwari, S., Shukla, V. P., Biradar, S. R., & Singh, A. K. (2014). Blur classification using ridgelet transform and feed forward neural network. International Journal of Image, Graphics and Signal Processing, 6(9), 47.

[22] Pan, H., Feng, X. F., & Daly, S. (2005, September). LCD motion blur modeling and analysis. In Image Processing, 2005. ICIP 2005. IEEE International Conference on (Vol. 2, pp. II-21). IEEE.

[23] Dobes, M., Machala, L., and Fürst, T. (2010). Blurred image restoration: A fast method of finding the motion length and angle. Digital Signal Processing, vol. 20(6), pp. 1677–1686.

[24] Sakano, M., Suetake, N., and Uchino, E. (2007). A robust point spread function estimation for out-of-focus blurred and noisy images based on a distribution of gradient vectors on the polar plane. Journal of Optical Society of Japan, vol. 14(5), pp. 297-303.](https://image.slidesharecdn.com/blurclassification-200803052127/85/Blurclassification-23-320.jpg)

![[25] Tiwari, S., Shukla, V. P., Biradar, S. R., & Singh, A. K. (2014). Blur parameters identification for simultaneous defocus and motion blur. CSI transactions on ICT, 2(1), 11-22.

[26] Gardner, M. W., & Dorling, S. R. (1998). Artificial neural networks (the multilayer perceptron): a review of applications in the atmospheric sciences. Atmospheric environment, 32(14-15), 2627-2636.

[27] Tang, J., Deng, C., & Huang, G. B. (2016). Extreme learning machine for multilayer perceptron. IEEE transactions on neural networks and learning systems, 27(4), 809-821.

[28] Chaudhuri, B. B., & Bhattacharya, U. (2000). Efficient training and improved performance of multilayer perceptron in pattern classification. Neurocomputing, 34(1-4), 11-27.

[29] Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems (pp. 1097-1105).

[30] Sahiner, B., Chan, H. P., Petrick, N., Wei, D., Helvie, M. A., Adler, D. D., & Goodsitt, M. M. (1996). Classification of mass and normal breast tissue: a convolution neural network classifier with spatial domain and texture images. IEEE transactions on Medical Imaging, 15(5), 598-610.

[31] Howard, A. G. (2013). Some improvements on deep convolutional neural network based image classification. arXiv preprint arXiv:1312.5402.](https://image.slidesharecdn.com/blurclassification-200803052127/85/Blurclassification-24-320.jpg)

![[32] Oquab, M., Bottou, L., Laptev, I., & Sivic, J. (2014). Learning and transferring mid-level image representations using convolutional neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1717-1724).

[33] Triesch, J., & Von Der Malsburg, C. (1996). Robust classification of hand postures against complex backgrounds. In Automatic Face and Gesture Recognition, Proceedings of the Second International Conference on (pp. 170-175). IEEE.

[34] Billsus, D., & Pazzani, M. J. (1998, July). Learning Collaborative Information Filters. In Icml (Vol. 98, pp. 46-54).

[35] Fawcett, T. (2006). An introduction to ROC analysis. Pattern recognition letters, 27(8), 861-874.

[36] Ferri, C., Hernández-Orallo, J., & Salido, M. A. (2003, September). Volume under the ROC surface for multi-class problems. In European Conference on Machine Learning (pp. 108-120). Springer, Berlin, Heidelberg.](https://image.slidesharecdn.com/blurclassification-200803052127/85/Blurclassification-25-320.jpg)