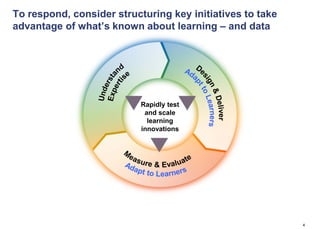

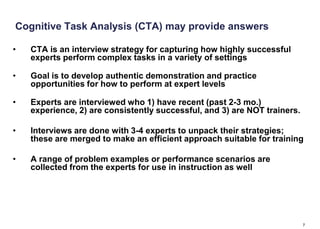

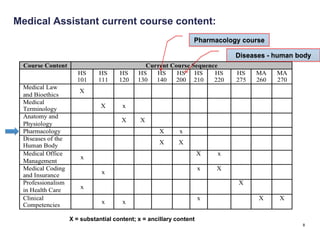

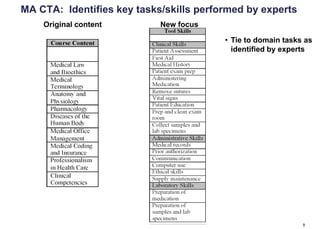

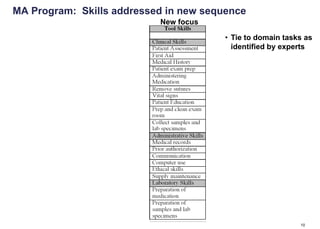

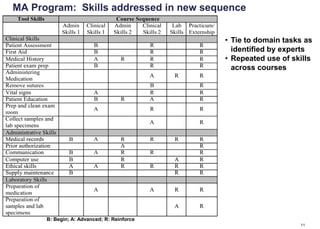

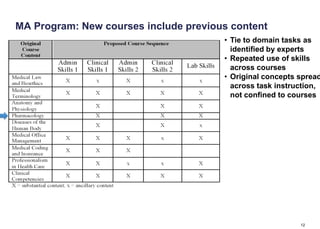

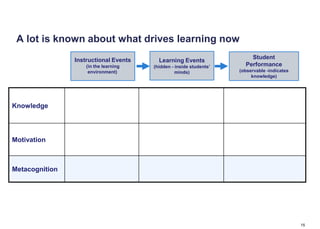

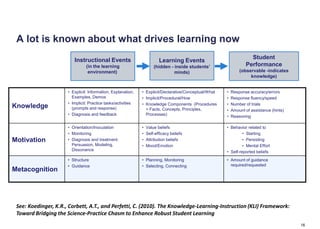

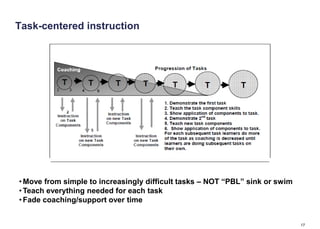

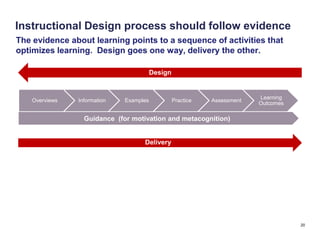

Cognitive task analysis was used to redesign the medical assistant courses at Kaplan University. Interviews with experts identified the key tasks and skills the job requires. Course content was then reorganized around these tasks and skills, which were addressed across multiple courses and scaffolded from basic to advanced. The redesign also drew on what is known about how learning occurs, for example by tying instructional events such as examples and practice to the cognitive and motivational processes involved in learning.