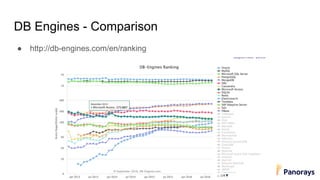

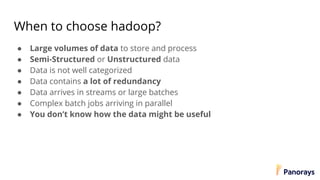

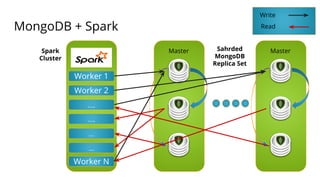

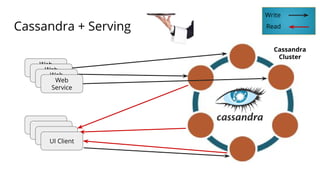

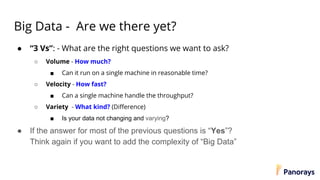

The document discusses the concepts and challenges of big data, focusing on its characteristics, the limitations of traditional relational databases, and the rise of NoSQL databases. It introduces Hadoop and its components as a key framework, and emphasizes the importance of monitoring and automation in distributed systems. The conclusion advises caution before adopting big data solutions and highlights the need for tailored storage and monitoring strategies.