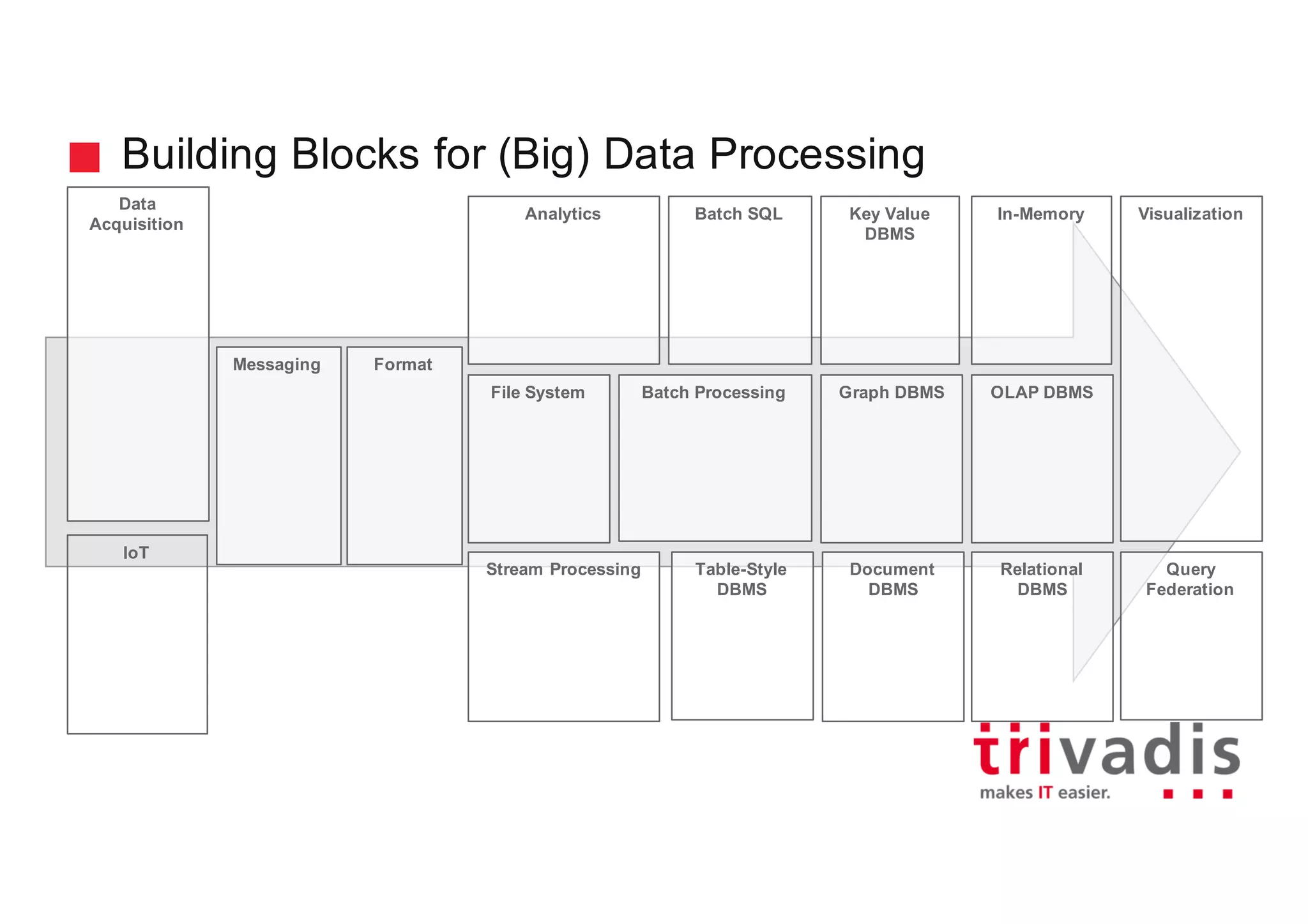

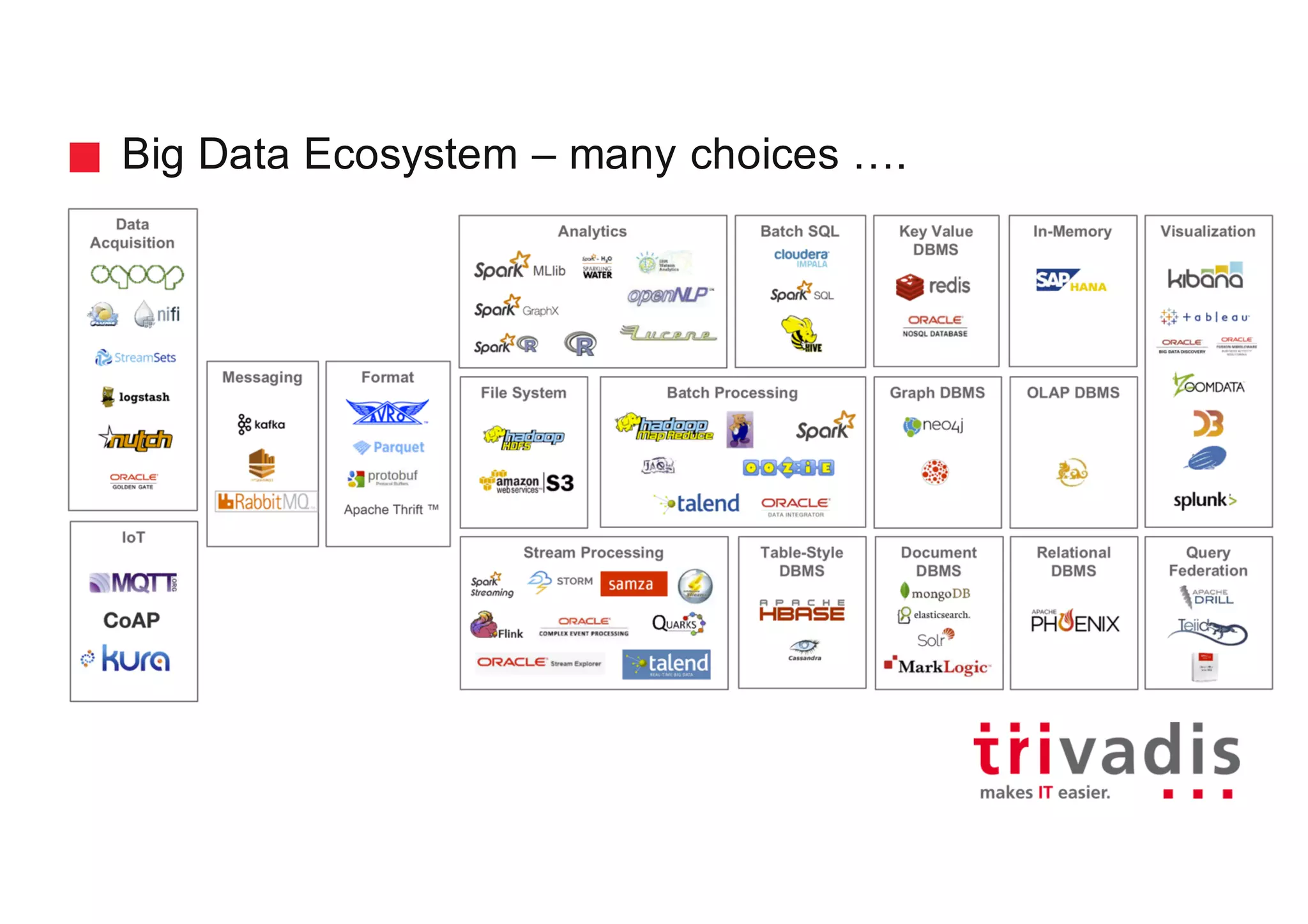

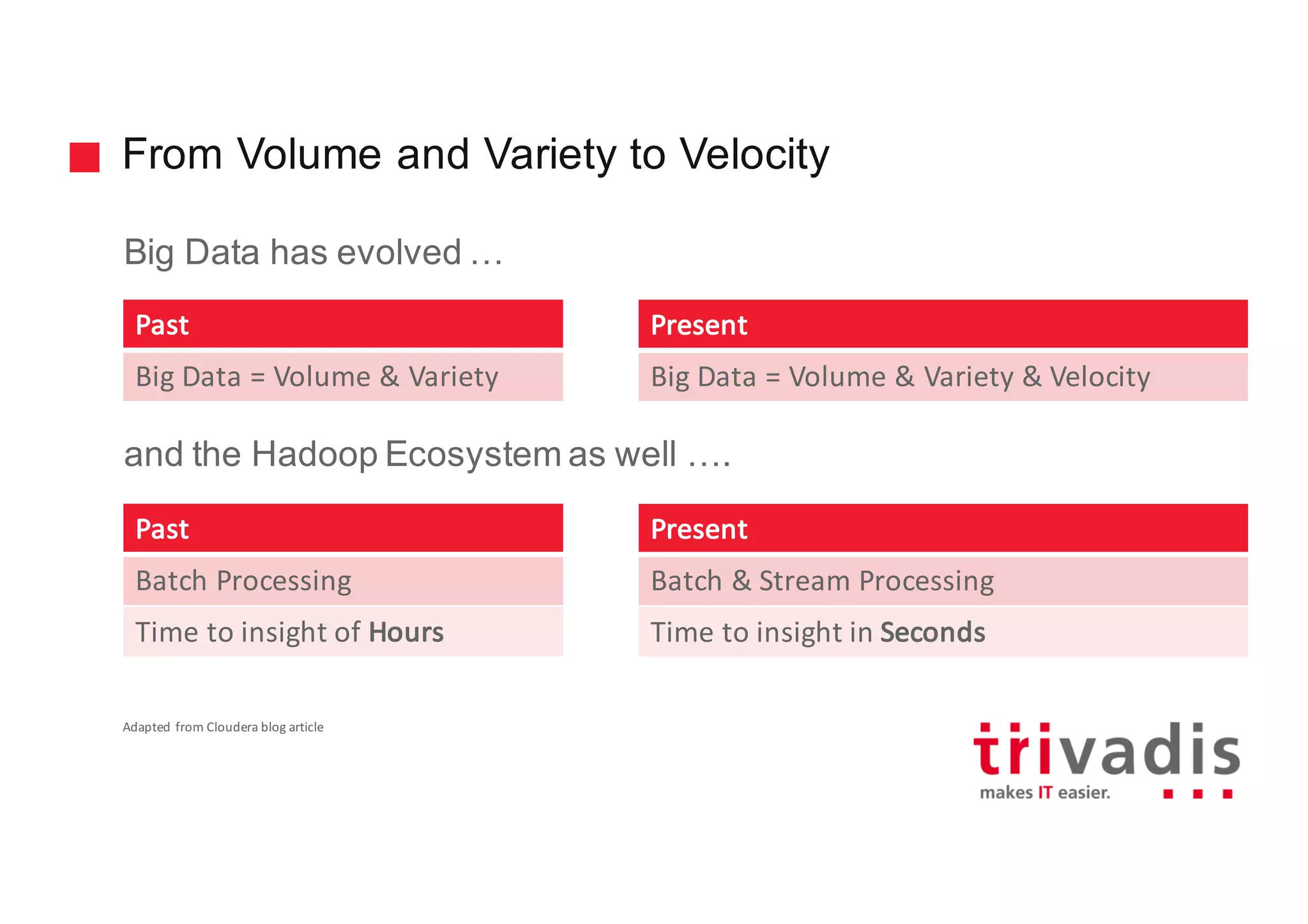

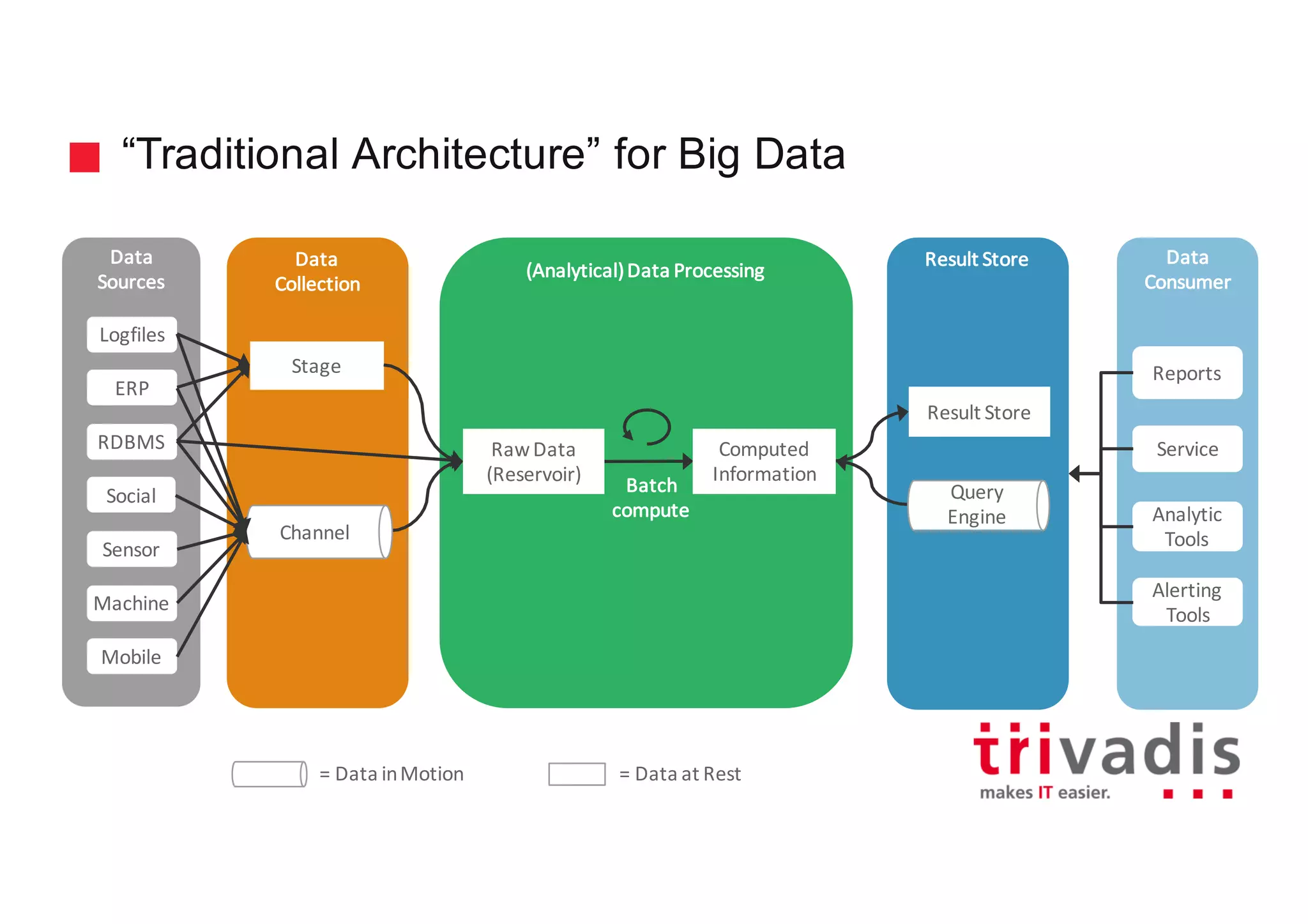

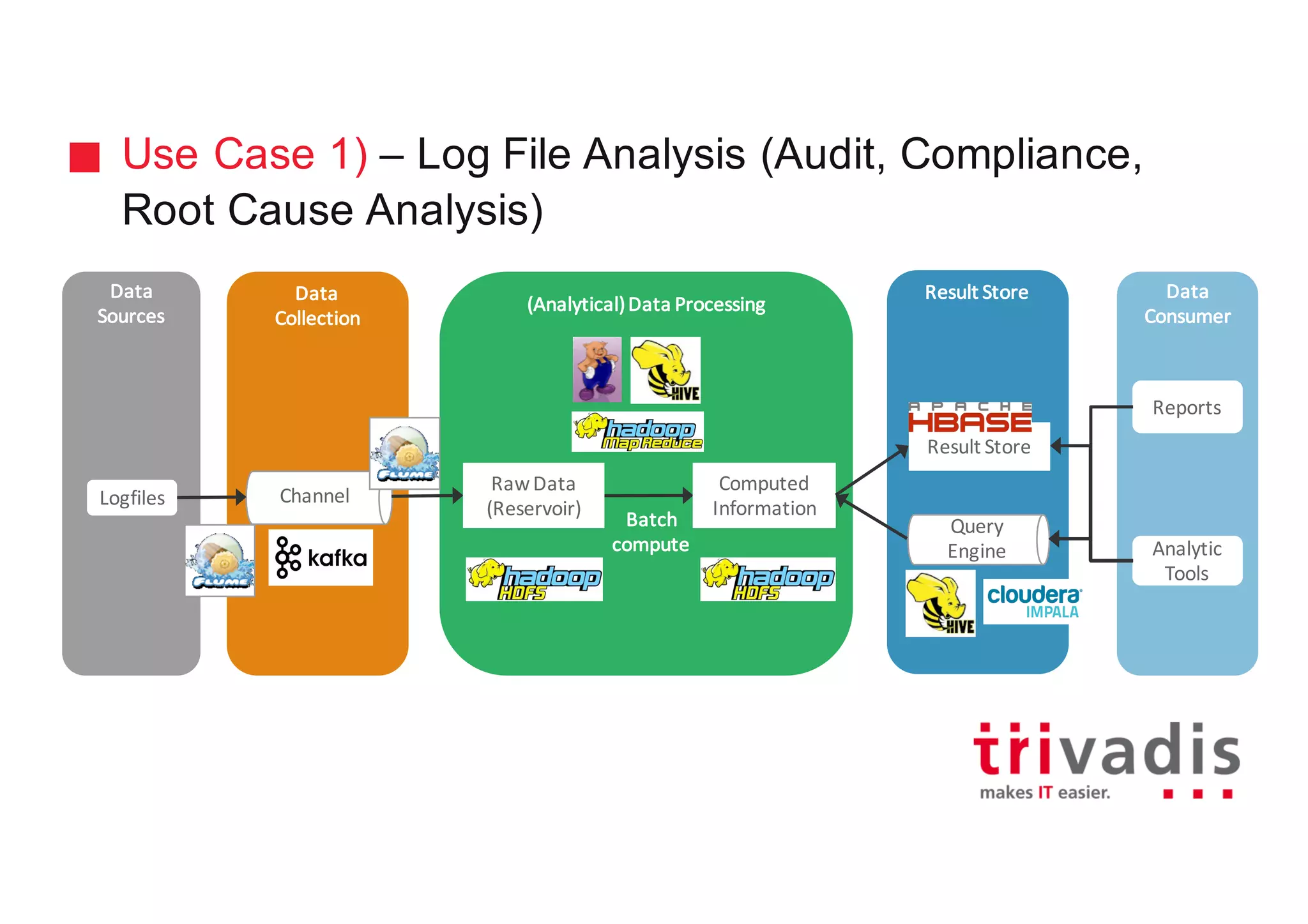

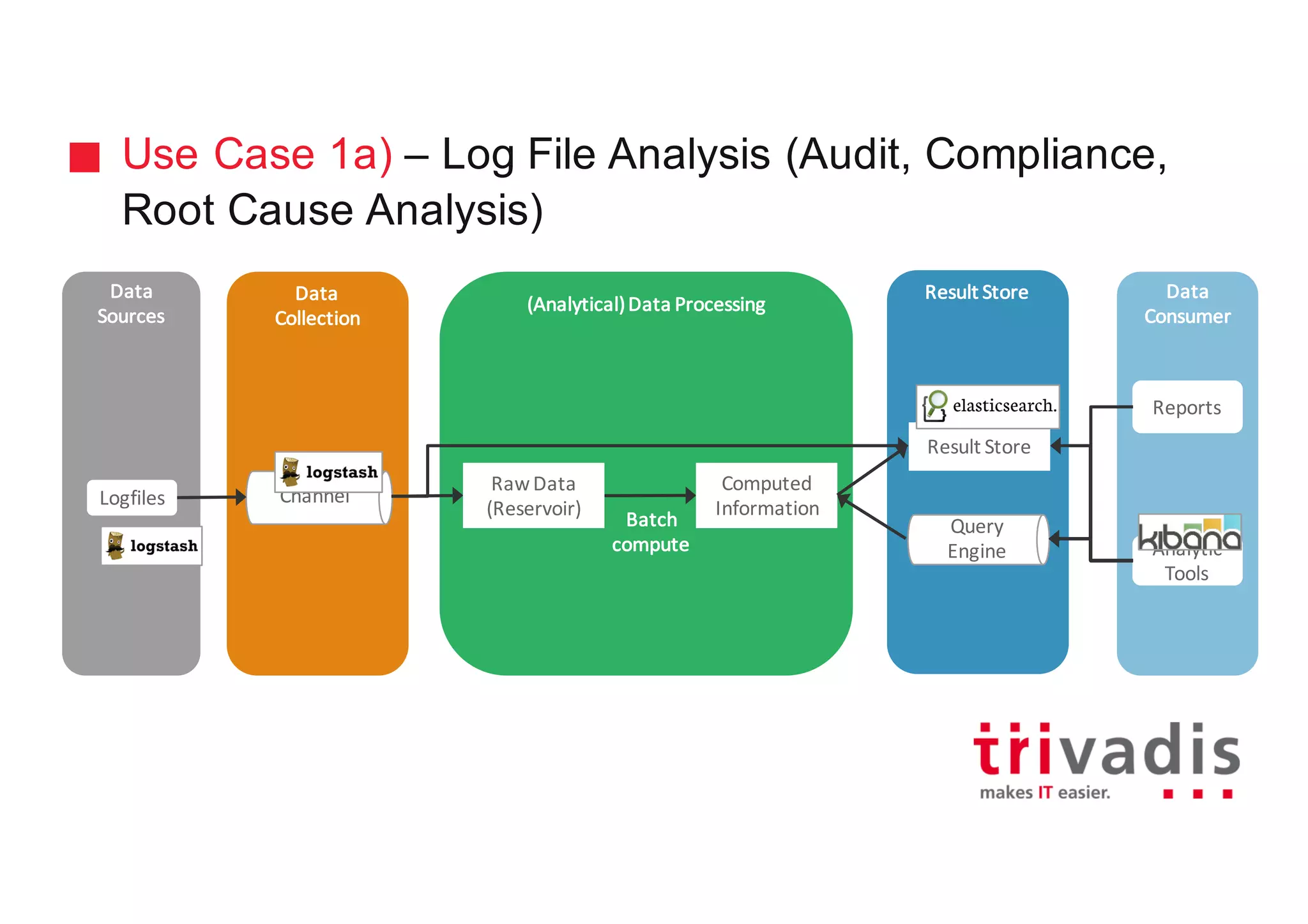

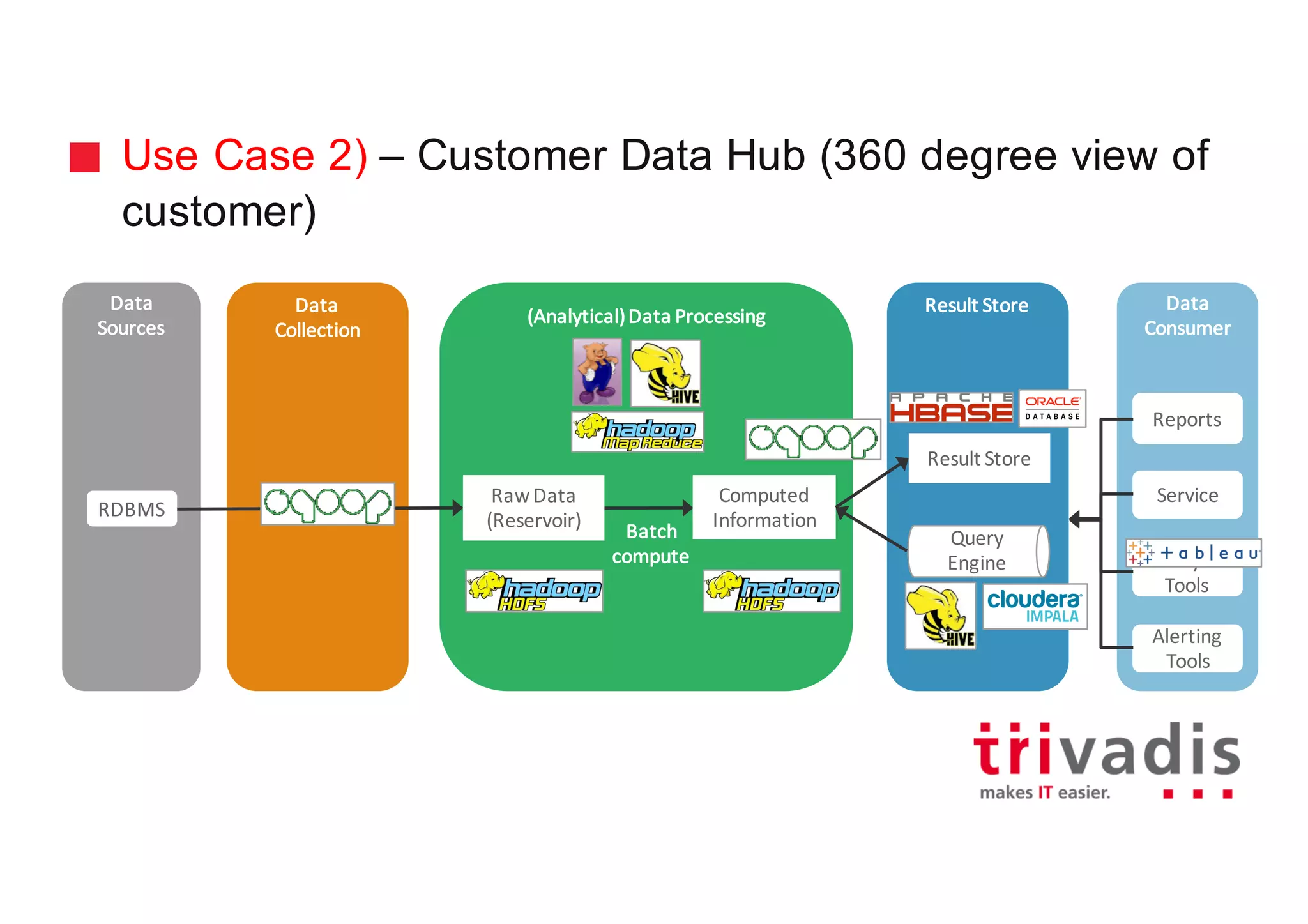

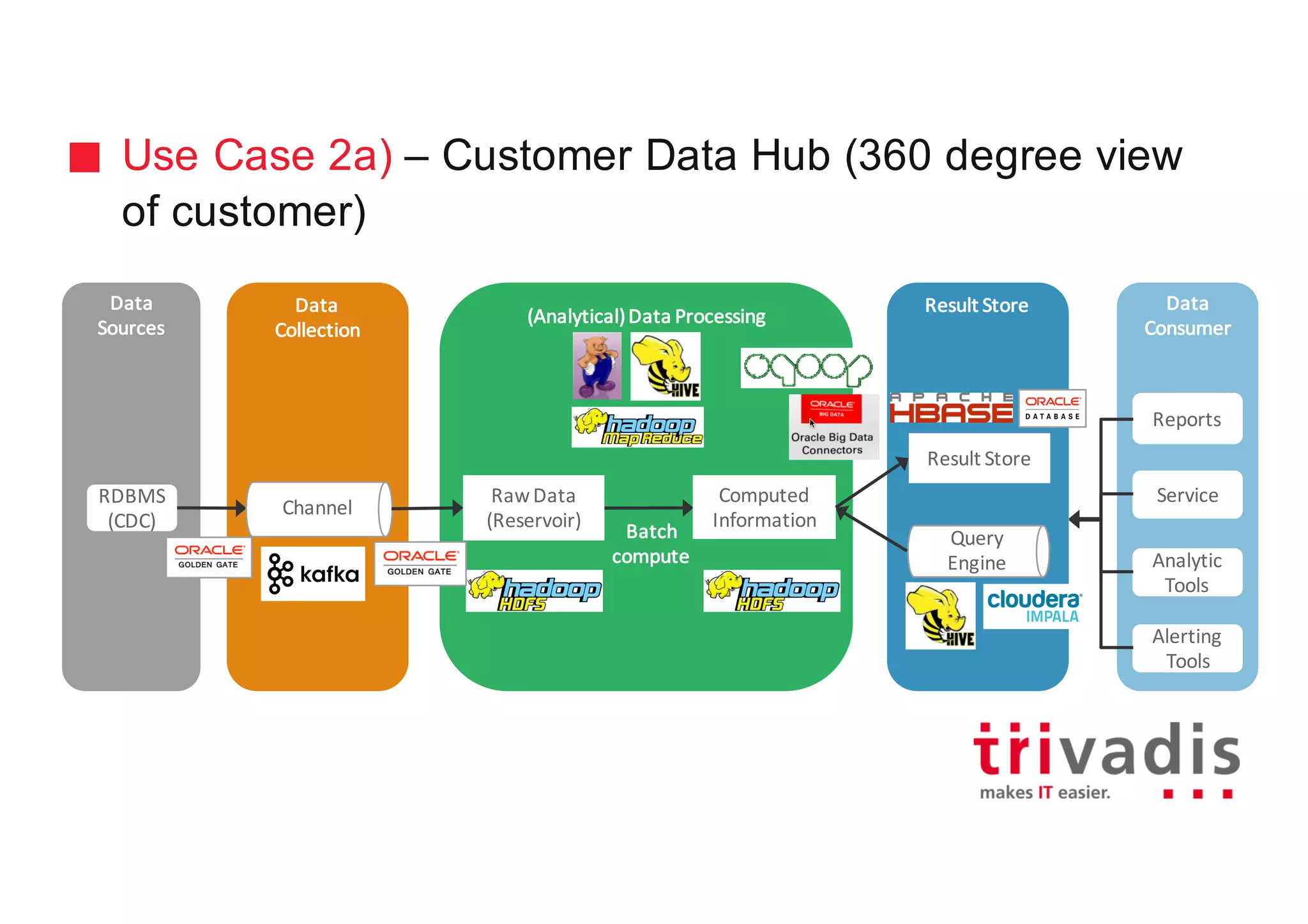

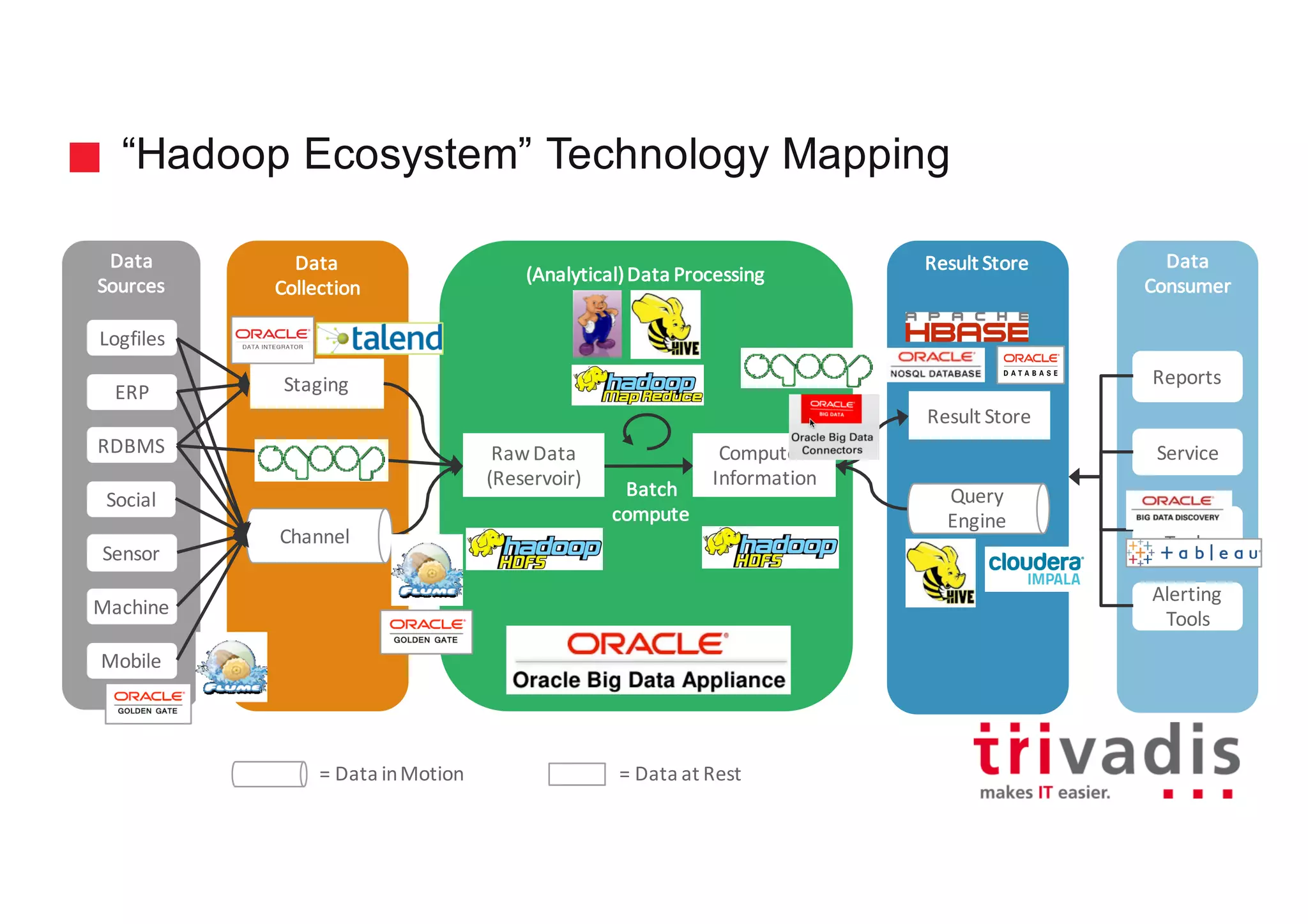

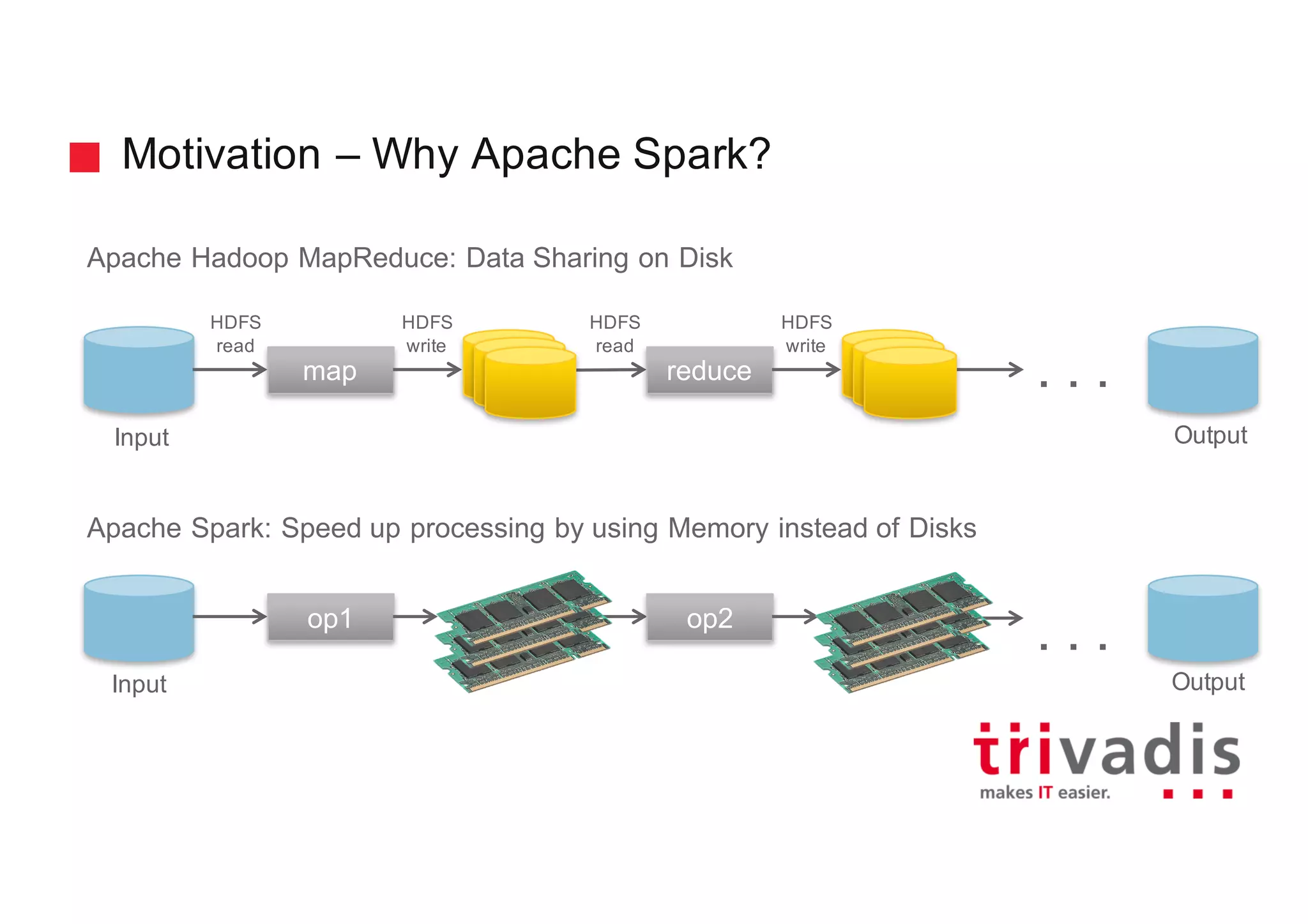

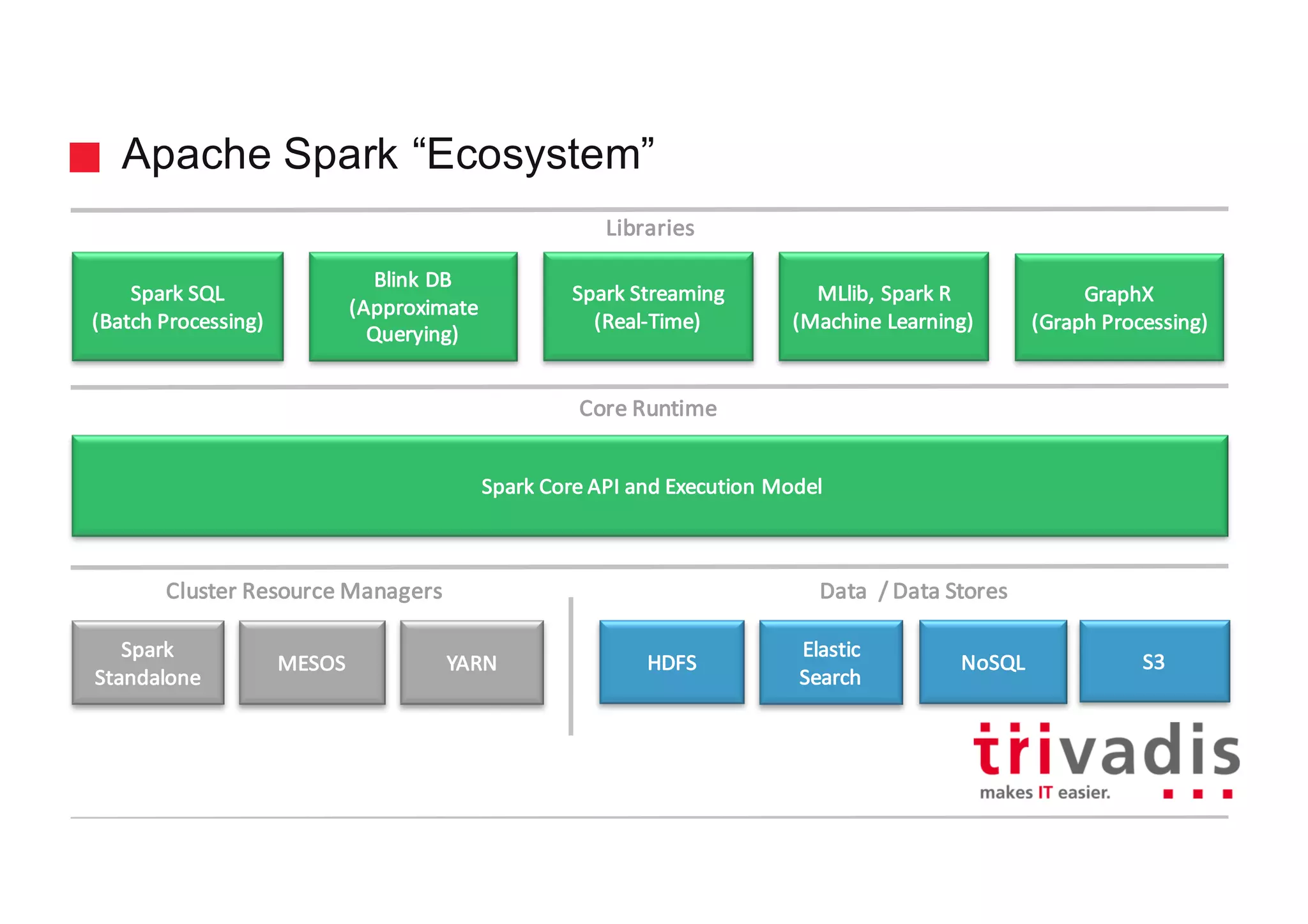

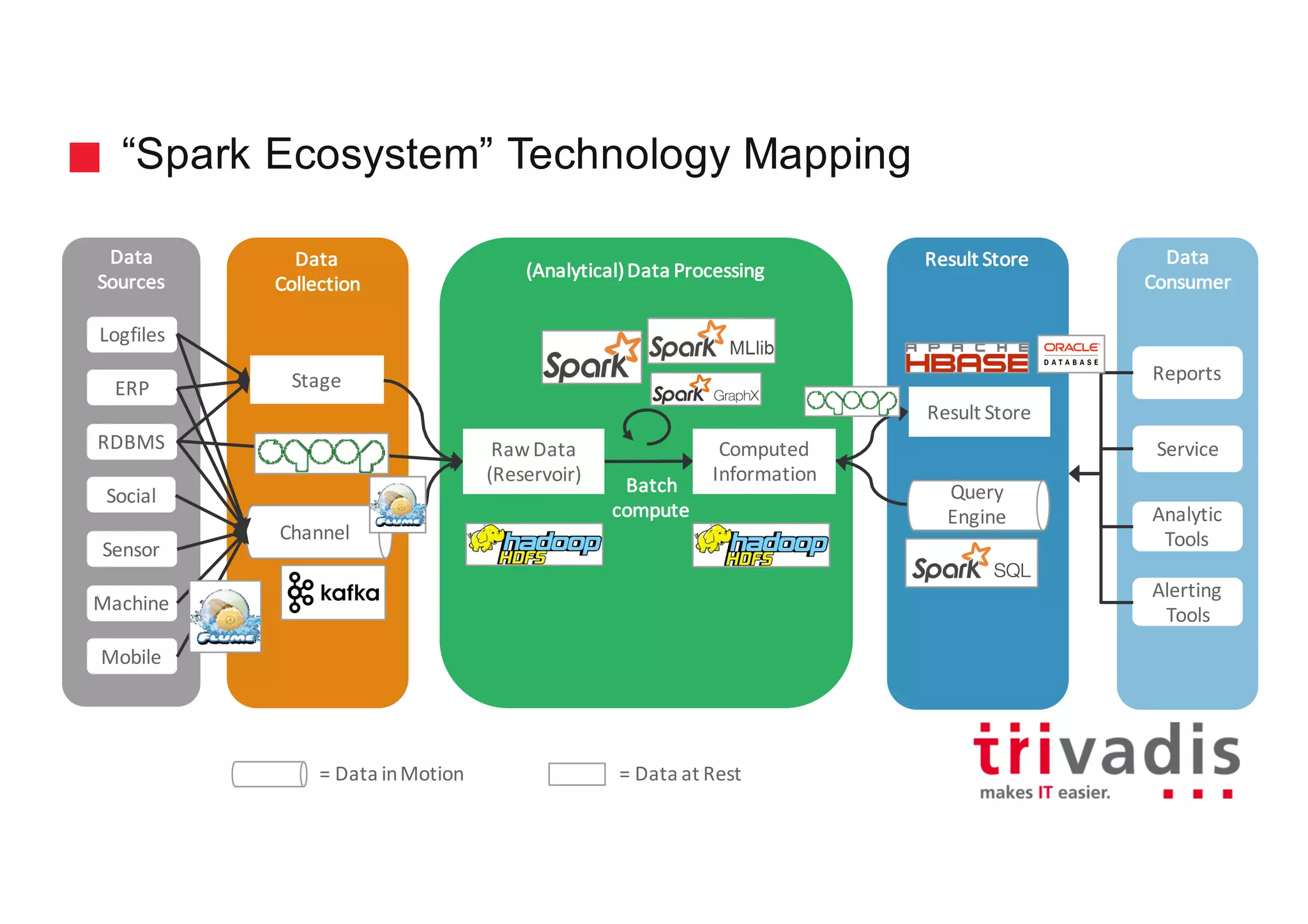

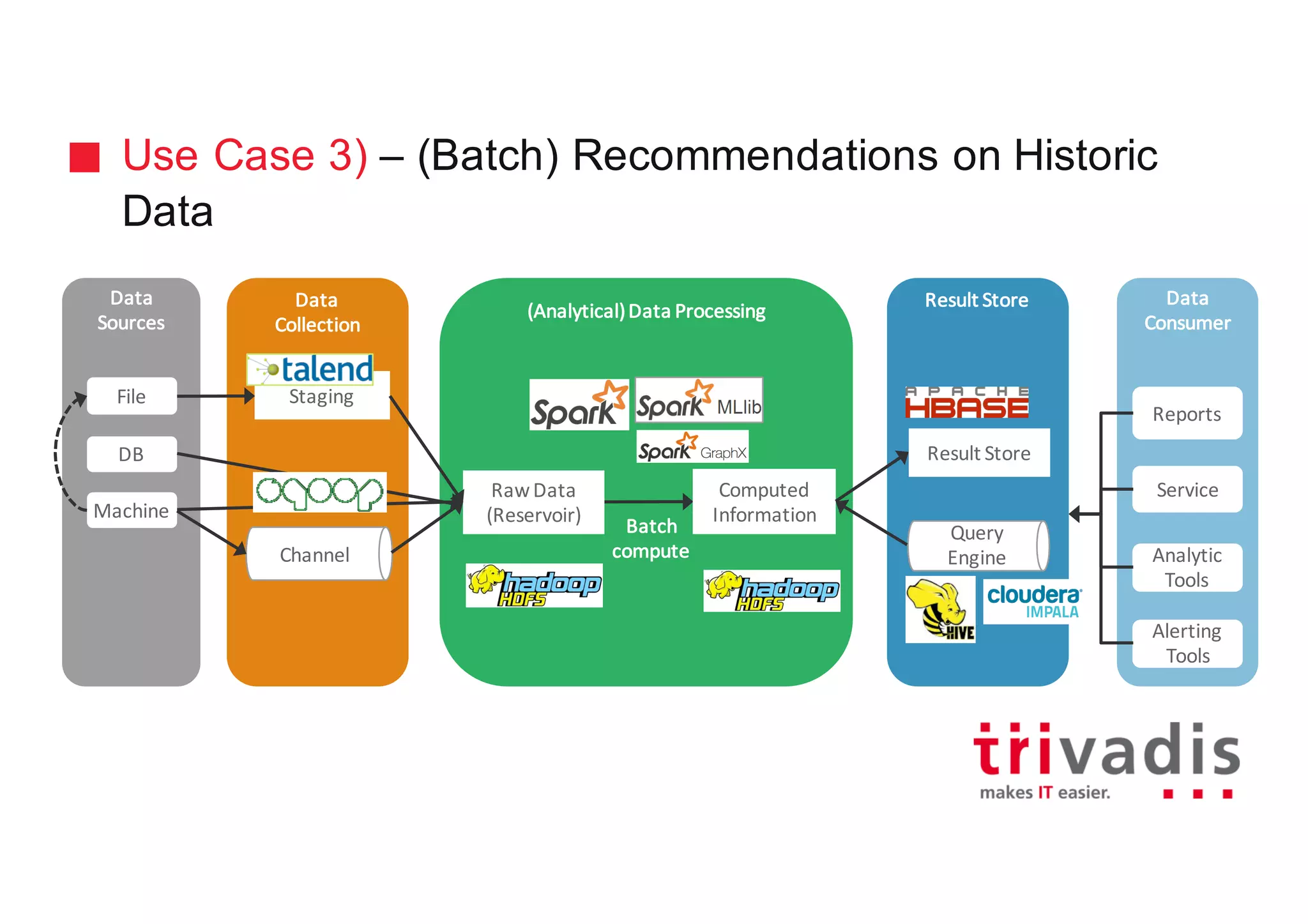

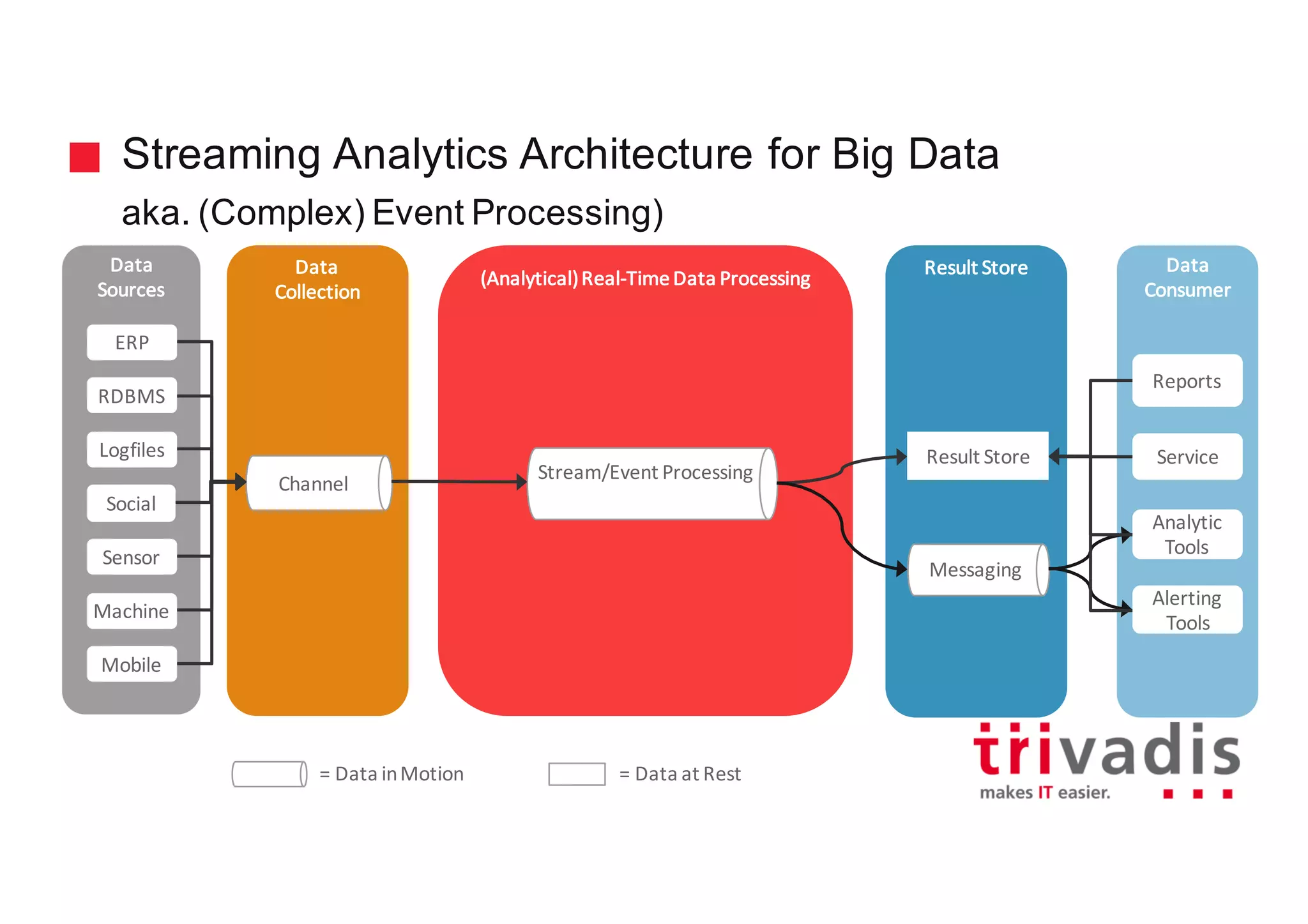

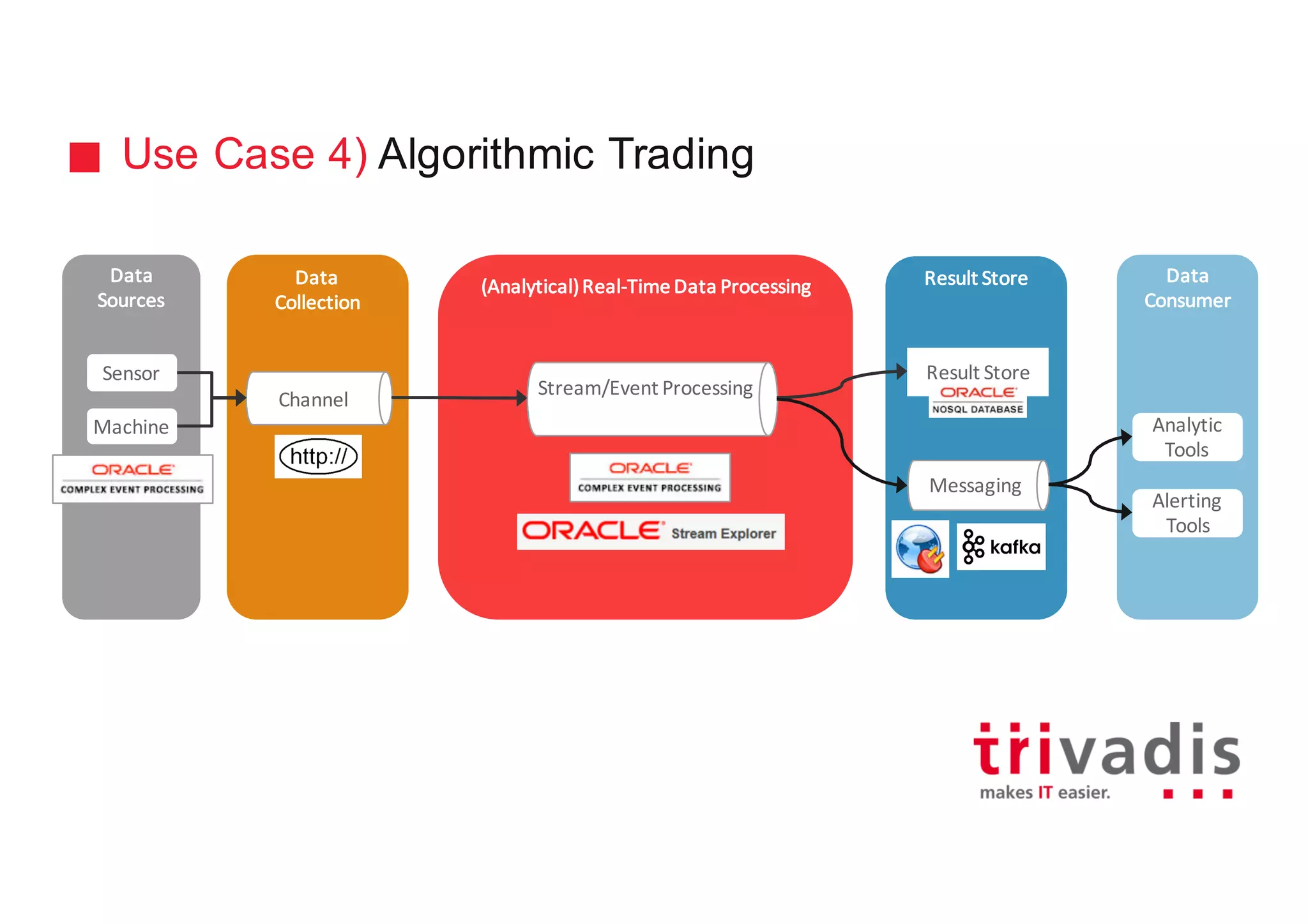

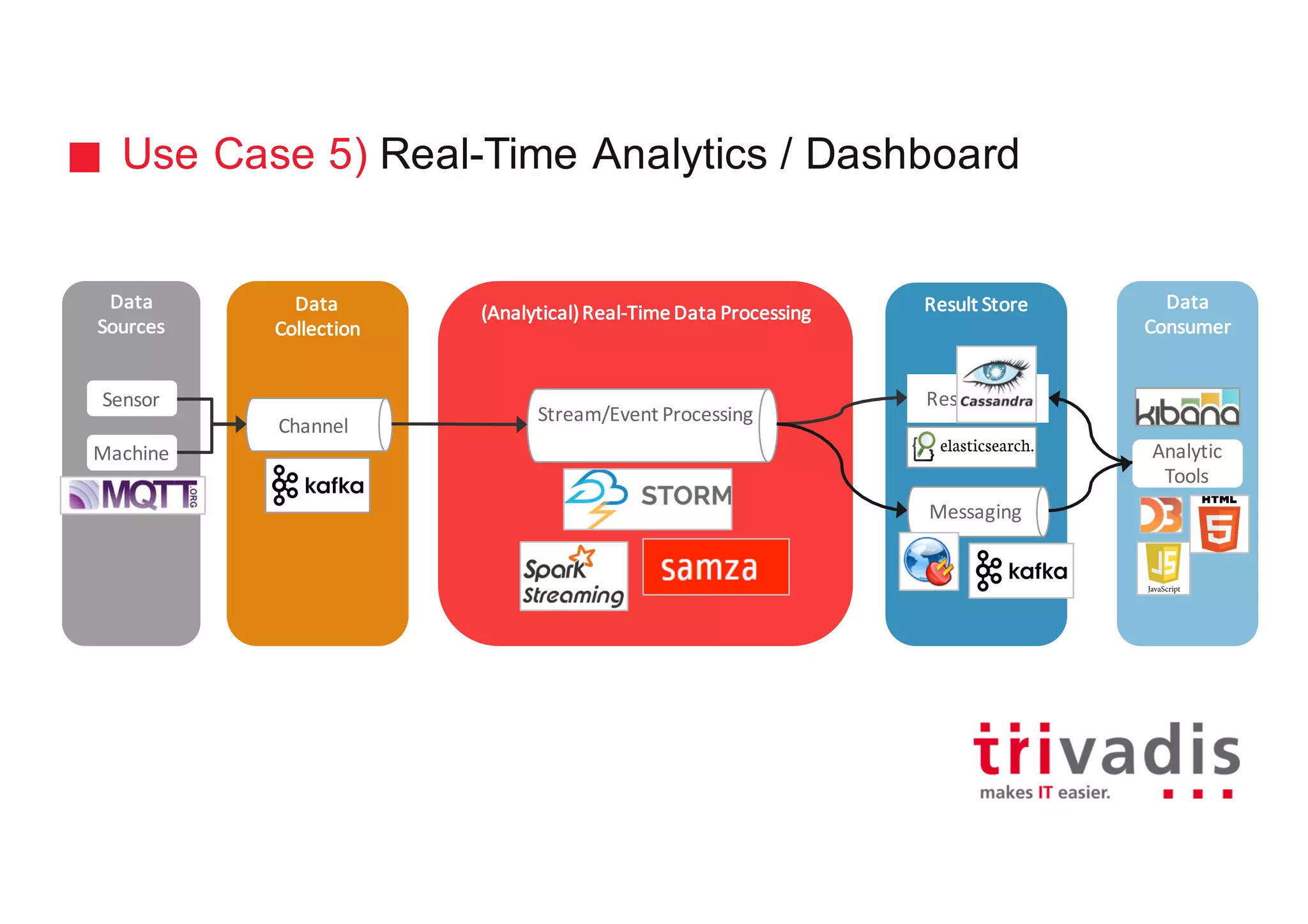

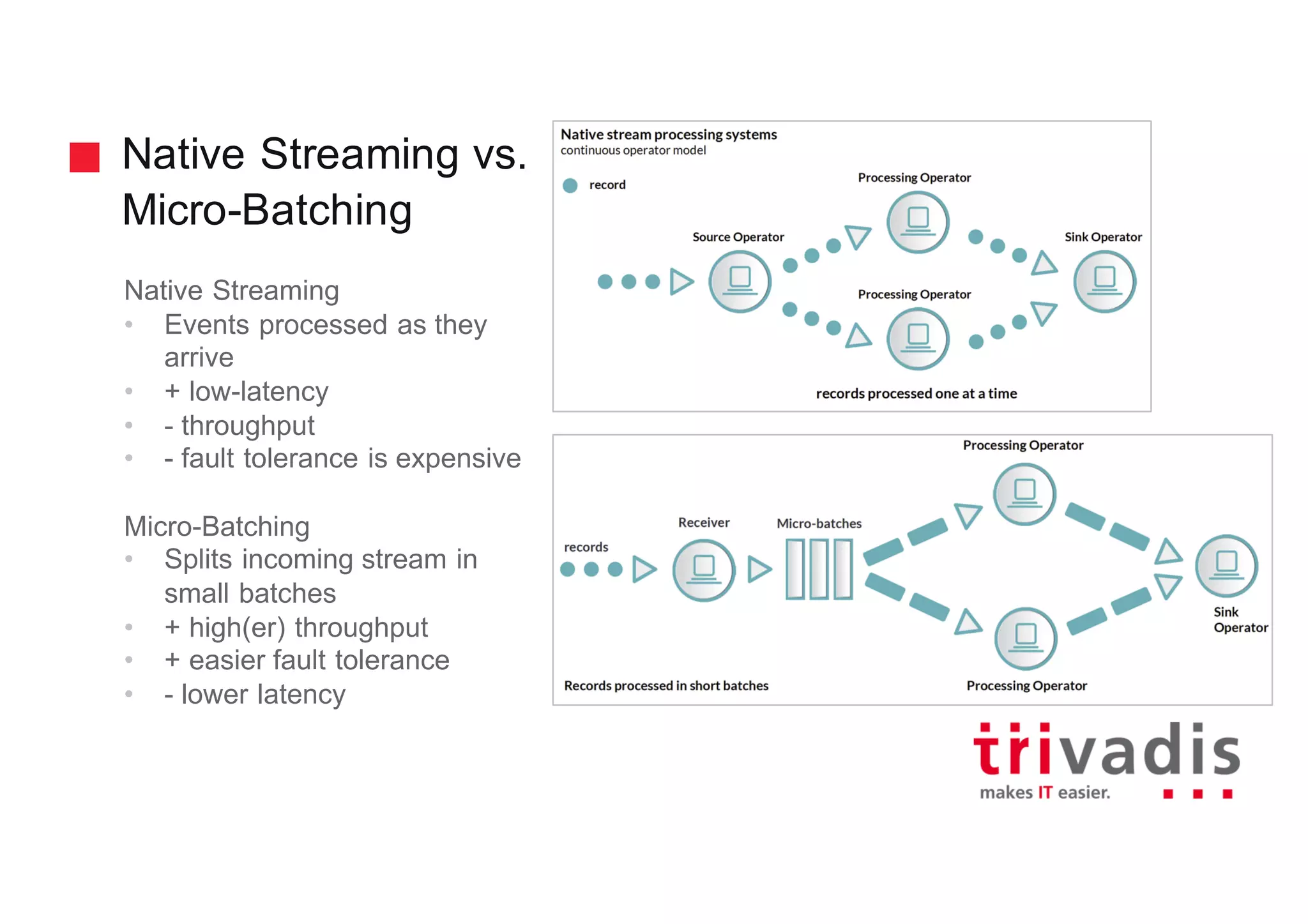

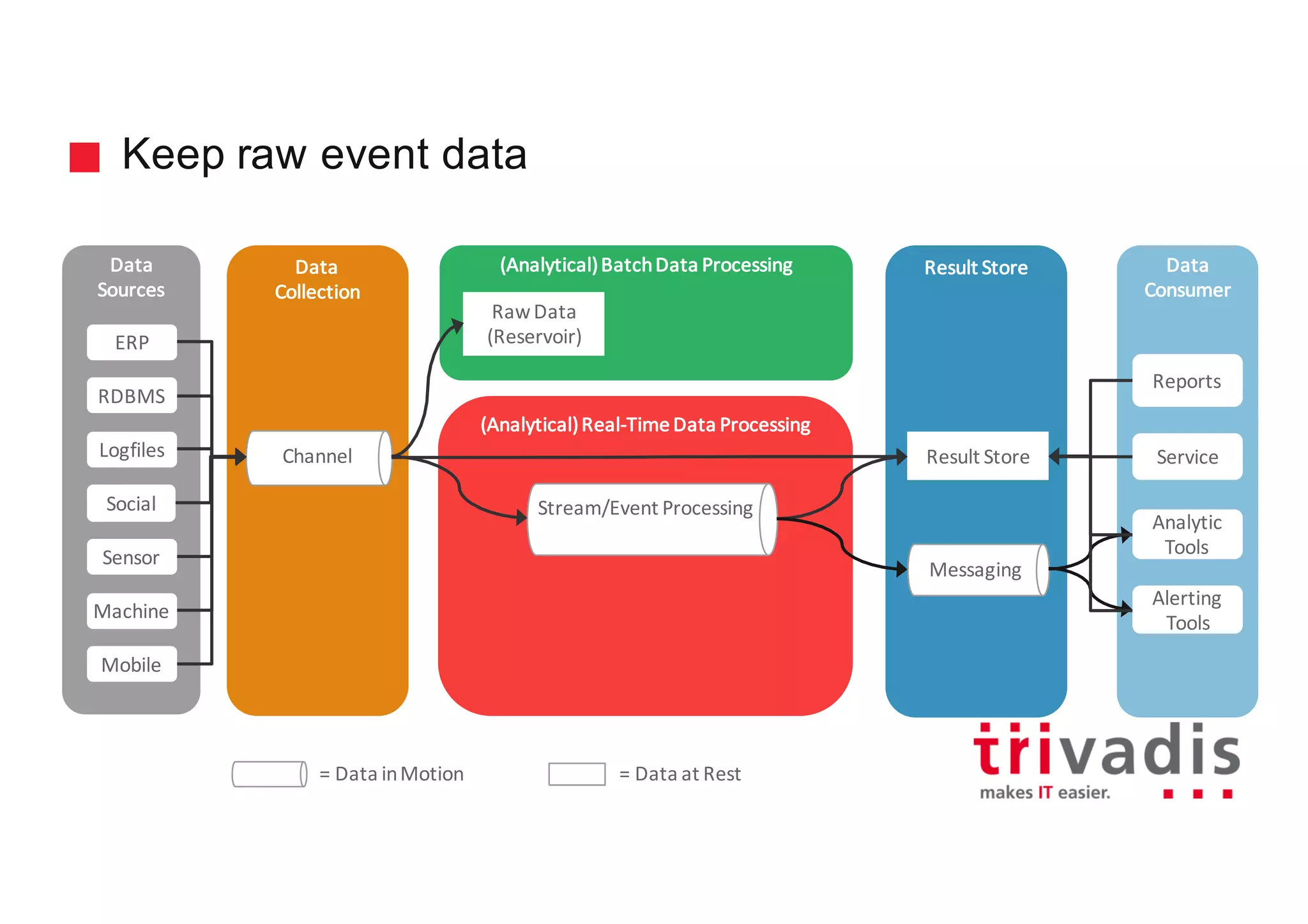

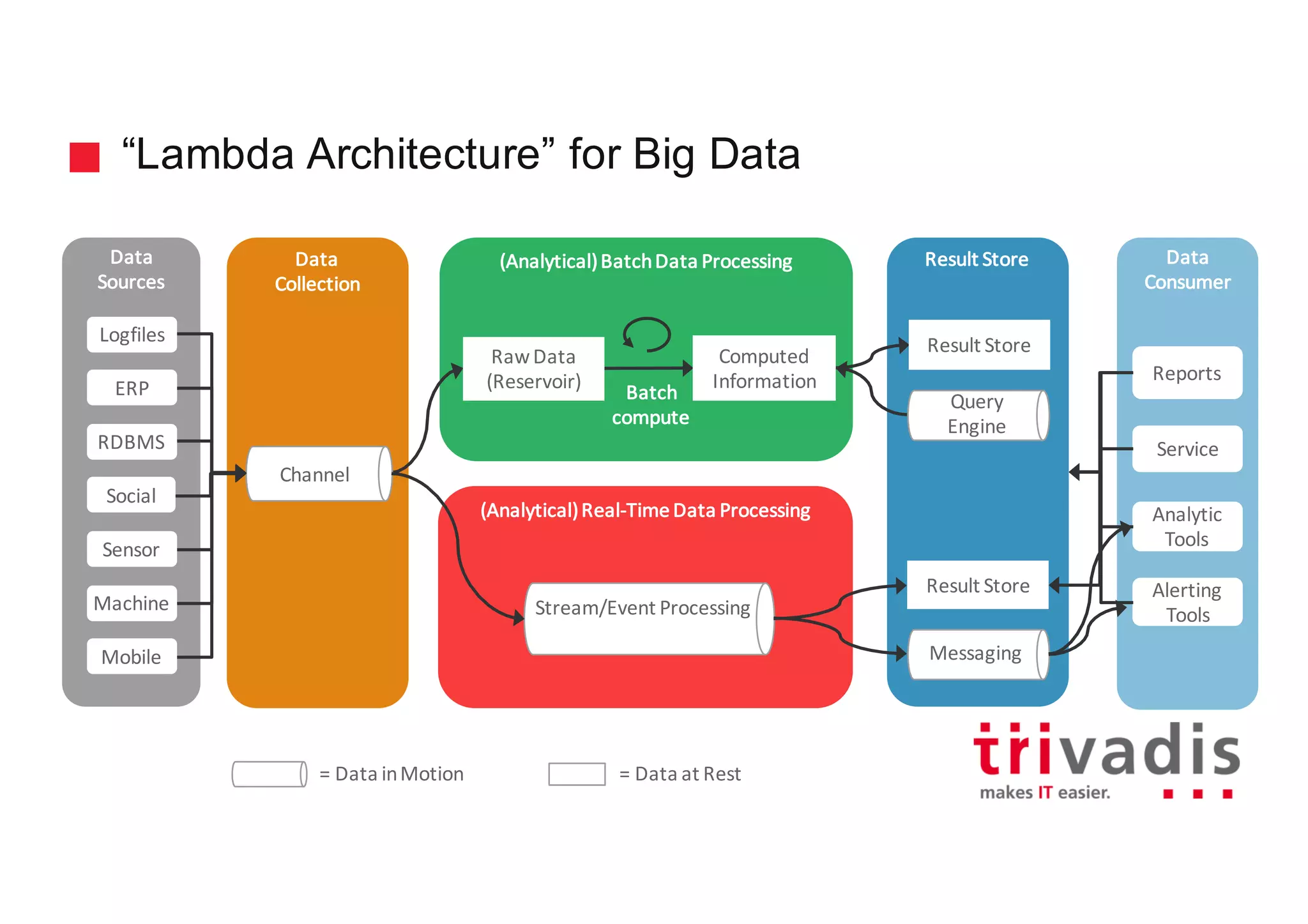

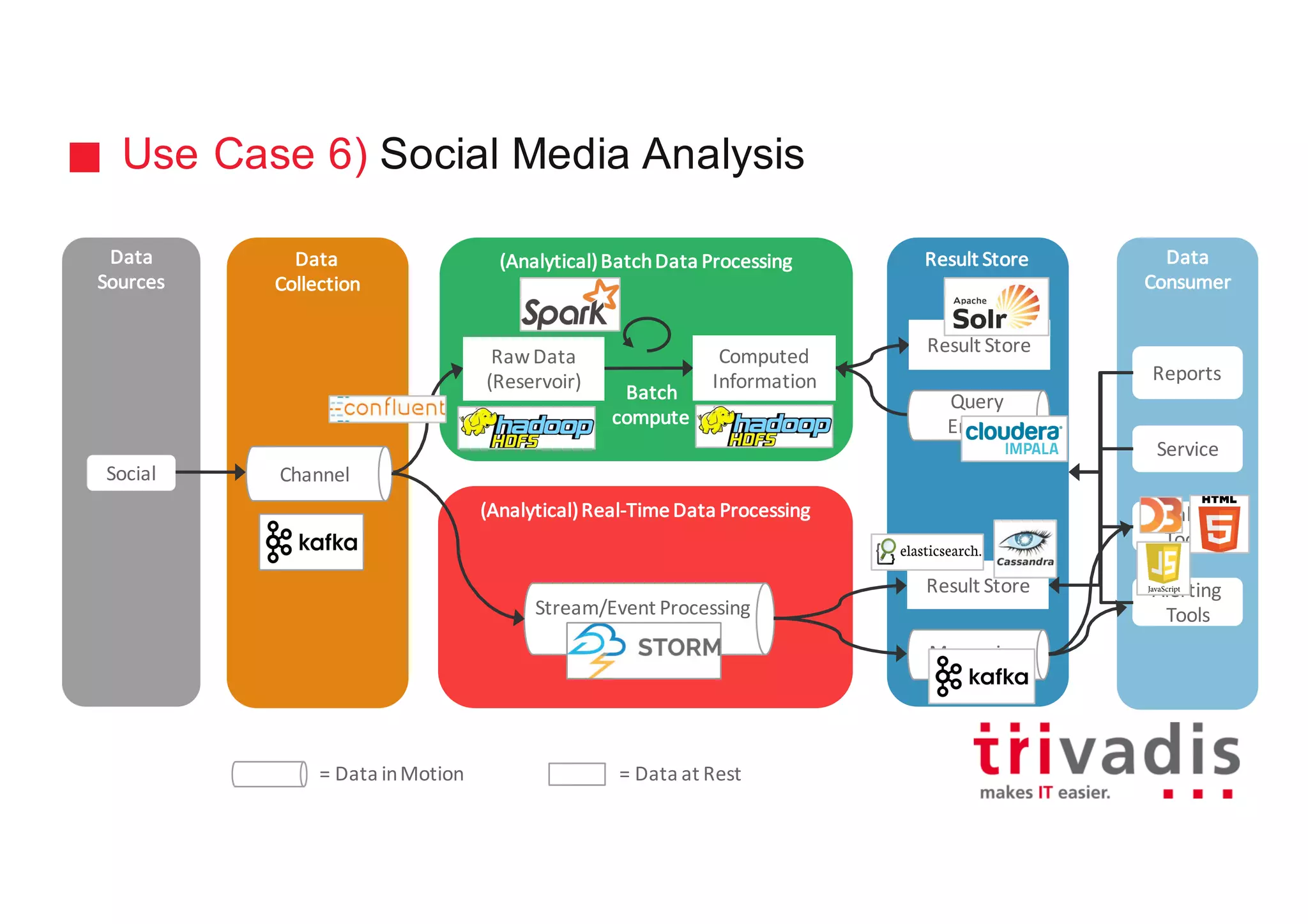

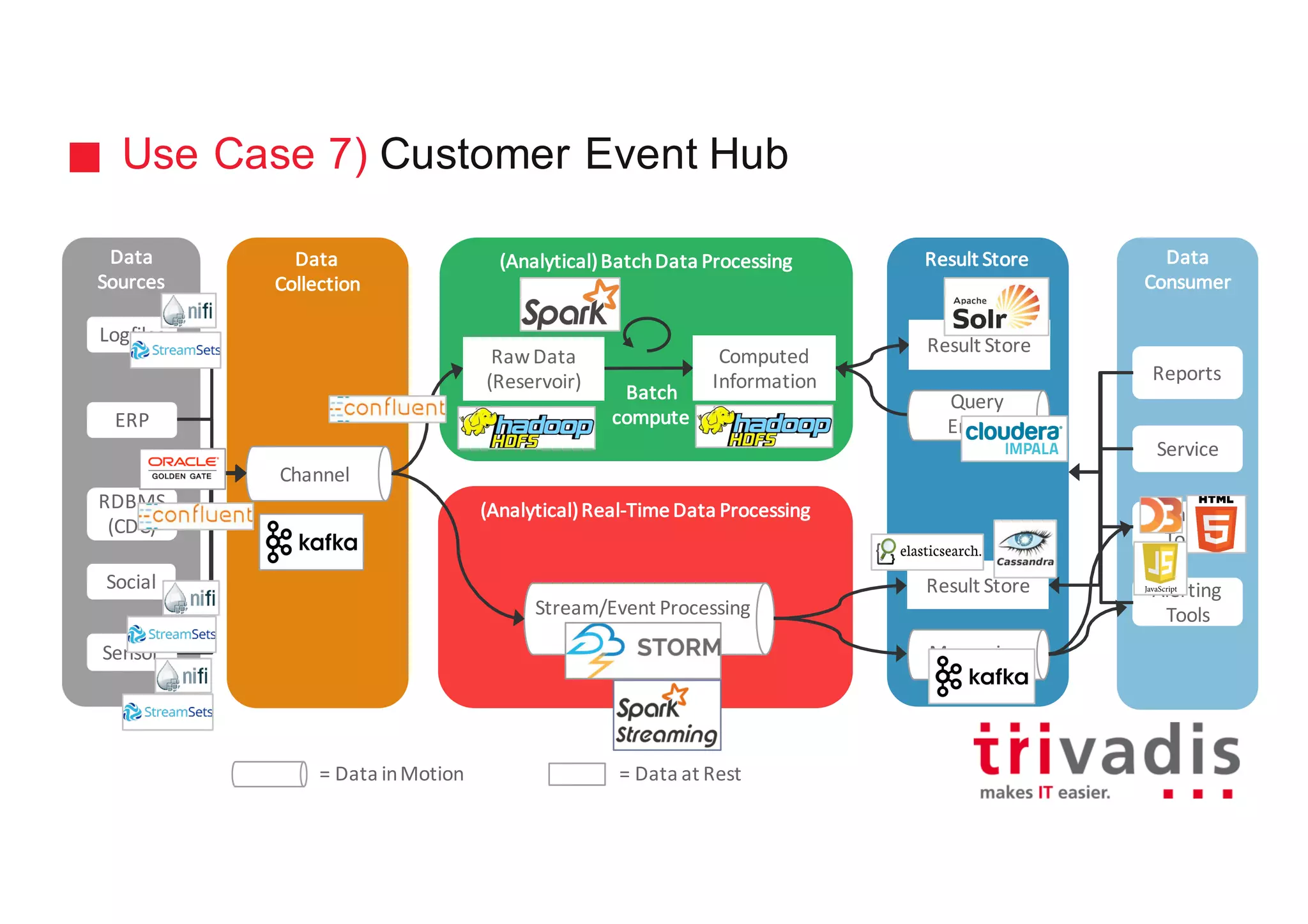

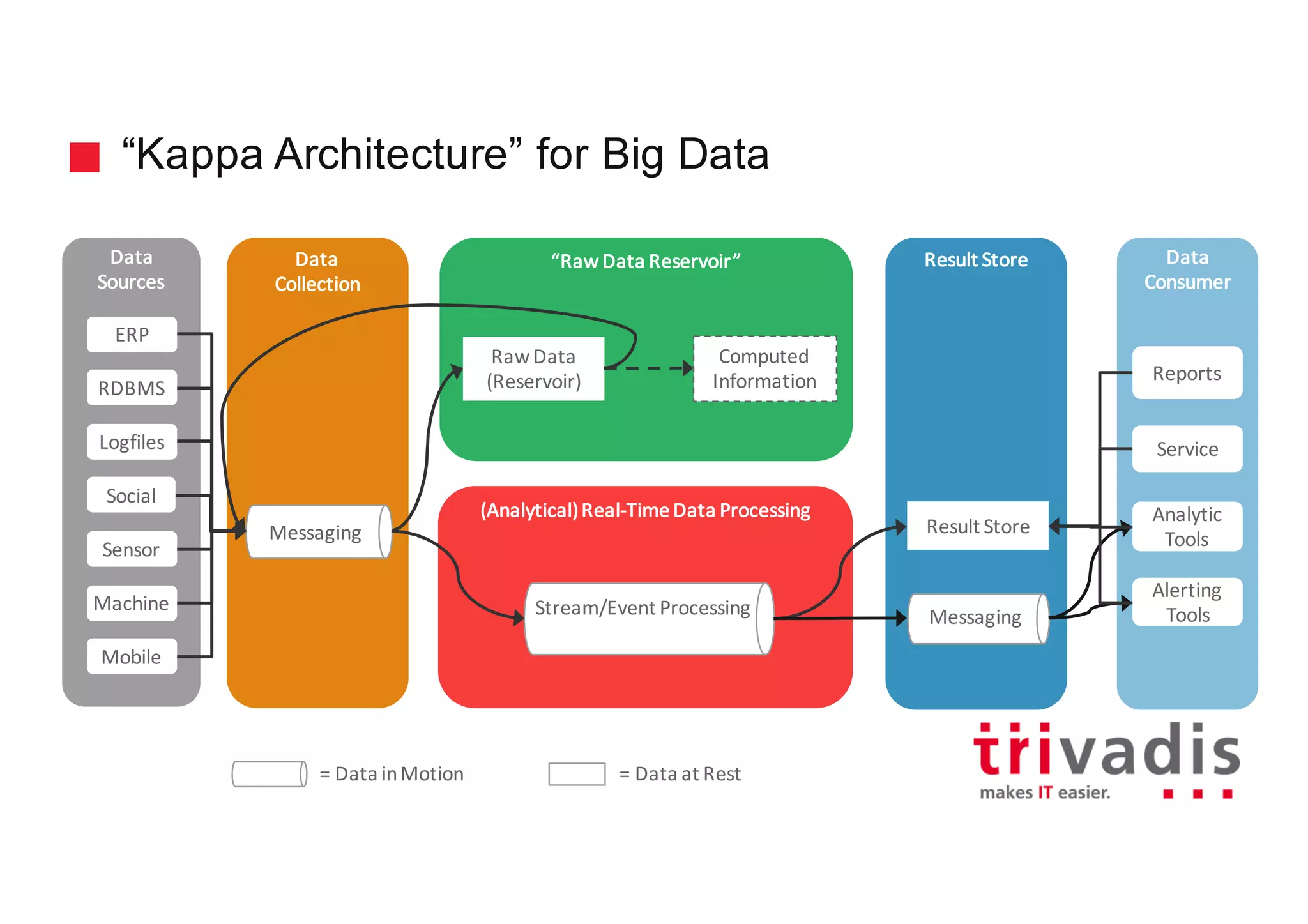

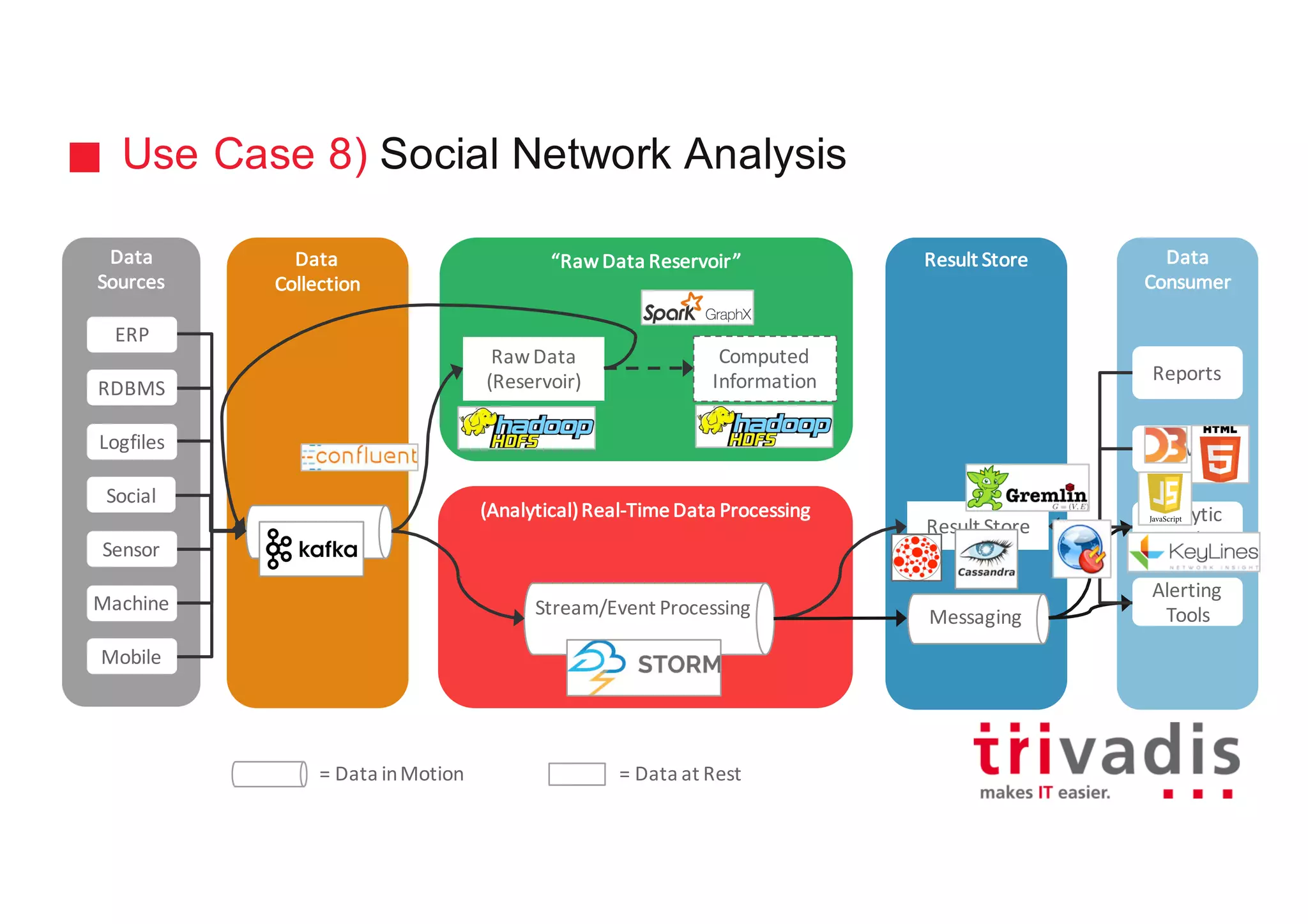

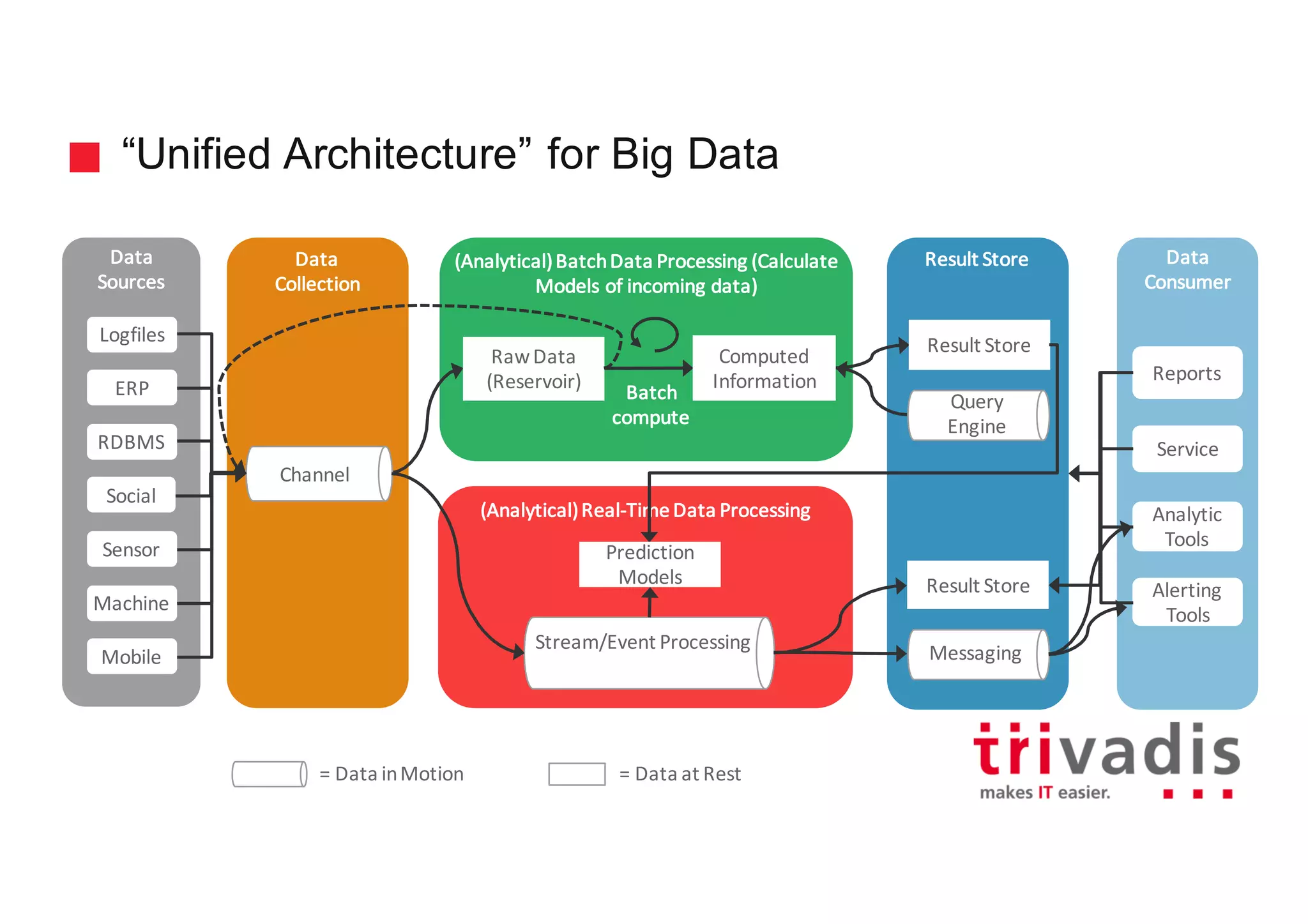

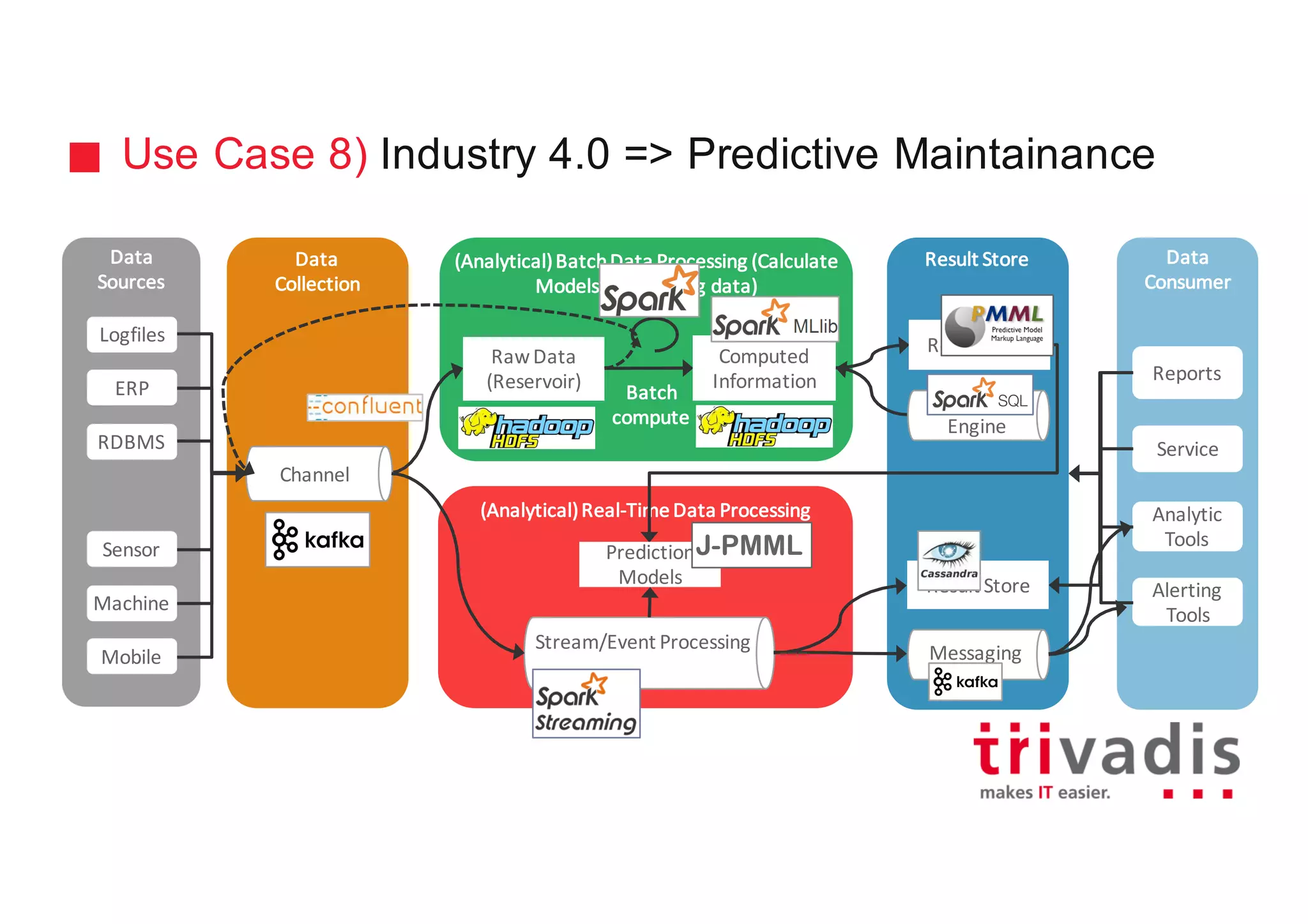

The document discusses several architectures for Big Data solutions, including traditional, streaming, lambda, kappa, and unified architectures. It emphasizes that the choice of architecture should follow from the specific use case, because Big Data is still an evolving field without standardized practices. Apache Spark is highlighted as a leading technology for improving processing performance, and the document describes how batch and real-time processing can be combined to improve data analytics outcomes.
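To make the idea of combining batch and real-time processing concrete, here is a minimal sketch of a lambda-style setup in plain Python. It is not taken from the slides: the event shape, the function and class names (`batch_view`, `SpeedView`, `serve`), and the counting workload are all assumptions chosen for illustration, and a real deployment would use an engine such as Apache Spark rather than in-memory counters.

```python
from collections import Counter


def batch_view(events):
    """Batch layer (hypothetical): recompute counts from the full historical dataset."""
    return Counter(event["key"] for event in events)


class SpeedView:
    """Speed layer (hypothetical): count events that arrived after the last batch run."""

    def __init__(self):
        self.counts = Counter()

    def update(self, event):
        # Incremental update, one event at a time.
        self.counts[event["key"]] += 1


def serve(batch, speed, key):
    """Serving layer (hypothetical): merge the batch view with the real-time view."""
    return batch.get(key, 0) + speed.counts.get(key, 0)


# Illustrative data only: events already covered by the last batch run...
historical = [{"key": "click"}, {"key": "view"}, {"key": "click"}]
# ...and events that arrived since then.
recent = [{"key": "click"}, {"key": "purchase"}]

batch = batch_view(historical)
speed = SpeedView()
for event in recent:
    speed.update(event)

click_total = serve(batch, speed, "click")
```

In this sketch a query reads from both views, so results reflect recent events without waiting for the next batch recomputation. A kappa-style design would instead drop the batch layer and rebuild state by replaying the event stream through the same incremental logic.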