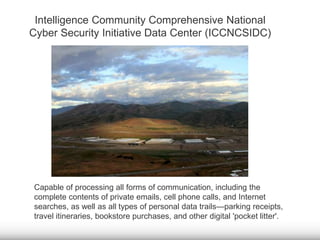

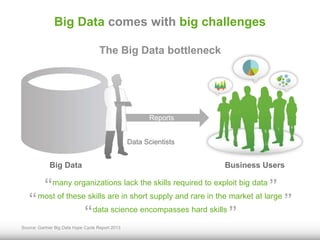

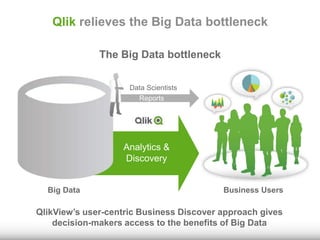

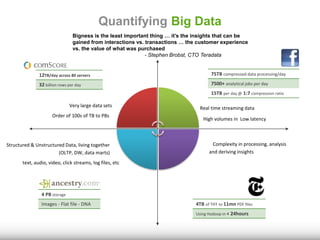

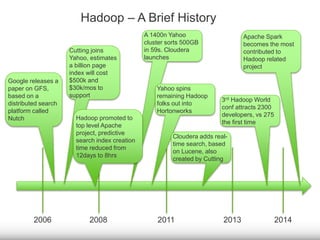

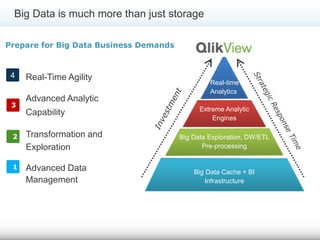

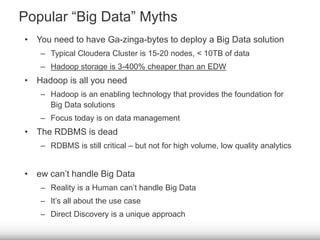

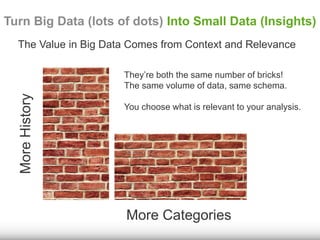

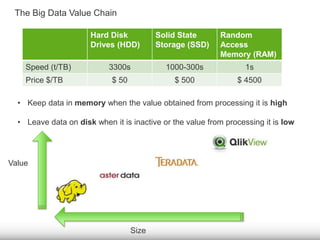

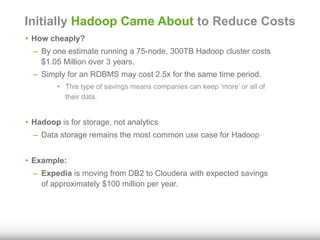

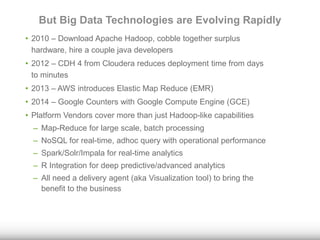

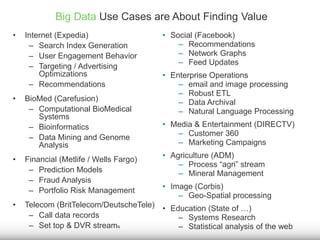

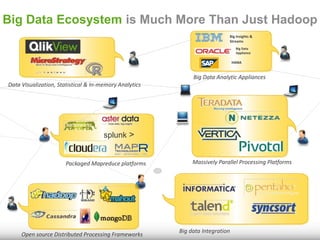

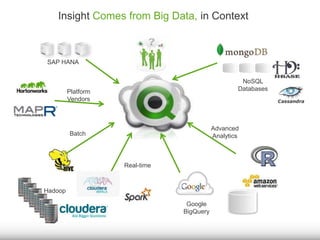

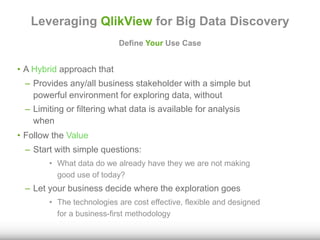

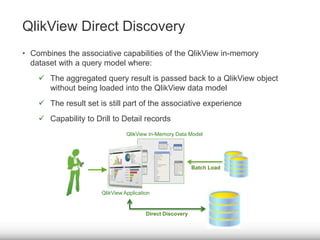

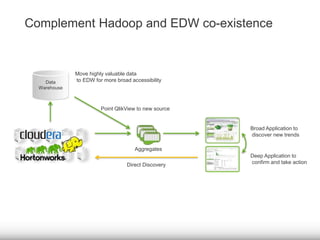

The document discusses the significance of big data for business users, emphasizing the challenges of harnessing it effectively and the solutions available. It outlines Qlik's approach to alleviating the big data bottleneck, which lets users draw on insights without extensive technical skills. It also highlights the evolution of big data technologies, potential use cases across various industries, and the importance of context and relevance in deriving value from data.