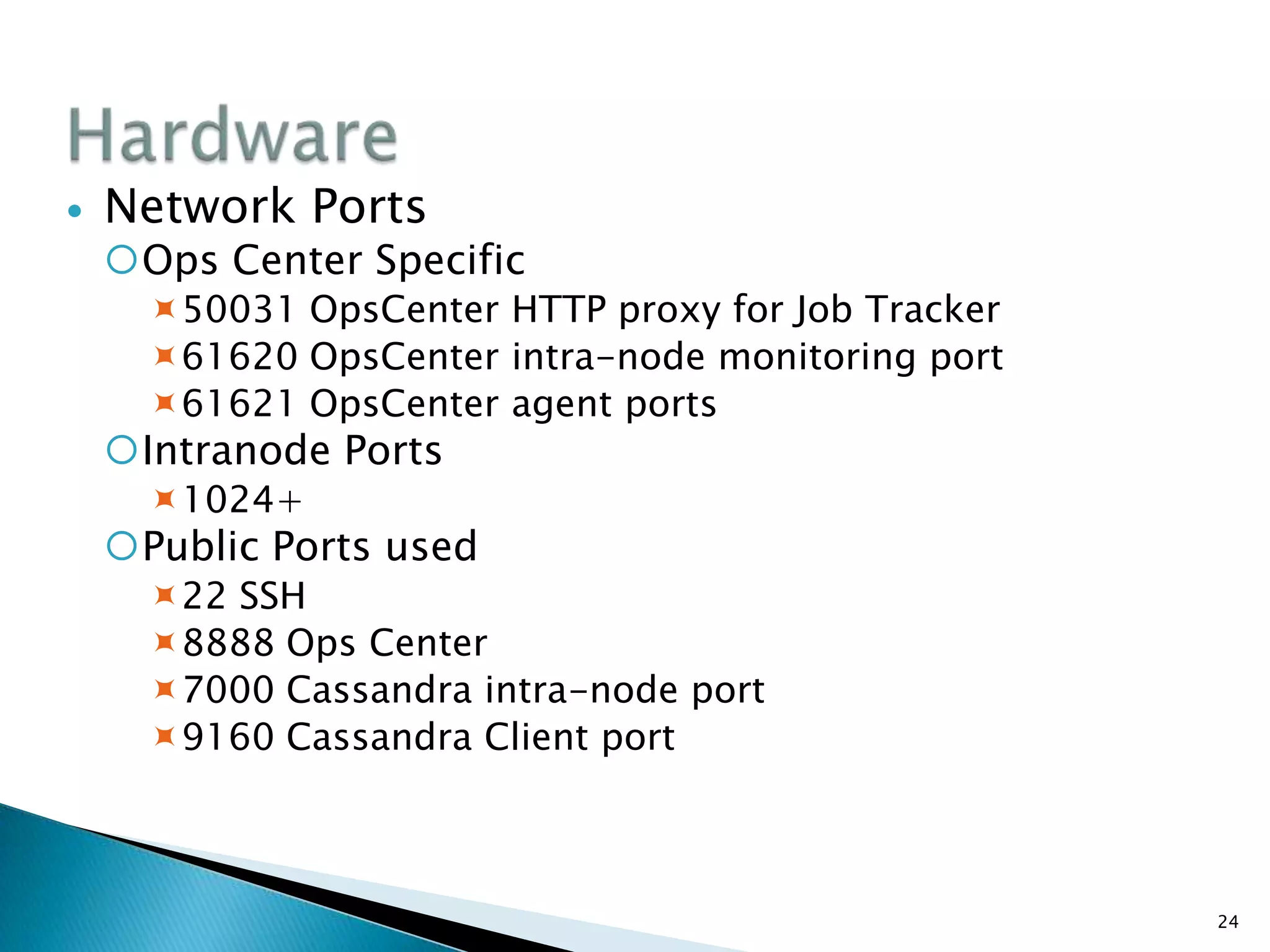

The document outlines Kalpesh Pradhan's expertise in big data technologies, specifically focusing on NoSQL solutions like Apache Cassandra for database migration and analytics. It highlights the challenges and advantages of big data in various fields, emphasizing its role in informed decision-making and competitive advantages for organizations. Additionally, it discusses the technical requirements for deploying Cassandra, including hardware needs, data storage, and system architecture.