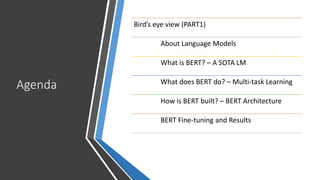

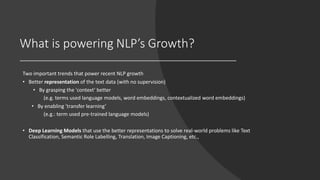

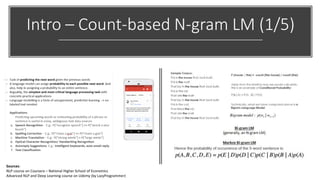

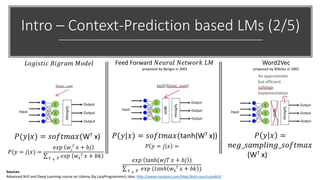

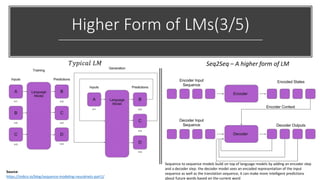

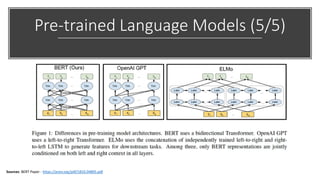

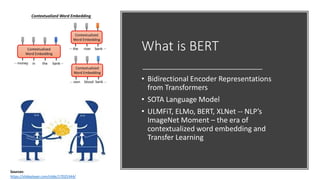

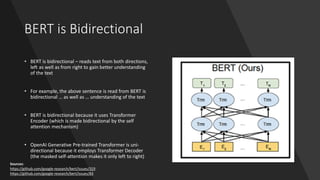

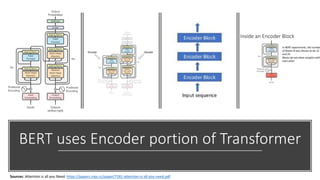

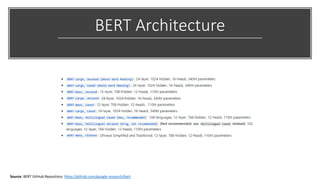

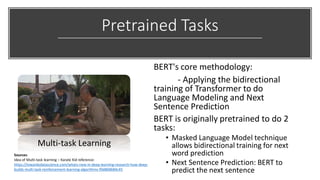

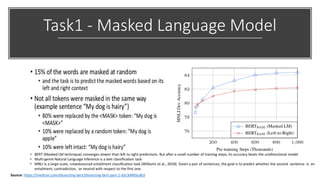

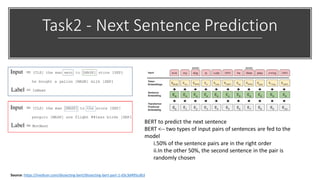

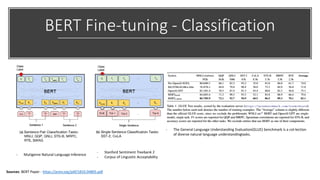

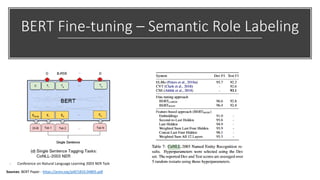

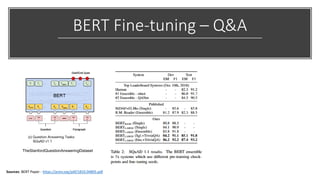

The document discusses BERT, a language model built on a bidirectional Transformer architecture and pretrained jointly on two tasks: masked language modeling and next sentence prediction. Key topics include the need for better text representations, the introduction of transfer learning to NLP, and how these two pretraining tasks let BERT improve on traditional language models. It also covers NLP applications of BERT and the foundational concepts behind its design.
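
To make the masked language modeling task concrete, here is a minimal sketch of the token-corruption step. It follows the scheme described in the original BERT paper (select roughly 15% of tokens; replace 80% of those with `[MASK]`, 10% with a random token, and leave 10% unchanged), which is an assumption about what the slides cover, since their content is not reproduced here. The function name `mask_tokens`, the whitespace tokenizer, and the toy vocabulary are hypothetical and chosen only for illustration.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Corrupt a token list for masked language modeling (illustrative only).

    Returns (masked, labels). labels[i] holds the original token at every
    position the model would be asked to predict, and None elsewhere.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            # This position is a prediction target.
            labels.append(tok)
            roll = rng.random()
            if roll < 0.8:
                masked.append(MASK)               # 80%: replace with [MASK]
            elif roll < 0.9:
                masked.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                masked.append(tok)                # 10%: keep the original token
        else:
            # Not selected: the token passes through and is not scored.
            labels.append(None)
            masked.append(tok)
    return masked, labels


# Toy example: whitespace tokenization stands in for BERT's WordPiece tokenizer.
tokens = "the cat sat on the mat".split()
vocab = sorted(set(tokens))
masked, labels = mask_tokens(tokens, vocab, mask_prob=0.15, seed=0)
print(masked)
print(labels)
```

Because the model sees context on both sides of each corrupted position, it can use left and right neighbors to recover the original token, which is the sense in which the pretraining is bidirectional.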