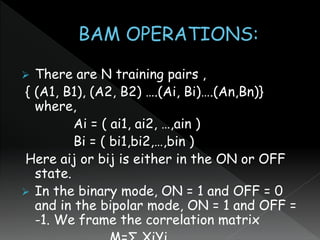

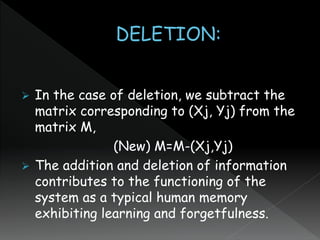

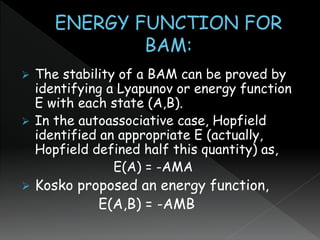

- Autocorrelators were introduced as a theoretical concept by Donald Hebb in 1949 and were further analyzed by Amari in the 1970s. They store bipolar patterns by summing their outer products to create a connection matrix.

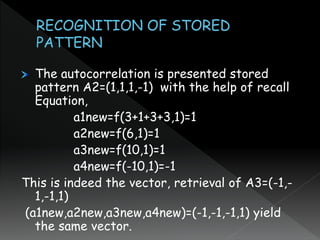

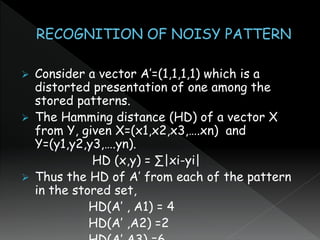

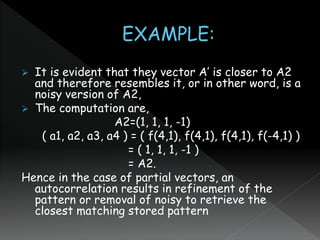

- The autocorrelator's recall equation is a vector-matrix multiplication of the input pattern by the connection matrix. For example, a distorted input pattern A' is mapped back to the stored pattern A2, so the computation removes the noise.
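The recall step can be sketched in Python with NumPy. This is a minimal illustration, not the slides' own code: the connection matrix is the one given in this document for the example patterns, while the distorted input `a_distorted = (1, 1, 1, 1)`, the function name `recall`, and the threshold rule (sign of the net input, keeping the old component on a tie) are assumptions made for the example.

```python
import numpy as np

# Connection matrix for the example patterns A1, A2, A3 in this document.
T = np.array([
    [ 3,  1,  3, -3],
    [ 1,  3,  1, -1],
    [ 3,  1,  3, -3],
    [-3, -1, -3,  3],
])

def recall(T, a):
    """One recall step: multiply the input by T, then threshold to bipolar values.

    Assumed threshold rule: +1 for a positive net input, -1 for a negative one,
    and the old component is kept when the net input is exactly zero.
    """
    a = np.asarray(a)
    net = a @ T  # vector-matrix multiplication
    return np.where(net > 0, 1, np.where(net < 0, -1, a))

# Hypothetical distorted input: A2 = (1, 1, 1, -1) with its last component flipped.
a_distorted = np.array([1, 1, 1, 1])
recalled = recall(T, a_distorted)
print(recalled)
```

Here the net input is (4, 4, 4, -4), so thresholding yields (1, 1, 1, -1), which is the stored pattern A2.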

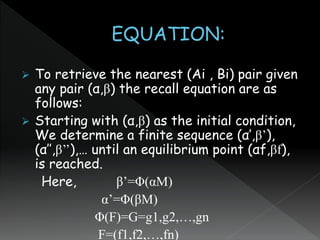

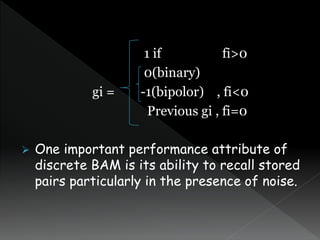

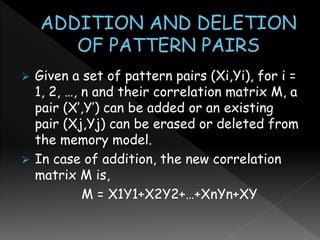

- Bidirectional associative memories (BAMs) are two-layer neural networks based on earlier autocorrelator models. They store pairs of patterns in a correlation matrix and recall them by iteratively updating the input-output pair until equilibrium is reached.
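The store-and-recall loop of a BAM can be sketched as follows. This is a hedged illustration under stated assumptions: the function names (`threshold`, `bam_train`, `bam_recall`), the two pattern pairs, the iteration cap, and the tie rule (keep the previous component when the net input is zero) are all hypothetical choices for the example and are not taken from the slides.

```python
import numpy as np

def threshold(net, previous):
    """Bipolar threshold: +1 if net > 0, -1 if net < 0, previous value on a tie."""
    return np.where(net > 0, 1, np.where(net < 0, -1, previous))

def bam_train(xs, ys):
    """Correlation matrix M = sum_i X_i^T Y_i for bipolar row vectors."""
    return sum(np.outer(x, y) for x, y in zip(xs, ys))

def bam_recall(m, x, max_iters=20):
    """Alternate backward and forward passes until the pair stops changing."""
    x = np.asarray(x)
    # Initial forward pass; ties fall back to +1 (assumed starting state).
    y = threshold(x @ m, np.ones(m.shape[1], dtype=int))
    for _ in range(max_iters):
        x_new = threshold(y @ m.T, x)   # backward pass: Y layer to X layer
        y_new = threshold(x_new @ m, y) # forward pass: X layer to Y layer
        if np.array_equal(x_new, x) and np.array_equal(y_new, y):
            break                       # equilibrium reached
        x, y = x_new, y_new
    return x, y

# Hypothetical pattern pairs (X_i has 6 components, Y_i has 4).
xs = [np.array([1, -1, 1, -1, 1, -1]), np.array([1, 1, 1, -1, -1, -1])]
ys = [np.array([1, 1, -1, -1]), np.array([1, -1, 1, -1])]
M = bam_train(xs, ys)

# X_1 with its last component flipped.
noisy_x = np.array([1, -1, 1, -1, 1, 1])
x_rec, y_rec = bam_recall(M, noisy_x)
print(x_rec, y_rec)
```

For this small example the noisy input settles on the first stored pair: the recalled X equals X_1 and the recalled Y equals Y_1.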

![AUTO-HETRO-CORRELATOR, slide 3](https://image.slidesharecdn.com/sc-191003142116/85/AUTO-HETRO-CORRELATOR-3-320.jpg)

A first-order autocorrelator stores $M$ bipolar patterns $A_1, A_2, \ldots, A_M$ by summing together $M$ outer products:

$$T = \sum_{i=1}^{M} A_i^{T} A_i$$

Here, $T = [t_{ij}]$ is a $(p \times p)$ connection matrix and $A_i \in \{-1, 1\}^p$ is a row vector.

The autocorrelator's recall equation is a vector-matrix multiplication.

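![AUTO-HETRO-CORRELATOR, slide 4](https://image.slidesharecdn.com/sc-191003142116/85/AUTO-HETRO-CORRELATOR-4-320.jpg)

Consider the following patterns:

$$A_1 = (-1, 1, -1, 1), \quad A_2 = (1, 1, 1, -1), \quad A_3 = (-1, -1, -1, 1)$$

The connection matrix is:

$$T = \sum_{i=1}^{3} A_i^{T} A_i = \begin{bmatrix} 3 & 1 & 3 & -3 \\ 1 & 3 & 1 & -1 \\ 3 & 1 & 3 & -3 \\ -3 & -1 & -3 & 3 \end{bmatrix}$$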
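The connection matrix above can be checked numerically. The following is a minimal sketch using NumPy; the variable names are illustrative, and the patterns are treated as row vectors so that each outer product is $A_i^{T} A_i$.

```python
import numpy as np

# The three bipolar patterns from the example, one per row.
patterns = np.array([
    [-1,  1, -1,  1],  # A1
    [ 1,  1,  1, -1],  # A2
    [-1, -1, -1,  1],  # A3
])

# Sum of outer products, written out explicitly.
T = sum(np.outer(a, a) for a in patterns)

# With the patterns stacked as rows, the same sum is a single matrix product.
assert np.array_equal(T, patterns.T @ patterns)

print(T)
```

The result is symmetric, and each diagonal entry equals the number of stored patterns (3), because every component squares to 1.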